Guoru Ding

Corresponding author

Spectrum Prediction With Deep 3D Pyramid Vision Transformer Learning

Aug 15, 2024

Abstract:In this paper, we propose a deep learning (DL)-based task-driven spectrum prediction framework, named DeepSPred. DeepSPred comprises a feature encoder and a task predictor, where the encoder extracts spectrum usage pattern features, and the predictor configures different networks according to the task requirements to predict the future spectrum. Based on DeepSPred, we first propose a novel 3D spectrum prediction method, named 3D-SwinSTB, which combines a flow processing strategy with a 3D vision Transformer (ViT, i.e., Swin) and a pyramid to serve possible applications such as spectrum monitoring tasks. The unique 3D Patch Merging ViT-to-3D ViT Patch Expanding and pyramid designs of 3D-SwinSTB help the model accurately learn the potential correlation of the evolution of the spectrogram over time. Then, we propose a novel spectrum occupancy rate (SOR) method, named 3D-SwinLinear, by redesigning a predictor consisting exclusively of 3D convolutional and linear layers to serve possible applications such as dynamic spectrum access (DSA) tasks. Unlike 3D-SwinSTB, which outputs a spectrogram, 3D-SwinLinear projects the spectrogram directly to the SOR. Finally, we employ transfer learning (TL) to ensure the applicability of our two methods to diverse spectrum services. The results show that our 3D-SwinSTB outperforms recent benchmarks by more than 5%, while our 3D-SwinLinear achieves 90% accuracy, with a performance improvement exceeding 10%.
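The abstract does not define how the SOR is computed, so the following is only a minimal sketch under a common assumption: a channel counts as occupied in a time slot when its measured power exceeds an energy-detection threshold, and the SOR of a channel is the fraction of occupied slots. The function name `spectrum_occupancy_rate`, the threshold value, and the toy spectrogram are all hypothetical and are not taken from the paper.

```python
import numpy as np

def spectrum_occupancy_rate(spectrogram_db, threshold_db):
    """Per-channel fraction of time slots whose power exceeds a threshold.

    spectrogram_db: 2D array of shape (time_slots, channels), power in dB.
    threshold_db:   assumed energy-detection threshold (not from the paper).
    """
    spectrogram_db = np.asarray(spectrogram_db, dtype=float)
    occupied = spectrogram_db > threshold_db   # boolean occupancy map
    return occupied.mean(axis=0)               # average over the time axis

# Toy spectrogram: 4 time slots x 3 channels (made-up values).
toy_spec = np.array([
    [-100.0, -60.0, -95.0],
    [-98.0, -62.0, -70.0],
    [-101.0, -61.0, -97.0],
    [-99.0, -59.0, -72.0],
])
sor = spectrum_occupancy_rate(toy_spec, threshold_db=-80.0)
print(sor)  # per-channel SOR: 0.0, 1.0 and 0.5
```

In this toy case the first channel is always idle, the second always busy, and the third busy in half of the slots. A learned predictor such as the one described above would estimate these rates for future time windows rather than compute them from observed data.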

Multi-Agent Probabilistic Ensembles with Trajectory Sampling for Connected Autonomous Vehicles

Dec 21, 2023

Abstract:Autonomous Vehicles (AVs) have attracted significant attention in recent years, and Reinforcement Learning (RL) has shown remarkable performance in improving the autonomy of vehicles. In that regard, the widely adopted Model-Free RL (MFRL) promises to solve decision-making tasks in connected AVs (CAVs), contingent on the availability of a significant amount of data samples for training. Nevertheless, this might be infeasible in practice and possibly lead to learning instability. In contrast, Model-Based RL (MBRL) offers sample-efficient learning, but the asymptotic performance of MBRL might lag behind the state-of-the-art MFRL algorithms. Furthermore, most studies for CAVs are limited to the decision-making of a single AV only, thus undermining the performance due to the absence of communications. In this study, we try to address the decision-making problem of multiple CAVs with limited communications and propose a decentralized Multi-Agent Probabilistic Ensembles with Trajectory Sampling algorithm, MA-PETS. In particular, in order to better capture the uncertainty of the unknown environment, MA-PETS leverages Probabilistic Ensemble (PE) neural networks to learn from communicated samples among neighboring CAVs. Afterwards, MA-PETS develops Trajectory Sampling (TS)-based model-predictive control for decision-making. On this basis, we derive the multi-agent group regret bound affected by the number of agents within the communication range and mathematically validate that incorporating effective information exchange among agents into the multi-agent learning scheme contributes to reducing the group regret bound in the worst case. Finally, we empirically demonstrate the superiority of MA-PETS in terms of sample efficiency compared with MFRL.

AoI-based Temporal Attention Graph Neural Network for Popularity Prediction and Content Caching

Aug 18, 2022

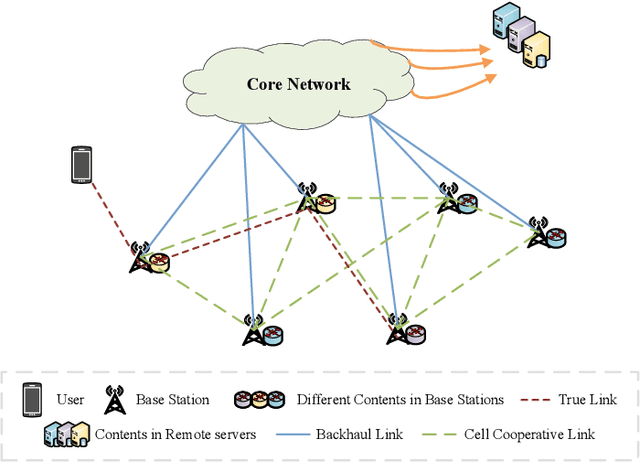

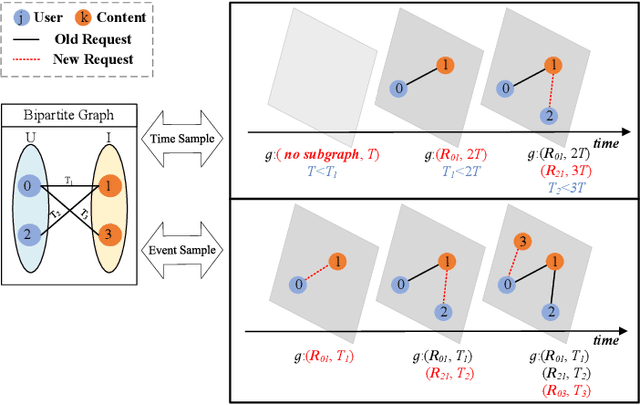

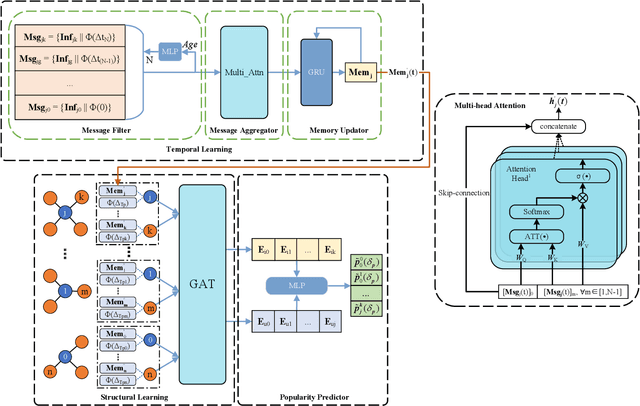

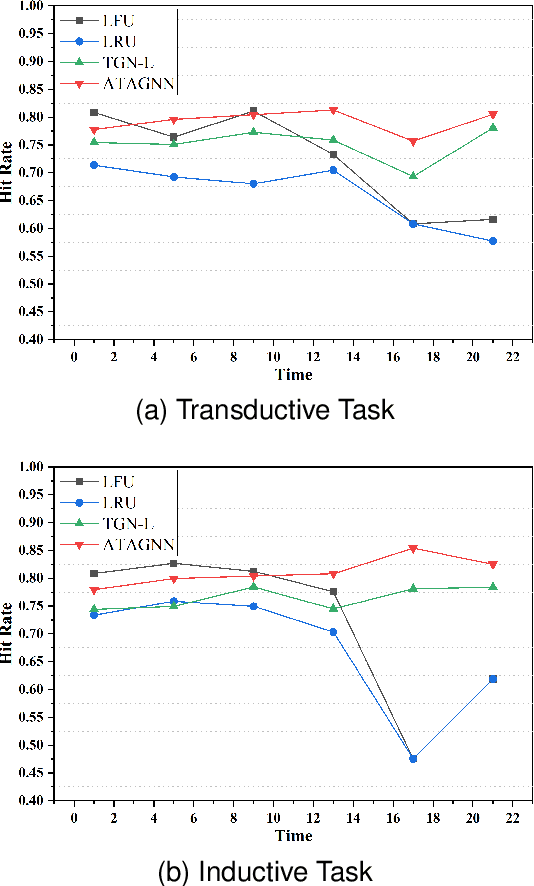

Abstract:Along with the fast development of network technology and the rapid growth of network equipment, the data throughput is sharply increasing. To handle the backhaul bottleneck in cellular networks and satisfy users' latency requirements, network architectures such as the information-centric network (ICN) aim to proactively keep a limited set of popular content at the edge of the network based on predicted results. Meanwhile, the interactions between the content (e.g., deep neural network models, Wikipedia-like knowledge bases) and users can be regarded as a dynamic bipartite graph. In this paper, to maximize the cache hit rate, we leverage an effective dynamic graph neural network (DGNN) to jointly learn the structural and temporal patterns embedded in the bipartite graph. Furthermore, in order to gain deeper insights into the dynamics within the evolving graph, we propose an age of information (AoI) based attention mechanism to extract valuable historical information while avoiding the problem of message staleness. Building on this prediction model, we also develop a cache selection algorithm to make caching decisions in accordance with the prediction results. Extensive results demonstrate that our model can obtain a higher prediction accuracy than other state-of-the-art schemes on two real-world datasets. The hit rate results further verify the superiority of the caching policy based on our proposed model over traditional approaches.
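To make the link between predicted popularity and the cache hit rate concrete, here is a minimal sketch that assumes the simplest possible policy: cache the items with the highest predicted scores, then measure the fraction of requests served from the cache. The abstract does not specify the paper's cache selection algorithm, so `select_cache`, `hit_rate`, and the toy scores are hypothetical illustrations only.

```python
def select_cache(predicted_popularity, cache_size):
    """Return the ids of the `cache_size` items with the highest predicted score."""
    ranked = sorted(predicted_popularity,
                    key=predicted_popularity.get,
                    reverse=True)
    return set(ranked[:cache_size])

def hit_rate(cache, requests):
    """Fraction of requests that can be served from the cache."""
    if not requests:
        return 0.0
    hits = sum(1 for item in requests if item in cache)
    return hits / len(requests)

# Made-up popularity scores, standing in for a prediction model's output.
predicted = {"a": 0.9, "b": 0.1, "c": 0.5, "d": 0.3}
cache = select_cache(predicted, cache_size=2)

# Made-up request trace for the next time window.
requests = ["a", "c", "b", "a", "d"]
print(sorted(cache), hit_rate(cache, requests))  # ['a', 'c'] 0.6
```

Under this policy the hit rate depends entirely on how well the predicted ranking matches future requests, which is why prediction accuracy and hit rate are reported together.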

Abnormal Signal Recognition with Time-Frequency Spectrogram: A Deep Learning Approach

May 30, 2022

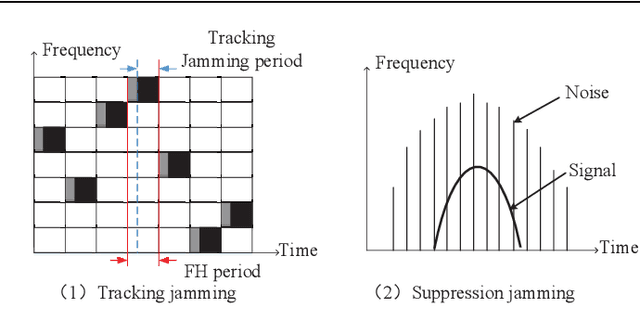

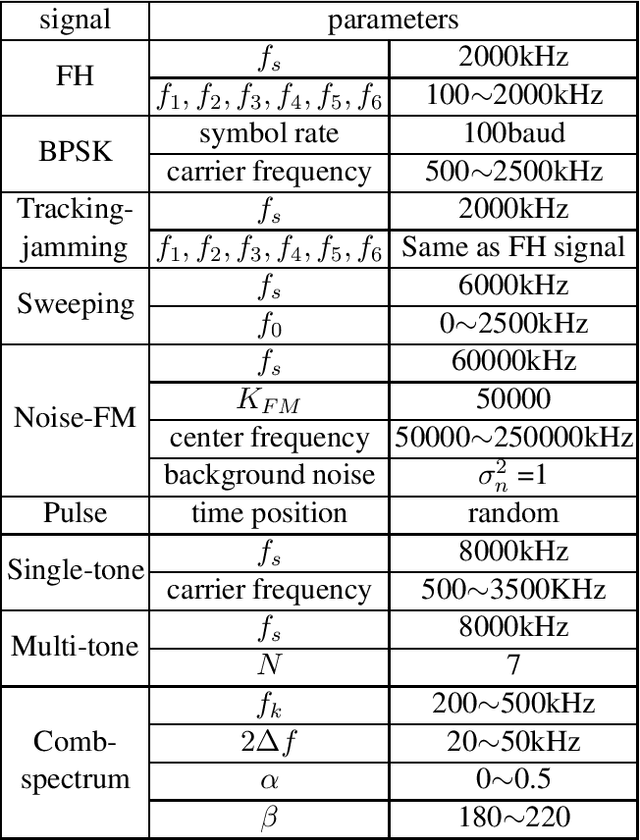

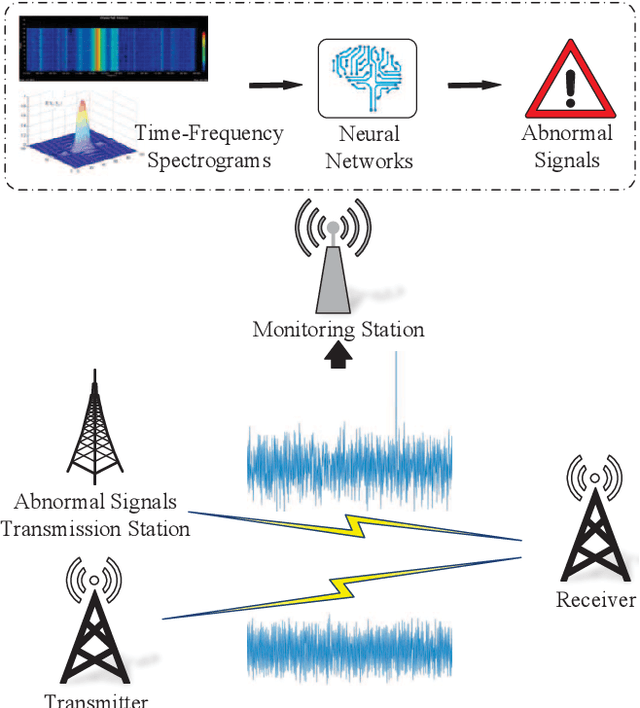

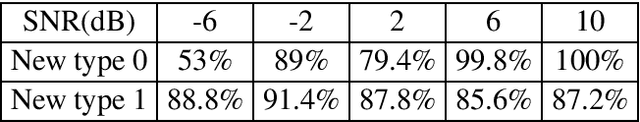

Abstract:With the increasingly complex and changeable electromagnetic environment, wireless communication systems are facing jamming and abnormal signal injection, which significantly affect the normal operation of a communication system. In particular, the abnormal signals may emulate the normal signals, which makes abnormal signal recognition very challenging. In this paper, we propose a new abnormal signal recognition scheme, which combines time-frequency analysis with deep learning to effectively identify synthetic abnormal communication signals. Firstly, we emulate synthetic abnormal communication signals including seven jamming patterns. Then, we model an abnormal communication signal recognition system based on the communication protocol between the transmitter and the receiver. To improve the performance, we convert the original signal into a time-frequency spectrogram and develop an image classification algorithm. Simulation results demonstrate that the proposed method can effectively recognize the abnormal signals under various parameter configurations, even under low signal-to-noise ratio (SNR) and low jamming-to-signal ratio (JSR) conditions.
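The signal-to-spectrogram step can be sketched with a plain short-time Fourier transform (STFT). This is only an illustration under stated assumptions: a real-valued test tone, a Hann window, and the frame length and hop size below are all hypothetical choices, and the abstract does not say which time-frequency transform or parameters the paper uses.

```python
import numpy as np

def stft_spectrogram(signal, frame_len=128, hop=64):
    """Power spectrogram from a windowed short-time Fourier transform.

    Returns an array of shape (n_frames, frame_len // 2 + 1).
    """
    signal = np.asarray(signal, dtype=float)
    window = np.hanning(frame_len)
    n_frames = 1 + (len(signal) - frame_len) // hop
    # Slice the signal into overlapping frames and apply the window.
    frames = np.stack([
        signal[i * hop : i * hop + frame_len] * window
        for i in range(n_frames)
    ])
    spectrum = np.fft.rfft(frames, axis=1)  # one-sided FFT of each frame
    return np.abs(spectrum) ** 2

# Test signal: one second of a 128 Hz tone sampled at 1024 Hz (made-up values).
fs = 1024
t = np.arange(fs) / fs
tone = np.sin(2 * np.pi * 128.0 * t)

spec = stft_spectrogram(tone)
peak_bin = int(spec.mean(axis=0).argmax())
print(spec.shape, peak_bin * fs / 128)  # (15, 65) 128.0
```

The resulting 2D array can be treated as a single-channel image, which is what allows an image classifier to be applied to signal recognition.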

Edge Semantic Cognitive Intelligence for 6G Networks: Models, Framework, and Applications

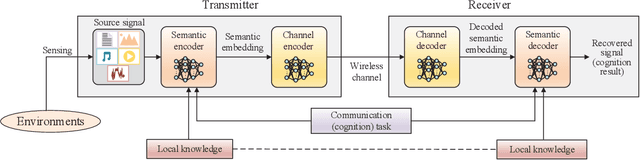

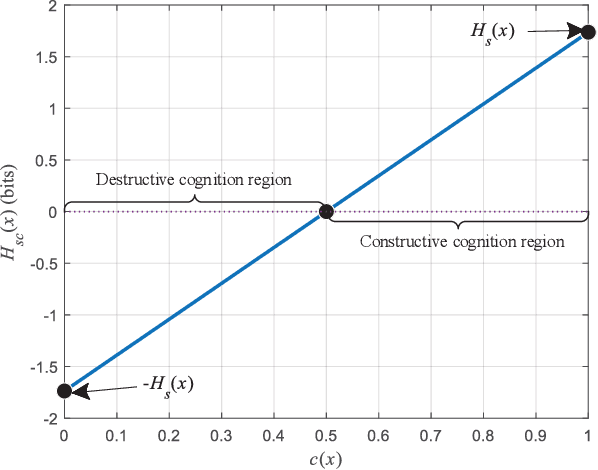

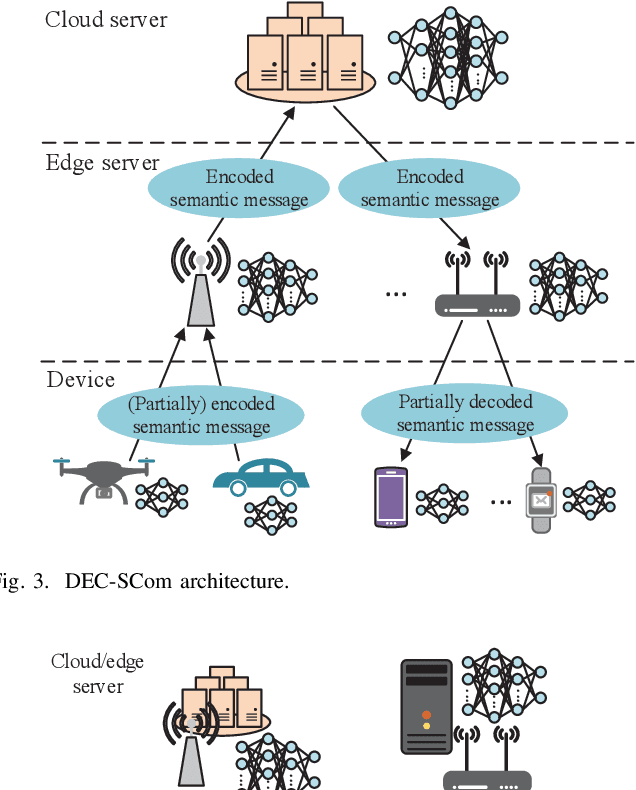

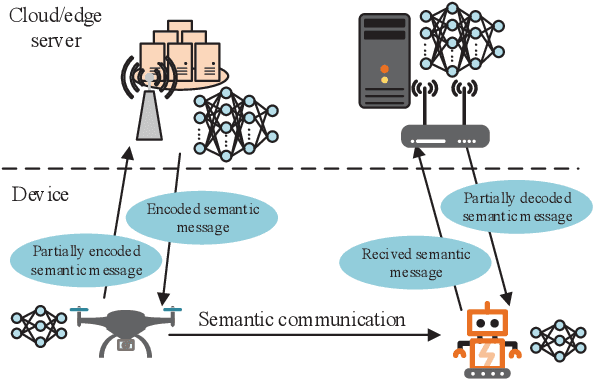

May 24, 2022

Abstract:Edge intelligence is anticipated to underlay the pathway to connected intelligence for 6G networks, but the organic confluence of edge computing and artificial intelligence still needs to be carefully treated. To this end, this article discusses the concepts of edge intelligence from the semantic cognitive perspective. Two instructive theoretical models for edge semantic cognitive intelligence (ESCI) are first established. Afterwards, the ESCI framework orchestrating deep learning with semantic communication is discussed. Two representative applications are presented to shed light on the prospects of ESCI in 6G networks. Some open problems are finally listed to elicit future research directions for ESCI.

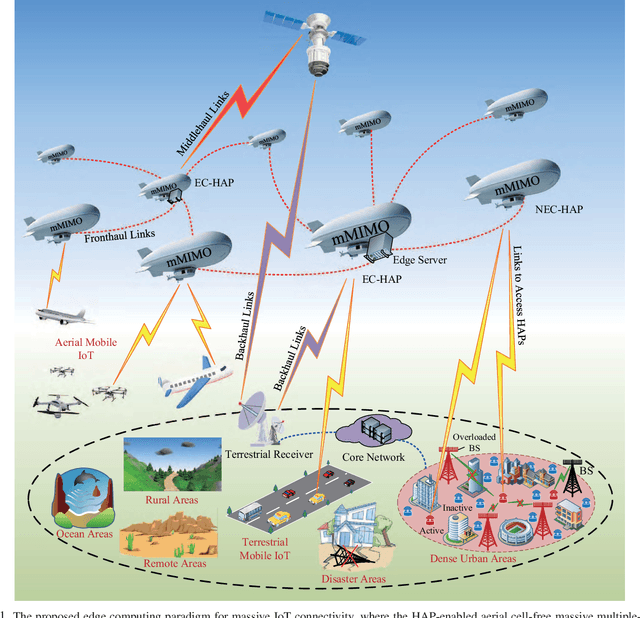

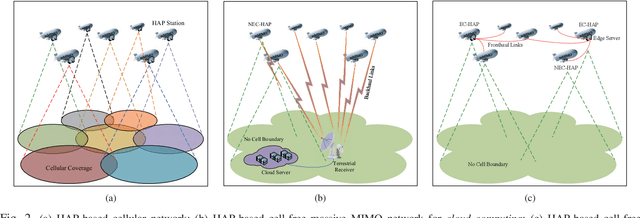

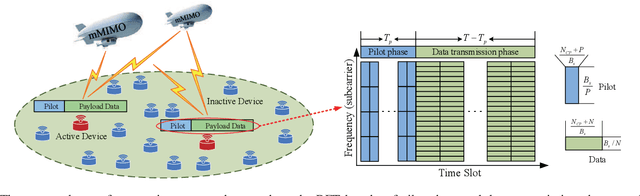

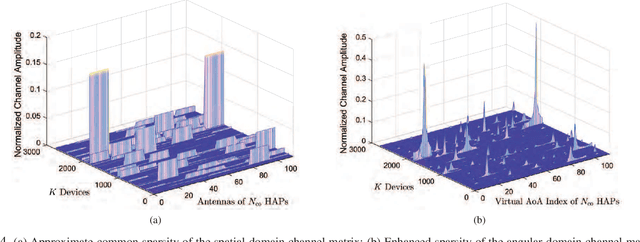

An Edge Computing Paradigm for Massive IoT Connectivity over High-Altitude Platform Networks

Jun 25, 2021

Abstract:With the advent of the Internet-of-Things (IoT) era, the ever-increasing number of devices and emerging applications have triggered the need for ubiquitous connectivity and more efficient computing paradigms. These stringent demands have posed significant challenges to the current wireless networks and their computing architectures. In this article, we propose a high-altitude platform (HAP) network-enabled edge computing paradigm to tackle the key issues of massive IoT connectivity. Specifically, we first provide a comprehensive overview of the recent advances in non-terrestrial network-based edge computing architectures. Then, the limitations of the existing solutions are further summarized from the perspectives of the network architecture, random access procedure, and multiple access techniques. To overcome the limitations, we propose a HAP-enabled aerial cell-free massive multiple-input multiple-output network to realize the edge computing paradigm, where multiple HAPs cooperate via the edge servers to serve IoT devices. For the case of a massive number of devices, we further adopt a grant-free massive access scheme to guarantee low-latency and high-efficiency massive IoT connectivity to the network. Besides, a case study is provided to demonstrate the effectiveness of the proposed solution. Finally, to shed light on the future research directions of HAP network-enabled edge computing paradigms, the key challenges and open issues are discussed.

Deep Learning for Signal Demodulation in Physical Layer Wireless Communications: Prototype Platform, Open Dataset, and Analytics

Mar 08, 2019

Abstract:In this paper, we investigate deep learning (DL)-enabled signal demodulation methods and establish the first open dataset of real modulated signals for wireless communication systems. Specifically, we propose a flexible communication prototype platform for measuring a real modulation dataset. Then, based on the measured dataset, two DL-based demodulators, called the deep belief network (DBN)-support vector machine (SVM) demodulator and the adaptive boosting (AdaBoost) based demodulator, are proposed. The proposed DBN-SVM based demodulator exploits the advantages of both DBN and SVM, i.e., the advantage of DBN as a feature extractor and SVM as a feature classifier. In the DBN-SVM based demodulator, the received signals are normalized before being fed to the DBN network. Furthermore, an AdaBoost based demodulator is developed, which employs the $k$-Nearest Neighbor (KNN) as a weak classifier to form a strong combined classifier. Finally, experimental results indicate that the proposed DBN-SVM based demodulator and AdaBoost based demodulator are superior to single classification methods using DBN or SVM and to the maximum likelihood (MLD) based demodulator.

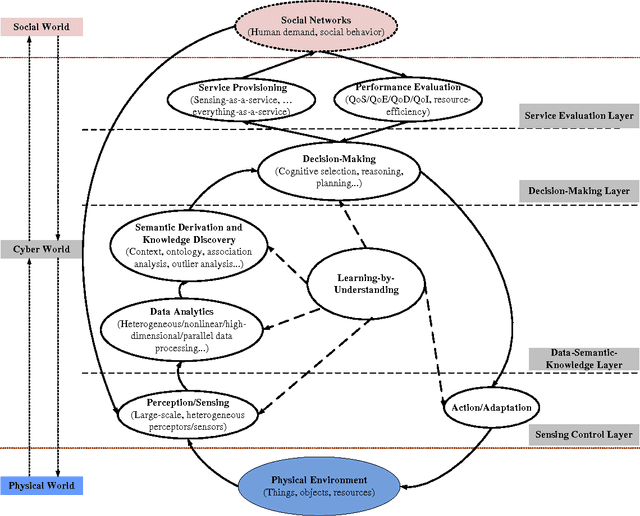

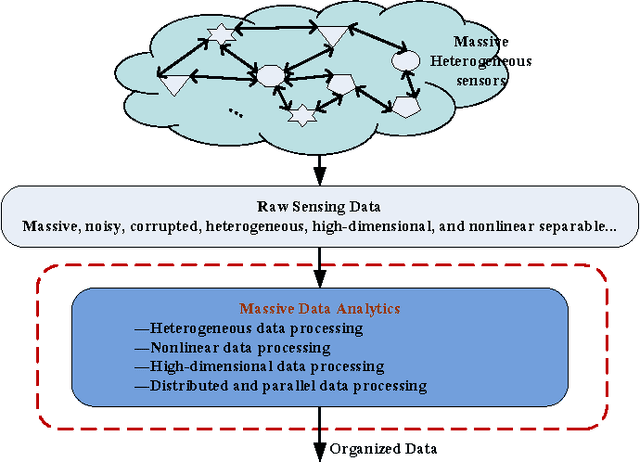

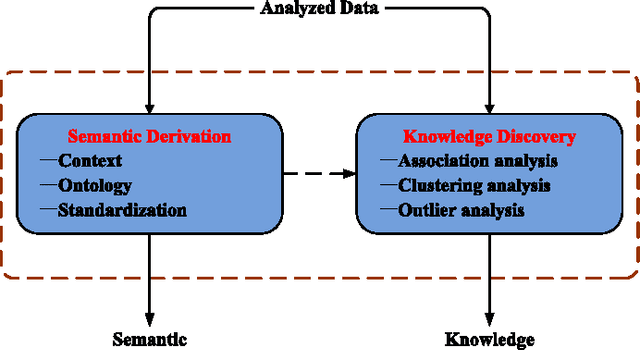

Cognitive Internet of Things: A New Paradigm beyond Connection

Mar 11, 2014

Abstract:Current research on the Internet of Things (IoT) mainly focuses on how to enable general objects to see, hear, and smell the physical world for themselves, and to connect them so that they can share their observations. In this paper, we argue that being connected alone is not enough; beyond that, general objects should have the capability to learn, think, and understand both the physical and social worlds by themselves. This practical need impels us to develop a new paradigm, named Cognitive Internet of Things (CIoT), to empower the current IoT with a 'brain' for high-level intelligence. Specifically, we first present a comprehensive definition of CIoT, primarily inspired by the effectiveness of human cognition. Then, we propose an operational framework for CIoT, which mainly characterizes the interactions among five fundamental cognitive tasks: perception-action cycle, massive data analytics, semantic derivation and knowledge discovery, intelligent decision-making, and on-demand service provisioning. Furthermore, we provide a systematic tutorial on key enabling techniques involved in the cognitive tasks. In addition, we discuss the design of proper performance metrics for evaluating the enabling techniques. Last but not least, we present the research challenges and open issues ahead. Building on the present work and potentially fruitful future studies, CIoT has the capability to bridge the physical world (with objects, resources, etc.) and the social world (with human demand, social behavior, etc.), and to enhance smart resource allocation, automatic network operation, and intelligent service provisioning.
