Guiquan Liu

On Predictability of Reinforcement Learning Dynamics for Large Language Models

Oct 02, 2025

Abstract:Recent advances in reasoning capabilities of large language models (LLMs) are largely driven by reinforcement learning (RL), yet the underlying parameter dynamics during RL training remain poorly understood. This work identifies two fundamental properties of RL-induced parameter updates in LLMs: (1) Rank-1 Dominance, where the top singular subspace of the parameter update matrix nearly fully determines reasoning improvements, recovering over 99% of performance gains; and (2) Rank-1 Linear Dynamics, where this dominant subspace evolves linearly throughout training, enabling accurate prediction from early checkpoints. Extensive experiments across 8 LLMs and 7 algorithms validate the generalizability of these properties. More importantly, based on these findings, we propose AlphaRL, a plug-in acceleration framework that extrapolates the final parameter update using a short early training window, achieving up to 2.5x speedup while retaining more than 96% of reasoning performance without extra modules or hyperparameter tuning. This positions our finding as a versatile and practical tool for large-scale RL, opening a path toward a principled, interpretable, and efficient training paradigm for LLMs.
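To make the Rank-1 Dominance idea concrete, the following is a minimal sketch of a rank-1 truncation of an update matrix via SVD. It is not the paper's procedure: the matrix `delta`, its shape, and the noise level are all made-up assumptions, and the helper names `rank1_approx` and `rank1_energy` are hypothetical.

```python
import numpy as np


def rank1_approx(delta):
    """Return the rank-1 truncation of a 2-D update matrix via SVD."""
    u, s, vt = np.linalg.svd(delta, full_matrices=False)
    # Keep only the top singular triplet.
    return s[0] * np.outer(u[:, 0], vt[0])


def rank1_energy(delta):
    """Fraction of squared Frobenius norm carried by the top singular value."""
    s = np.linalg.svd(delta, compute_uv=False)
    return float(s[0] ** 2 / np.sum(s ** 2))


# Synthetic stand-in for a parameter update (assumption, not real data):
# a strong rank-1 component plus small dense noise.
rng = np.random.default_rng(0)
a = rng.normal(size=(64, 1))
b = rng.normal(size=(1, 32))
delta = a @ b + 0.01 * rng.normal(size=(64, 32))

approx = rank1_approx(delta)
rel_err = np.linalg.norm(delta - approx) / np.linalg.norm(delta)
energy = rank1_energy(delta)
```

Here `rel_err` measures how much of the synthetic update is lost by keeping only the top singular direction, and `energy` is the matching share of squared norm. Whether a real RL update behaves this way is the empirical claim of the abstract, not something this sketch demonstrates.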

SelfAug: Mitigating Catastrophic Forgetting in Retrieval-Augmented Generation via Distribution Self-Alignment

Sep 04, 2025

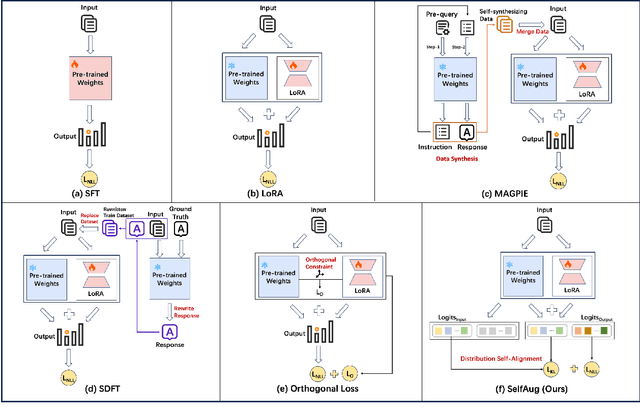

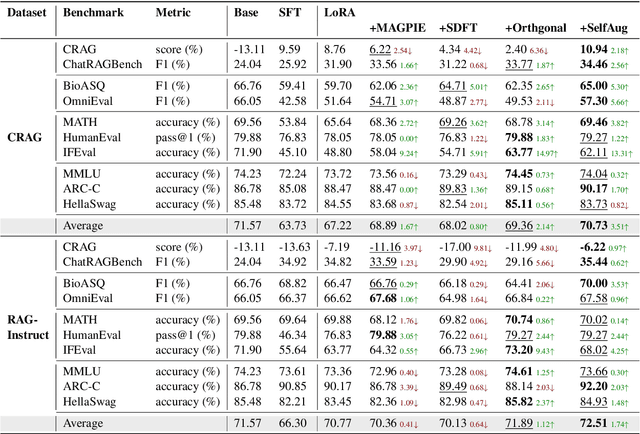

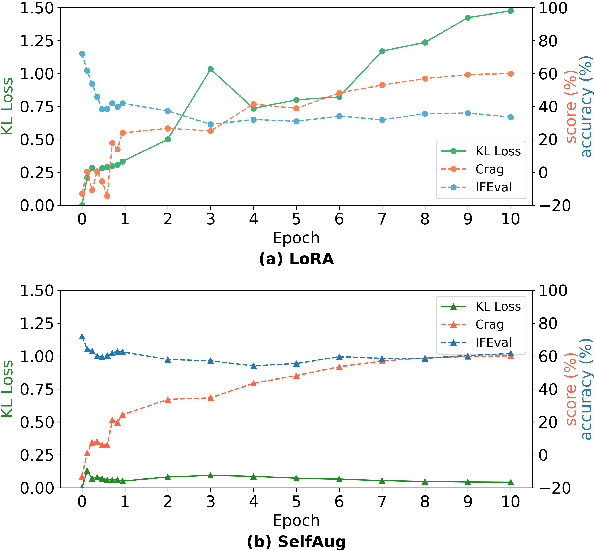

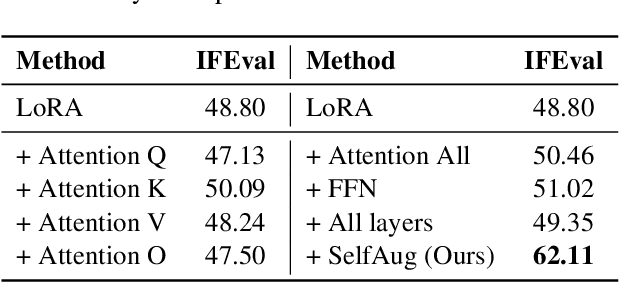

Abstract:Recent advancements in large language models (LLMs) have revolutionized natural language processing through their remarkable capabilities in understanding and executing diverse tasks. While supervised fine-tuning, particularly in Retrieval-Augmented Generation (RAG) scenarios, effectively enhances task-specific performance, it often leads to catastrophic forgetting, where models lose their previously acquired knowledge and general capabilities. Existing solutions either require access to general instruction data or face limitations in preserving the model's original distribution. To overcome these limitations, we propose SelfAug, a self-distribution alignment method that aligns input sequence logits to preserve the model's semantic distribution, thereby mitigating catastrophic forgetting and improving downstream performance. Extensive experiments demonstrate that SelfAug achieves a superior balance between downstream learning and general capability retention. Our comprehensive empirical analysis reveals a direct correlation between distribution shifts and the severity of catastrophic forgetting in RAG scenarios, highlighting how the absence of RAG capabilities in general instruction tuning leads to significant distribution shifts during fine-tuning. Our findings not only advance the understanding of catastrophic forgetting in RAG contexts but also provide a practical solution applicable across diverse fine-tuning scenarios. Our code is publicly available at https://github.com/USTC-StarTeam/SelfAug.

DeltaEdit: Enhancing Sequential Editing in Large Language Models by Controlling Superimposed Noise

May 12, 2025

Abstract:Sequential knowledge editing techniques aim to continuously update the knowledge in large language models at a low cost, preventing the models from generating outdated or incorrect information. However, existing sequential editing methods suffer from a significant decline in editing success rates after long-term editing. Through theoretical analysis and experiments, we identify that as the number of edits increases, the model's output increasingly deviates from the desired target, leading to a drop in editing success rates. We refer to this issue as the accumulation of superimposed noise problem. To address this, we identify the factors contributing to this deviation and propose DeltaEdit, a novel method that optimizes update parameters through a dynamic orthogonal constraints strategy, effectively reducing interference between edits to mitigate deviation. Experimental results demonstrate that DeltaEdit significantly outperforms existing methods in edit success rates and the retention of generalization capabilities, ensuring stable and reliable model performance even under extensive sequential editing.

ChemEval: A Comprehensive Multi-Level Chemical Evaluation for Large Language Models

Sep 21, 2024

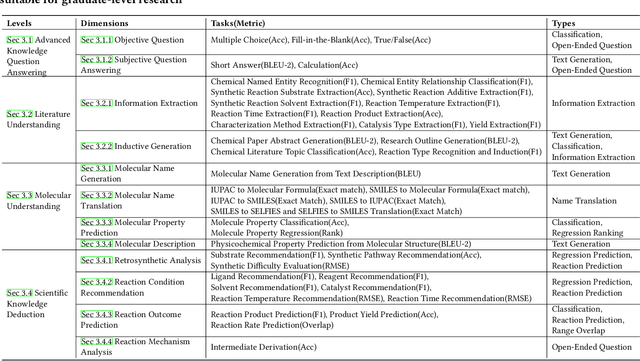

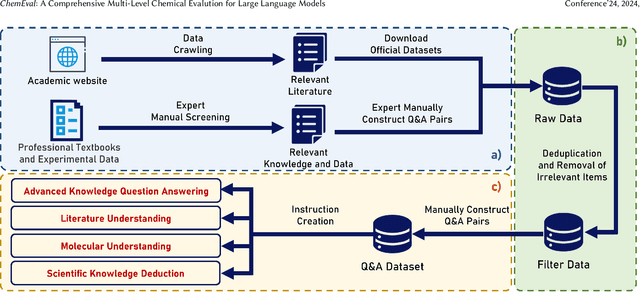

Abstract:There is growing interest in the role that LLMs play in chemistry, which has led to an increased focus on developing LLM benchmarks tailored to chemical domains to assess the performance of LLMs across a spectrum of chemical tasks varying in type and complexity. However, existing benchmarks in this domain fail to adequately meet the specific requirements of chemical research professionals. To this end, we propose ChemEval, which provides a comprehensive assessment of the capabilities of LLMs across a wide range of chemical domain tasks. Specifically, ChemEval identifies 4 crucial progressive levels in chemistry, assessing 12 dimensions of LLMs across 42 distinct chemical tasks, which are informed by open-source data and data meticulously crafted by chemical experts, ensuring that the tasks have practical value and can effectively evaluate the capabilities of LLMs. In the experiments, we evaluate 12 mainstream LLMs on ChemEval under zero-shot and few-shot learning contexts, using carefully selected demonstration examples and carefully designed prompts. The results show that while general LLMs like GPT-4 and Claude-3.5 excel in literature understanding and instruction following, they fall short in tasks demanding advanced chemical knowledge. Conversely, specialized LLMs exhibit enhanced chemical competencies, albeit with reduced literary comprehension. This suggests that LLMs have significant potential for enhancement when tackling sophisticated tasks in the field of chemistry. We believe our work will facilitate the exploration of their potential to drive progress in chemistry. Our benchmark and analysis will be available at https://github.com/USTC-StarTeam/ChemEval.

Editing Knowledge Representation of Language Model via Rephrased Prefix Prompts

Mar 21, 2024

Abstract:Neural language models (LMs) have been extensively trained on vast corpora to store factual knowledge about various aspects of the world described in texts. Current technologies typically employ knowledge editing methods or specific prompts to modify LM outputs. However, existing knowledge editing methods are costly and inefficient, struggling to produce appropriate text. Additionally, prompt engineering is opaque and requires significant effort to find suitable prompts. To address these issues, we introduce a new method called PSPEM (Prefix Soft Prompt Editing Method), which needs to be trained only once and can then be reused indefinitely. It resolves the inefficiencies and generalizability issues in knowledge editing methods and overcomes the opacity of prompt engineering by automatically seeking optimal soft prompts. Specifically, PSPEM utilizes a prompt encoder and an encoding converter to refine key information in prompts and uses prompt alignment techniques to guide model generation, ensuring text consistency and adherence to the intended structure and content, thereby maintaining an optimal balance between efficiency and accuracy. We have validated the effectiveness of PSPEM through knowledge editing and attribute inserting. On the COUNTERFACT dataset, PSPEM achieved nearly 100% editing accuracy and demonstrated the highest level of fluency. We further analyzed the similarities between PSPEM and original prompts and their impact on the model's internals. The results indicate that PSPEM can serve as an alternative to original prompts, supporting the model in effective editing.

Locating and Mitigating Gender Bias in Large Language Models

Mar 21, 2024

Abstract:Large language models (LLMs) are pre-trained on extensive corpora to learn facts and human cognition, which contain human preferences. However, this process can inadvertently lead to these models acquiring biases and stereotypes prevalent in society. Prior research has typically tackled the issue of bias from a one-dimensional perspective, concentrating either on locating or on mitigating it. This limited perspective has made it difficult for lines of research on bias to complement and progressively build upon one another. In this study, we integrate the processes of locating and mitigating bias within a unified framework. Initially, we use causal mediation analysis to trace the causal effects of different components' activation within a large language model. Building on this, we propose the LSDM (Least Square Debias Method), a knowledge-editing based method for mitigating gender bias in occupational pronouns, and compare it against two baselines on three gender bias datasets and seven knowledge competency test datasets. The experimental results indicate that the primary contributors to gender bias are the bottom MLP modules acting on the last token of occupational pronouns and the top attention module acting on the final word in the sentence. Furthermore, LSDM mitigates gender bias in the model more effectively than the other baselines, while fully preserving the model's capabilities in all other aspects.

Hierarchy-Aware T5 with Path-Adaptive Mask Mechanism for Hierarchical Text Classification

Sep 17, 2021

Abstract:Hierarchical Text Classification (HTC), which aims to predict text labels organized in a hierarchical space, is a significant but under-investigated task in natural language processing. Existing methods usually encode the entire hierarchical structure and fail to construct a robust label-dependent model, making it hard to make accurate predictions on sparse lower-level labels and resulting in low Macro-F1. In this paper, we propose a novel PAMM-HiA-T5 model for HTC: a hierarchy-aware T5 model with a path-adaptive mask mechanism that not only builds the knowledge of upper-level labels into low-level ones but also introduces path dependency information in label prediction. Specifically, we generate a multi-level sequential label structure to exploit hierarchical dependency across different levels with Breadth-First Search (BFS) and the T5 model. To further improve label dependency prediction within each path, we then propose an original path-adaptive mask mechanism (PAMM) to identify the label's path information, eliminating sources of noise from other paths. Comprehensive experiments on three benchmark datasets show that our novel PAMM-HiA-T5 model greatly outperforms all state-of-the-art HTC approaches, especially in Macro-F1. The ablation studies show that the improvements mainly come from our innovative approach instead of T5.
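As a rough illustration of turning a label hierarchy into a level-by-level sequence with BFS, here is a small sketch. The abstract does not describe the exact target format used by PAMM-HiA-T5, so the function `bfs_label_sequence`, the toy hierarchy, and the output layout are all hypothetical.

```python
from collections import deque


def bfs_label_sequence(children, root, gold):
    """Group a document's gold labels by depth, visiting the hierarchy with BFS.

    children: dict mapping a label to its child labels (hypothetical format).
    root:     name of the virtual root node.
    gold:     set of labels assigned to the document.
    """
    levels = []
    queue = deque([(root, 0)])
    while queue:
        node, depth = queue.popleft()
        for child in children.get(node, []):
            if child in gold:
                # Grow the level list lazily as deeper labels appear.
                while len(levels) <= depth:
                    levels.append([])
                levels[depth].append(child)
                queue.append((child, depth + 1))
    return levels


# Toy hierarchy and gold labels (made up for illustration).
children = {
    "root": ["science", "sports"],
    "science": ["physics", "biology"],
    "sports": ["tennis"],
}
gold = {"science", "physics", "sports", "tennis"}
sequence = bfs_label_sequence(children, "root", gold)
```

With this toy input, `sequence` groups upper-level labels before lower-level ones, which is the ordering a sequence-to-sequence model would need in order to condition lower levels on upper ones.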

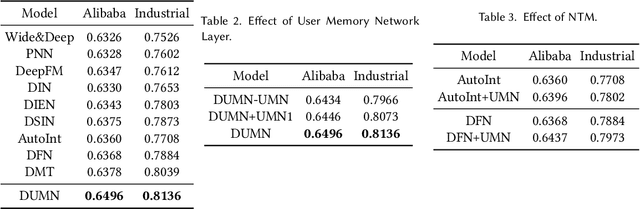

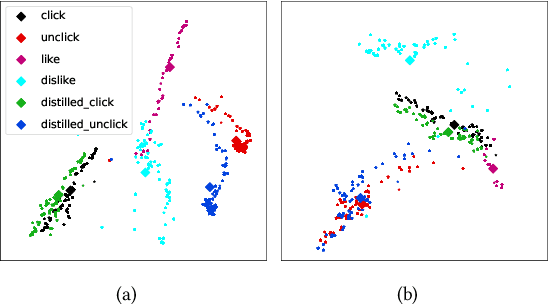

Denoising User-aware Memory Network for Recommendation

Jul 12, 2021

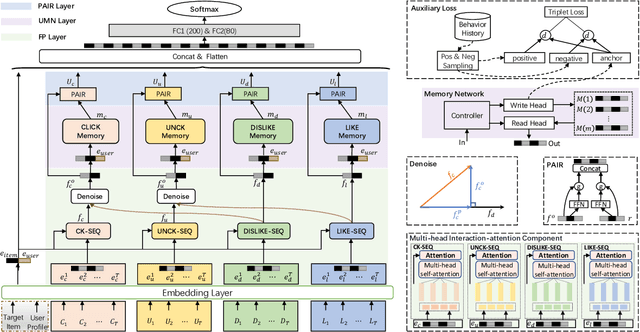

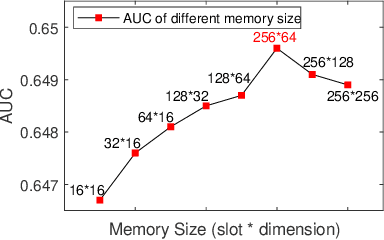

Abstract:For better user satisfaction and business effectiveness, more and more attention has been paid to sequence-based recommendation systems, which are used to infer the evolution of users' dynamic preferences, and recent studies have noticed that the evolution of users' preferences can be better understood from implicit and explicit feedback sequences. However, most existing recommendation techniques do not consider the noise contained in implicit feedback, which leads to a biased representation of user interest and suboptimal recommendation performance. Meanwhile, existing methods use the item sequence to capture the evolution of user interest. The performance of these methods is limited by the length of the sequence, and they cannot effectively model long-term interest over a long period of time. Based on this observation, we propose a novel CTR model named denoising user-aware memory network (DUMN). Specifically, the framework: (i) proposes a feature purification module based on orthogonal mapping, which uses the representation of explicit feedback to purify the representation of implicit feedback and effectively denoise the implicit feedback; (ii) designs a user memory network to model long-term interests in a fine-grained way by improving the memory network, an aspect ignored by existing methods; and (iii) develops a preference-aware interactive representation component to fuse the long-term and short-term interests of users based on gating to understand the evolution of unbiased preferences of users. Extensive experiments on two real e-commerce user behavior datasets show that DUMN achieves a significant improvement over the state-of-the-art baselines. The code of the DUMN model has been uploaded as additional material.
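The abstract does not spell out the orthogonal mapping used in the feature purification module. One simple reading, shown here purely as an assumption, is vector rejection: subtracting from one representation its projection onto another, so the result is orthogonal to the reference. The function `reject` and the example vectors are hypothetical and are not taken from DUMN.

```python
import numpy as np


def reject(v, ref):
    """Return the component of v that is orthogonal to ref (vector rejection)."""
    denom = np.dot(ref, ref)
    if denom == 0.0:
        # A zero reference vector defines no direction; leave v unchanged.
        return v.copy()
    return v - (np.dot(v, ref) / denom) * ref


# Made-up 3-D representations, for illustration only.
implicit = np.array([2.0, 1.0, 0.5])   # stand-in for an implicit-feedback vector
explicit = np.array([1.0, 0.0, 0.0])   # stand-in for an explicit-feedback vector
purified = reject(implicit, explicit)
```

Which feedback signal serves as the reference, and whether the projected or the rejected component is kept, depends on the model design and is not stated in the abstract.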

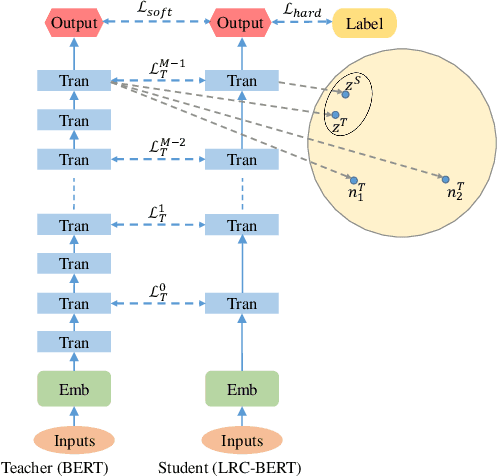

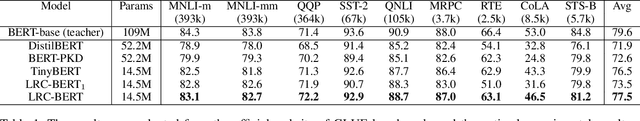

LRC-BERT: Latent-representation Contrastive Knowledge Distillation for Natural Language Understanding

Dec 14, 2020

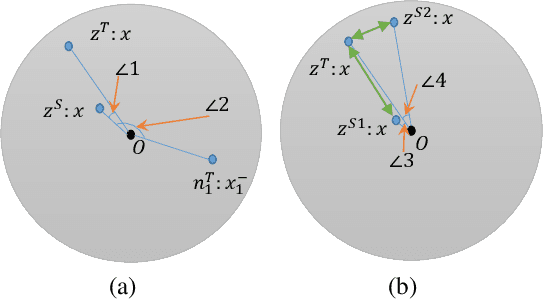

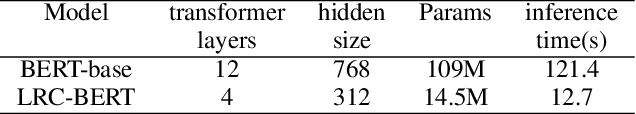

Abstract:Pre-trained models such as BERT have achieved great results in various natural language processing problems. However, their large number of parameters demands significant memory and inference time, which makes them difficult to deploy on edge devices. In this work, we propose a knowledge distillation method, LRC-BERT, based on contrastive learning to fit the output of the intermediate layer from the angular distance aspect, which is not considered by existing distillation methods. Furthermore, we introduce a gradient perturbation-based training architecture in the training phase to increase the robustness of LRC-BERT, the first such attempt in knowledge distillation. Additionally, in order to better capture the distribution characteristics of the intermediate layer, we design a two-stage training method for the total distillation loss. Finally, in evaluations on 8 datasets from the General Language Understanding Evaluation (GLUE) benchmark, the proposed LRC-BERT outperforms existing state-of-the-art methods, which demonstrates the effectiveness of our method.

Privacy Preserving PCA for Multiparty Modeling

Feb 09, 2020

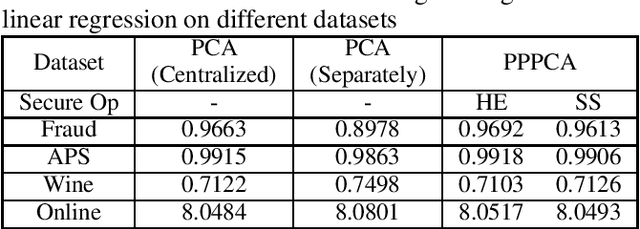

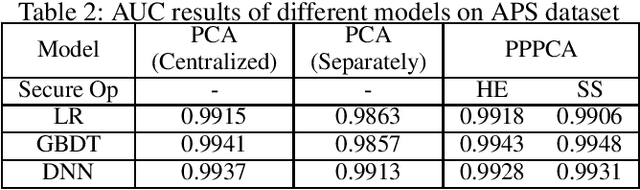

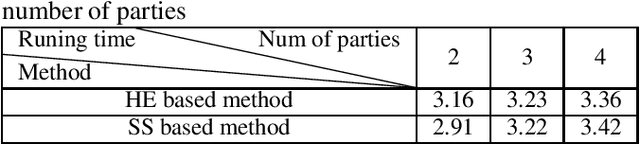

Abstract:In this paper, we present a general multiparty modeling paradigm with Privacy Preserving Principal Component Analysis (PPPCA) for horizontally partitioned data. PPPCA can accomplish multiparty cooperative execution of PCA while keeping plaintext data local to each party. We also propose implementations using two techniques, i.e., homomorphic encryption and secret sharing. The output of PPPCA can be sent directly to the data consumer to build any machine learning model. We conduct experiments on three UCI benchmark datasets and a real-world fraud detection dataset. Results show that the accuracy of the model built upon PPPCA is the same as that of the model with PCA built on centralized plaintext data.
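The reason PCA can work on horizontally partitioned data is that the global covariance matrix can be assembled from per-party sums. The sketch below shows that arithmetic in plaintext only: it omits the homomorphic encryption and secret sharing that PPPCA uses to protect the aggregation step, and the helper names `local_stats` and `pooled_pca` as well as the random data are hypothetical.

```python
import numpy as np


def local_stats(x):
    """Statistics one party computes on its own rows: count, sum, X^T X."""
    return x.shape[0], x.sum(axis=0), x.T @ x


def pooled_pca(stats, k):
    """Top-k eigenvalues and eigenvectors of the covariance built from pooled stats."""
    n = sum(s[0] for s in stats)
    total = sum(s[1] for s in stats)
    scatter = sum(s[2] for s in stats)
    mean = total / n
    # Population covariance: E[x x^T] - mean mean^T.
    cov = scatter / n - np.outer(mean, mean)
    vals, vecs = np.linalg.eigh(cov)
    order = np.argsort(vals)[::-1][:k]
    return vals[order], vecs[:, order]


# Three parties holding different rows over the same 5 features (synthetic data).
rng = np.random.default_rng(1)
parties = [rng.normal(size=(n, 5)) for n in (40, 60, 25)]
vals, vecs = pooled_pca([local_stats(p) for p in parties], k=2)

# Reference: the same eigenvalues computed on the centralized data.
full = np.vstack(parties)
ref_cov = np.cov(full, rowvar=False, bias=True)
ref_vals = np.sort(np.linalg.eigvalsh(ref_cov))[::-1][:2]
```

In this plaintext version the aggregator sees each party's sums directly. A privacy-preserving variant would need to compute the three pooled quantities under encryption or secret sharing, which is outside the scope of this sketch.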
