Giorgia Ramponi

Optimal Sample Complexity for Single Time-Scale Actor-Critic with Momentum

Feb 02, 2026

Abstract: We establish an optimal sample complexity of $O(\epsilon^{-2})$ for obtaining an $\epsilon$-optimal global policy using a single-timescale actor-critic (AC) algorithm in infinite-horizon discounted Markov decision processes (MDPs) with finite state-action spaces, improving upon the prior state of the art of $O(\epsilon^{-3})$. Our approach applies STORM (STOchastic Recursive Momentum) to reduce variance in the critic updates. However, because samples are drawn from a nonstationary occupancy measure induced by the evolving policy, variance reduction via STORM alone is insufficient. To address this challenge, we maintain a buffer holding a small fraction of recent samples and sample uniformly from it for each critic update. Importantly, these mechanisms are compatible with existing deep learning architectures and require only minor modifications, without compromising practical applicability.
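As a rough illustration of the two mechanisms named in the abstract, the sketch below shows the generic STORM recursion and a fixed-size buffer of recent samples with uniform sampling. It is not the paper's algorithm: the names `storm_update` and `RecentBuffer`, the momentum parameter `a`, and the buffer capacity are all assumptions made here for illustration, and the abstract does not specify how the critic gradient is computed.

```python
import random
from collections import deque

import numpy as np


def storm_update(d_prev, grad_new, grad_old, a):
    """Generic STORM recursion (hypothetical helper, not the paper's code).

    d_t = g(x_t; xi_t) + (1 - a) * (d_{t-1} - g(x_{t-1}; xi_t)),
    where grad_new and grad_old are evaluated on the SAME sample xi_t
    at the current and previous iterates respectively.
    """
    return grad_new + (1.0 - a) * (d_prev - grad_old)


class RecentBuffer:
    """Keeps only the most recent `capacity` samples (assumed design)."""

    def __init__(self, capacity, seed=0):
        self.items = deque(maxlen=capacity)  # oldest entries are evicted first
        self.rng = random.Random(seed)

    def add(self, sample):
        self.items.append(sample)

    def sample(self):
        # Uniform draw over the samples currently stored.
        return self.rng.choice(list(self.items))
```

With `a = 1` the recursion reduces to a plain stochastic gradient, and with `a = 0` it carries the previous estimate forward corrected by the gradient difference; intermediate values trade off the two.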

Fine-tuning Behavioral Cloning Policies with Preference-Based Reinforcement Learning

Sep 30, 2025

Abstract: Deploying reinforcement learning (RL) in robotics, industry, and health care is blocked by two obstacles: the difficulty of specifying accurate rewards and the risk of unsafe, data-hungry exploration. We address this by proposing a two-stage framework that first learns a safe initial policy from a reward-free dataset of expert demonstrations, then fine-tunes it online using preference-based human feedback. We provide the first principled analysis of this offline-to-online approach and introduce BRIDGE, a unified algorithm that integrates both signals via an uncertainty-weighted objective. We derive regret bounds that shrink with the number of offline demonstrations, explicitly connecting the quantity of offline data to online sample efficiency. We validate BRIDGE in discrete and continuous control MuJoCo environments, showing it achieves lower regret than both standalone behavioral cloning and online preference-based RL. Our work establishes a theoretical foundation for designing more sample-efficient interactive agents.

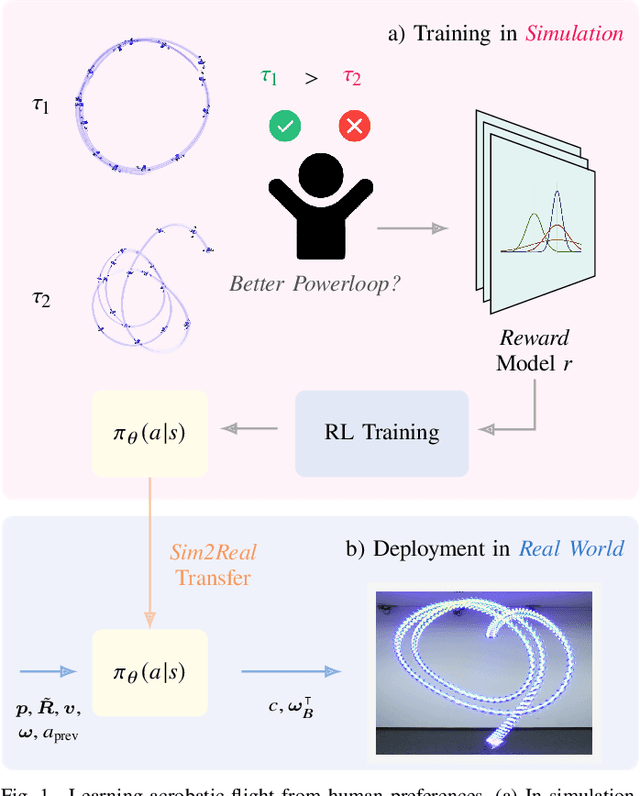

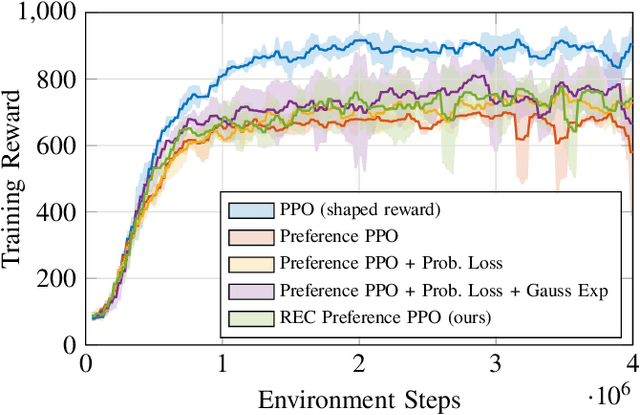

Learning Real-World Acrobatic Flight from Human Preferences

Aug 26, 2025

Abstract: Preference-based reinforcement learning (PbRL) enables agents to learn control policies without requiring manually designed reward functions, making it well-suited for tasks where objectives are difficult to formalize or inherently subjective. Acrobatic flight poses a particularly challenging problem due to its complex dynamics, rapid movements, and the importance of precise execution. In this work, we explore the use of PbRL for agile drone control, focusing on the execution of dynamic maneuvers such as powerloops. Building on Preference-based Proximal Policy Optimization (Preference PPO), we propose Reward Ensemble under Confidence (REC), an extension to the reward learning objective that improves preference modeling and learning stability. Our method achieves 88.4% of the shaped reward performance, compared to 55.2% with standard Preference PPO. We train policies in simulation and successfully transfer them to real-world drones, demonstrating multiple acrobatic maneuvers where human preferences emphasize stylistic qualities of motion. Furthermore, we demonstrate the applicability of our probabilistic reward model in a representative MuJoCo environment for continuous control. Finally, we highlight the limitations of manually designed rewards, observing only 60.7% agreement with human preferences. These results underscore the effectiveness of PbRL in capturing complex, human-centered objectives across both physical and simulated domains.

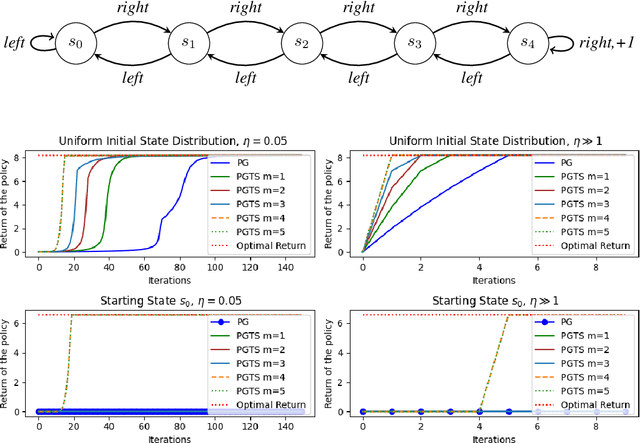

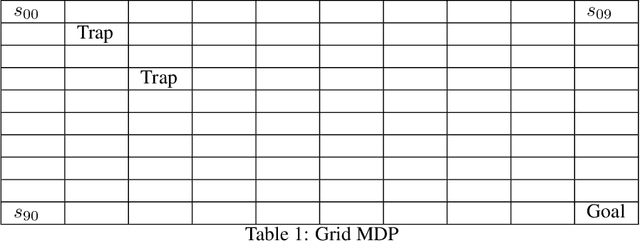

Policy Gradient with Tree Search: Avoiding Local Optimas through Lookahead

Jun 08, 2025

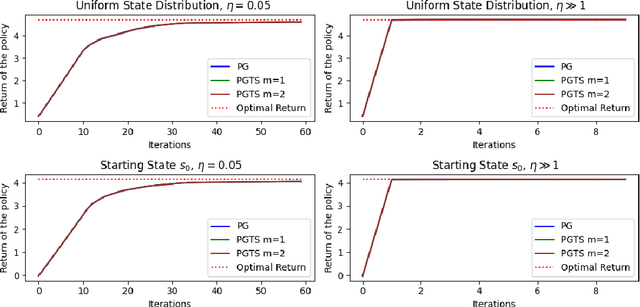

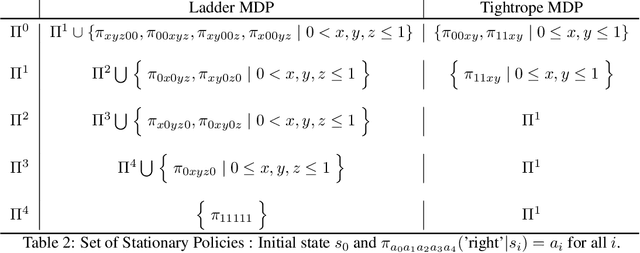

Abstract: Classical policy gradient (PG) methods in reinforcement learning frequently converge to suboptimal local optima, a challenge exacerbated in large or complex environments. This work investigates Policy Gradient with Tree Search (PGTS), an approach that integrates an $m$-step lookahead mechanism to enhance policy optimization. We provide theoretical analysis demonstrating that increasing the tree search depth $m$ monotonically reduces the set of undesirable stationary points and, consequently, improves the worst-case performance of any resulting stationary policy. Critically, our analysis accommodates practical scenarios where policy updates are restricted to states visited by the current policy, rather than requiring updates across the entire state space. Empirical evaluations on diverse MDP structures, including Ladder, Tightrope, and Gridworld environments, illustrate PGTS's ability to exhibit "farsightedness," navigate challenging reward landscapes, escape local traps where standard PG fails, and achieve superior solutions.

Learning Equilibria from Data: Provably Efficient Multi-Agent Imitation Learning

May 23, 2025

Abstract: This paper provides the first expert sample complexity characterization for learning a Nash equilibrium from expert data in Markov Games. We show that a new quantity, the single policy deviation concentrability coefficient, is unavoidable in the non-interactive imitation learning setting, and we provide an upper bound for behavioral cloning (BC) featuring this coefficient. BC exhibits substantial regret in games with a high concentrability coefficient, leading us to use expert queries to develop two novel algorithms: MAIL-BRO and MURMAIL. The former employs a best response oracle and learns an $\varepsilon$-Nash equilibrium with $\mathcal{O}(\varepsilon^{-4})$ expert and oracle queries. The latter completely bypasses the best response oracle at the cost of a worse expert query complexity of order $\mathcal{O}(\varepsilon^{-8})$. Finally, we provide numerical evidence confirming our theoretical findings.

Clustered KL-barycenter design for policy evaluation

Mar 04, 2025

Abstract: In the context of stochastic bandit models, this article examines how to design sample-efficient behavior policies for the importance sampling evaluation of multiple target policies. From importance sampling theory, it is well established that sample efficiency is highly sensitive to the KL divergence between the target and importance sampling distributions. We first analyze a single behavior policy defined as the KL-barycenter of the target policies. Then, we refine this approach by clustering the target policies into groups with small KL divergences and assigning each cluster its own KL-barycenter as a behavior policy. This clustered KL-based policy evaluation (CKL-PE) algorithm provides a novel perspective on optimal policy selection. We prove upper bounds on the sample complexity of our method and demonstrate its effectiveness with numerical validation.

Dual Formulation for Non-Rectangular Lp Robust Markov Decision Processes

Feb 13, 2025

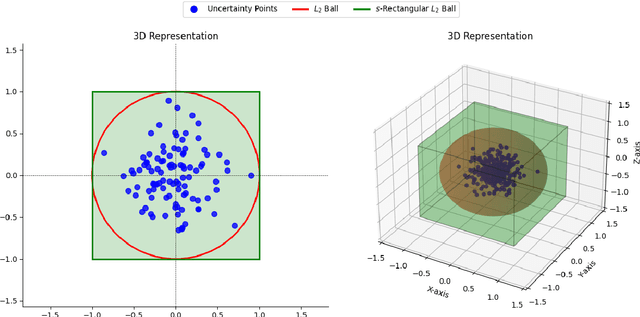

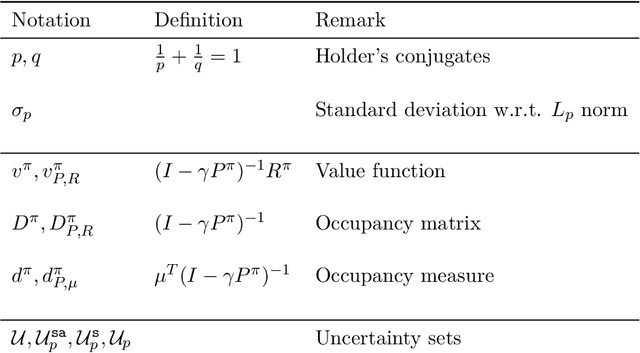

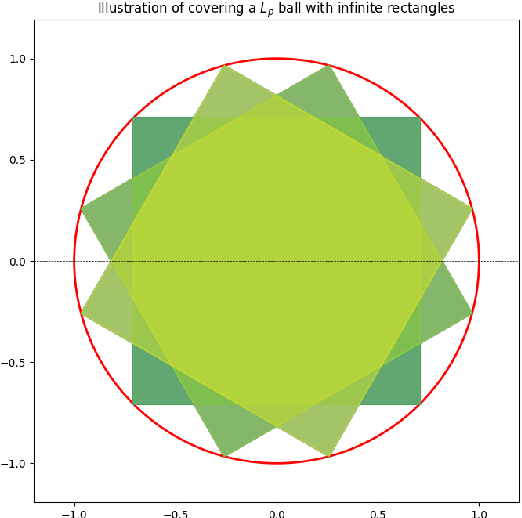

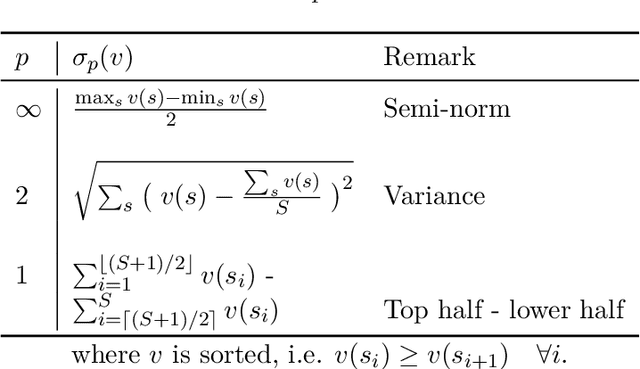

Abstract: We study robust Markov decision processes (RMDPs) with non-rectangular uncertainty sets, which capture interdependencies across states, unlike traditional rectangular models. While non-rectangular robust policy evaluation is generally NP-hard, even in approximation, we identify a powerful class of $L_p$-bounded uncertainty sets that avoid these complexity barriers due to their structural simplicity. We further show that this class can be decomposed into infinitely many \texttt{sa}-rectangular $L_p$-bounded sets and leverage its structural properties to derive a novel dual formulation for $L_p$ RMDPs. This formulation provides key insights into the adversary's strategy and enables the development of the first robust policy evaluation algorithms for non-rectangular RMDPs. Empirical results demonstrate that our approach significantly outperforms brute-force methods, establishing a promising foundation for future investigation into non-rectangular robust MDPs.

On Multi-Agent Inverse Reinforcement Learning

Nov 22, 2024

Abstract: In multi-agent systems, an agent's behavior is highly influenced by its utility function, as these utilities shape both individual goals and interactions with the other agents. Inverse Reinforcement Learning (IRL) is a well-established approach to inferring the utility function by observing an expert's behavior within a given environment. In this paper, we extend the IRL framework to the multi-agent setting, assuming that we observe agents following Nash Equilibrium (NE) policies. We theoretically investigate the set of utilities that explain the behavior of NE experts. Specifically, we provide an explicit characterization of the feasible reward set and analyze how errors in estimating the transition dynamics and expert behavior impact the recovered rewards. Building on these findings, we provide the first sample complexity analysis for the multi-agent IRL problem. Finally, we provide a numerical evaluation of our theoretical results.

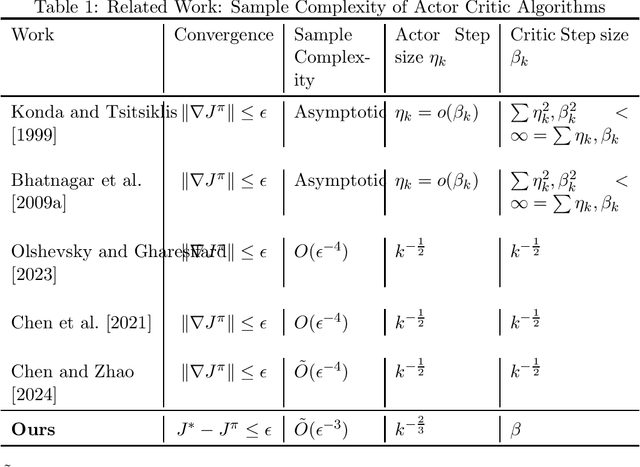

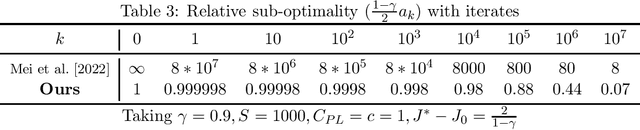

Improved Sample Complexity for Global Convergence of Actor-Critic Algorithms

Oct 11, 2024

Abstract: In this paper, we establish the global convergence of the actor-critic algorithm with a significantly improved sample complexity of $O(\epsilon^{-3})$, advancing beyond the existing local convergence results. Previous works provide local convergence guarantees with a sample complexity of $O(\epsilon^{-2})$ for bounding the squared gradient of the return, which translates to a global sample complexity of $O(\epsilon^{-4})$ using the gradient domination lemma. In contrast to traditional methods that employ decreasing step sizes for both the actor and critic, we demonstrate that a constant step size for the critic is sufficient to ensure convergence in expectation. This key insight reveals that using a decreasing step size for the actor alone is sufficient to handle the noise for both the actor and critic. Our findings provide theoretical support for the practical success of many algorithms that rely on constant step sizes.
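The step from a local $O(\epsilon^{-2})$ guarantee to a global $O(\epsilon^{-4})$ rate can be sketched as follows. This is a reconstruction under stated assumptions, not the paper's derivation: we assume a gradient domination inequality of the form $J^* - J(\theta) \le C\,\|\nabla J(\theta)\|$ for some constant $C > 0$, and a local guarantee that $\mathbb{E}\|\nabla J(\theta)\|^2 \le \delta$ is reached with $O(\delta^{-2})$ samples. The symbols $C$, $\delta$, $J$, and $\theta$ are introduced here for illustration.

$$
\mathbb{E}\big[J^* - J(\theta)\big]
\;\le\; C\,\mathbb{E}\|\nabla J(\theta)\|
\;\le\; C\,\sqrt{\mathbb{E}\|\nabla J(\theta)\|^2}
\;\le\; C\sqrt{\delta}.
$$

Setting $C\sqrt{\delta} = \epsilon$ gives $\delta = \epsilon^2 / C^2$, so the number of samples needed is

$$
O(\delta^{-2}) \;=\; O\!\left(C^{4}\,\epsilon^{-4}\right) \;=\; O(\epsilon^{-4}).
$$

The second inequality in the first display is Jensen's inequality; the squaring of the accuracy target is what doubles the exponent.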

Preference Elicitation for Offline Reinforcement Learning

Jun 26, 2024

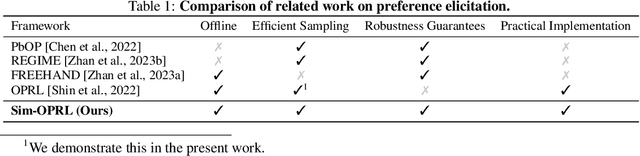

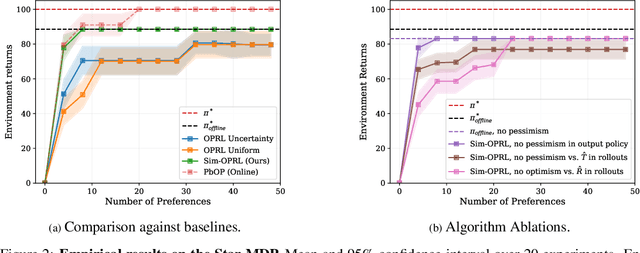

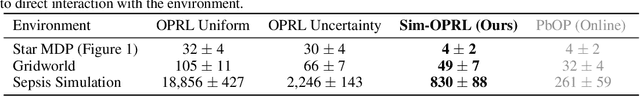

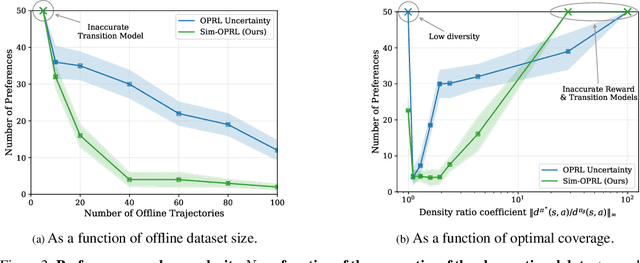

Abstract: Applying reinforcement learning (RL) to real-world problems is often made challenging by the inability to interact with the environment and the difficulty of designing reward functions. Offline RL addresses the first challenge by considering access to an offline dataset of environment interactions labeled by the reward function. In contrast, preference-based RL does not assume access to the reward function and learns it from preferences, but typically requires online interaction with the environment. We bridge the gap between these frameworks by exploring efficient methods for acquiring preference feedback in a fully offline setup. We propose Sim-OPRL, an offline preference-based reinforcement learning algorithm, which leverages a learned environment model to elicit preference feedback on simulated rollouts. Drawing on insights from both the offline RL and the preference-based RL literature, our algorithm employs a pessimistic approach for out-of-distribution data and an optimistic approach for acquiring informative preferences about the optimal policy. We provide theoretical guarantees regarding the sample complexity of our approach, dependent on how well the offline data covers the optimal policy. Finally, we demonstrate the empirical performance of Sim-OPRL in different environments.
