Franck Cappello

DeepCQ: General-Purpose Deep-Surrogate Framework for Lossy Compression Quality Prediction

Dec 24, 2025

Abstract: Error-bounded lossy compression techniques have become vital for scientific data management and analytics, given the ever-increasing volume of data generated by modern scientific simulations and instruments. Nevertheless, assessing data quality post-compression remains computationally expensive due to the intensive nature of metric calculations. In this work, we present a general-purpose deep-surrogate framework for lossy compression quality prediction (DeepCQ), with the following key contributions: 1) We develop a surrogate model for compression quality prediction that is generalizable to different error-bounded lossy compressors, quality metrics, and input datasets; 2) We adopt a novel two-stage design that decouples the computationally expensive feature-extraction stage from the light-weight metrics prediction, enabling efficient training and modular inference; 3) We optimize the model performance on time-evolving data using a mixture-of-experts design. Such a design enhances the robustness when predicting across simulation timesteps, especially when the training and test data exhibit significant variation. We validate the effectiveness of DeepCQ on four real-world scientific applications. Our results highlight the framework's exceptional predictive accuracy, with prediction errors generally under 10% across most settings, significantly outperforming existing methods. Our framework empowers scientific users to make informed decisions about data compression based on their preferred data quality, thereby significantly reducing I/O and computational overhead in scientific data analysis.
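To make concrete what a post-compression quality metric is, the following is a minimal sketch, not the DeepCQ model or any real compressor. It assumes a toy uniform quantizer as a stand-in for an error-bounded lossy compressor and computes two common quality metrics (maximum pointwise error and PSNR) by direct calculation, which is the step a surrogate model would aim to predict instead. The function names `quantize`, `max_abs_error`, and `psnr` and the synthetic sine data are illustrative assumptions.

```python
import math


def quantize(values, error_bound):
    """Toy error-bounded 'compressor': snap each value to the nearest
    multiple of 2 * error_bound, so the pointwise error stays within
    error_bound (up to floating-point rounding)."""
    step = 2.0 * error_bound
    return [round(v / step) * step for v in values]


def max_abs_error(original, decompressed):
    """Largest pointwise deviation between original and reconstructed data."""
    return max(abs(a - b) for a, b in zip(original, decompressed))


def psnr(original, decompressed):
    """Peak signal-to-noise ratio in dB, using the value range as the peak."""
    value_range = max(original) - min(original)
    if value_range == 0:
        raise ValueError("PSNR is undefined for constant data")
    mse = sum((a - b) ** 2 for a, b in zip(original, decompressed)) / len(original)
    if mse == 0:
        return math.inf
    return 20 * math.log10(value_range) - 10 * math.log10(mse)


# Synthetic stand-in for a scientific field (assumption for illustration).
data = [math.sin(0.01 * i) for i in range(1000)]
error_bound = 1e-3
recon = quantize(data, error_bound)

print("max abs error:", max_abs_error(data, recon))
print("PSNR (dB):", psnr(data, recon))
```

Each metric here requires a full pass over both the original and the reconstructed data, which hints at why direct evaluation becomes costly on large datasets.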

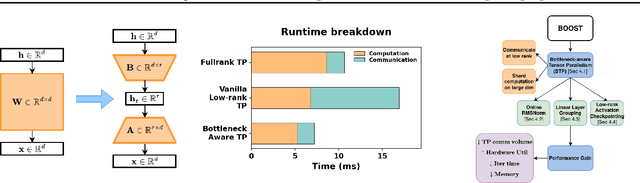

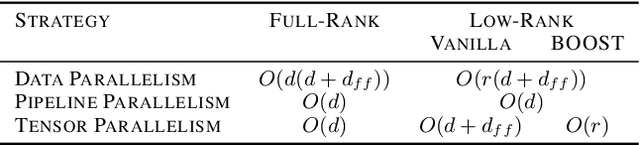

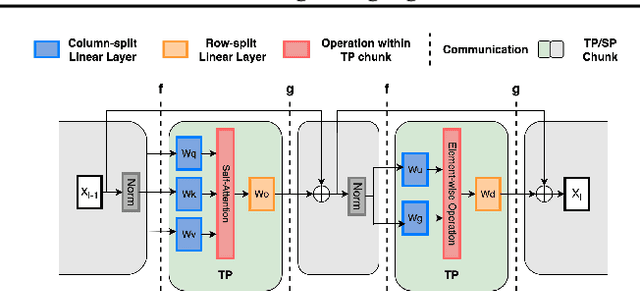

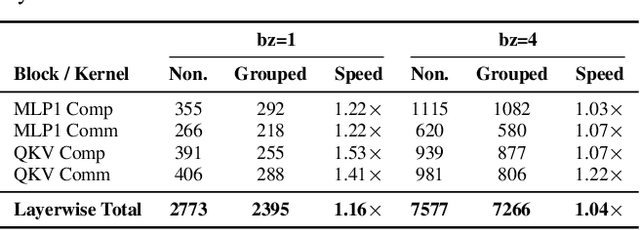

BOOST: BOttleneck-Optimized Scalable Training Framework for Low-Rank Large Language Models

Dec 13, 2025

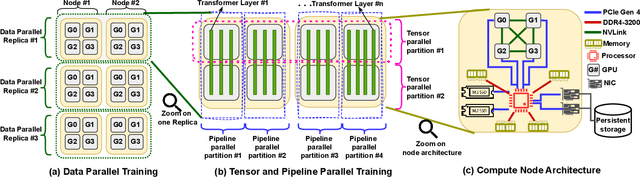

Abstract: The scale of transformer model pre-training is constrained by the increasing computation and communication cost. Low-rank bottleneck architectures offer a promising solution to significantly reduce the training time and memory footprint with minimal impact on accuracy. Despite algorithmic efficiency, bottleneck architectures scale poorly under standard tensor parallelism. Simply applying 3D parallelism designed for full-rank methods leads to excessive communication and poor GPU utilization. To address this limitation, we propose BOOST, an efficient training framework tailored for large-scale low-rank bottleneck architectures. BOOST introduces a novel Bottleneck-aware Tensor Parallelism, and combines optimizations such as online-RMSNorm, linear layer grouping, and low-rank activation checkpointing to achieve end-to-end training speedup. Evaluations on different low-rank bottleneck architectures demonstrate that BOOST achieves 1.46-1.91× speedup over full-rank model baselines and 1.87-2.27× speedup over low-rank models with naively integrated 3D parallelism, with improved GPU utilization and reduced communication overhead.

Systematic Evaluation of Optimization Techniques for Long-Context Language Models

Aug 01, 2025

Abstract: Large language models (LLMs) excel across diverse natural language processing tasks but face resource demands and limited context windows. Although techniques like pruning, quantization, and token dropping can mitigate these issues, their efficacy in long-context scenarios and system evaluation remains underexplored. This paper systematically benchmarks these optimizations, characterizing memory usage, latency, and throughput, and studies how these methods impact the quality of text generation. We first analyze individual optimization methods for two LLM architectures supporting long context and then systematically evaluate combinations of these techniques to assess how this deeper analysis impacts performance metrics. We subsequently study the scalability of individual optimization methods on a larger, 70-billion-parameter model variant. Our novel insights reveal that naively combining inference optimization algorithms can adversely affect larger models, as compared to their smaller counterparts, due to compounded approximation errors. Experiments show that relying solely on F1 obscures these effects by hiding precision-recall trade-offs in question answering tasks. By integrating system-level profiling with task-specific insights, this study helps LLM practitioners and researchers explore and balance efficiency, accuracy, and scalability across tasks and hardware configurations.
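The point about F1 hiding precision-recall trade-offs can be illustrated with a small worked example. The sketch below uses the standard F1 definition (the harmonic mean of precision and recall); the two precision/recall pairs are hypothetical numbers chosen for illustration and are not results from the paper.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall (standard F1 definition)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Two hypothetical systems with opposite behaviour.
high_recall = f1_score(precision=0.5, recall=1.0)     # over-generates answers
high_precision = f1_score(precision=1.0, recall=0.5)  # misses half the answers

print(high_recall, high_precision)
```

Both calls return the same score because F1 is symmetric in its two arguments, so a report that lists only F1 cannot distinguish the two failure modes.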

EAIRA: Establishing a Methodology for Evaluating AI Models as Scientific Research Assistants

Feb 27, 2025

Abstract: Recent advancements have positioned AI, and particularly Large Language Models (LLMs), as transformative tools for scientific research, capable of addressing complex tasks that require reasoning, problem-solving, and decision-making. Their exceptional capabilities suggest their potential as scientific research assistants but also highlight the need for holistic, rigorous, and domain-specific evaluation to assess effectiveness in real-world scientific applications. This paper describes a multifaceted methodology for Evaluating AI models as scientific Research Assistants (EAIRA) developed at Argonne National Laboratory. This methodology incorporates four primary classes of evaluations: 1) Multiple Choice Questions to assess factual recall; 2) Open Response to evaluate advanced reasoning and problem-solving skills; 3) Lab-Style Experiments involving detailed analysis of capabilities as research assistants in controlled environments; and 4) Field-Style Experiments to capture researcher-LLM interactions at scale in a wide range of scientific domains and applications. These complementary methods enable a comprehensive analysis of LLM strengths and weaknesses with respect to their scientific knowledge, reasoning abilities, and adaptability. Recognizing the rapid pace of LLM advancements, we designed the methodology to evolve and adapt so as to ensure its continued relevance and applicability. This paper describes the state of the methodology at the end of February 2025. Although developed within a subset of scientific domains, the methodology is designed to be generalizable to a wide range of scientific domains.

Deep Optimizer States: Towards Scalable Training of Transformer Models Using Interleaved Offloading

Oct 26, 2024

Abstract: Transformers and large language models (LLMs) have seen rapid adoption in all domains. Their sizes have exploded to hundreds of billions of parameters and keep increasing. Under these circumstances, the training of transformers is very expensive and often hits a "memory wall", i.e., even when using 3D parallelism (pipeline, tensor, data) and aggregating the memory of many GPUs, it is still not enough to hold the necessary data structures (model parameters, optimizer state, gradients, activations) in GPU memory. To compensate, state-of-the-art approaches offload the optimizer state, at least partially, to the host memory and perform hybrid CPU-GPU computations. However, the management of the combined host-GPU memory is often suboptimal and results in poor overlapping between data movements and computations. This leads to missed opportunities to simultaneously leverage the interconnect bandwidth and computational capabilities of CPUs and GPUs. In this paper, we leverage a key observation that the interleaving of the forward, backward and update phases generates fluctuations in the GPU memory utilization, which can be exploited to dynamically move a part of the optimizer state between the host and the GPU memory at each iteration. To this end, we design and implement Deep Optimizer States, a novel technique to split the LLM into subgroups, whose update phase is scheduled on either the CPU or the GPU based on our proposed performance model that addresses the trade-off between data movement cost, acceleration on the GPUs vs the CPUs, and competition for shared resources. We integrate our approach with DeepSpeed and demonstrate 2.5× faster iterations over state-of-the-art approaches using extensive experiments.

FT K-Means: A High-Performance K-Means on GPU with Fault Tolerance

Aug 02, 2024

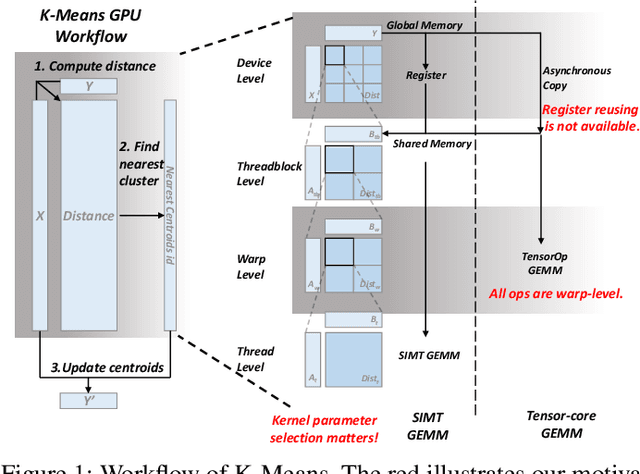

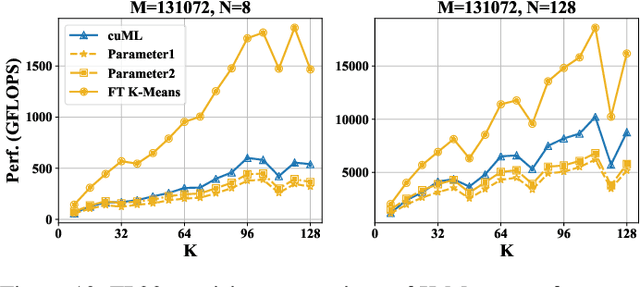

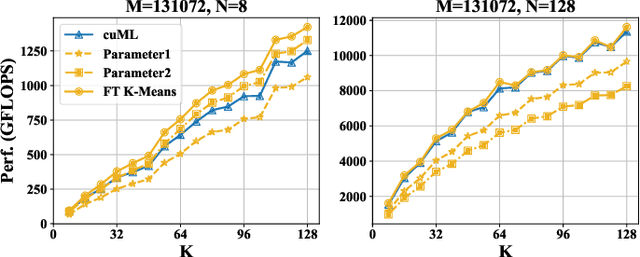

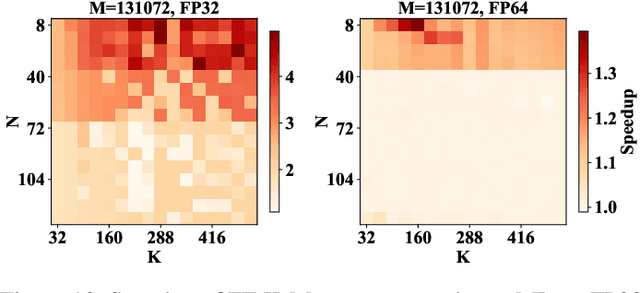

Abstract: K-Means is a widely used clustering algorithm; however, its efficiency is primarily constrained by the computational cost of distance computation. Existing implementations suffer from suboptimal utilization of computational units and lack resilience against soft errors. To address these challenges, we introduce FT K-Means, a high-performance GPU-accelerated implementation of K-Means with online fault tolerance. We first present a stepwise optimization strategy that achieves competitive performance compared to NVIDIA's cuML library. We further improve FT K-Means with a template-based code generation framework that supports different data types and adapts to different input shapes. A novel warp-level tensor-core error correction scheme is proposed to address the failure of existing fault tolerance methods due to memory asynchronization during copy operations. Our experimental evaluations on NVIDIA T4 GPU and A100 GPU demonstrate that FT K-Means without fault tolerance outperforms cuML's K-Means implementation, showing a performance increase of 10%-300% in scenarios involving irregular data shapes. Moreover, the fault tolerance feature of FT K-Means introduces an overhead of only 11%, maintaining robust performance even with tens of errors injected per second.

DataStates-LLM: Lazy Asynchronous Checkpointing for Large Language Models

Jun 15, 2024

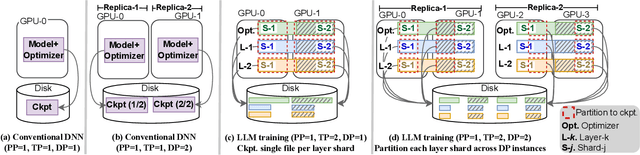

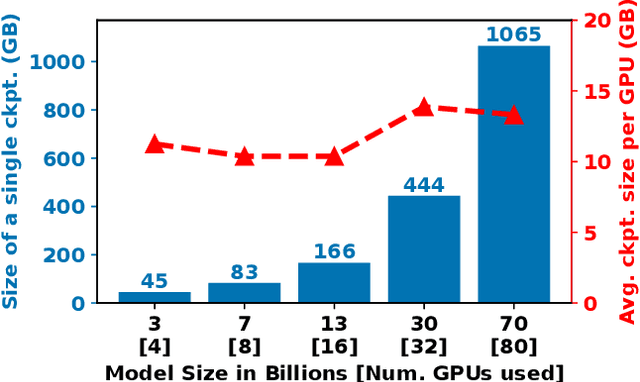

Abstract: LLMs have seen rapid adoption in all domains. They need to be trained on high-end high-performance computing (HPC) infrastructures and ingest massive amounts of input data. Unsurprisingly, at such a large scale, unexpected events (e.g., failures of components, instability of the software, undesirable learning patterns, etc.) are frequent and typically impact the training in a negative fashion. Thus, LLMs need to be checkpointed frequently so that they can be rolled back to a stable state and subsequently fine-tuned. However, given the large sizes of LLMs, a straightforward checkpointing solution that directly writes the model parameters and optimizer state to persistent storage (e.g., a parallel file system) incurs significant I/O overheads. To address this challenge, in this paper we study how to reduce the I/O overheads for enabling fast and scalable checkpointing for LLMs that can be applied at high frequency (up to the granularity of individual iterations) without significant impact on the training process. Specifically, we introduce a lazy asynchronous multi-level approach that takes advantage of the fact that the tensors making up the model and optimizer state shards remain immutable for extended periods of time, which makes it possible to copy their content in the background with minimal interference during the training process. We evaluate our approach at scales of up to 180 GPUs using different model sizes, parallelism settings, and checkpointing frequencies. The results show up to 48× faster checkpointing and 2.2× faster end-to-end training runtime compared with the state-of-the-art checkpointing approaches.
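The general idea of taking slow storage writes off the training path can be sketched as follows. This is a deliberately simplified illustration, not the DataStates-LLM implementation: it takes an eager in-memory snapshot and defers only the write to a background thread, whereas the abstract describes a lazy, multi-level scheme that also copies tensors in the background. The class name `AsyncCheckpointer`, the JSON file format, and the toy `state` dictionary are all assumptions made for the example.

```python
import copy
import json
import os
import tempfile
import threading


class AsyncCheckpointer:
    """Toy asynchronous checkpointer: snapshot in memory, write in background."""

    def __init__(self, directory):
        self.directory = directory
        self._pending = None  # background writer thread, if any

    def save(self, state, step):
        # Allow only one in-flight checkpoint at a time.
        self.wait()
        # In-memory copy; the caller may mutate `state` right after this call.
        snapshot = copy.deepcopy(state)
        path = os.path.join(self.directory, f"ckpt_{step}.json")
        self._pending = threading.Thread(target=self._flush, args=(snapshot, path))
        self._pending.start()
        return path

    @staticmethod
    def _flush(snapshot, path):
        # Write to a temporary file, then rename, so readers never see a
        # partially written checkpoint.
        tmp_path = path + ".tmp"
        with open(tmp_path, "w") as f:
            json.dump(snapshot, f)
        os.replace(tmp_path, path)

    def wait(self):
        """Block until the in-flight checkpoint (if any) is on disk."""
        if self._pending is not None:
            self._pending.join()
            self._pending = None


with tempfile.TemporaryDirectory() as directory:
    checkpointer = AsyncCheckpointer(directory)
    state = {"step": 0, "weights": [0.0, 0.0]}
    path = checkpointer.save(state, step=0)
    state["weights"][0] = 1.0  # "training" continues while the write proceeds
    checkpointer.wait()
    with open(path) as f:
        restored = json.load(f)

print(restored)
```

The restored checkpoint reflects the state at the moment `save` was called, even though the live state was modified before the write completed.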

Understanding The Effectiveness of Lossy Compression in Machine Learning Training Sets

Mar 23, 2024

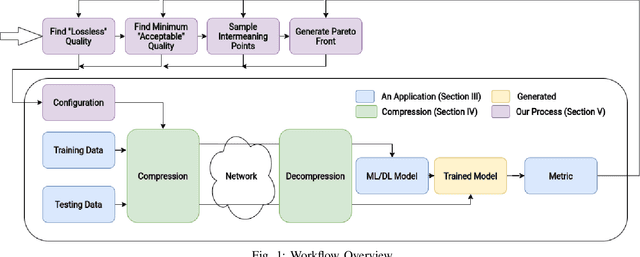

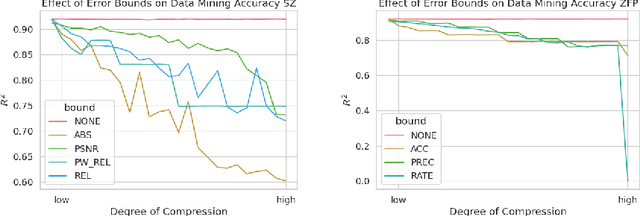

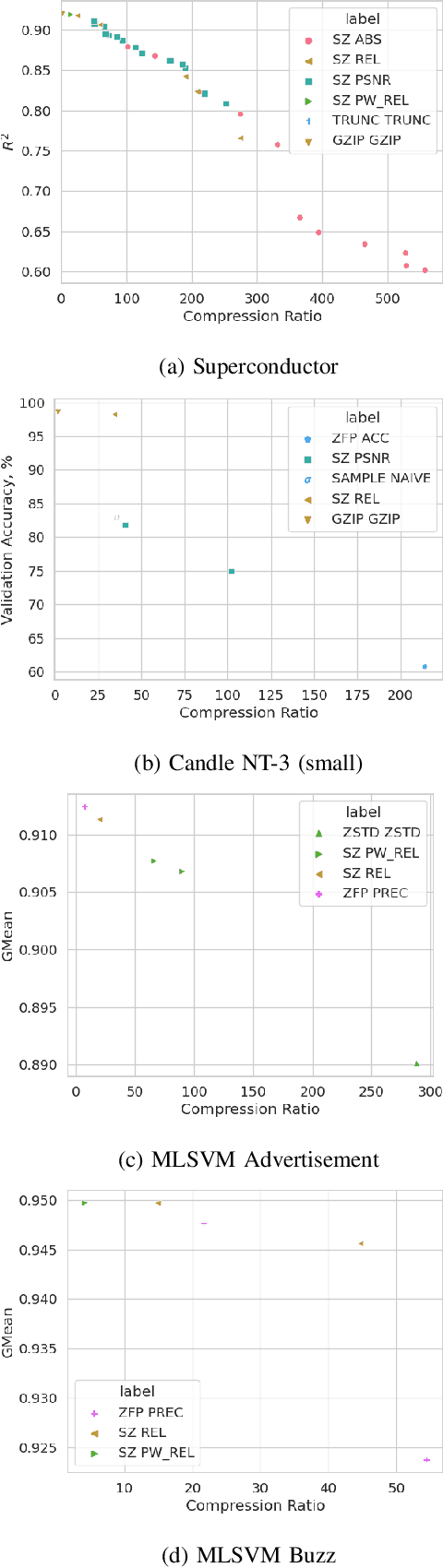

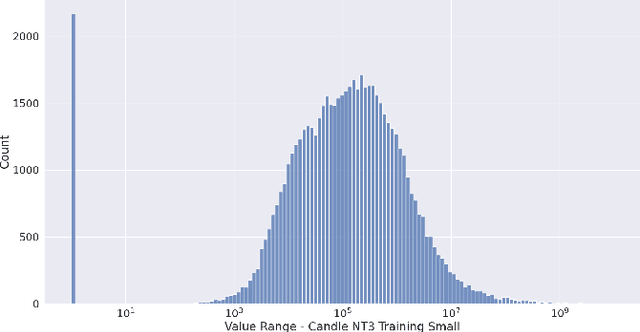

Abstract: Machine Learning and Artificial Intelligence (ML/AI) techniques have become increasingly prevalent in high performance computing (HPC). However, these methods depend on vast volumes of floating point data for training and validation, which must be shared over a wide area network (WAN) or transferred from edge devices to data centers. Data compression can be a solution to these problems, but an in-depth understanding of how lossy compression affects model quality is needed. Prior work largely considers a single application or compression method. We designed a systematic methodology for evaluating data reduction techniques for ML/AI, and we use it to perform a comprehensive evaluation with 17 data reduction methods on 7 ML/AI applications, showing that modern lossy compression methods can achieve a 50-100x compression ratio improvement for a 1% or less loss in quality. We identify critical insights that guide the future use and design of lossy compressors for ML/AI.

SRN-SZ: Deep Leaning-Based Scientific Error-bounded Lossy Compression with Super-resolution Neural Networks

Sep 07, 2023

Abstract: The fast growth of the computational power and scale of modern supercomputing systems has raised great challenges for the management of exascale scientific data. To maintain the usability of scientific data, error-bounded lossy compression has been proposed and developed as an essential technique for reducing the size of scientific data with constrained data distortion. Among the diverse datasets generated by various scientific simulations, certain datasets cannot be effectively compressed by existing error-bounded lossy compressors with traditional techniques. The recent success of Artificial Intelligence has inspired several researchers to integrate neural networks into error-bounded lossy compressors. However, those works still suffer from limited compression ratios and/or extremely low efficiencies. To address those issues and improve the compression on the hard-to-compress datasets, in this paper, we propose SRN-SZ, which is a deep learning-based scientific error-bounded lossy compressor leveraging the hierarchical data grid expansion paradigm implemented by super-resolution neural networks. SRN-SZ applies the most advanced super-resolution network HAT for its compression, which is free of time-consuming per-data training. In experiments compared with various state-of-the-art compressors, SRN-SZ achieves up to 75% compression ratio improvements under the same error bound and up to 80% compression ratio improvements under the same PSNR over the second-best compressor.

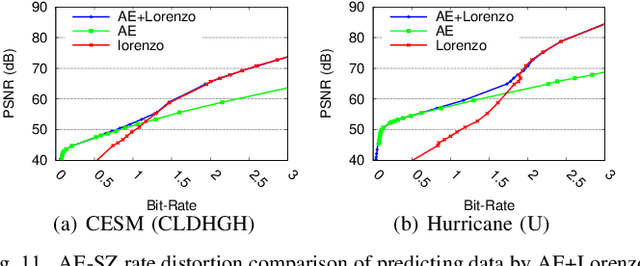

Exploring Autoencoder-Based Error-Bounded Compression for Scientific Data

May 25, 2021

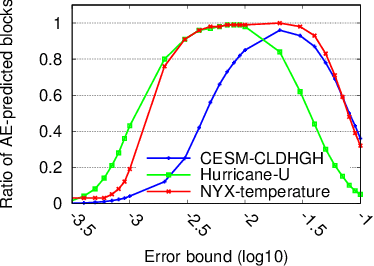

Abstract: Error-bounded lossy compression is becoming an indispensable technique for the success of today's scientific projects with vast volumes of data produced during the simulations or instrument data acquisitions. Not only can it significantly reduce data size, but it also can control the compression errors based on user-specified error bounds. Autoencoder (AE) models have been widely used in image compression, but few AE-based compression approaches support error-bounding features, which are highly required by scientific applications. To address this issue, we explore using convolutional autoencoders to improve error-bounded lossy compression for scientific data, with the following three key contributions. (1) We provide an in-depth investigation of the characteristics of various autoencoder models and develop an error-bounded autoencoder-based framework in terms of the SZ model. (2) We optimize the compression quality for main stages in our designed AE-based error-bounded compression framework, fine-tuning the block sizes and latent sizes and also optimizing the compression efficiency of latent vectors. (3) We evaluate our proposed solution using five real-world scientific datasets and compare it with six other related works. Experiments show that our solution exhibits a very competitive compression quality among all the compressors in our tests. In absolute terms, it can obtain a much better compression quality (100% ~ 800% improvement in compression ratio with the same data distortion) compared with SZ2.1 and ZFP in cases with a high compression ratio.
