Fabrizio Silvestri

Concept-Enhanced Multimodal RAG: Towards Interpretable and Accurate Radiology Report Generation

Feb 17, 2026

Abstract: Radiology Report Generation (RRG) through Vision-Language Models (VLMs) promises to reduce documentation burden, improve reporting consistency, and accelerate clinical workflows. However, clinical adoption of these models remains limited by their lack of interpretability and their tendency to hallucinate findings misaligned with imaging evidence. Existing research typically treats interpretability and accuracy as separate objectives: concept-based explainability techniques focus primarily on transparency, while Retrieval-Augmented Generation (RAG) methods target factual grounding through external retrieval. We present Concept-Enhanced Multimodal RAG (CEMRAG), a unified framework that decomposes visual representations into interpretable clinical concepts and integrates them with multimodal RAG. This approach exploits enriched contextual prompts for RRG, improving both interpretability and factual accuracy. Experiments on MIMIC-CXR and IU X-Ray across multiple VLM architectures, training regimes, and retrieval configurations demonstrate consistent improvements over both conventional RAG and concept-only baselines on clinical accuracy metrics and standard NLP measures. These results challenge the assumed trade-off between interpretability and performance, showing that transparent visual concepts can enhance rather than compromise diagnostic accuracy in medical VLMs. Our modular design decomposes interpretability into visual transparency and structured language model conditioning, providing a principled pathway toward clinically trustworthy AI-assisted radiology.

Same Answer, Different Representations: Hidden instability in VLMs

Feb 06, 2026

Abstract: The robustness of Vision Language Models (VLMs) is commonly assessed through output-level invariance, implicitly assuming that stable predictions reflect stable multimodal processing. In this work, we argue that this assumption is insufficient. We introduce a representation-aware and frequency-aware evaluation framework that measures internal embedding drift, spectral sensitivity, and structural smoothness (spatial consistency of vision tokens), alongside standard label-based metrics. Applying this framework to modern VLMs across the SEEDBench, MMMU, and POPE datasets reveals three distinct failure modes. First, models frequently preserve predicted answers while undergoing substantial internal representation drift; for perturbations such as text overlays, this drift approaches the magnitude of inter-image variability, indicating that representations move to regions typically occupied by unrelated inputs despite unchanged outputs. Second, robustness does not improve with scale; larger models achieve higher accuracy but exhibit equal or greater sensitivity, consistent with sharper yet more fragile decision boundaries. Third, we find that perturbations affect tasks differently: they harm reasoning when they disrupt how models combine coarse and fine visual cues, but on the hallucination benchmarks, they can reduce false positives by making models generate more conservative answers.
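The comparison between perturbation-induced drift and inter-image variability described in this abstract can be illustrated with a small sketch. It is not the paper's framework: cosine distance as the drift measure, the normalization by mean pairwise distance, and the names `cosine_distance` and `relative_drift` are all assumptions made for illustration, and the embeddings are toy vectors rather than VLM activations.

```python
import math
from itertools import combinations


def cosine_distance(a, b):
    """1 - cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def relative_drift(clean, perturbed, reference_embeddings):
    """Drift of one input under perturbation, divided by the mean
    pairwise distance between embeddings of unrelated inputs.

    A ratio near 1 means the perturbed embedding moved about as far
    as the typical gap between different images (assumed definition).
    """
    pairs = list(combinations(reference_embeddings, 2))
    inter_image = sum(cosine_distance(a, b) for a, b in pairs) / len(pairs)
    return cosine_distance(clean, perturbed) / inter_image


# Toy 2-D embeddings (hypothetical values, for illustration only).
references = [[1.0, 0.0], [0.0, 1.0]]
ratio = relative_drift([1.0, 0.0], [1.0, 1.0], references)
print(f"relative drift: {ratio:.3f}")
```

In this toy setting the predicted label could stay the same while the ratio is far from zero, which is the kind of mismatch that output-level metrics alone would not reveal.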

Statistical Foundations of DIME: Risk Estimation for Practical Index Selection

Jan 09, 2026

Abstract: High-dimensional dense embeddings have become central to modern Information Retrieval, but many dimensions are noisy or redundant. The recently proposed DIME (Dimension IMportance Estimation) provides query-dependent scores to identify informative components of embeddings. DIME relies on a costly grid search to select, a priori, a dimensionality for all the embeddings in the query corpus. Our work provides a statistically grounded criterion that directly identifies the optimal set of dimensions for each query at inference time. Experiments confirm that this criterion achieves parity of effectiveness while reducing embedding size by an average of $\sim50\%$ across different models and datasets at inference time.

AutoBench: Automating LLM Evaluation through Reciprocal Peer Assessment

Oct 26, 2025

Abstract: We present AutoBench, a fully automated and self-sustaining framework for evaluating Large Language Models (LLMs) through reciprocal peer assessment. This paper provides a rigorous scientific validation of the AutoBench methodology, originally developed as an open-source project by eZecute S.R.L. Unlike static benchmarks that suffer from test-set contamination and limited adaptability, AutoBench dynamically generates novel evaluation tasks while models alternately serve as question generators, contestants, and judges across diverse domains. An iterative weighting mechanism amplifies the influence of consistently reliable evaluators, aggregating peer judgments into consensus-based rankings that reflect collective model agreement. Our experiments demonstrate strong correlations with established benchmarks including MMLU-Pro and GPQA (78\% and 63\%, respectively), validating this peer-driven evaluation paradigm. The multi-judge design significantly outperforms single-judge baselines, confirming that distributed evaluation produces more robust and human-consistent assessments. AutoBench offers a scalable, contamination-resistant alternative to static benchmarks for the continuous evaluation of evolving language models.

Redefining Retrieval Evaluation in the Era of LLMs

Oct 24, 2025

Abstract: Traditional Information Retrieval (IR) metrics, such as nDCG, MAP, and MRR, assume that human users sequentially examine documents with diminishing attention to lower ranks. This assumption breaks down in Retrieval-Augmented Generation (RAG) systems, where search results are consumed by Large Language Models (LLMs), which, unlike humans, process all retrieved documents as a whole rather than sequentially. Additionally, traditional IR metrics do not account for related but irrelevant documents that actively degrade generation quality, rather than merely being ignored. Due to these two major misalignments, namely human vs. machine position discount and human relevance vs. machine utility, classical IR metrics do not accurately predict RAG performance. We introduce a utility-based annotation schema that quantifies both the positive contribution of relevant passages and the negative impact of distracting ones. Building on this foundation, we propose UDCG (Utility and Distraction-aware Cumulative Gain), a metric using an LLM-oriented positional discount to directly optimize the correlation with the end-to-end answer accuracy. Experiments on five datasets and six LLMs demonstrate that UDCG improves correlation by up to 36% compared to traditional metrics. Our work provides a critical step toward aligning IR evaluation with LLM consumers and enables more reliable assessment of RAG components.

Evaluating Latent Knowledge of Public Tabular Datasets in Large Language Models

Oct 23, 2025

Abstract: Large Language Models (LLMs) are increasingly evaluated on their ability to reason over structured data, yet such assessments often overlook a crucial confound: dataset contamination. In this work, we investigate whether LLMs exhibit prior knowledge of widely used tabular benchmarks such as Adult Income, Titanic, and others. Through a series of controlled probing experiments, we reveal that contamination effects emerge exclusively for datasets containing strong semantic cues, for instance meaningful column names or interpretable value categories. In contrast, when such cues are removed or randomized, performance sharply declines to near-random levels. These findings suggest that LLMs' apparent competence on tabular reasoning tasks may, in part, reflect memorization of publicly available datasets rather than genuine generalization. We discuss implications for evaluation protocols and propose strategies to disentangle semantic leakage from authentic reasoning ability in future LLM assessments.

Titans Revisited: A Lightweight Reimplementation and Critical Analysis of a Test-Time Memory Model

Oct 10, 2025

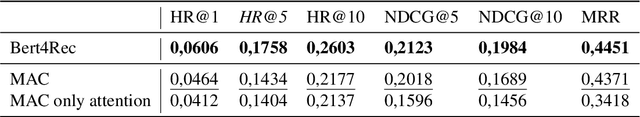

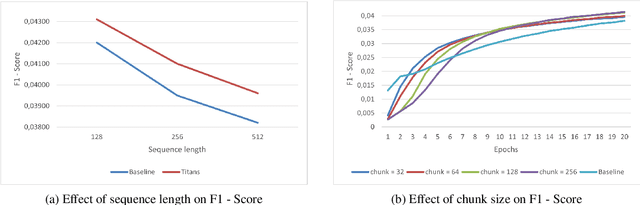

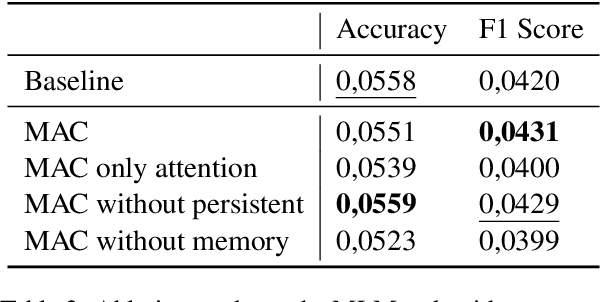

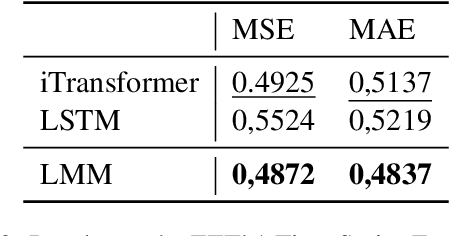

Abstract: At the end of 2024, Google researchers introduced Titans: Learning at Test Time, a neural memory model achieving strong empirical results across multiple tasks. However, the lack of publicly available code and ambiguities in the original description hinder reproducibility. In this work, we present a lightweight reimplementation of Titans and conduct a comprehensive evaluation on Masked Language Modeling, Time Series Forecasting, and Recommendation tasks. Our results reveal that, because of chunking, Titans does not always outperform established baselines. However, its Neural Memory component consistently improves performance compared to attention-only models. These findings confirm the model's innovative potential while highlighting its practical limitations and raising questions for future research.

Directional Sheaf Hypergraph Networks: Unifying Learning on Directed and Undirected Hypergraphs

Oct 06, 2025

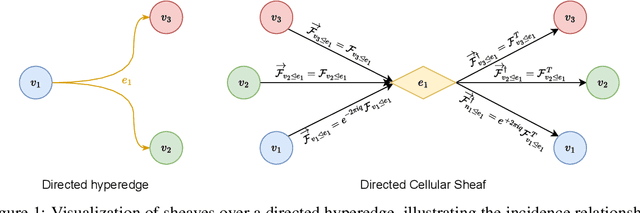

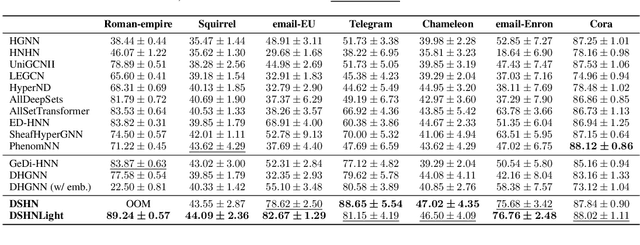

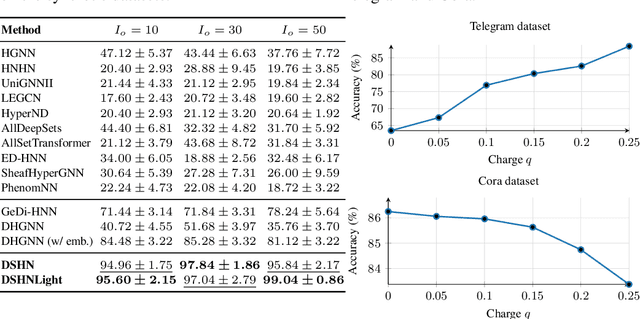

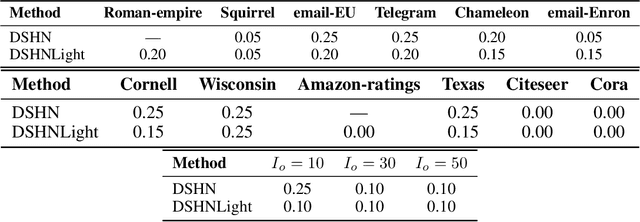

Abstract: Hypergraphs provide a natural way to represent higher-order interactions among multiple entities. While undirected hypergraphs have been extensively studied, the case of directed hypergraphs, which can model oriented group interactions, remains largely under-explored despite its relevance for many applications. Recent approaches in this direction often exhibit an implicit bias toward homophily, which limits their effectiveness in heterophilic settings. Rooted in the algebraic topology notion of Cellular Sheaves, Sheaf Neural Networks (SNNs) were introduced as an effective solution to circumvent such a drawback. While a generalization to hypergraphs is known, it is only suitable for undirected hypergraphs, failing to tackle the directed case. In this work, we introduce Directional Sheaf Hypergraph Networks (DSHN), a framework integrating sheaf theory with a principled treatment of asymmetric relations within a hypergraph. From it, we construct the Directed Sheaf Hypergraph Laplacian, a complex-valued operator by which we unify and generalize many existing Laplacian matrices proposed in the graph- and hypergraph-learning literature. Across 7 real-world datasets and against 13 baselines, DSHN achieves relative accuracy gains from 2% up to 20%, showing how a principled treatment of directionality in hypergraphs, combined with the expressive power of sheaves, can substantially improve performance.

Generalizability vs. Counterfactual Explainability Trade-Off

May 29, 2025

Abstract: In this work, we investigate the relationship between model generalization and counterfactual explainability in supervised learning. We introduce the notion of $\varepsilon$-valid counterfactual probability ($\varepsilon$-VCP) -- the probability of finding perturbations of a data point within its $\varepsilon$-neighborhood that result in a label change. We provide a theoretical analysis of $\varepsilon$-VCP in relation to the geometry of the model's decision boundary, showing that $\varepsilon$-VCP tends to increase with model overfitting. Our findings establish a rigorous connection between poor generalization and the ease of counterfactual generation, revealing an inherent trade-off between generalization and counterfactual explainability. Empirical results validate our theory, suggesting $\varepsilon$-VCP as a practical proxy for quantitatively characterizing overfitting.
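The definition of $\varepsilon$-VCP given in this abstract lends itself to a simple Monte Carlo illustration. The sketch below is not the authors' estimator: it assumes the $\varepsilon$-neighborhood is an $L_\infty$ ball sampled uniformly, and the names `estimate_vcp` and `threshold_classifier` are hypothetical helpers introduced only for this example.

```python
import random


def estimate_vcp(predict, x, eps, n_samples=2000, seed=0):
    """Monte Carlo estimate of the fraction of perturbations within the
    eps-neighborhood of x that change the predicted label.

    Assumption: the neighborhood is an L-infinity ball, sampled uniformly.
    """
    rng = random.Random(seed)
    base_label = predict(x)
    flips = 0
    for _ in range(n_samples):
        perturbed = [xi + rng.uniform(-eps, eps) for xi in x]
        if predict(perturbed) != base_label:
            flips += 1
    return flips / n_samples


def threshold_classifier(x):
    """Toy model: the label depends only on the sign of the first feature."""
    return int(x[0] > 0.0)


# A point far from the decision boundary versus one close to it.
far = estimate_vcp(threshold_classifier, [1.0, 0.0], eps=0.5)
near = estimate_vcp(threshold_classifier, [0.1, 0.0], eps=0.5)
print(f"far from boundary: {far:.3f}, near boundary: {near:.3f}")
```

Points close to the decision boundary yield a higher estimate, which matches the abstract's link between this quantity and the geometry of the boundary.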

Early-Exit Graph Neural Networks

May 23, 2025

Abstract: Early-exit mechanisms allow deep neural networks to halt inference as soon as classification confidence is high enough, adaptively trading depth for confidence and thereby cutting latency and energy on easy inputs while retaining full-depth accuracy on harder ones. Adding early-exit mechanisms to Graph Neural Networks (GNNs), the go-to models for graph-structured data, likewise allows depth to be traded dynamically for confidence on simple graphs while maintaining full-depth accuracy on harder, more complex graphs whose intricate relationships require it. Although early exits have proven effective across various deep learning domains, their potential within GNNs in scenarios that require deep architectures while resisting over-smoothing and over-squashing remains largely unexplored. We unlock that potential by first introducing Symmetric-Anti-Symmetric Graph Neural Networks (SAS-GNN), whose symmetry-based inductive biases mitigate these issues and yield stable intermediate representations suitable for early exiting. Building on this backbone, we present Early-Exit Graph Neural Networks (EEGNNs), which append confidence-aware exit heads that allow on-the-fly termination of propagation at the level of each node or of the entire graph. Experiments show that EEGNNs preserve robust performance as depth grows and deliver competitive accuracy on heterophilic and long-range benchmarks, matching attention-based and asynchronous message-passing models while substantially reducing computation and latency. We plan to release the code to reproduce our experiments.
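The general idea of a confidence-based early exit can be sketched in a few lines. This is a generic illustration, not SAS-GNN or EEGNN: there is no message passing, the layers and exit head are toy functions, and the max-softmax threshold rule and the name `early_exit_forward` are assumptions made for the example.

```python
import math


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    peak = max(logits)
    exps = [math.exp(v - peak) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def early_exit_forward(h, layers, exit_head, threshold=0.9):
    """Apply layers one at a time and stop as soon as the exit head's
    top class probability reaches the threshold.

    Returns (class probabilities, number of layers actually applied).
    Assumption: confidence is the maximum softmax probability.
    """
    probs = softmax(exit_head(h))
    for depth, layer in enumerate(layers, start=1):
        h = layer(h)
        probs = softmax(exit_head(h))
        if max(probs) >= threshold:
            return probs, depth
    return probs, len(layers)


# Toy stack: each layer doubles the features; the exit head is the identity.
layers = [lambda h: [2.0 * v for v in h] for _ in range(6)]
exit_head = lambda h: h

_, depth_easy = early_exit_forward([3.0, 0.0], layers, exit_head)
_, depth_hard = early_exit_forward([0.5, 0.0], layers, exit_head)
print(f"easy input exits after {depth_easy} layer(s), hard input after {depth_hard}")
```

The easy input reaches the threshold after fewer layers than the hard one, which is the depth-for-confidence trade the abstract describes.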
