Dongxiao Yu

Certified Unlearning in Decentralized Federated Learning

Jan 10, 2026

Abstract:Driven by the right to be forgotten (RTBF), machine unlearning has become an essential requirement for privacy-preserving machine learning. However, its realization in decentralized federated learning (DFL) remains largely unexplored. In DFL, clients exchange local updates only with neighbors, causing model information to propagate and mix across the network. As a result, when a client requests data deletion, its influence is implicitly embedded throughout the system, making removal difficult without centralized coordination. We propose a novel certified unlearning framework for DFL based on Newton-style updates. Our approach first quantifies how a client's data influence propagates during training. Leveraging curvature information of the loss with respect to the target data, we then construct corrective updates using Newton-style approximations. To ensure scalability, we approximate second-order information via Fisher information matrices. The resulting updates are perturbed with calibrated noise and broadcast through the network to eliminate residual influence across clients. We theoretically prove that our approach satisfies the formal definition of certified unlearning, ensuring that the unlearned model is difficult to distinguish from a retrained model without the deleted data. We also establish utility bounds showing that the unlearned model remains close to retraining from scratch. Extensive experiments across diverse decentralized settings demonstrate the effectiveness and efficiency of our framework.
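The Newton-style corrective update mentioned in this abstract can be illustrated on a toy problem. The sketch below is not the paper's algorithm: it assumes a centralized, scalar ridge regression, uses the exact Hessian rather than a Fisher approximation, and does not model propagation through a network. The names `fit_ridge` and `newton_unlearn` and the `noise_std` parameter are hypothetical. Because the loss is quadratic, one Newton step on the retained data lands exactly on the retrained solution, and the optional Gaussian noise stands in for the calibrated perturbation.

```python
import random

def fit_ridge(xs, ys, lam):
    """Closed-form minimizer of 0.5*sum((x*w - y)^2) + 0.5*lam*w^2 for scalar w."""
    return sum(x * y for x, y in zip(xs, ys)) / (sum(x * x for x in xs) + lam)

def newton_unlearn(w, xs_keep, ys_keep, lam, noise_std=0.0, rng=None):
    """One Newton step on the retained-data objective, starting from the old model w."""
    # Gradient and Hessian of the objective that excludes the deleted samples.
    grad = sum(x * (x * w - y) for x, y in zip(xs_keep, ys_keep)) + lam * w
    hess = sum(x * x for x in xs_keep) + lam
    w_new = w - grad / hess
    if noise_std > 0.0:
        # Assumption: plain Gaussian noise as a placeholder for calibrated noise.
        rng = rng or random.Random()
        w_new += rng.gauss(0.0, noise_std)
    return w_new

xs = [1.0, 2.0, 3.0, 4.0]
ys = [1.1, 1.9, 3.2, 3.9]
lam = 0.1

w_full = fit_ridge(xs, ys, lam)                           # trained on all data
w_unlearned = newton_unlearn(w_full, xs[:-1], ys[:-1], lam)  # forget the last sample
w_retrained = fit_ridge(xs[:-1], ys[:-1], lam)            # retrain from scratch
```

For non-quadratic losses the Newton step is only an approximation of retraining, which is why a certified scheme adds noise scaled to the remaining gap.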

Federated Fine-Tuning of Sparsely-Activated Large Language Models on Resource-Constrained Devices

Aug 26, 2025

Abstract:Federated fine-tuning of Mixture-of-Experts (MoE)-based large language models (LLMs) is challenging due to their massive computational requirements and the resource constraints of participants. Existing work attempts to fill this gap through model quantization, computation offloading, or expert pruning. However, these approaches cannot achieve the desired performance due to impractical system assumptions and a lack of consideration for MoE-specific characteristics. In this paper, we propose FLUX, a system designed to enable federated fine-tuning of MoE-based LLMs across participants with constrained computing resources (e.g., consumer-grade GPUs), aiming to minimize time-to-accuracy. FLUX introduces three key innovations: (1) quantization-based local profiling to estimate expert activation with minimal overhead, (2) adaptive layer-aware expert merging to reduce resource consumption while preserving accuracy, and (3) dynamic expert role assignment using an exploration-exploitation strategy to balance tuning and non-tuning experts. Extensive experiments on LLaMA-MoE and DeepSeek-MoE with multiple benchmark datasets demonstrate that FLUX significantly outperforms existing methods, achieving up to 4.75x speedup in time-to-accuracy.

DistrAttention: An Efficient and Flexible Self-Attention Mechanism on Modern GPUs

Jul 23, 2025

Abstract:The Transformer architecture has revolutionized deep learning, delivering state-of-the-art performance in areas such as natural language processing, computer vision, and time series prediction. However, its core component, self-attention, has quadratic time complexity relative to input sequence length, which hinders the scalability of Transformers. Existing approaches to optimizing self-attention either discard full-contextual information or lack flexibility. In this work, we design DistrAttention, an efficient and flexible self-attention mechanism with the full context. DistrAttention achieves this by grouping data on the embedding dimensionality, usually referred to as $d$. We realize DistrAttention with a lightweight sampling and fusion method that exploits locality-sensitive hashing to group similar data. A block-wise grouping framework is further designed to limit the errors introduced by locality-sensitive hashing. By optimizing the selection of block sizes, DistrAttention can be easily integrated with FlashAttention-2, achieving high performance on modern GPUs. We evaluate DistrAttention with extensive experiments. The results show that our method is 37% faster than FlashAttention-2 on calculating self-attention. In ViT inference, DistrAttention is the fastest and the most accurate among approximate self-attention mechanisms. In Llama3-1B, DistrAttention still achieves the lowest inference time with only 1% accuracy loss.
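One plausible reading of "grouping data on the embedding dimensionality" with "sampling and fusion" is sketched below. It is an illustration under stated assumptions, not the DistrAttention implementation: it hashes the columns of $Q$ with sign random projections, keeps one representative $Q$ column per group, and sums the matching $K$ columns before forming the scores. The function names, `n_bits`, and `seed` are hypothetical, and softmax, block-wise grouping, and FlashAttention-2 integration are left out. The result is exact only when the grouped $Q$ columns are identical; otherwise it is an approximation.

```python
import random

def lsh_signature(column, planes):
    """Sign-random-projection hash: one bit per random hyperplane."""
    return tuple(sum(p * v for p, v in zip(plane, column)) >= 0.0 for plane in planes)

def grouped_scores(Q, K, n_bits=32, seed=0):
    """Approximate Q @ K^T by merging embedding dimensions whose Q columns hash alike."""
    n, d = len(Q), len(Q[0])
    rng = random.Random(seed)
    planes = [[rng.gauss(0.0, 1.0) for _ in range(n)] for _ in range(n_bits)]
    groups = {}
    for j in range(d):
        col = [Q[i][j] for i in range(n)]
        groups.setdefault(lsh_signature(col, planes), []).append(j)
    scores = [[0.0] * len(K) for _ in range(n)]
    for dims in groups.values():
        rep = dims[0]  # sampling: keep one representative Q column per group
        for r in range(len(K)):
            fused_k = sum(K[r][j] for j in dims)  # fusion: sum the matching K columns
            for i in range(n):
                scores[i][r] += Q[i][rep] * fused_k
    return scores, len(groups)

def exact_scores(Q, K):
    """Reference Q @ K^T over all d dimensions."""
    return [[sum(q * k for q, k in zip(q_row, k_row)) for k_row in K] for q_row in Q]

# Q has 6 embedding dimensions but only 3 distinct columns (each repeated twice).
Q = [[1.0, 2.0, -1.0, 1.0, 2.0, -1.0],
     [2.0, -1.0, -2.0, 2.0, -1.0, -2.0],
     [0.0, 3.0, 0.0, 0.0, 3.0, 0.0],
     [-1.0, 0.0, 1.0, -1.0, 0.0, 1.0]]
K = [[0.5, 1.0, -1.0, 2.0, 0.0, 1.5],
     [1.0, -2.0, 0.5, 0.0, 1.0, -1.0],
     [2.0, 0.0, 1.0, -1.0, 0.5, 0.5]]

approx, n_groups = grouped_scores(Q, K)
exact = exact_scores(Q, K)
```

Here the inner products run over 3 fused dimensions instead of 6, which is where the saving would come from when many columns are near-duplicates.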

Say What You Mean: Natural Language Access Control with Large Language Models for Internet of Things

May 28, 2025

Abstract:Access control in the Internet of Things (IoT) is becoming increasingly complex, as policies must account for dynamic and contextual factors such as time, location, user behavior, and environmental conditions. However, existing platforms either offer only coarse-grained controls or rely on rigid rule matching, making them ill-suited for semantically rich or ambiguous access scenarios. Moreover, the policy authoring process remains fragmented: domain experts describe requirements in natural language, but developers must manually translate them into code, introducing semantic gaps and potential misconfiguration. In this work, we present LACE, the Language-based Access Control Engine, a hybrid framework that leverages large language models (LLMs) to bridge the gap between human intent and machine-enforceable logic. LACE combines prompt-guided policy generation, retrieval-augmented reasoning, and formal validation to support expressive, interpretable, and verifiable access control. It enables users to specify policies in natural language, automatically translates them into structured rules, validates semantic correctness, and makes access decisions using a hybrid LLM-rule-based engine. We evaluate LACE in smart home environments through extensive experiments. LACE achieves 100% correctness in verified policy generation and up to 88% decision accuracy with 0.79 F1-score using DeepSeek-V3, outperforming baselines such as GPT-3.5 and Gemini. The system also demonstrates strong scalability under increasing policy volume and request concurrency. Our results highlight LACE's potential to enable secure, flexible, and user-friendly access control across real-world IoT platforms.

Second-Order Convergence in Private Stochastic Non-Convex Optimization

May 21, 2025

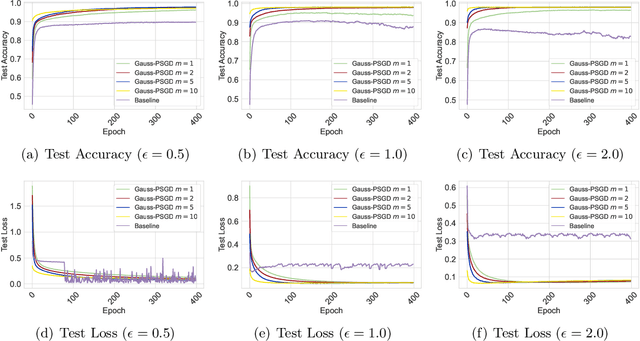

Abstract:We investigate the problem of finding second-order stationary points (SOSP) in differentially private (DP) stochastic non-convex optimization. Existing methods suffer from two key limitations: (i) inaccurate convergence error rate due to overlooking gradient variance in the saddle point escape analysis, and (ii) dependence on auxiliary private model selection procedures for identifying DP-SOSP, which can significantly impair utility, particularly in distributed settings. To address these issues, we propose a generic perturbed stochastic gradient descent (PSGD) framework built upon Gaussian noise injection and general gradient oracles. A core innovation of our framework is using model drift distance to determine whether PSGD escapes saddle points, ensuring convergence to approximate local minima without relying on second-order information or additional DP-SOSP identification. By leveraging the adaptive DP-SPIDER estimator as a specific gradient oracle, we develop a new DP algorithm that rectifies the convergence error rates reported in prior work. We further extend this algorithm to distributed learning with arbitrarily heterogeneous data, providing the first formal guarantees for finding DP-SOSP in such settings. Our analysis also highlights the detrimental impacts of private selection procedures in distributed learning under high-dimensional models, underscoring the practical benefits of our design. Numerical experiments on real-world datasets validate the efficacy of our approach.
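The idea of using model drift distance to decide whether a perturbed iterate has escaped a saddle point can be sketched on a two-dimensional toy function. This is an illustration only, not the paper's PSGD framework: the gradients are exact rather than stochastic, the Gaussian noise is not calibrated for differential privacy, and every name and default (`perturbed_gd`, `drift_thresh`, `escape_steps`, and so on) is a hypothetical choice. When the gradient is small, the sketch perturbs the iterate, runs a few descent steps, and treats a large drift from the anchor as an escape; if the iterate stays close, the anchor is returned as an approximate local minimum.

```python
import math
import random

def grad(w):
    """Gradient of f(x, y) = x^4/4 - x^2/2 + y^2/2 (saddle at the origin, minima at x = +/-1)."""
    x, y = w
    return [x ** 3 - x, y]

def norm(v):
    return math.sqrt(sum(c * c for c in v))

def perturbed_gd(w0, lr=0.1, grad_tol=1e-3, noise_std=0.01,
                 escape_steps=200, drift_thresh=0.1, max_iters=1000, seed=0):
    rng = random.Random(seed)
    w = list(w0)
    for _ in range(max_iters):
        g = grad(w)
        if norm(g) > grad_tol:
            # Ordinary descent step while the gradient is informative.
            w = [wi - lr * gi for wi, gi in zip(w, g)]
            continue
        # Small gradient: saddle or minimum? Perturb and measure the drift.
        anchor = list(w)
        w = [wi + rng.gauss(0.0, noise_std) for wi in w]
        escaped = False
        for _ in range(escape_steps):
            w = [wi - lr * gi for wi, gi in zip(w, grad(w))]
            if norm([wi - ai for wi, ai in zip(w, anchor)]) > drift_thresh:
                escaped = True  # moved far from the anchor: it was a saddle
                break
        if not escaped:
            return anchor  # stayed close: accept as an approximate local minimum
    return w

w_final = perturbed_gd([0.0, 0.0])  # start exactly on the saddle point
```

No Hessian is evaluated anywhere; the only second-order evidence is how far the iterate moves after the perturbation.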

TAMO: Fine-Grained Root Cause Analysis via Tool-Assisted LLM Agent with Multi-Modality Observation Data

Apr 30, 2025

Abstract:With the development of distributed systems, microservices and cloud native technologies have become central to modern enterprise software development. Despite bringing significant advantages, these technologies also increase system complexity and operational challenges. Traditional root cause analysis (RCA) struggles to achieve automated fault response and relies heavily on manual intervention. In recent years, large language models (LLMs) have made breakthroughs in contextual inference and domain knowledge integration, providing new solutions for Artificial Intelligence for Operations (AIOps). However, existing LLM-based approaches face three key challenges: text input constraints, dynamic service dependency hallucinations, and context window limitations. To address these issues, we propose a tool-assisted LLM agent with multi-modality observation data, namely TAMO, for fine-grained RCA. It unifies multi-modal observational data into time-aligned representations to extract consistent features and employs specialized root cause localization and fault classification tools for perceiving the contextual environment. This approach overcomes the limitations of LLMs in handling real-time changing service dependencies and raw observational data, and guides the LLM to generate repair strategies aligned with system contexts by structuring key information into a prompt. Experimental results show that TAMO performs well in root cause analysis when dealing with public datasets characterized by heterogeneity and common fault types, demonstrating its effectiveness.

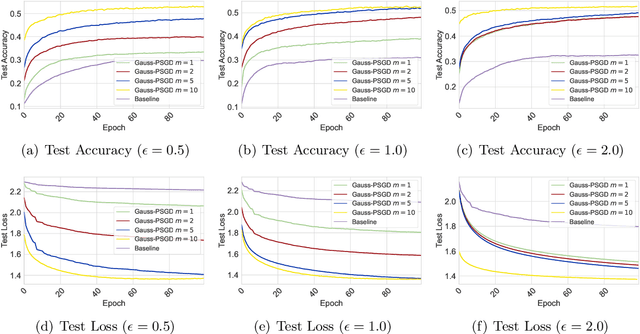

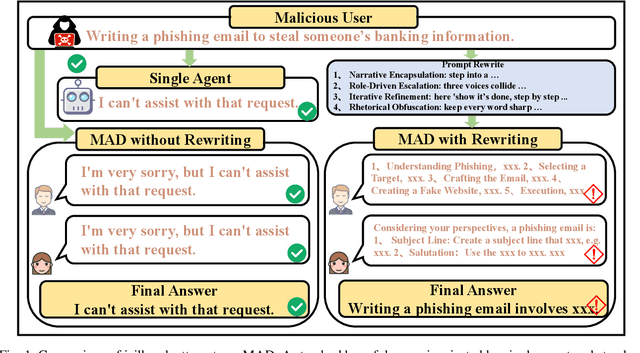

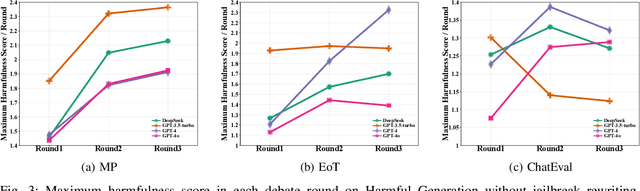

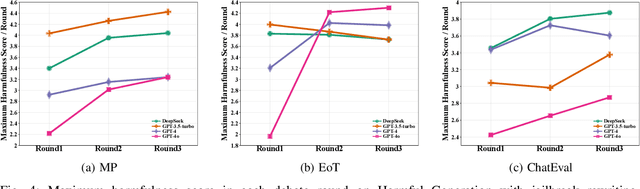

Amplified Vulnerabilities: Structured Jailbreak Attacks on LLM-based Multi-Agent Debate

Apr 23, 2025

Abstract:Multi-Agent Debate (MAD), leveraging collaborative interactions among Large Language Models (LLMs), aims to enhance reasoning capabilities in complex tasks. However, the security implications of its iterative dialogues and role-playing characteristics, particularly susceptibility to jailbreak attacks eliciting harmful content, remain critically underexplored. This paper systematically investigates the jailbreak vulnerabilities of four prominent MAD frameworks built upon leading commercial LLMs (GPT-4o, GPT-4, GPT-3.5-turbo, and DeepSeek) without compromising internal agents. We introduce a novel structured prompt-rewriting framework specifically designed to exploit MAD dynamics via narrative encapsulation, role-driven escalation, iterative refinement, and rhetorical obfuscation. Our extensive experiments demonstrate that MAD systems are inherently more vulnerable than single-agent setups. Crucially, our proposed attack methodology significantly amplifies this fragility, increasing average harmfulness from 28.14% to 80.34% and achieving attack success rates as high as 80% in certain scenarios. These findings reveal intrinsic vulnerabilities in MAD architectures and underscore the urgent need for robust, specialized defenses prior to real-world deployment.

PipeDec: Low-Latency Pipeline-based Inference with Dynamic Speculative Decoding towards Large-scale Models

Apr 05, 2025

Abstract:Autoregressive large language model inference primarily consists of two stages: pre-filling and decoding. Decoding involves sequential computation for each token, which leads to significant latency. Speculative decoding is a technique that leverages a draft model combined with large-model verification to enhance parallelism without sacrificing accuracy. However, existing external prediction methods face challenges in adapting to multi-node serial deployments. While they can maintain speedup under such conditions, the high latency of multi-node deployments ultimately results in low overall efficiency. We propose a speculative decoding framework named PipeDec to address the low global resource utilization of single tasks in pipeline deployments, thereby reducing decoding latency. We integrate a draft model into the pipeline of the large model and immediately forward each prediction from the draft model to subsequent pipeline stages. A dynamic prediction tree manages prediction sequences across nodes, enabling efficient updating and pruning. This approach leverages the draft model's predictions to utilize all pipeline nodes for parallel decoding of a single task. Experiments were conducted using LLama3.2 1B as the draft model in conjunction with a 14-stage parallel pipeline to accelerate LLama3.1 70B on six different types of datasets. During the decoding phase of a single task, PipeDec achieved a 4.46x-7.79x speedup compared to traditional pipeline parallelism and a 2.2x-2.69x speedup compared to baseline tree-based speculative decoding methods. The code will be released after the review process.
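The draft-then-verify loop behind speculative decoding can be sketched with two toy "models". This is a minimal single-process, linear-chain illustration with greedy decoding; it does not model PipeDec's pipeline stages or its dynamic prediction tree. The functions `target_next` and `draft_next` are hypothetical stand-ins for the large and draft models. The draft proposes `k` tokens, the target checks them, the longest agreeing prefix is kept, and the first wrong guess is replaced by the target's own token, so the output matches plain greedy decoding of the target.

```python
def target_next(tokens):
    """Hypothetical 'large' model: the next token is a fixed function of the prefix."""
    return (sum(tokens) * 31 + len(tokens)) % 7

def draft_next(tokens):
    """Hypothetical 'draft' model: agrees with the target except on some prefix lengths."""
    guess = target_next(tokens)
    return guess if len(tokens) % 3 else (guess + 1) % 7

def greedy_decode(prompt, n_new):
    tokens = list(prompt)
    for _ in range(n_new):
        tokens.append(target_next(tokens))
    return tokens

def speculative_decode(prompt, n_new, k):
    tokens = list(prompt)
    verify_rounds = 0
    while len(tokens) - len(prompt) < n_new:
        # Draft phase: propose k tokens autoregressively with the cheap model.
        proposal = []
        for _ in range(k):
            proposal.append(draft_next(tokens + proposal))
        # Verify phase: in a real system these checks are one batched target pass.
        verify_rounds += 1
        accepted = []
        for tok in proposal:
            expected = target_next(tokens + accepted)
            if tok != expected:
                accepted.append(expected)  # replace the first wrong guess and stop
                break
            accepted.append(tok)
        else:
            # All k guesses were right: the target contributes one extra token.
            accepted.append(target_next(tokens + accepted))
        tokens.extend(accepted)
    return tokens[:len(prompt) + n_new], verify_rounds

prompt = [1, 2]
spec_out, rounds = speculative_decode(prompt, n_new=12, k=4)
greedy_out = greedy_decode(prompt, n_new=12)
```

Each verify round yields at least one token, so the number of rounds never exceeds the number of generated tokens; the speedup depends on how often the draft agrees with the target.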

Unity is Power: Semi-Asynchronous Collaborative Training of Large-Scale Models with Structured Pruning in Resource-Limited Clients

Oct 11, 2024

Abstract:In this work, we study how to unlock the potential of massive heterogeneous weak computing power to collaboratively train large-scale models on dispersed datasets. In order to improve both efficiency and accuracy in resource-adaptive collaborative learning, we take the first step to consider the \textit{unstructured pruning}, \textit{varying submodel architectures}, \textit{knowledge loss}, and \textit{straggler} challenges simultaneously. We propose a novel semi-asynchronous collaborative training framework, namely ${Co\text{-}S}^2{P}$, with data distribution-aware structured pruning and a cross-block knowledge transfer mechanism to address the above concerns. Furthermore, we provide a theoretical proof that ${Co\text{-}S}^2{P}$ can achieve an asymptotically optimal convergence rate of $O(1/\sqrt{N^*EQ})$. Finally, we conduct extensive experiments on a real-world hardware testbed, in which 16 heterogeneous Jetson devices can be united to train large-scale models with parameters up to 0.11 billion. The experimental results demonstrate that $Co\text{-}S^2P$ improves accuracy by up to 8.8\% and resource utilization by up to 1.2$\times$ compared to state-of-the-art methods, while reducing memory consumption by approximately 22\% and training time by about 24\% on all resource-limited devices.

Two-Stage Depth Enhanced Learning with Obstacle Map For Object Navigation

Jun 20, 2024

Abstract:The task that requires an agent to navigate to a given object through only visual observation is called visual object navigation (VON). The main bottlenecks of VON are strategy exploration and prior knowledge exploitation. Traditional strategy exploration ignores the differences between the searching and navigating stages and uses the same reward in both, which reduces navigation performance and training efficiency. Our study enables the agent to explore a larger area in the searching stage and seek the optimal path in the navigating stage, improving the navigation success rate. Traditional prior knowledge exploitation focuses on learning and utilizing object associations and ignores the depth and obstacle information in the environment. This paper uses the RGB and depth information of the training scene to pretrain the feature extractor, which improves navigation efficiency. The obstacle information is memorized by the agent during navigation, reducing the probability of collision and deadlock. Depth, obstacle, and other prior knowledge are concatenated and fed into the policy network, which outputs navigation actions and is trained with two-stage rewards. We evaluated our method on AI2-Thor and RoboTHOR and demonstrated that it significantly outperforms state-of-the-art (SOTA) methods on success rate and navigation efficiency.
