David Owen

Algorithmic progress in language models

Mar 09, 2024

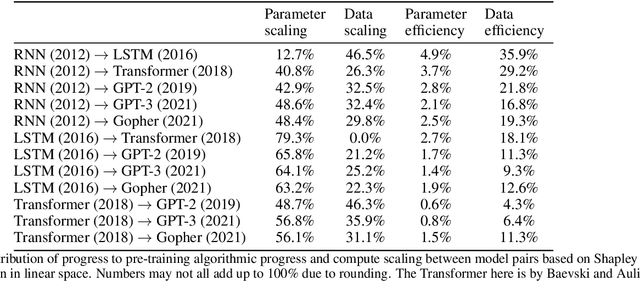

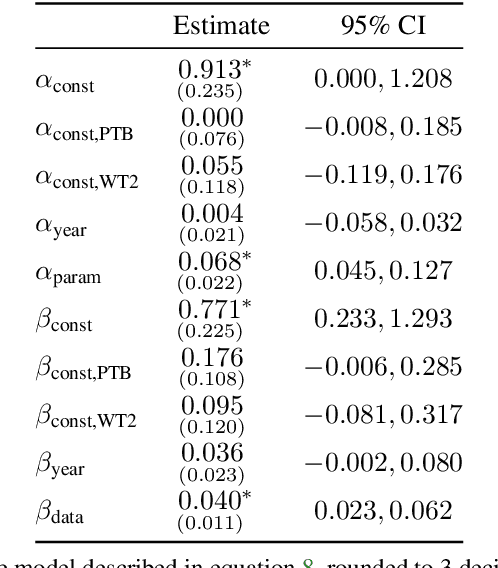

Abstract: We investigate the rate at which algorithms for pre-training language models have improved since the advent of deep learning. Using a dataset of over 200 language model evaluations on Wikitext and Penn Treebank spanning 2012-2023, we find that the compute required to reach a set performance threshold has halved approximately every 8 months, with a 95% confidence interval of around 5 to 14 months, substantially faster than hardware gains per Moore's Law. We estimate augmented scaling laws, which enable us to quantify algorithmic progress and determine the relative contributions of scaling models versus innovations in training algorithms. Despite the rapid pace of algorithmic progress and the development of new architectures such as the transformer, our analysis reveals that the increase in compute made an even larger contribution to overall performance improvements over this time period. Though limited by noisy benchmark data, our analysis quantifies the rapid progress in language modeling, shedding light on the relative contributions from compute and algorithms.
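To make the halving-time claim concrete, the following is a minimal arithmetic sketch, not the paper's estimation procedure. It assumes a constant halving time and simply converts it into a compute-reduction factor; the function name `compute_reduction_factor` and the 12-month horizon are illustrative choices that do not come from the abstract.

```python
def compute_reduction_factor(months_elapsed: float, halving_months: float = 8.0) -> float:
    """Factor by which the compute needed for a fixed performance level shrinks.

    Assumes a constant halving time, which is a simplification made
    for illustration only.
    """
    if halving_months <= 0:
        raise ValueError("halving_months must be positive")
    return 2.0 ** (months_elapsed / halving_months)


# Compare the central estimate (8 months) with the rough ends of the
# 95% confidence interval quoted in the abstract (5 and 14 months).
for halving in (5.0, 8.0, 14.0):
    factor = compute_reduction_factor(12.0, halving)
    print(f"halving every {halving:g} months -> {factor:.2f}x less compute after one year")
```

Under this simplification, the width of the confidence interval matters a great deal: the implied one-year gain differs severalfold between the two ends of the interval.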

How predictable is language model benchmark performance?

Jan 09, 2024

Abstract: We investigate large language model performance across five orders of magnitude of compute scaling in eleven recent model architectures. We show that average benchmark performance, aggregating over many individual tasks and evaluations as in the commonly-used BIG-Bench dataset, is decently predictable as a function of training compute scale. Specifically, when extrapolating BIG-Bench Hard performance across one order of magnitude in compute, we observe average absolute errors of 6 percentage points (pp). By contrast, extrapolation for individual BIG-Bench tasks across an order of magnitude in compute yields higher average errors of 18pp. Nonetheless, individual task performance remains significantly more predictable than chance. Overall, our work suggests compute scaling provides a promising basis to forecast AI capabilities in diverse benchmarks, though predicting performance in specific tasks poses challenges.
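The error metric quoted above, average absolute error in percentage points, can be sketched as follows. This is an illustrative helper only: the function name `mean_absolute_error_pp` and the sample scores are hypothetical and are not taken from the paper's data.

```python
def mean_absolute_error_pp(predicted: list[float], observed: list[float]) -> float:
    """Mean absolute difference between predicted and observed scores.

    Both inputs are benchmark scores expressed in percent, so the
    result is in percentage points (pp).
    """
    if not predicted or len(predicted) != len(observed):
        raise ValueError("inputs must be non-empty and of equal length")
    total = sum(abs(p - o) for p, o in zip(predicted, observed))
    return total / len(predicted)


# Hypothetical extrapolated vs. measured scores for three models.
predicted_scores = [50.0, 60.0, 41.0]
observed_scores = [44.0, 66.0, 47.0]
print(mean_absolute_error_pp(predicted_scores, observed_scores), "pp")
```

Because the metric is absolute rather than relative, an error of 6 pp means the same thing at a 30% score as at a 70% score.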

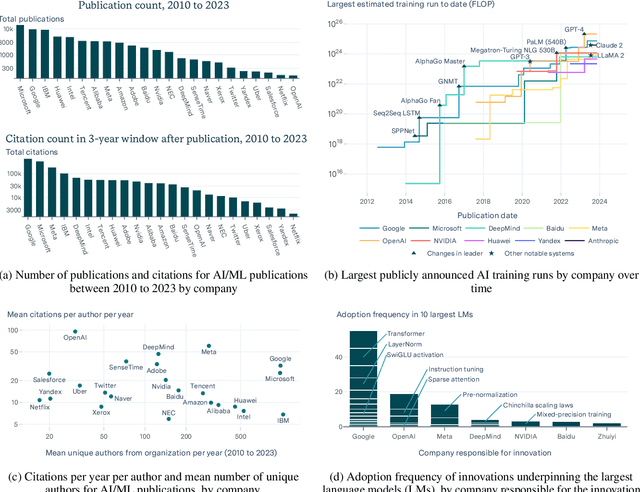

Who is leading in AI? An analysis of industry AI research

Nov 24, 2023

Abstract: AI research is increasingly industry-driven, making it crucial to understand company contributions to this field. We compare leading AI companies by research publications, citations, size of training runs, and contributions to algorithmic innovations. Our analysis reveals the substantial role played by Google, OpenAI and Meta. We find that these three companies have been responsible for some of the largest training runs, developed a large fraction of the algorithmic innovations that underpin large language models, and led in various metrics of citation impact. In contrast, leading Chinese companies such as Tencent and Baidu had a lower impact on many of these metrics compared to US counterparts. We observe many industry labs are pursuing large training runs, and that training runs from relative newcomers -- such as OpenAI and Anthropic -- have matched or surpassed those of long-standing incumbents such as Google. The data reveals a diverse ecosystem of companies steering AI progress, though US labs such as Google, OpenAI and Meta lead across critical metrics.

A spatio-temporal network for video semantic segmentation in surgical videos

Jun 19, 2023

Abstract: Semantic segmentation in surgical videos has applications in intra-operative guidance, post-operative analytics and surgical education. Segmentation models need to provide accurate and consistent predictions, since temporally inconsistent identification of anatomical structures can impair usability and hinder patient safety. Video information can alleviate these challenges, leading to reliable models suitable for clinical use. We propose a novel architecture for modelling temporal relationships in videos. The proposed model includes a spatio-temporal decoder to enable video semantic segmentation by improving temporal consistency across frames. The encoder processes individual frames whilst the decoder processes a temporal batch of adjacent frames. The proposed decoder can be used on top of any segmentation encoder to improve temporal consistency. Model performance was evaluated on the CholecSeg8k dataset and a private dataset of robotic partial nephrectomy procedures. Segmentation performance was improved when the temporal decoder was applied across both datasets. The proposed model also displayed improvements in temporal consistency.
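One way to picture the "temporal batch of adjacent frames" fed to the decoder is as a sliding window over frame indices. The sketch below is an assumption-laden illustration: the abstract does not state the window length or whether windows overlap, so `window` and the one-frame step are hypothetical choices, and `temporal_batches` is not a function from the paper.

```python
def temporal_batches(num_frames: int, window: int) -> list[list[int]]:
    """Group frame indices into overlapping windows of adjacent frames.

    Each window would hold the per-frame encoder outputs that a temporal
    decoder consumes together. Window length and step size (1) are
    assumptions for illustration.
    """
    if window < 1:
        raise ValueError("window must be at least 1")
    if num_frames < window:
        return []  # not enough frames to fill a single window
    return [list(range(start, start + window))
            for start in range(num_frames - window + 1)]


# A 5-frame clip with a hypothetical window of 3 adjacent frames.
for batch in temporal_batches(5, 3):
    print(batch)
```

With a step of one frame, every interior frame appears in several windows, which is one simple way a decoder could see the same frame in different temporal contexts.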

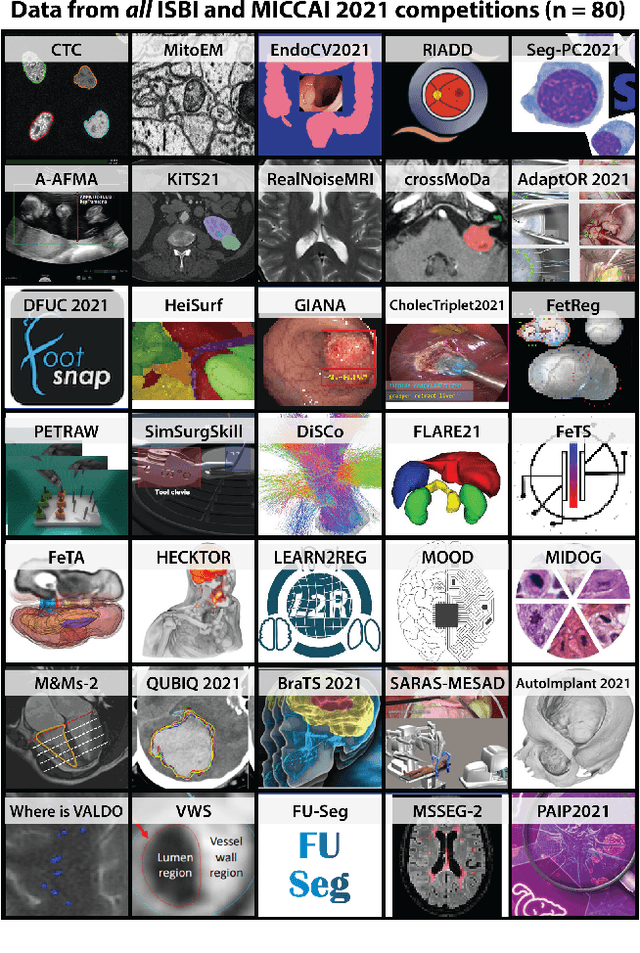

Why is the winner the best?

Mar 30, 2023

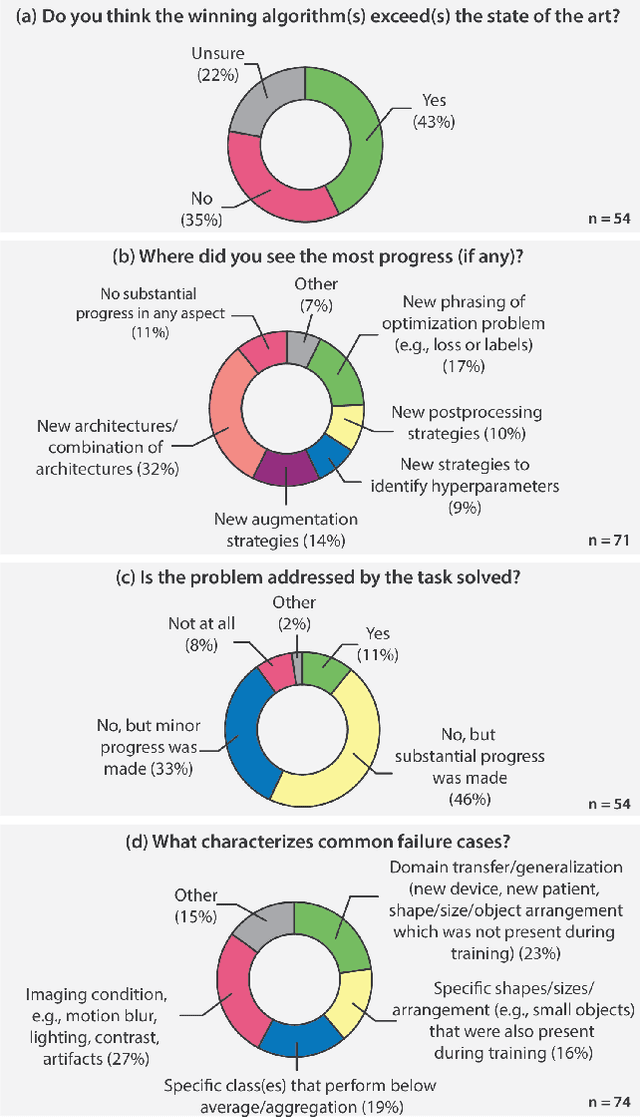

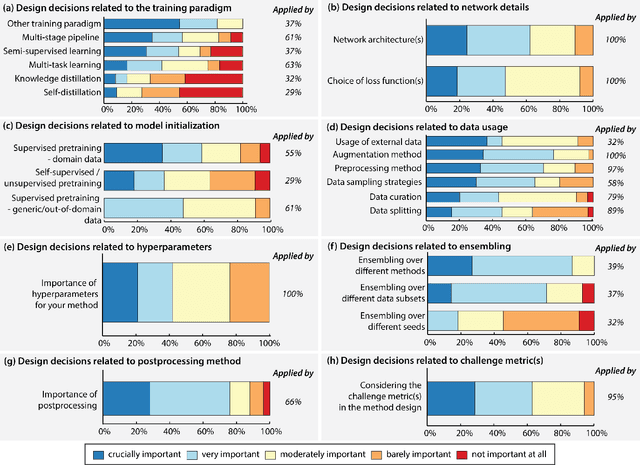

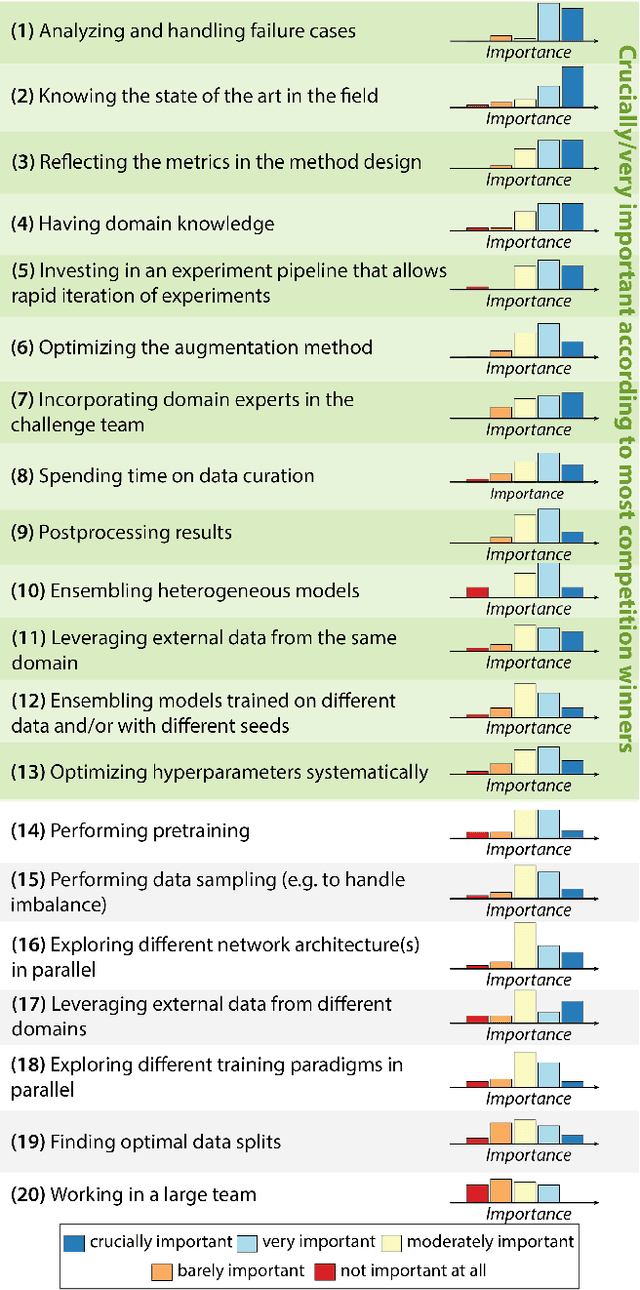

Abstract: International benchmarking competitions have become fundamental for the comparative performance assessment of image analysis methods. However, little attention has been given to investigating what can be learnt from these competitions. Do they really generate scientific progress? What are common and successful participation strategies? What makes a solution superior to a competing method? To address this gap in the literature, we performed a multi-center study with all 80 competitions that were conducted in the scope of IEEE ISBI 2021 and MICCAI 2021. Statistical analyses performed based on comprehensive descriptions of the submitted algorithms linked to their rank as well as the underlying participation strategies revealed common characteristics of winning solutions. These typically include the use of multi-task learning (63%) and/or multi-stage pipelines (61%), and a focus on augmentation (100%), image preprocessing (97%), data curation (79%), and postprocessing (66%). The "typical" lead of a winning team is a computer scientist with a doctoral degree, five years of experience in biomedical image analysis, and four years of experience in deep learning. Two core general development strategies stood out for highly-ranked teams: the reflection of the metrics in the method design and the focus on analyzing and handling failure cases. According to the organizers, 43% of the winning algorithms exceeded the state of the art but only 11% completely solved the respective domain problem. The insights of our study could help researchers (1) improve algorithm development strategies when approaching new problems, and (2) focus on open research questions revealed by this work.

Biomedical image analysis competitions: The state of current participation practice

Dec 16, 2022

Abstract: The number of international benchmarking competitions is steadily increasing in various fields of machine learning (ML) research and practice. So far, however, little is known about the common practice as well as bottlenecks faced by the community in tackling the research questions posed. To shed light on the status quo of algorithm development in the specific field of biomedical imaging analysis, we designed an international survey that was issued to all participants of challenges conducted in conjunction with the IEEE ISBI 2021 and MICCAI 2021 conferences (80 competitions in total). The survey covered participants' expertise and working environments, their chosen strategies, as well as algorithm characteristics. A median of 72% of challenge participants took part in the survey. According to our results, knowledge exchange was the primary incentive (70%) for participation, while the reception of prize money played only a minor role (16%). While a median of 80 working hours was spent on method development, a large portion of participants stated that they did not have enough time for method development (32%). 25% perceived the infrastructure to be a bottleneck. Overall, 94% of all solutions were deep learning-based. Of these, 84% were based on standard architectures. 43% of the respondents reported that the data samples (e.g., images) were too large to be processed at once. This was most commonly addressed by patch-based training (69%), downsampling (37%), and solving 3D analysis tasks as a series of 2D tasks. K-fold cross-validation on the training set was performed by only 37% of the participants, and only 50% of the participants performed ensembling based on multiple identical models (61%) or heterogeneous models (39%). 48% of the respondents applied postprocessing steps.
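Patch-based training, the most common workaround reported above for samples too large to process at once, amounts to tiling each image into smaller crops. The following is a minimal sketch of one possible tiling scheme, not a method described in the survey; `patch_origins`, `patch_grid`, and the sizes used are hypothetical.

```python
def patch_origins(length: int, patch: int, stride: int) -> list[int]:
    """Start offsets of patches along one axis, covering the full extent."""
    if patch <= 0 or stride <= 0:
        raise ValueError("patch and stride must be positive")
    if patch >= length:
        return [0]  # the whole axis fits in a single patch
    origins = list(range(0, length - patch + 1, stride))
    if origins[-1] != length - patch:
        origins.append(length - patch)  # extra patch flush with the far edge
    return origins


def patch_grid(height: int, width: int, patch: int, stride: int) -> list[tuple[int, int]]:
    """Top-left (row, col) corners of square patches tiling a 2D image."""
    return [(row, col)
            for row in patch_origins(height, patch, stride)
            for col in patch_origins(width, patch, stride)]


# Hypothetical 1000 x 1500 image tiled into 512 x 512 patches.
corners = patch_grid(1000, 1500, patch=512, stride=512)
print(len(corners), "patches, first corners:", corners[:3])
```

Adding a final patch aligned to the far edge keeps every pixel covered without padding, at the cost of some overlap between the last two patches on each axis.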

Towards Preemptive Detection of Depression and Anxiety in Twitter

Nov 10, 2020

Abstract: Depression and anxiety are psychiatric disorders that are observed in many areas of everyday life. For example, these disorders manifest themselves somewhat frequently in texts written by nondiagnosed users in social media. However, detecting users with these conditions is not a straightforward task as they may not explicitly talk about their mental state, and if they do, contextual cues such as immediacy must be taken into account. When available, linguistic flags pointing to probable anxiety or depression could be used by medical experts to write better guidelines and treatments. In this paper, we develop a dataset designed to foster research in depression and anxiety detection in Twitter, framing the detection task as a binary tweet classification problem. We then apply state-of-the-art classification models to this dataset, providing a competitive set of baselines alongside qualitative error analysis. Our results show that language models perform reasonably well, and better than more traditional baselines. Nonetheless, there is clear room for improvement, particularly with unbalanced training sets and in cases where seemingly obvious linguistic cues (keywords) are used counter-intuitively.

Detecting Reflections by Combining Semantic and Instance Segmentation

Apr 30, 2019

Abstract: Reflections in natural images commonly cause false positives in automated detection systems. These false positives can lead to significant impairment of accuracy in the tasks of detection, counting and segmentation. Here, inspired by the recent panoptic approach to segmentation, we show how fusing instance and semantic segmentation can automatically identify reflection false positives, without explicitly needing to have the reflective regions labelled. We explore in detail how state-of-the-art two-stage detectors suffer a loss of broader contextual features, and hence are unable to learn to ignore these reflections. We then present an approach to fuse instance and semantic segmentations for this application, and subsequently show how this reduces false positive detections in real-world surveillance data with a large number of reflective surfaces. This demonstrates how panoptic segmentation and related work, despite being in its infancy, can already be useful in real-world computer vision problems.
