Christian Gagne

Layerwise Early Stopping for Test Time Adaptation

Apr 04, 2024

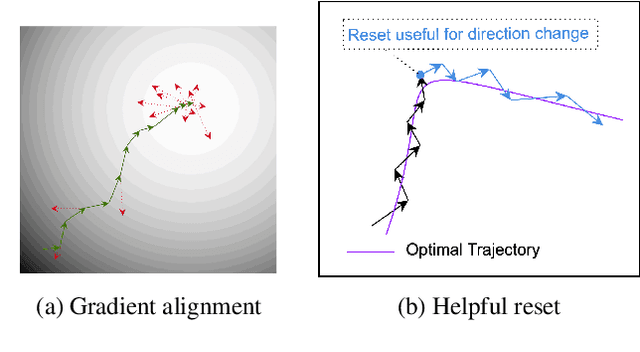

Abstract: Test Time Adaptation (TTA) addresses the problem of distribution shift by enabling pretrained models to learn new features on an unseen domain at test time. However, it poses a significant challenge to maintain a balance between learning new features and retaining useful pretrained features. In this paper, we propose Layerwise EArly STopping (LEAST) for TTA to address this problem. The key idea is to stop adapting individual layers during TTA if the features being learned do not appear beneficial for the new domain. For that purpose, we propose a novel gradient-based metric that measures the relevance of the currently learned features to the new domain without the need for supervised labels. More specifically, we use this metric to determine dynamically when to stop updating each layer during TTA. This enables a more balanced adaptation, restricted to the layers that benefit from it, and only for a certain number of steps. Such an approach also has the added effect of limiting the forgetting of pretrained features useful for dealing with new domains. Through extensive experiments, we demonstrate that Layerwise Early Stopping improves the performance of existing TTA approaches across multiple datasets, domain shifts, model architectures, and TTA losses.
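The per-layer stopping logic described in this abstract can be illustrated with a small bookkeeping sketch. The abstract does not define the gradient-based metric, so the sketch assumes the relevance scores are already computed for each layer at each step; the function name `layerwise_early_stopping`, the `threshold` rule, and the layer names are hypothetical and are not taken from the paper.

```python
def layerwise_early_stopping(scores_per_step, threshold):
    """Return the step at which each layer stops being updated.

    scores_per_step: list of dicts mapping layer name -> relevance score
    at that adaptation step (a stand-in for the paper's gradient-based
    metric, which is NOT specified in the abstract).
    Layers that never fall below the threshold are absent from the result.
    """
    frozen_at = {}
    for step, scores in enumerate(scores_per_step):
        for layer, score in scores.items():
            if layer in frozen_at:
                continue  # a stopped layer is never updated again
            if score < threshold:
                frozen_at[layer] = step  # stop adapting this layer
            # otherwise the layer would receive its TTA update here
    return frozen_at


# Hypothetical relevance scores for three layers over three steps.
history = [
    {"conv1": 0.9, "conv2": 0.8, "fc": 0.7},
    {"conv1": 0.2, "conv2": 0.6, "fc": 0.7},
    {"conv1": 0.9, "conv2": 0.1, "fc": 0.6},
]
frozen = layerwise_early_stopping(history, threshold=0.5)
print(frozen)
```

Note that `conv1` stays frozen even though its score recovers at the last step; whether a stopped layer can resume adapting is a design choice that the abstract does not address.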

Generalizing across Temporal Domains with Koopman Operators

Feb 15, 2024

Abstract: In domain generalization, constructing a predictive model that generalizes to a target domain without access to target data remains challenging. The problem becomes more complicated when the dynamics between domains evolve over time. While various approaches have been proposed to address this issue, a comprehensive understanding of the underlying generalization theory is still lacking. In this study, we contribute novel theoretical results showing that aligning conditional distributions reduces the generalization bound. Our analysis serves as a key motivation for solving the Temporal Domain Generalization (TDG) problem through the application of Koopman Neural Operators, resulting in Temporal Koopman Networks (TKNets). By employing Koopman Operators, we address the time-evolving distributions encountered in TDG using the principles of Koopman theory, where measurement functions are sought to establish linear transition relations between evolving domains. Through empirical evaluations conducted on synthetic and real-world datasets, we validate the effectiveness of our proposed approach.
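The "linear transition relation" can be written compactly in the usual Koopman-theoretic form. The notation below ($z_t$ for the state of the domain at time $t$, $g$ for the measurement function, $\mathcal{K}$ for the Koopman operator) is assumed here for illustration and may differ from the paper's own symbols:

$$
g(z_{t+1}) \approx \mathcal{K}\, g(z_t)
$$

That is, the dynamics may be nonlinear in $z_t$, but become approximately linear once expressed through a suitable measurement function $g$.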

Image-to-Image Translation with Low Resolution Conditioning

Jul 23, 2021

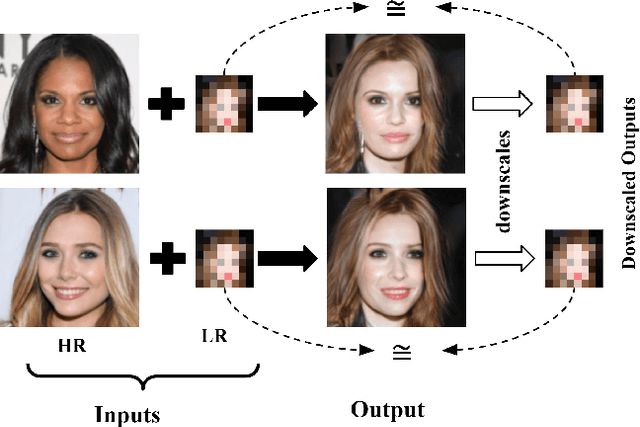

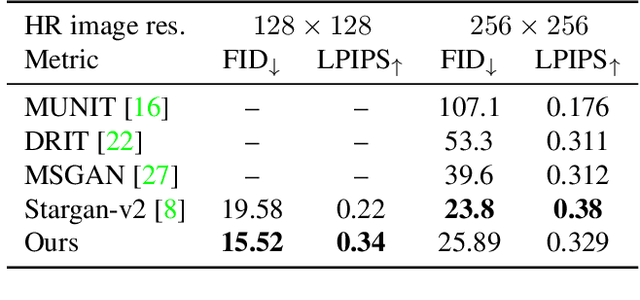

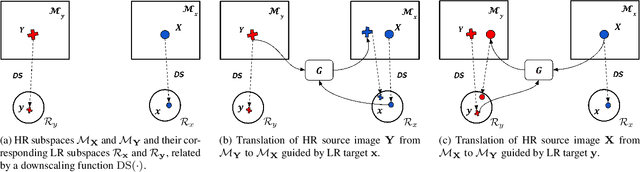

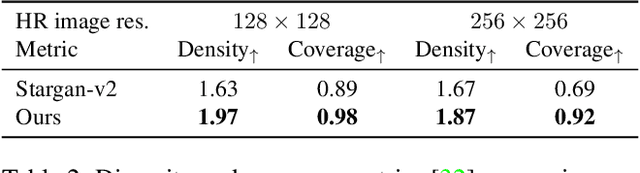

Abstract: Most image-to-image translation methods focus on learning mappings across domains with the assumption that images share content (e.g., pose) but have their own domain-specific information known as style. When conditioned on a target image, such methods aim to extract the style of the target and combine it with the content of the source image. In this work, we consider the scenario where the target image has a very low resolution. More specifically, our approach aims at transferring fine details from a high resolution (HR) source image to fit a coarse, low resolution (LR) image representation of the target. We therefore generate HR images that share features from both HR and LR inputs. This differs from previous methods, which focus on translating a given image style into a target content: our translation approach simultaneously imitates the style and merges the structural information of the LR target. Our approach relies on training the generative model to produce HR target images that both 1) share distinctive information of the associated source image and 2) correctly match the LR target image when downscaled. We validate our method on the CelebA-HQ and AFHQ datasets by demonstrating improvements in terms of visual quality, diversity and coverage. Qualitative and quantitative results show that when dealing with intra-domain image translation, our method generates more realistic samples than state-of-the-art methods such as StarGAN v2.
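The second training constraint, that the generated HR image must match the LR target when downscaled, can be illustrated with a minimal sketch. It assumes average pooling as the downscaling operator and mean absolute error as the mismatch measure; the abstract specifies neither, and the helper names `downscale` and `lr_consistency_error` are hypothetical.

```python
def downscale(image, factor):
    """Average-pool a 2D grayscale image (list of rows) by an integer factor.

    Assumes the image height and width are divisible by `factor`.
    """
    height, width = len(image), len(image[0])
    pooled = []
    for i in range(0, height, factor):
        row = []
        for j in range(0, width, factor):
            block = [image[y][x]
                     for y in range(i, i + factor)
                     for x in range(j, j + factor)]
            row.append(sum(block) / len(block))
        pooled.append(row)
    return pooled


def lr_consistency_error(hr_generated, lr_target, factor):
    """Mean absolute difference between the downscaled HR image and the LR target."""
    lr_generated = downscale(hr_generated, factor)
    diffs = [abs(a - b)
             for row_a, row_b in zip(lr_generated, lr_target)
             for a, b in zip(row_a, row_b)]
    return sum(diffs) / len(diffs)


# Toy 4x4 "generated" image and the 2x2 LR target it should reduce to.
hr = [[1.0, 3.0, 0.0, 0.0],
      [1.0, 3.0, 0.0, 0.0],
      [2.0, 2.0, 4.0, 4.0],
      [2.0, 2.0, 4.0, 4.0]]
lr = [[2.0, 0.0],
      [2.0, 4.0]]
error = lr_consistency_error(hr, lr, factor=2)
print(error)
```

Many different HR images downscale to the same LR target, which is why the first constraint (sharing distinctive information with the source image) is needed to pick among them.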

Self-supervised Robust Object Detectors from Partially Labelled datasets

May 23, 2020

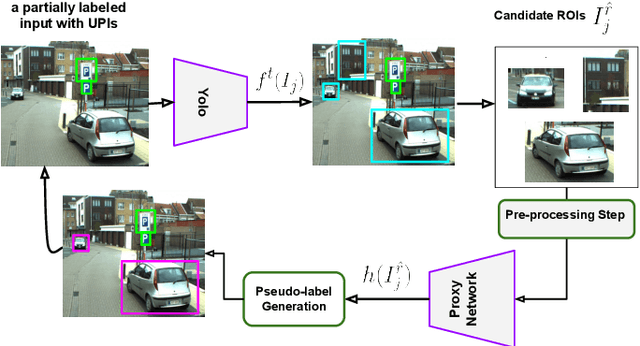

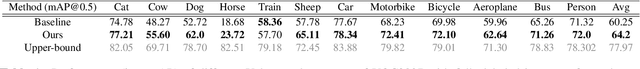

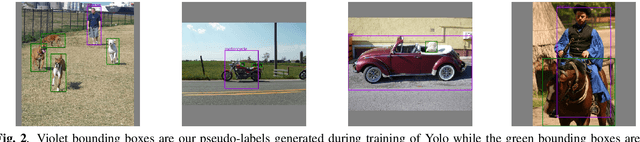

Abstract: In the object detection task, merging various datasets from similar contexts but with different sets of Objects of Interest (OoI) is an inexpensive way (in terms of labor cost) of crafting a large-scale dataset covering a wide range of objects. Moreover, merging datasets allows us to train one integrated object detector instead of several, which in turn reduces computational and time costs. However, merging datasets from similar contexts produces samples with partial labeling, as each constituent dataset was originally annotated for its own set of OoI and leaves unannotated those objects that become of interest only after the datasets are merged. With the goal of training one integrated robust object detector with high generalization performance, we propose a training framework to overcome the missing-label challenge of merged datasets. More specifically, we propose a computationally efficient self-supervised framework to create on-the-fly pseudo-labels for the unlabelled positive instances in the merged dataset, in order to train the object detector jointly on both ground truth and pseudo-labels. We evaluate our proposed framework for training YOLO on a simulated merged dataset with a missing rate of $\approx 48\%$ using VOC2012 and VOC2007. We empirically show that the generalization performance of YOLO trained on both the ground truth and the pseudo-labels created by our method is on average $4\%$ higher than that of YOLO trained only with the ground truth labels of the merged dataset.

Toward Adversarial Robustness by Diversity in an Ensemble of Specialized Deep Neural Networks

May 17, 2020

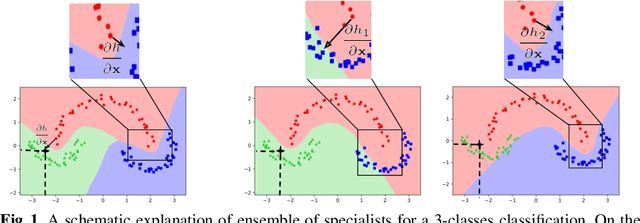

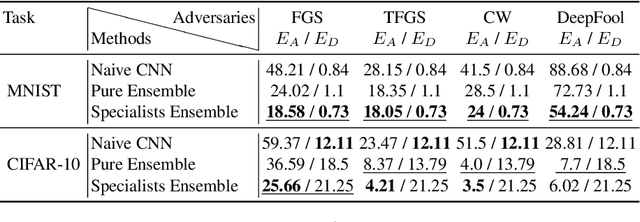

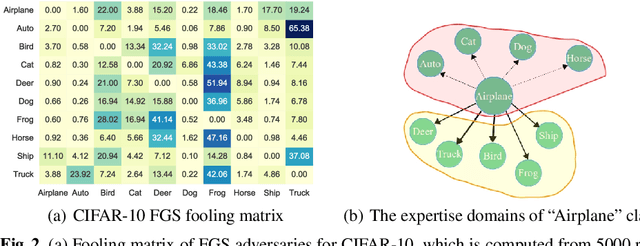

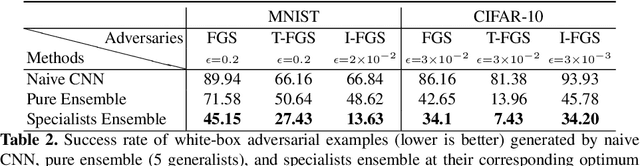

Abstract: We aim to demonstrate the influence of diversity in an ensemble of CNNs on the detection of black-box adversarial instances and on making white-box adversarial attacks harder to generate. To this end, we propose an ensemble of diverse specialized CNNs along with a simple voting mechanism. The diversity in this ensemble creates a gap between the predictive confidences of adversaries and those of clean samples, making adversaries detectable. We then analyze how diversity in such an ensemble of specialists may mitigate the risk of black-box and white-box adversarial examples. Using MNIST and CIFAR-10, we empirically verify the ability of our ensemble to detect a large portion of well-known black-box adversarial examples, which leads to a significant reduction in the risk rate of adversaries, at the expense of a small increase in the risk rate of clean samples. Moreover, we show that the success rate of generating white-box attacks against our ensemble is remarkably lower than against a vanilla CNN and an ensemble of vanilla CNNs, highlighting the beneficial role of diversity in the ensemble for developing more robust models.
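The confidence gap can be illustrated with a generic sketch in which member predictions are averaged and low-confidence inputs are flagged. This is plain probability averaging with a fixed threshold, not the paper's specialist ensemble or its voting mechanism, which the abstract does not detail; `ensemble_predict` and the threshold value are hypothetical.

```python
def ensemble_predict(member_probs, threshold):
    """Average the members' class probabilities and flag low-confidence inputs.

    member_probs: list of per-member probability vectors for one input.
    Returns (predicted label, ensemble confidence, rejected flag).
    """
    n_members = len(member_probs)
    n_classes = len(member_probs[0])
    avg = [sum(p[c] for p in member_probs) / n_members
           for c in range(n_classes)]
    confidence = max(avg)
    label = avg.index(confidence)
    # Disagreement among diverse members lowers the averaged confidence,
    # which is what makes a suspicious input detectable.
    return label, confidence, confidence < threshold


# Members agree: high ensemble confidence, input accepted.
agree = ensemble_predict([[0.9, 0.1], [0.8, 0.2], [0.85, 0.15]], threshold=0.7)

# Members disagree: confidence collapses toward uniform, input flagged.
disagree = ensemble_predict([[0.9, 0.1], [0.2, 0.8], [0.4, 0.6]], threshold=0.7)

print(agree, disagree)
```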

A Novel Unsupervised Post-Processing Calibration Method for DNNs with Robustness to Domain Shift

Nov 25, 2019

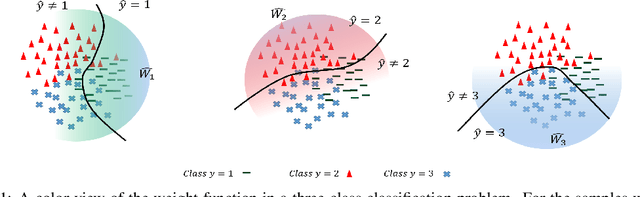

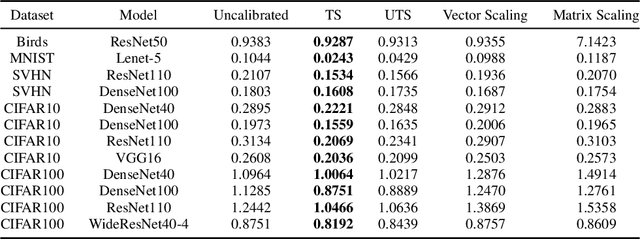

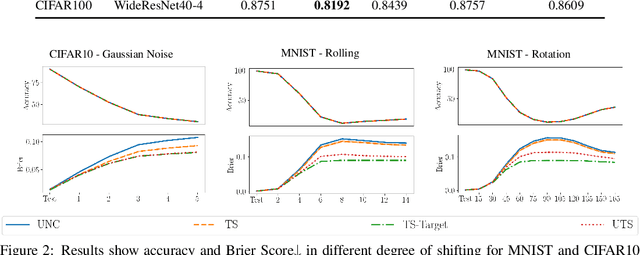

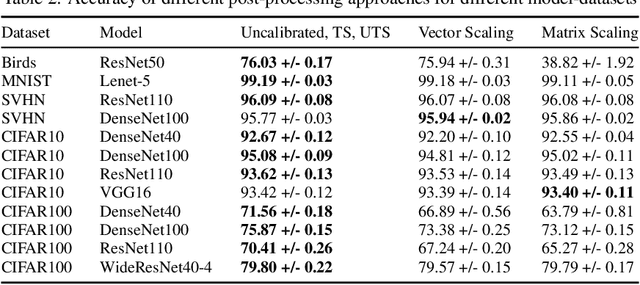

Abstract: Uncertainty estimation is critical in real-world decision-making applications, especially when distributional shift between the training and test data is prevalent. Many calibration methods have been proposed in the literature to improve the predictive uncertainty of DNNs, which are generally not well calibrated. However, none of them is specifically designed to work properly under domain shift. In this paper, we propose Unsupervised Temperature Scaling (UTS) as a calibration method that is robust to domain shift. It exploits unlabeled test samples instead of training samples to adjust the uncertainty prediction of deep models towards the test distribution. UTS utilizes a novel loss function, weighted NLL, which allows unsupervised calibration. We evaluate UTS on a wide range of model-dataset pairs to show that calibration without labels is possible, and demonstrate the robustness of UTS compared to other methods (e.g., TS, MC-dropout, SVI, ensembles) in shifted domains.
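For readers unfamiliar with temperature scaling (TS), the sketch below shows the basic operation that UTS builds on: dividing the logits by a scalar temperature before the softmax. It does not implement the weighted NLL loss, which the abstract does not define, and the temperature value used here is an arbitrary illustration rather than a fitted one.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Softmax over logits divided by a scalar temperature.

    temperature > 1 softens the distribution (lower confidence);
    temperature < 1 sharpens it. The arg-max class is unchanged.
    """
    scaled = [z / temperature for z in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - peak) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


logits = [4.0, 1.0, 0.5]
uncalibrated = softmax_with_temperature(logits, temperature=1.0)
calibrated = softmax_with_temperature(logits, temperature=2.0)
print(max(uncalibrated), max(calibrated))
```

Because the temperature rescales all logits equally, calibration changes the reported confidence without changing the predicted class, so accuracy is unaffected.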

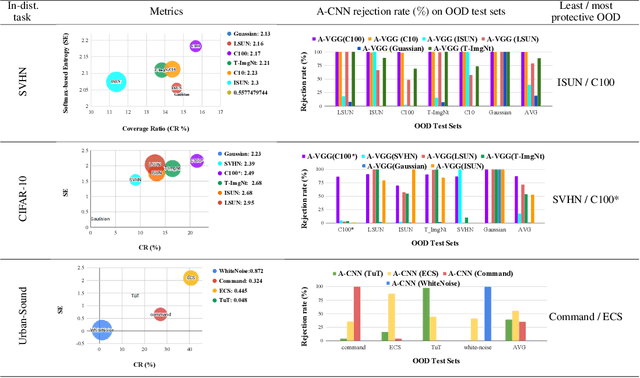

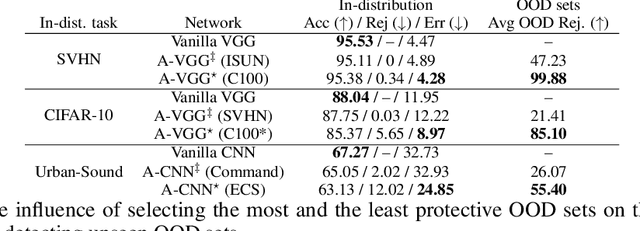

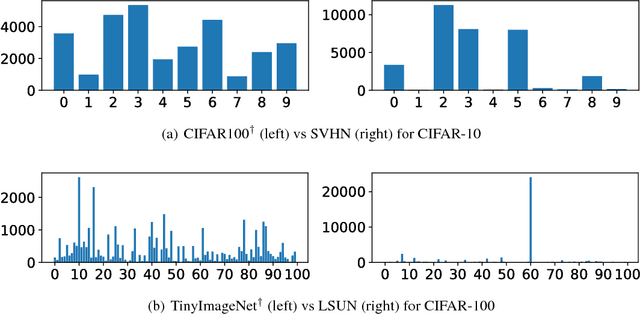

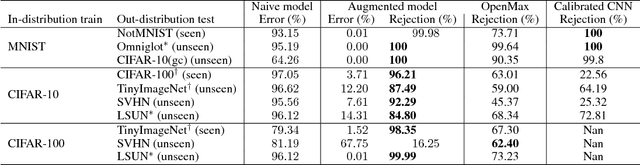

Toward Metrics for Differentiating Out-of-Distribution Sets

Oct 18, 2019

Abstract: Vanilla CNNs, as uncalibrated classifiers, tend to classify out-of-distribution (OOD) samples nearly as confidently as in-distribution samples, making the two indistinguishable from each other. To tackle this challenge, some recent works have demonstrated the gains of leveraging readily accessible OOD sets for training end-to-end calibrated CNNs. However, a critical question remains unanswered in these works: how can one select, among the available OOD sets, the one that induces high detection rates on unseen OOD sets when used for training such CNNs? We address this pivotal question through the use of an Augmented-CNN (A-CNN) involving an explicit rejection option. We first provide a formal definition to precisely differentiate OOD sets for the purpose of selection. As using this definition incurs a huge computational cost, we propose novel metrics, as a computationally efficient tool, for characterizing OOD sets in order to select the proper one. In a series of experiments on several image and audio benchmarks, we show that training an A-CNN with an OOD set identified by our metrics (called A-CNN$^{\star}$) leads to a remarkable detection rate on unseen OOD sets while maintaining in-distribution generalization performance, thus demonstrating the viability of our metrics for identifying the proper OOD set. Furthermore, we show that A-CNN$^{\star}$ outperforms state-of-the-art OOD detectors across different benchmarks.

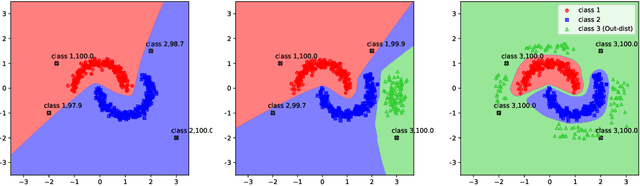

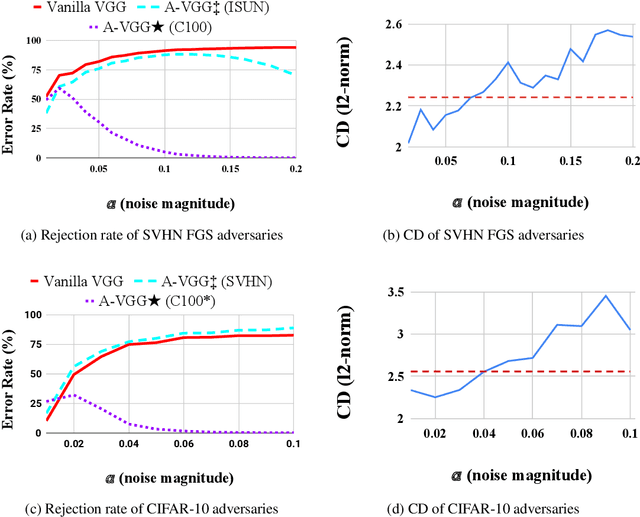

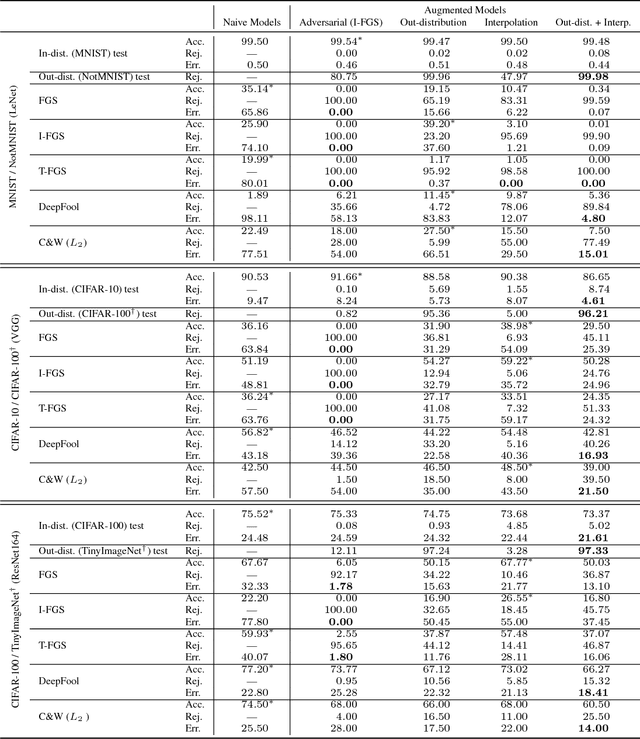

Controlling Over-generalization and its Effect on Adversarial Examples Generation and Detection

Oct 03, 2018

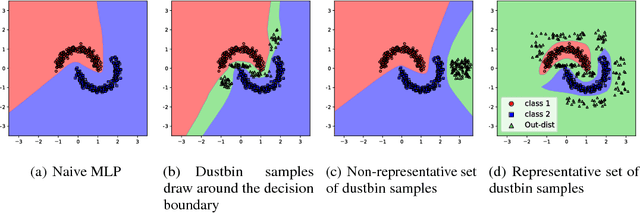

Abstract: Convolutional Neural Networks (CNNs) significantly improve the state-of-the-art for many applications, especially in computer vision. However, CNNs still suffer from a tendency to confidently classify out-distribution samples from unknown classes into pre-defined known classes. Further, they are also vulnerable to adversarial examples. We relate these two issues through the tendency of CNNs to over-generalize in areas of the input space not covered well by the training set. We show that a CNN augmented with an extra output class can act as a simple yet effective end-to-end model for controlling over-generalization. As an appropriate training set for the extra class, we introduce two resources that are computationally efficient to obtain: a representative natural out-distribution set and interpolated in-distribution samples. To help select a representative natural out-distribution set among available ones, we propose a simple measurement to assess an out-distribution set's fitness. We also demonstrate that training such an augmented CNN with representative out-distribution natural datasets and some interpolated samples allows it to better handle a wide range of unseen out-distribution samples and black-box adversarial examples without training it on any adversaries. Finally, we show that generating white-box adversarial attacks against our proposed augmented CNN can become harder, as the attack algorithms have to get around the rejection regions when generating actual adversaries.
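One of the two resources for the extra class, interpolated in-distribution samples, can be sketched as follows. The abstract does not say how pairs are chosen or how they are mixed, so this sketch assumes linear interpolation between samples of different classes with a fixed mixing weight; `interpolate`, `make_reject_samples`, and `alpha` are hypothetical names.

```python
def interpolate(x_a, x_b, alpha):
    """Linear interpolation between two feature vectors."""
    return [alpha * a + (1 - alpha) * b for a, b in zip(x_a, x_b)]


def make_reject_samples(dataset, num_classes, alpha=0.5):
    """Build training samples for the extra (rejection) class.

    dataset: list of (features, label) pairs with labels in [0, num_classes).
    Each pair of samples from DIFFERENT classes yields one interpolated
    sample labelled with the extra class index `num_classes`.
    """
    reject_label = num_classes
    samples = []
    for i, (x_a, y_a) in enumerate(dataset):
        for x_b, y_b in dataset[i + 1:]:
            if y_a != y_b:
                samples.append((interpolate(x_a, x_b, alpha), reject_label))
    return samples


# Toy two-class dataset; the extra class therefore gets index 2.
data = [([0.0, 0.0], 0), ([2.0, 4.0], 1), ([0.0, 2.0], 0)]
reject = make_reject_samples(data, num_classes=2)
print(reject)
```

The interpolated points fall between class regions, which is where a vanilla CNN would otherwise over-generalize with a confident prediction.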
