Azadeh Sadat Mozafari

A Novel Unsupervised Post-Processing Calibration Method for DNNs with Robustness to Domain Shift

Nov 25, 2019

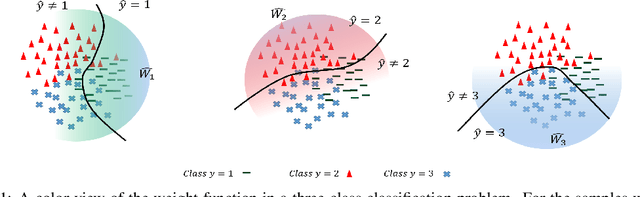

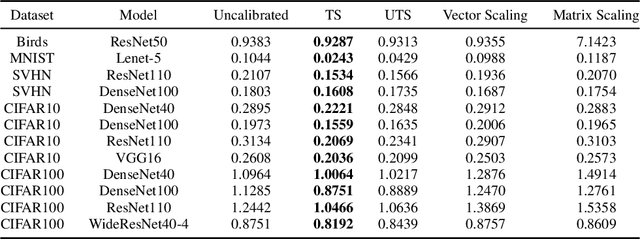

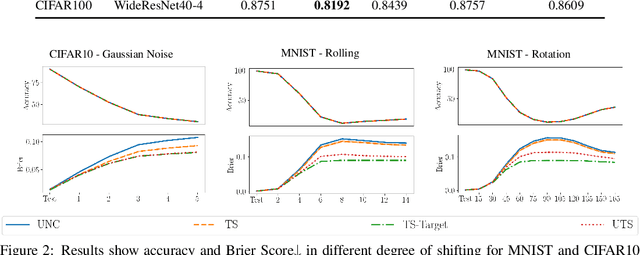

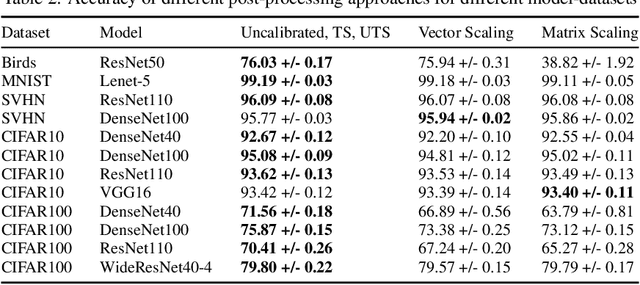

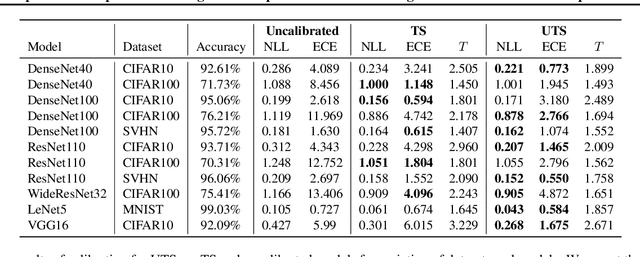

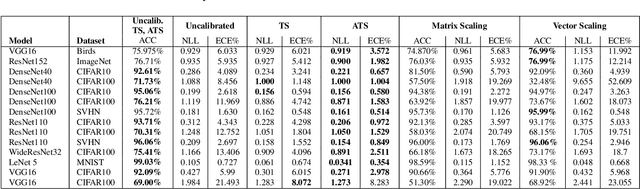

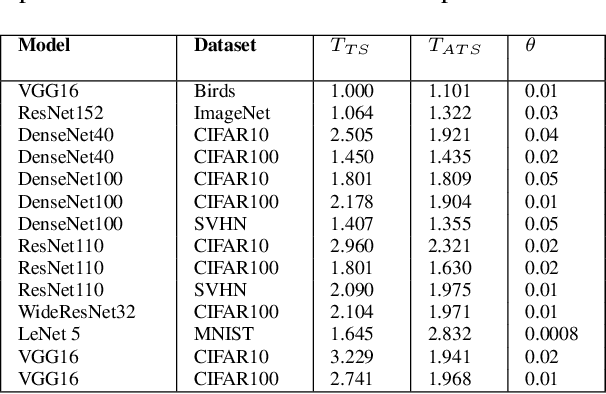

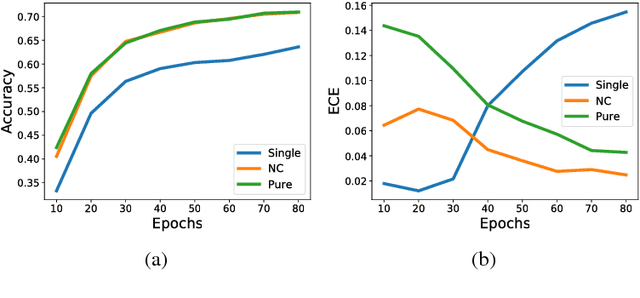

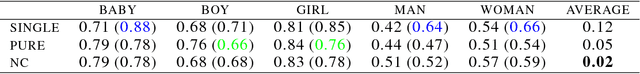

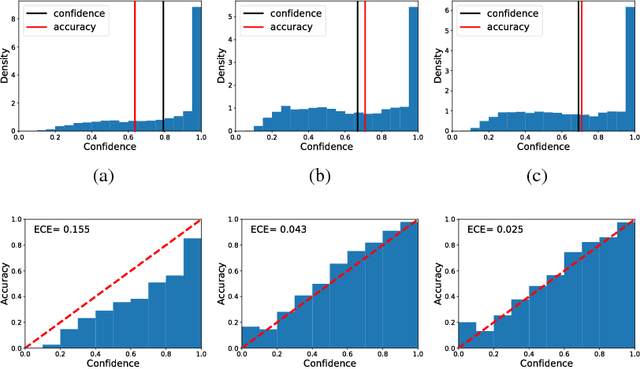

Abstract: Uncertainty estimation is critical in real-world decision-making applications, especially when distributional shift between the training and test data is prevalent. Many calibration methods have been proposed in the literature to improve the predictive uncertainty of DNNs, which are generally not well calibrated. However, none of them is specifically designed to work properly under domain shift. In this paper, we propose Unsupervised Temperature Scaling (UTS), a calibration method that is robust to domain shift. It exploits unlabeled test samples instead of training samples to adjust the uncertainty prediction of deep models towards the test distribution. UTS uses a novel loss function, weighted NLL, which allows unsupervised calibration. We evaluate UTS on a wide range of model-dataset pairs to show that calibration without labels is possible and to demonstrate the robustness of UTS compared to other methods (e.g., TS, MC-dropout, SVI, ensembles) in shifted domains.

Unsupervised Temperature Scaling: Post-Processing Unsupervised Calibration of Deep Models Decisions

May 08, 2019

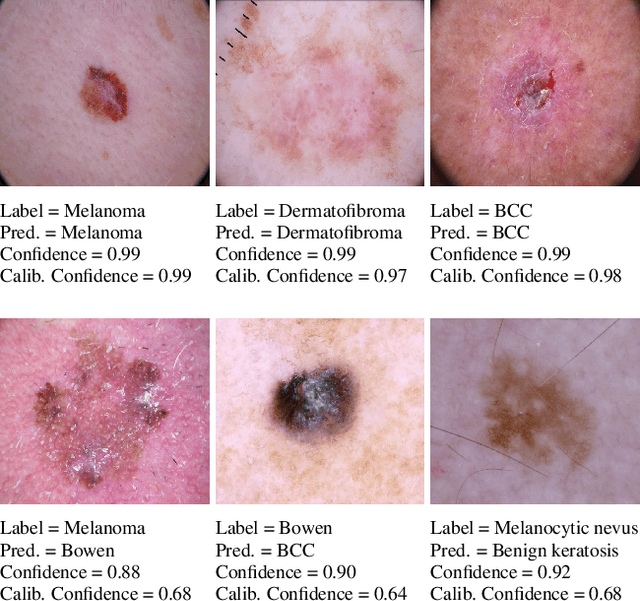

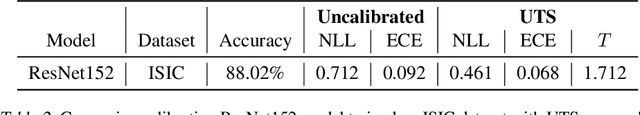

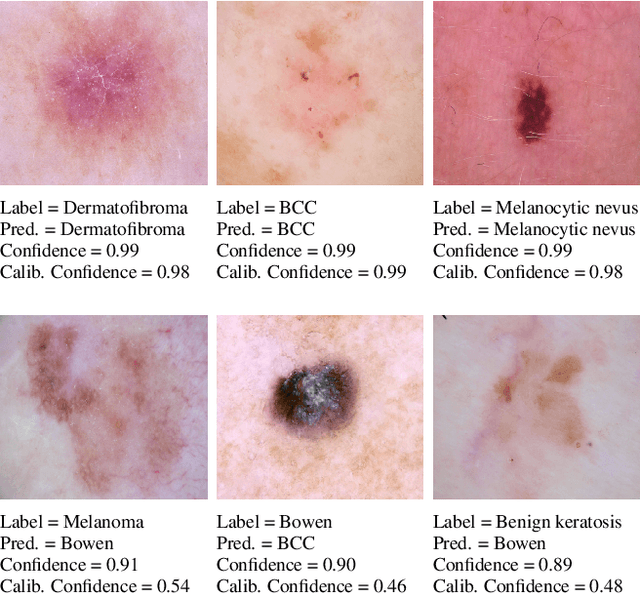

Abstract: The strong performance of deep learning is undeniable, with impressive results on a wide range of tasks. However, the output confidence of these models is usually not well calibrated, which can be an issue for applications where confidence in the decisions is central to trust and reliability (e.g., autonomous driving or medical diagnosis). For models using softmax at the last layer, Temperature Scaling (TS) is a state-of-the-art calibration method, with low time and memory complexity as well as demonstrated effectiveness. TS relies on a parameter T to rescale and calibrate the values of the softmax layer, using a labelled dataset to determine the value of that parameter. We propose an Unsupervised Temperature Scaling (UTS) approach, which does not depend on labelled samples to calibrate the model, allowing, for example, part of the test samples to be used for calibrating the pre-trained model before going into inference mode. We provide theoretical justifications for UTS and assess its effectiveness on a wide range of deep models and datasets. We also demonstrate calibration results of UTS on skin lesion detection, a problem where a well-calibrated output can play an important role in accurate decision-making.
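
For reference, the rescaling that TS applies is usually written as follows. The abstract does not give the formula, so the symbols are assumed notation: $z$ is the logit vector for one input, $K$ the number of classes, and $\hat{p}_k$ the calibrated probability of class $k$.

$$
\hat{p}_k = \frac{\exp(z_k / T)}{\sum_{j=1}^{K} \exp(z_j / T)}, \qquad T > 0
$$

Setting $T = 1$ recovers the ordinary softmax, while $T > 1$ flattens the distribution. Because dividing by a positive $T$ preserves the ordering of the logits, the predicted class, and therefore the accuracy, is unchanged.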

A New Loss Function for Temperature Scaling to have Better Calibrated Deep Networks

Oct 27, 2018

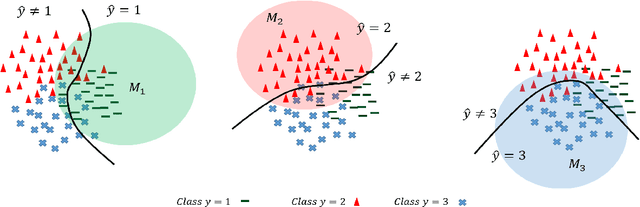

Abstract: Although deep neural networks have recently achieved impressive results on a variety of tasks, they suffer from poor uncertainty prediction. Temperature Scaling (TS) is an efficient post-processing method for calibrating DNNs to obtain more accurate uncertainty predictions. TS relies on a single parameter T, which softens the logit layer of a DNN; its optimal value is found by minimizing the Negative Log Likelihood (NLL) loss function. In this paper, we discuss the weaknesses of the NLL loss function, especially for DNNs with high accuracy, and propose a new loss function called Attended-NLL, which can significantly improve the calibration ability of TS.
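
A minimal sketch of standard TS fitted with the plain NLL loss, as described in the abstract. It does not implement Attended-NLL, whose form the abstract does not give. The function names, the grid search, and the grid bounds are illustrative assumptions, not the authors' code.

```python
import numpy as np

def softmax(logits, T=1.0):
    """Row-wise softmax of logits divided by temperature T."""
    z = logits / T
    z = z - z.max(axis=1, keepdims=True)  # shift for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def nll(logits, labels, T=1.0):
    """Mean negative log likelihood of the true labels at temperature T."""
    p = softmax(logits, T)
    return -np.mean(np.log(p[np.arange(len(labels)), labels] + 1e-12))

def fit_temperature(logits, labels, grid=None):
    """Pick the T that minimizes NLL on a labelled held-out set.

    A grid search is an assumption made for simplicity; any scalar
    optimizer could be used instead.
    """
    if grid is None:
        grid = np.linspace(0.05, 10.0, 400)  # hypothetical search range
    losses = [nll(logits, labels, T) for T in grid]
    return float(grid[int(np.argmin(losses))])
```

In use, `fit_temperature` would be called once on validation logits and labels, and the returned T applied through `softmax(test_logits, T)` at inference time.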

Controlling Over-generalization and its Effect on Adversarial Examples Generation and Detection

Oct 03, 2018

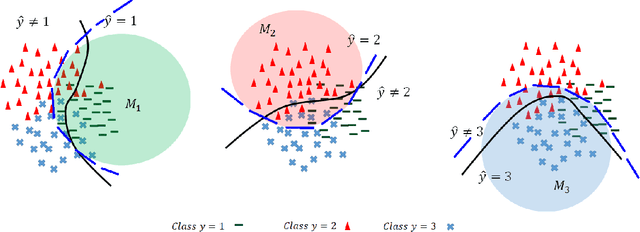

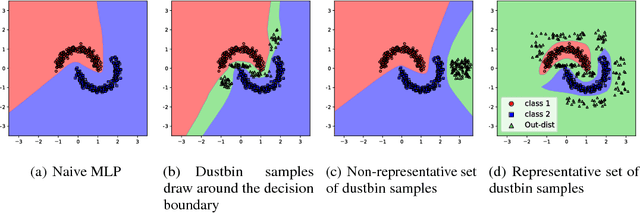

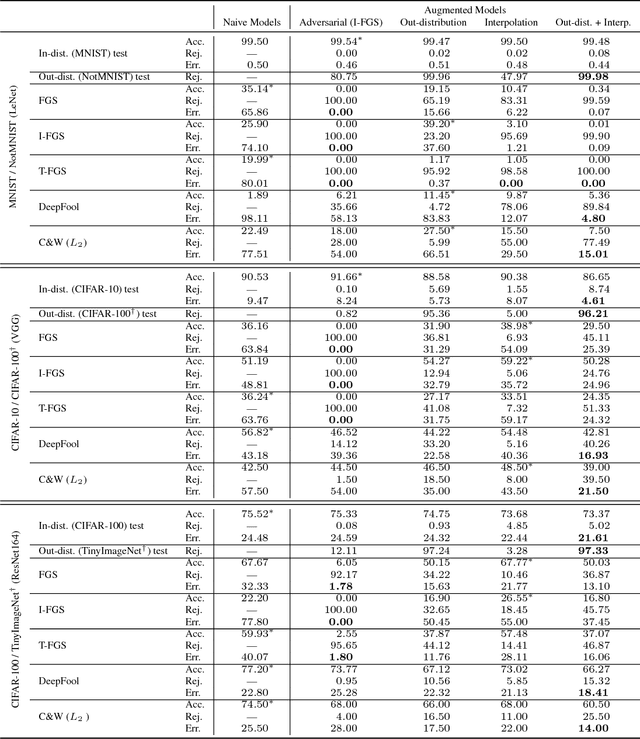

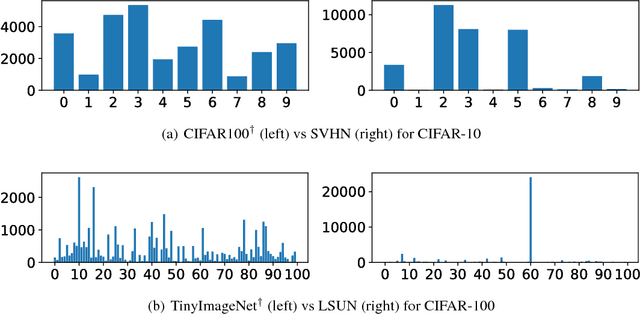

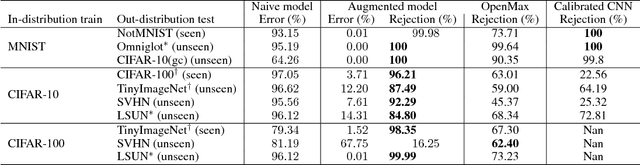

Abstract: Convolutional Neural Networks (CNNs) significantly improve the state-of-the-art for many applications, especially in computer vision. However, CNNs still suffer from a tendency to confidently classify out-distribution samples from unknown classes into pre-defined known classes. Further, they are also vulnerable to adversarial examples. We relate these two issues through the tendency of CNNs to over-generalize in areas of the input space not covered well by the training set. We show that a CNN augmented with an extra output class can act as a simple yet effective end-to-end model for controlling over-generalization. As an appropriate training set for the extra class, we introduce two resources that are computationally efficient to obtain: a representative natural out-distribution set and interpolated in-distribution samples. To help select a representative natural out-distribution set among the available ones, we propose a simple measurement to assess an out-distribution set's fitness. We also demonstrate that training such an augmented CNN with representative natural out-distribution datasets and some interpolated samples allows it to better handle a wide range of unseen out-distribution samples and black-box adversarial examples without training it on any adversaries. Finally, we show that generating white-box adversarial attacks against our proposed augmented CNN can become harder, as the attack algorithms have to get around the rejection regions when generating actual adversaries.
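
One way the interpolated in-distribution samples for the extra class could be built is sketched below. The abstract does not specify the interpolation scheme, so the linear mixing, the `alpha` weight, the random pairing of samples from different classes, and the function name are all assumptions made for illustration.

```python
import numpy as np

def make_interpolated_rejects(x, y, num_classes, alpha=0.5, rng=None):
    """Build training samples for the extra (rejection) class.

    Assumed scheme: linearly mix randomly paired in-distribution
    samples that belong to different classes, and label every mixed
    sample with the extra class index `num_classes`.
    """
    rng = np.random.default_rng() if rng is None else rng
    perm = rng.permutation(len(x))
    mask = y != y[perm]  # keep only pairs drawn from two different classes
    x_mix = alpha * x[mask] + (1.0 - alpha) * x[perm][mask]
    y_mix = np.full(int(mask.sum()), num_classes)  # extra class label
    return x_mix, y_mix
```

The returned arrays would be concatenated with the original training set and any natural out-distribution samples before training a classifier with `num_classes + 1` outputs.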

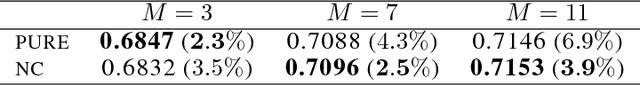

Diversity regularization in deep ensembles

Feb 22, 2018

Abstract: Calibrating the confidence of supervised learning models is important in a variety of contexts where the certainty of predictions should be reliable. However, it has been reported that deep neural network models are often too poorly calibrated for complex tasks that require reliable uncertainty estimates for their predictions. In this work, we propose a strategy for training deep ensembles with a diversity function regularization, which improves calibration while maintaining similar prediction accuracy.
