Denis Laurendeau

Fast, Accurate and Object Boundary-Aware Surface Normal Estimation from Depth Maps

Sep 17, 2022

Abstract: This paper proposes a fast and accurate surface normal estimation method that can be applied directly to depth maps (organized point clouds). The surface normal estimation process is formulated as a closed-form expression. To reduce the effect of measurement noise, averaging is applied in a multi-direction manner. The multi-direction normal estimation process is then reformulated so that it can be implemented efficiently. Finally, a simple yet effective method is proposed to remove erroneous normal estimates at depth discontinuities. The proposed method is compared to well-known surface normal estimation algorithms. The results show that the proposed algorithm not only outperforms the baseline algorithms in terms of accuracy, but is also fast enough to be used in real-time applications.
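
As a rough illustration of what estimating normals directly on an organized depth map involves, the sketch below uses plain central differences on a height field. It is not the paper's closed-form multi-direction method, and it has no noise averaging or discontinuity handling. The function name `normals_from_depth`, the unit pixel spacing, and the orthographic (height-field) assumption are all illustrative choices, not taken from the paper.

```python
import math


def normals_from_depth(depth):
    """Estimate unit normals for a depth map given as a list of rows.

    Assumes a height field z(x, y) with unit pixel spacing, so the
    unnormalized normal is (-dz/dx, -dz/dy, 1). Border pixels are left
    as None because central differences need both neighbours.
    """
    rows, cols = len(depth), len(depth[0])
    normals = [[None] * cols for _ in range(rows)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            # Central differences along the column (x) and row (y) axes.
            dzdx = (depth[r][c + 1] - depth[r][c - 1]) / 2.0
            dzdy = (depth[r + 1][c] - depth[r - 1][c]) / 2.0
            nx, ny, nz = -dzdx, -dzdy, 1.0
            length = math.sqrt(nx * nx + ny * ny + nz * nz)
            normals[r][c] = (nx / length, ny / length, nz / length)
    return normals
```

On a flat depth map every interior normal points along the z axis. On a ramp whose depth grows along x, the normal tilts against the slope. A baseline like this is sensitive to noise and produces wrong normals across depth discontinuities, which are the two issues the abstract says the proposed method addresses.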

Deep SVBRDF Estimation on Real Materials

Oct 08, 2020

Abstract: Recent work has demonstrated that deep learning approaches can successfully be used to recover accurate estimates of the spatially-varying BRDF (SVBRDF) of a surface from as little as a single image. Closer inspection reveals, however, that most approaches in the literature are trained purely on synthetic data, which, while diverse and realistic, is often not representative of the richness of the real world. In this paper, we show that training such networks exclusively on synthetic data is insufficient to achieve adequate results when tested on real data. Our analysis leverages a new dataset of real materials obtained with a novel portable multi-light capture apparatus. Through an extensive series of experiments and with the use of a novel deep learning architecture, we explore two strategies for improving results on real data: finetuning, and a per-material optimization procedure. We show that adapting network weights to real data is of critical importance, resulting in an approach which significantly outperforms previous methods for SVBRDF estimation on real materials. Dataset and code are available at https://lvsn.github.io/real-svbrdf

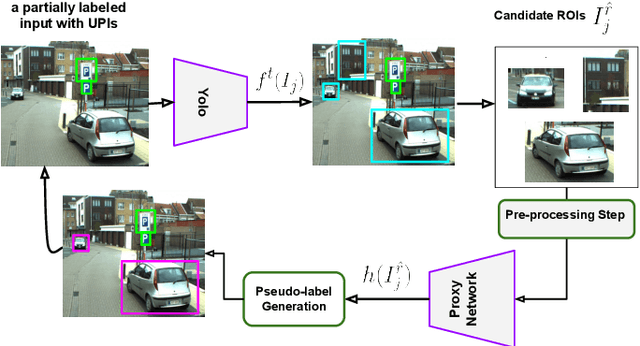

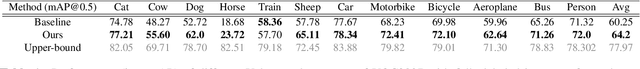

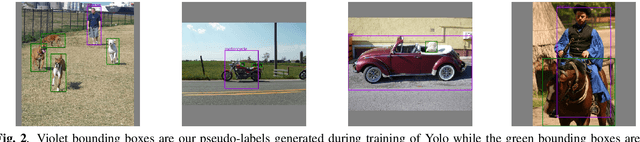

Self-supervised Robust Object Detectors from Partially Labelled datasets

May 23, 2020

Abstract: In the object detection task, merging various datasets from similar contexts but with different sets of Objects of Interest (OoI) is an inexpensive way (in terms of labor cost) to craft a large-scale dataset covering a wide range of objects. Moreover, merging datasets allows us to train one integrated object detector instead of several, which in turn reduces computational and time costs. However, merging datasets from similar contexts produces samples with partial labeling, as each constituent dataset is originally annotated for its own set of OoI and leaves unannotated those objects that become of interest only after the datasets are merged. With the goal of training one integrated robust object detector with high generalization performance, we propose a training framework to overcome the missing-label challenge of the merged datasets. More specifically, we propose a computationally efficient self-supervised framework that creates on-the-fly pseudo-labels for the unlabelled positive instances in the merged dataset, in order to train the object detector jointly on both ground truth and pseudo-labels. We evaluate our proposed framework for training Yolo on a simulated merged dataset with a missing rate of approximately 48% using VOC2012 and VOC2007. We empirically show that the generalization performance of Yolo trained on both the ground truth and the pseudo-labels created by our method is on average 4% higher than that of Yolo trained only with the ground truth labels of the merged dataset.

A Framework for Evaluating 6-DOF Object Trackers

Sep 06, 2018

Abstract: We present a challenging and realistic novel dataset for evaluating 6-DOF object tracking algorithms. Existing datasets show serious limitations (notably, unrealistic synthetic data, or real data with large fiducial markers), preventing the community from obtaining an accurate picture of the state-of-the-art. Using a data acquisition pipeline based on a commercial motion capture system for acquiring accurate ground truth poses of real objects with respect to a Kinect V2 camera, we build a dataset which contains a total of 297 calibrated sequences. They are acquired in three different scenarios to evaluate the performance of trackers: stability, robustness to occlusion and accuracy during challenging interactions between a person and the object. We conduct an extensive study of a deep 6-DOF tracking architecture and determine a set of optimal parameters. We enhance the architecture and the training methodology to train a 6-DOF tracker that can robustly generalize to objects never seen during training, and demonstrate favorable performance compared to previous approaches trained specifically on the objects to track.

Sustainable Cooperative Coevolution with a Multi-Armed Bandit

Apr 10, 2013

Abstract: This paper proposes a self-adaptation mechanism to manage the resources allocated to the different species comprising a cooperative coevolutionary algorithm. The proposed approach relies on a dynamic extension to the well-known multi-armed bandit framework. At each iteration, the dynamic multi-armed bandit decides which species to evolve for a generation, using the history of progress made by the different species to guide its decisions. We show experimentally, on a benchmark and a real-world problem, that evolving the different populations at different paces not only identifies solutions more rapidly, but also improves the capacity of cooperative coevolution to solve more complex problems.
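
The idea of letting a bandit choose which species to evolve can be sketched with the standard, stationary UCB1 rule. This is a swapped-in baseline, not the dynamic multi-armed bandit extension the abstract describes. The class name `UCB1`, the helper `allocate_generations`, and the fixed per-species progress values are hypothetical and stand in for the fitness improvement a real coevolutionary run would measure.

```python
import math


class UCB1:
    """Standard UCB1 bandit: one arm per species (illustrative only)."""

    def __init__(self, n_arms):
        self.counts = [0] * n_arms   # generations given to each species
        self.means = [0.0] * n_arms  # running mean of observed progress

    def select(self):
        # Try every arm once before using the confidence bound.
        for arm, n in enumerate(self.counts):
            if n == 0:
                return arm
        total = sum(self.counts)
        scores = [
            mean + math.sqrt(2.0 * math.log(total) / n)
            for mean, n in zip(self.means, self.counts)
        ]
        return max(range(len(scores)), key=scores.__getitem__)

    def update(self, arm, reward):
        # Incremental update of the running mean reward for this arm.
        self.counts[arm] += 1
        self.means[arm] += (reward - self.means[arm]) / self.counts[arm]


def allocate_generations(progress_per_species, steps):
    """Spend `steps` generations, one species at a time.

    `progress_per_species` is a hypothetical fixed reward per species;
    a real run would measure the fitness gain after each generation.
    """
    bandit = UCB1(len(progress_per_species))
    for _ in range(steps):
        species = bandit.select()
        bandit.update(species, progress_per_species[species])
    return bandit.counts
```

With fixed progress values, the species that improves more per generation ends up with most of the budget, while the other is still sampled occasionally. A stationary rule such as UCB1 adapts slowly when a species' rate of progress changes during the run, which is presumably the motivation for the dynamic extension mentioned in the abstract.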
