Cheng Ding

Mimic Human Cognition, Master Multi-Image Reasoning: A Meta-Action Framework for Enhanced Visual Understanding

Jan 12, 2026

Abstract:While Multimodal Large Language Models (MLLMs) excel at single-image understanding, they exhibit significantly degraded performance in multi-image reasoning scenarios. Multi-image reasoning presents fundamental challenges, including complex inter-relationships between images and critical information scattered across image sets. Inspired by human cognitive processes, we propose the Cognition-Inspired Meta-Action Framework (CINEMA), a novel approach that decomposes multi-image reasoning into five structured meta-actions: Global, Focus, Hint, Think, and Answer, which explicitly model the sequential cognitive steps humans naturally employ. For cold-start training, we introduce a Retrieval-Based Tree Sampling strategy that generates high-quality meta-action trajectories to bootstrap the model with reasoning patterns. During reinforcement learning, we adopt a two-stage paradigm: an exploration phase with a Diversity-Preserving Strategy to avoid entropy collapse, followed by an annealed exploitation phase with DAPO to gradually strengthen exploitation. To train our model, we construct a dataset of 57k cold-start and 58k reinforcement learning instances spanning multi-image, multi-frame, and single-image tasks. We conduct extensive evaluations on multi-image reasoning benchmarks, video understanding benchmarks, and single-image benchmarks, achieving competitive state-of-the-art performance on several key benchmarks. Our model surpasses GPT-4o on the MUIR and MVMath benchmarks and notably outperforms specialized video reasoning models on video understanding benchmarks, demonstrating the effectiveness and generalizability of our human cognition-inspired reasoning framework.

GPT-PPG: A GPT-based Foundation Model for Photoplethysmography Signals

Mar 11, 2025

Abstract:This study introduces a novel application of a Generative Pre-trained Transformer (GPT) model tailored for photoplethysmography (PPG) signals, serving as a foundation model for various downstream tasks. Adapting the standard GPT architecture to suit the continuous characteristics of PPG signals, our approach demonstrates promising results. Our models are pre-trained on our extensive dataset that contains more than 200 million 30s PPG samples. We explored different supervised fine-tuning techniques to adapt our model to downstream tasks, resulting in performance comparable to or surpassing current state-of-the-art (SOTA) methods in tasks like atrial fibrillation detection. A standout feature of our GPT model is its inherent capability to perform generative tasks such as signal denoising effectively, without the need for further fine-tuning. This success is attributed to the generative nature of the GPT framework.

Foundation Models in Electrocardiogram: A Review

Oct 24, 2024

Abstract:The electrocardiogram (ECG) is ubiquitous across various healthcare domains, such as cardiac arrhythmia detection and sleep monitoring, making ECG analysis essential. Traditional deep learning models for ECG are task-specific, with a narrow scope of functionality and limited generalization capabilities. Recently, foundation models (FMs), also known as large pre-training models, have fundamentally reshaped the scheme of model design and representation learning, enhancing the performance across a variety of downstream tasks. This success has drawn interest in the exploration of FMs to address ECG-based medical challenges concurrently. This survey provides a timely, comprehensive, and up-to-date overview of large-scale ECG foundation models (ECG-FMs). First, we offer a brief background introduction to FMs. Then, we discuss the model architectures, pre-training methods, and adaptation approaches of ECG-FMs from a methodology perspective. Despite the promising opportunities of ECG-FMs, we also outline the challenges and potential future directions. Overall, this survey aims to provide researchers and practitioners with insights into the research of ECG-FMs on theoretical underpinnings, domain-specific applications, and avenues for future exploration.

Human Stone Toolmaking Action Grammar (HSTAG): A Challenging Benchmark for Fine-grained Motor Behavior Recognition

Oct 10, 2024

Abstract:Action recognition has witnessed the development of a growing number of novel algorithms and datasets in the past decade. However, the majority of public benchmarks were constructed around activities of daily living and annotated at a rather coarse-grained level, leaving a lack of diversity in domain-specific datasets, especially for rarely seen domains. In this paper, we introduce Human Stone Toolmaking Action Grammar (HSTAG), a meticulously annotated video dataset showcasing previously undocumented stone toolmaking behaviors, which can be used for investigating the applications of advanced artificial intelligence techniques in understanding a rapid succession of complex interactions between two hand-held objects. HSTAG consists of 18,739 video clips that record 4.5 hours of experts' activities in stone toolmaking. Its unique features include (i) brief action durations and frequent transitions, mirroring the rapid changes inherent in many motor behaviors; (ii) multiple angles of view and switches among multiple tools, increasing intra-class variability; (iii) unbalanced class distributions and high similarity among different action sequences, adding difficulty in capturing distinct patterns for each action. Several mainstream action recognition models are used to conduct experimental analysis, which showcases the challenges and uniqueness of HSTAG (https://nyu.databrary.org/volume/1697).

Deep Learning for Personalized Electrocardiogram Diagnosis: A Review

Sep 12, 2024

Abstract:The electrocardiogram (ECG) remains a fundamental tool in cardiac diagnostics, yet its interpretation is traditionally reliant on the expertise of cardiologists. The emergence of deep learning has heralded a revolutionary era in medical data analysis, particularly in the domain of ECG diagnostics. However, inter-patient variability limits the generalizability of ECG-AI models trained on a population dataset, and hence degrades their performance on specific patients or patient groups. Many studies have addressed this challenge using different deep learning technologies. This comprehensive review systematically synthesizes research from a wide range of studies to provide an in-depth examination of cutting-edge deep-learning techniques in personalized ECG diagnosis. The review outlines a rigorous methodology for the selection of pertinent scholarly articles and offers a comprehensive overview of deep learning approaches applied to personalized ECG diagnostics. Moreover, the challenges these methods encounter are investigated, along with future research directions, culminating in insights into how the integration of deep learning can transform personalized ECG diagnosis and enhance cardiac care. By emphasizing both the strengths and limitations of current methodologies, this review underscores the immense potential of deep learning to refine and redefine ECG analysis in clinical practice, paving the way for more accurate, efficient, and personalized cardiac diagnostics.

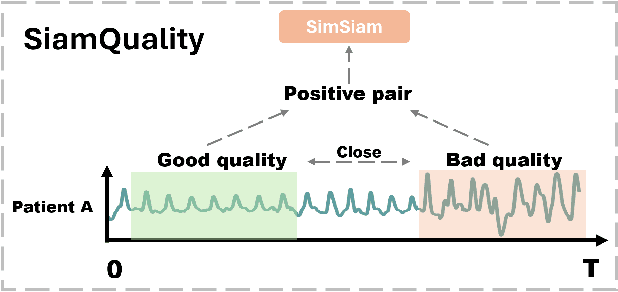

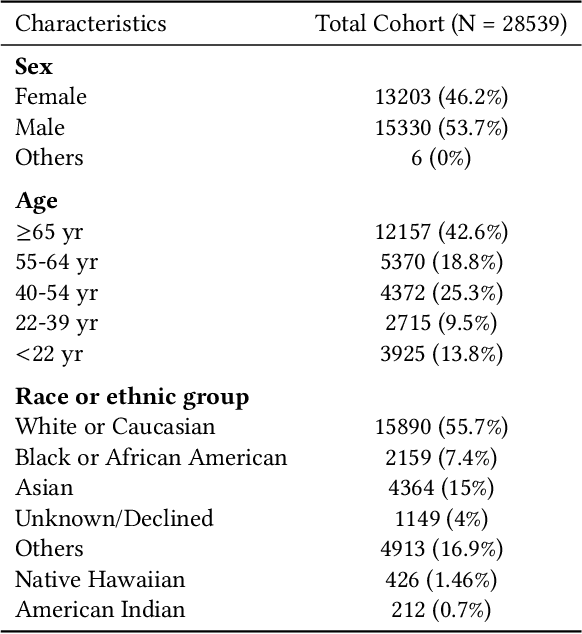

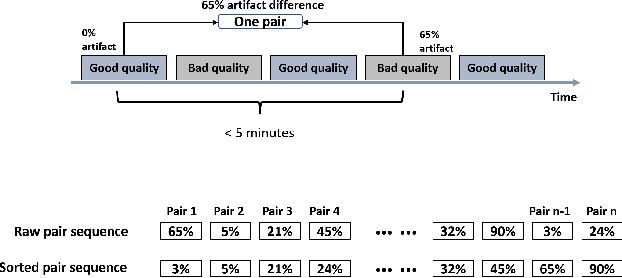

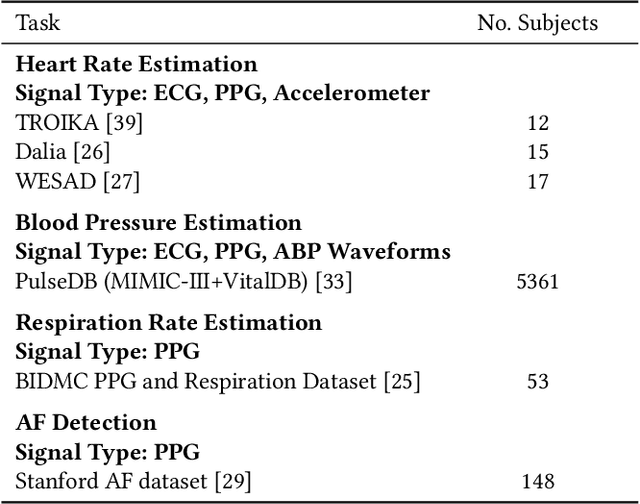

SiamQuality: A ConvNet-Based Foundation Model for Imperfect Physiological Signals

Apr 26, 2024

Abstract:Foundation models, especially those using transformers as backbones, have gained significant popularity, particularly in language and language-vision tasks. However, large foundation models are typically trained on high-quality data, which poses a significant challenge, given the prevalence of poor-quality real-world data. This challenge is more pronounced for developing foundation models for physiological data; such data are often noisy, incomplete, or inconsistent. The present work aims to provide a toolset for developing foundation models on physiological data. We leverage a large dataset of photoplethysmography (PPG) signals from hospitalized intensive care patients. For this data, we propose SiamQuality, a novel self-supervised learning task based on convolutional neural networks (CNNs) as the backbone to enforce representations to be similar for good and poor quality signals that are from similar physiological states. We pre-trained SiamQuality on over 36 million 30-second PPG pairs and then fine-tuned and tested it on six downstream tasks using external datasets. The results demonstrate the superiority of the proposed approach on all the downstream tasks, which are extremely important for heart monitoring on wearable devices. Our method indicates that CNNs can be an effective backbone for foundation models that are robust to training data quality.

SQUWA: Signal Quality Aware DNN Architecture for Enhanced Accuracy in Atrial Fibrillation Detection from Noisy PPG Signals

Apr 15, 2024

Abstract:Atrial fibrillation (AF), a common cardiac arrhythmia, significantly increases the risk of stroke, heart disease, and mortality. Photoplethysmography (PPG) offers a promising solution for continuous AF monitoring, due to its cost efficiency and integration into wearable devices. Nonetheless, PPG signals are susceptible to corruption from motion artifacts and other factors often encountered in ambulatory settings. Conventional approaches typically discard corrupted segments or attempt to reconstruct original signals, allowing for the use of standard machine learning techniques. However, this reduces dataset size and introduces biases, compromising prediction accuracy and the effectiveness of continuous monitoring. We propose a novel deep learning model, Signal Quality Weighted Fusion of Attentional Convolution and Recurrent Neural Network (SQUWA), designed to learn how to retain accurate predictions from partially corrupted PPG. Specifically, SQUWA integrates an attention mechanism that directly considers signal quality during the learning process, dynamically adjusting the weights of time series segments based on their quality. This approach enhances the influence of higher-quality segments while reducing that of lower-quality ones, effectively utilizing partially corrupted segments. It represents a departure from conventional methods that exclude such segments, enabling the use of a broader range of data, which has important implications for less disruptive monitoring of AF risk and more accurate estimation of AF burden. Our extensive experiments show that SQUWA outperforms existing PPG-based models, achieving the highest AUCPR of 0.89 with label noise mitigation. This also exceeds the 0.86 AUCPR of models trained using both electrocardiogram (ECG) and PPG data.
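
The quality-weighting idea described in the abstract can be illustrated with a minimal sketch. This is not the SQUWA architecture: the function name `quality_weighted_pool`, the `temperature` parameter, and the use of a fixed softmax over quality scores are all assumptions made for illustration, whereas the paper describes a learned attention mechanism. The sketch only shows how per-segment predictions might be fused so that higher-quality segments carry more weight.

```python
import math


def quality_weighted_pool(segment_scores, segment_quality, temperature=1.0):
    """Fuse per-segment AF scores with softmax weights derived from signal quality.

    Hypothetical helper for illustration only; the real model learns its
    attention weights rather than computing them from a fixed formula.
    """
    if not segment_scores or len(segment_scores) != len(segment_quality):
        raise ValueError("scores and quality must be non-empty and equal length")

    # Softmax over quality values (shifted by the max for numerical stability).
    logits = [q / temperature for q in segment_quality]
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    weights = [e / total for e in exps]

    # Weighted sum: low-quality segments still contribute, just less.
    fused = sum(w * s for w, s in zip(weights, segment_scores))
    return fused, weights


# Example: the clean first segment pulls the fused score toward its prediction.
fused_score, attn = quality_weighted_pool([0.9, 0.1], [1.0, 0.0])
```

Lowering the hypothetical `temperature` sharpens the weighting toward the cleanest segments, while raising it approaches a plain average, so no segment is discarded outright.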

Evaluation of General Large Language Models in Contextually Assessing Semantic Concepts Extracted from Adult Critical Care Electronic Health Record Notes

Jan 24, 2024

Abstract:The field of healthcare has increasingly turned its focus towards Large Language Models (LLMs) due to their remarkable performance. However, their performance in actual clinical applications has been underexplored. Traditional evaluations based on question-answering tasks don't fully capture the nuanced contexts. This gap highlights the need for more in-depth and practical assessments of LLMs in real-world healthcare settings. Objective: We sought to evaluate the performance of LLMs in the complex clinical context of adult critical care medicine using systematic and comprehensible analytic methods, including clinician annotation and adjudication. Methods: We investigated the performance of three general LLMs in understanding and processing real-world clinical notes. Concepts from 150 clinical notes were identified by MetaMap and then labeled by 9 clinicians. Each LLM's proficiency was evaluated by identifying the temporality and negation of these concepts using different prompts for an in-depth analysis. Results: GPT-4 showed overall superior performance compared to other LLMs. In contrast, both GPT-3.5 and text-davinci-003 exhibit enhanced performance when the appropriate prompting strategies are employed. The GPT family models have demonstrated considerable efficiency, evidenced by their cost-effectiveness and time-saving capabilities. Conclusion: A comprehensive qualitative performance evaluation framework for LLMs is developed and operationalized. This framework goes beyond singular performance aspects. With expert annotations, this methodology not only validates LLMs' capabilities in processing complex medical data but also establishes a benchmark for future LLM evaluations across specialized domains.

Reconsideration on evaluation of machine learning models in continuous monitoring using wearables

Dec 04, 2023

Abstract:This paper explores the challenges in evaluating machine learning (ML) models for continuous health monitoring using wearable devices beyond conventional metrics. We state the complexities posed by real-world variability, disease dynamics, user-specific characteristics, and the prevalence of false notifications, necessitating novel evaluation strategies. Drawing insights from large-scale heart studies, the paper offers a comprehensive guideline for robust ML model evaluation on continuous health monitoring.

Photoplethysmography based atrial fibrillation detection: an updated review from July 2019

Oct 22, 2023

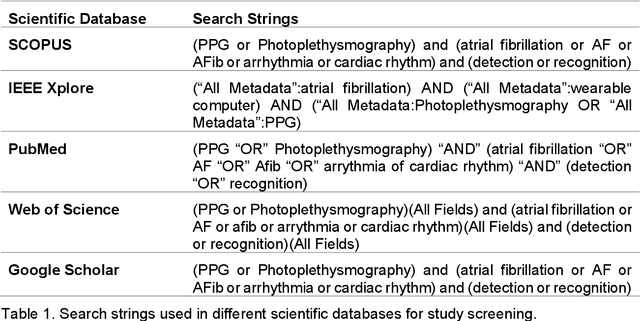

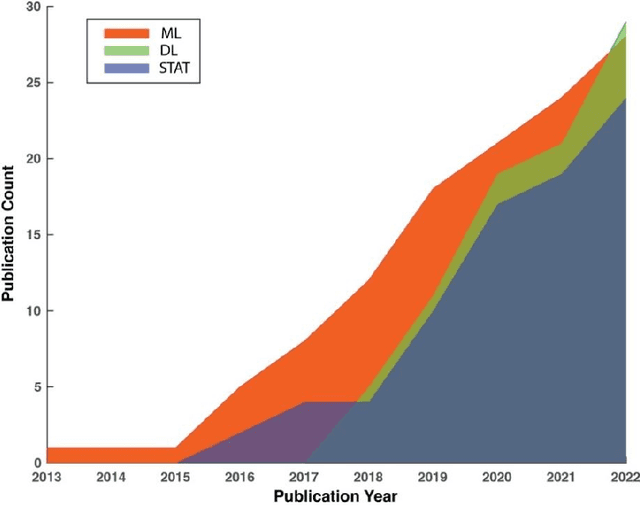

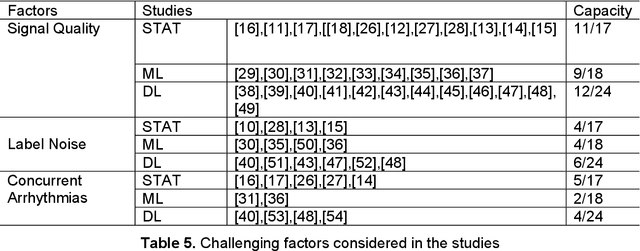

Abstract:Atrial fibrillation (AF) is a prevalent cardiac arrhythmia associated with significant health ramifications, including an elevated susceptibility to ischemic stroke, heart disease, and heightened mortality. Photoplethysmography (PPG) has emerged as a promising technology for continuous AF monitoring owing to its cost-effectiveness and widespread integration into wearable devices. Our team previously conducted an exhaustive review on PPG-based AF detection before June 2019. However, since then, more advanced technologies have emerged in this field. This paper offers a comprehensive review of the latest advancements in PPG-based AF detection, utilizing digital health and artificial intelligence (AI) solutions, within the timeframe spanning from July 2019 to December 2022. Through extensive exploration of scientific databases, we have identified 59 pertinent studies. Our comprehensive review encompasses an in-depth assessment of the statistical methodologies, traditional machine learning techniques, and deep learning approaches employed in these studies. In addition, we address the challenges encountered in the domain of PPG-based AF detection. Furthermore, we maintain a dedicated website to curate the latest research in this area, with regular updates.
