Randall J Lee

SiamQuality: A ConvNet-Based Foundation Model for Imperfect Physiological Signals

Apr 26, 2024

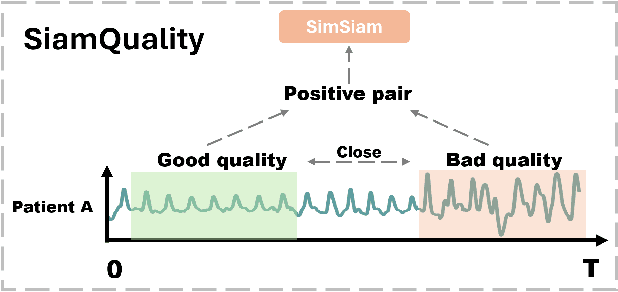

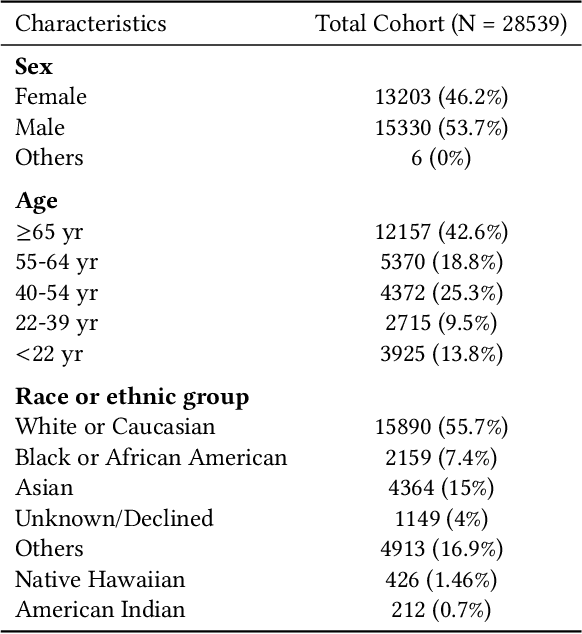

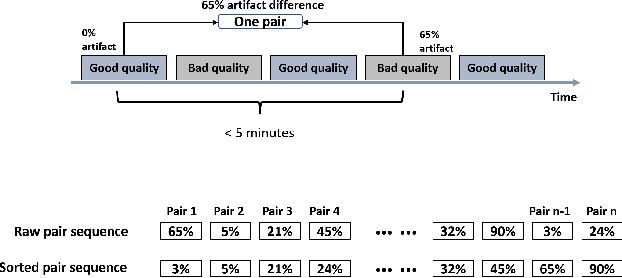

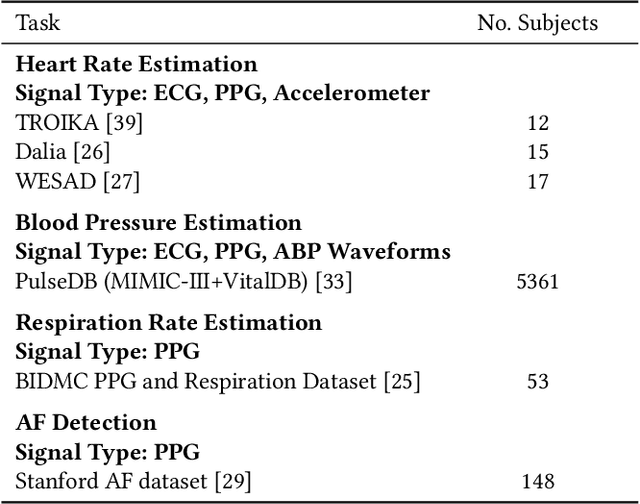

Abstract: Foundation models, especially those using transformers as backbones, have gained significant popularity, particularly in language and language-vision tasks. However, large foundation models are typically trained on high-quality data, which poses a significant challenge given the prevalence of poor-quality real-world data. This challenge is more pronounced when developing foundation models for physiological data; such data are often noisy, incomplete, or inconsistent. The present work aims to provide a toolset for developing foundation models on physiological data. We leverage a large dataset of photoplethysmography (PPG) signals from hospitalized intensive care patients. For these data, we propose SiamQuality, a novel self-supervised learning task that uses convolutional neural networks (CNNs) as the backbone and enforces similar representations for good- and poor-quality signals that come from similar physiological states. We pre-trained SiamQuality on over 36 million 30-second PPG pairs and then fine-tuned and tested it on six downstream tasks using external datasets. The results demonstrate the superiority of the proposed approach on all the downstream tasks, which are extremely important for heart monitoring on wearable devices. Our method indicates that CNNs can be an effective backbone for foundation models that are robust to training data quality.
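The abstract does not state the exact pre-training loss, so the following is only a minimal sketch of the general idea of pulling together the representations of a good-quality and a poor-quality signal from a similar physiological state. It assumes a negative cosine similarity objective over already-computed embeddings; the function names `cosine_similarity` and `pair_similarity_loss` are hypothetical and are not taken from the paper.

```python
import math


def cosine_similarity(u, v):
    """Cosine similarity between two non-zero embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def pair_similarity_loss(good_embeddings, poor_embeddings):
    """Mean negative cosine similarity over (good, poor) embedding pairs.

    Assumption: each pair holds the embeddings of a good-quality and a
    poor-quality segment from a similar physiological state. The loss is
    lowest (-1.0) when every pair points in the same direction.
    """
    sims = [
        cosine_similarity(g, p)
        for g, p in zip(good_embeddings, poor_embeddings)
    ]
    return -sum(sims) / len(sims)
```

In a real training loop the embeddings would come from the CNN backbone and the loss would be minimized by gradient descent; this sketch only shows what quantity such an objective could measure.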

SQUWA: Signal Quality Aware DNN Architecture for Enhanced Accuracy in Atrial Fibrillation Detection from Noisy PPG Signals

Apr 15, 2024

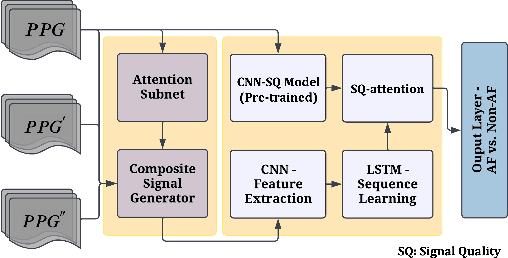

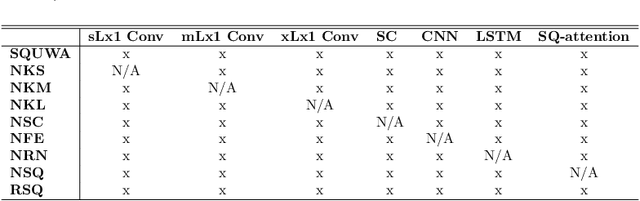

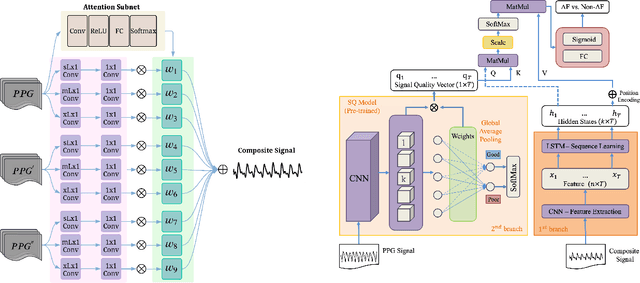

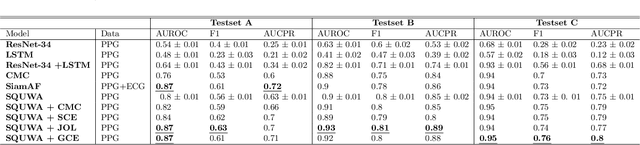

Abstract: Atrial fibrillation (AF), a common cardiac arrhythmia, significantly increases the risk of stroke, heart disease, and mortality. Photoplethysmography (PPG) offers a promising solution for continuous AF monitoring, due to its cost efficiency and integration into wearable devices. Nonetheless, PPG signals are susceptible to corruption from motion artifacts and other factors often encountered in ambulatory settings. Conventional approaches typically discard corrupted segments or attempt to reconstruct the original signals so that standard machine learning techniques can be applied. However, this reduces dataset size and introduces biases, compromising prediction accuracy and the effectiveness of continuous monitoring. We propose a novel deep learning model, Signal Quality Weighted Fusion of Attentional Convolution and Recurrent Neural Network (SQUWA), designed to learn how to retain accurate predictions from partially corrupted PPG. Specifically, SQUWA integrates an attention mechanism that directly considers signal quality during the learning process, dynamically adjusting the weights of time series segments based on their quality. This approach enhances the influence of higher-quality segments while reducing that of lower-quality ones, effectively utilizing partially corrupted segments. It departs from conventional methods that exclude such segments and enables the use of a broader range of data, which has important implications for less disrupted monitoring of AF risk and more accurate estimation of AF burden. Our extensive experiments show that SQUWA outperforms existing PPG-based models, achieving the highest AUCPR of 0.89 with label noise mitigation. This also exceeds the 0.86 AUCPR of models trained using both electrocardiogram (ECG) and PPG data.
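As a rough illustration of weighting time series segments by their quality, the sketch below biases softmax attention scores with a per-segment quality value before pooling segment features. This is an assumed formulation, not the SQUWA architecture itself: the abstract does not specify how quality enters the attention, and the names `quality_weighted_attention` and `pool_segments` are hypothetical.

```python
import math


def quality_weighted_attention(scores, quality, eps=1e-6):
    """Softmax attention weights biased by per-segment signal quality.

    Assumption: `quality` holds one value in (0, 1] per segment. Adding
    log(quality) to each score multiplies the unnormalized weight by the
    quality, so cleaner segments receive more attention.
    """
    logits = [s + math.log(max(q, eps)) for s, q in zip(scores, quality)]
    peak = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pool_segments(features, weights):
    """Weighted sum of per-segment feature vectors."""
    dim = len(features[0])
    return [
        sum(w * f[d] for w, f in zip(weights, features))
        for d in range(dim)
    ]
```

With equal attention scores, a segment with quality 1.0 ends up with twice the weight of a segment with quality 0.5, so a partially corrupted segment is down-weighted rather than discarded.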

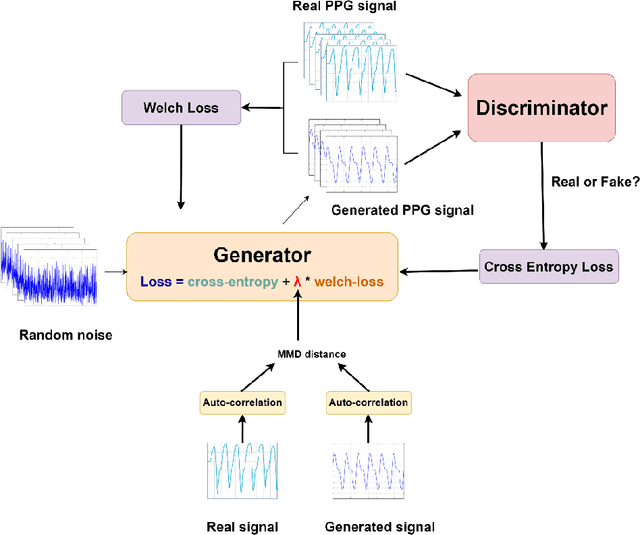

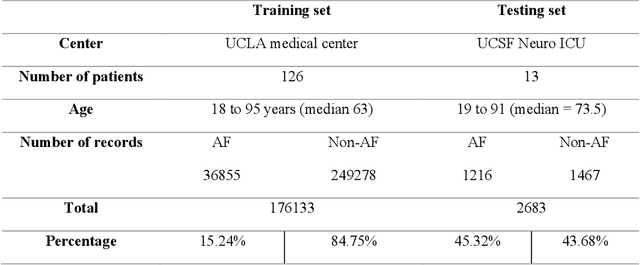

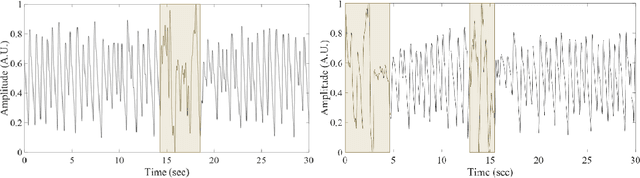

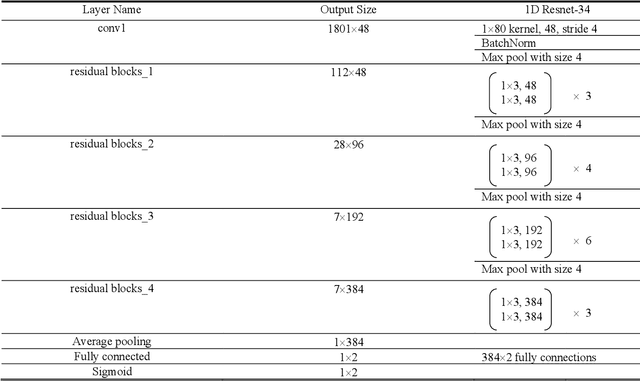

Welch-GAN: Generating realistic photoplethysmography signal from frequency-domain for atrial fibrillation detection

Aug 11, 2021

Abstract: Training machine learning algorithms on a small and imbalanced dataset is often a daunting challenge in medical research. However, it has been shown that synthetic data generated by data augmentation techniques can enlarge the dataset and help alleviate the imbalance. In this study, we propose a novel generative adversarial network (GAN) architecture, Welch-GAN, and examine how its influence on classifier performance is related to signal quality and class imbalance in the context of photoplethysmography (PPG)-based atrial fibrillation (AF) detection. Pulse oximetry data were collected from 126 adult patients and augmented using the permutation technique to build a large training set for an AF detection model based on a one-dimensional residual neural network. To test the model, PPG data were collected from 13 stroke patients. Four data augmentation methods, including both traditional methods and GANs, are used as baselines in this study. Three experiments are designed to investigate each data augmentation method in terms of performance gain, robustness to motion artifacts, and training sample size, respectively. Compared with training on un-augmented data, training the same AF classification algorithm on augmented data significantly improved AF detection accuracy from 80.36% to over 90%, with no compromise in sensitivity or negative predictive value. Among the data augmentation techniques, Welch-GAN achieved around 3% higher AF detection accuracy than the baseline methods, which suggests that the proposed Welch-GAN reaches state-of-the-art performance.
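The abstract mentions a permutation technique for enlarging the training set without describing it. One common reading, assumed here, is to cut a recording into contiguous segments and shuffle their order. The helper `permute_segments` below is a hypothetical sketch of that reading, not the authors' procedure.

```python
import random


def permute_segments(signal, n_segments, rng):
    """Split a signal into contiguous chunks and shuffle the chunk order.

    Assumption: "permutation" means reordering segments of one recording.
    The samples inside each chunk keep their original order.
    """
    if n_segments < 1 or n_segments > len(signal):
        raise ValueError("n_segments must be between 1 and len(signal)")
    # Chunk boundaries spread as evenly as possible over the signal.
    bounds = [
        round(i * len(signal) / n_segments) for i in range(n_segments + 1)
    ]
    chunks = [signal[bounds[i]:bounds[i + 1]] for i in range(n_segments)]
    rng.shuffle(chunks)
    return [sample for chunk in chunks for sample in chunk]


# Usage: create one augmented copy of a toy signal with a seeded generator.
augmented = permute_segments(list(range(12)), n_segments=4, rng=random.Random(0))
```

Passing an explicit `random.Random` instance keeps the augmentation reproducible, which makes it easier to compare classifiers trained on the same augmented set.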
