Celia Cintas

gridfm-datakit-v1: A Python Library for Scalable and Realistic Power Flow and Optimal Power Flow Data Generation

Dec 16, 2025

Abstract:We introduce gridfm-datakit-v1, a Python library for generating realistic and diverse Power Flow (PF) and Optimal Power Flow (OPF) datasets for training Machine Learning (ML) solvers. Existing datasets and libraries face three main challenges: (1) lack of realistic stochastic load and topology perturbations, limiting scenario diversity; (2) PF datasets are restricted to OPF-feasible points, hindering generalization of ML solvers to cases that violate operating limits (e.g., branch overloads or voltage violations); and (3) OPF datasets use fixed generator cost functions, limiting generalization across varying costs. gridfm-datakit addresses these challenges by: (1) combining global load scaling from real-world profiles with localized noise and supporting arbitrary N-k topology perturbations to create diverse yet realistic datasets; (2) generating PF samples beyond operating limits; and (3) producing OPF data with varying generator costs. It also scales efficiently to large grids (up to 10,000 buses). Comparisons with OPFData, OPF-Learn, PGLearn, and PF$Δ$ are provided. Available on GitHub at https://github.com/gridfm/gridfm-datakit under Apache 2.0 and via `pip install gridfm-datakit`.

Enabling Precise Topic Alignment in Large Language Models Via Sparse Autoencoders

Jun 14, 2025

Abstract:Recent work shows that Sparse Autoencoders (SAEs) applied to large language model (LLM) layers have neurons corresponding to interpretable concepts. These SAE neurons can be modified to align generated outputs, but only towards pre-identified topics and with some parameter tuning. Our approach leverages the observational and modification properties of SAEs to enable alignment for any topic. This method 1) scores each SAE neuron by its semantic similarity to an alignment text and 2) uses these scores to modify SAE-layer-level outputs by emphasizing topic-aligned neurons. We assess the alignment capabilities of this approach on diverse public topic datasets including Amazon reviews, Medicine, and Sycophancy, across the currently available open-source LLM and SAE pairs (GPT2 and Gemma) with multiple SAE configurations. Experiments aligning to medical prompts reveal several benefits over fine-tuning, including increased average language acceptability (0.25 vs. 0.5), reduced training time across multiple alignment topics (333.6s vs. 62s), and acceptable inference time for many applications (+0.00092s/token). Our open-source code is available at github.com/IBM/sae-steering.

Localizing Persona Representations in LLMs

May 30, 2025

Abstract:We present a study on how and where personas -- defined by distinct sets of human characteristics, values, and beliefs -- are encoded in the representation space of large language models (LLMs). Using a range of dimension reduction and pattern recognition methods, we first identify the model layers that show the greatest divergence in encoding these representations. We then analyze the activations within a selected layer to examine how specific personas are encoded relative to others, including their shared and distinct embedding spaces. We find that, across multiple pre-trained decoder-only LLMs, the analyzed personas show large differences in representation space only within the final third of the decoder layers. We observe overlapping activations for specific ethical perspectives -- such as moral nihilism and utilitarianism -- suggesting a degree of polysemy. In contrast, political ideologies like conservatism and liberalism appear to be represented in more distinct regions. These findings help to improve our understanding of how LLMs internally represent information and can inform future efforts in refining the modulation of specific human traits in LLM outputs. Warning: This paper includes potentially offensive sample statements.

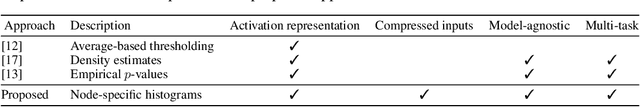

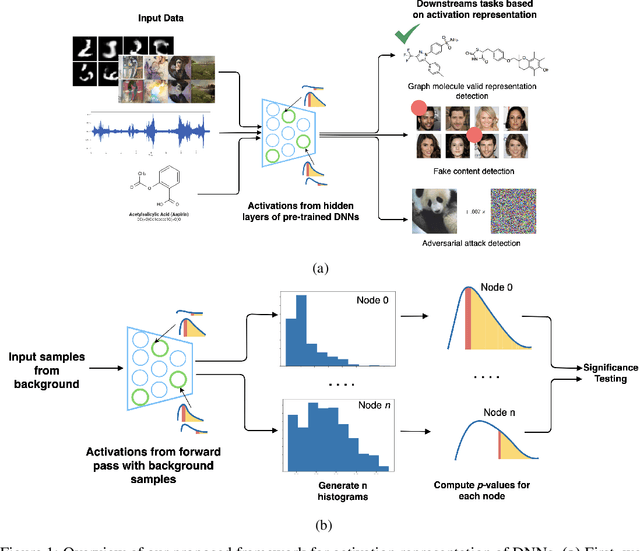

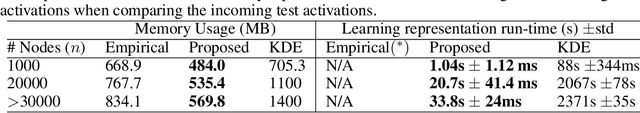

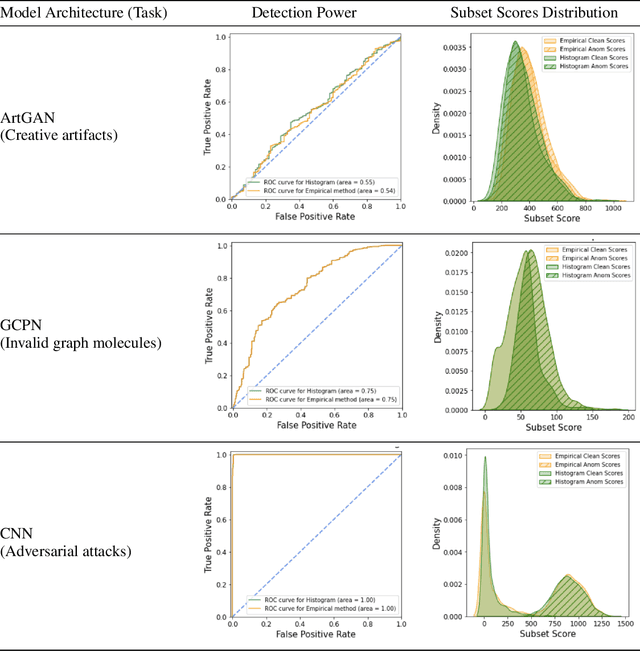

Efficient Representation of the Activation Space in Deep Neural Networks

Dec 13, 2023

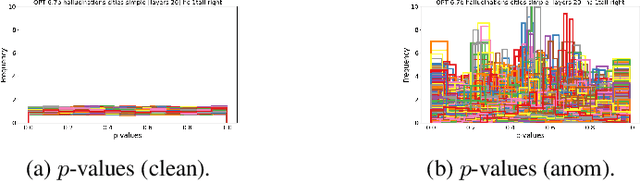

Abstract:The representations of the activation space of deep neural networks (DNNs) are widely utilized for tasks like natural language processing, anomaly detection and speech recognition. Due to the diverse nature of these tasks and the large size of DNNs, an efficient and task-independent representation of activations becomes crucial. Empirical p-values have been used to quantify the relative strength of an observed node activation compared to activations created by already-known inputs. Nonetheless, keeping raw data for these calculations increases memory resource consumption and raises privacy concerns. To this end, we propose a model-agnostic framework for creating representations of activations in DNNs using node-specific histograms to compute p-values of observed activations without retaining already-known inputs. Our proposed approach demonstrates promising potential when validated with multiple network architectures across various downstream tasks and compared with the kernel density estimates and brute-force empirical baselines. In addition, the framework reduces memory usage by 30% and computes p-values up to 4 times faster while maintaining state-of-the-art detection power in downstream tasks such as the detection of adversarial attacks and synthesized content. Moreover, as we do not persist raw data at inference time, we could potentially reduce susceptibility to attacks and privacy issues.
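The idea of replacing stored background activations with a per-node histogram can be illustrated with a minimal sketch. This is not the authors' implementation: the function names, the choice of 32 equal-width bins, the right-tail convention, and the +1 smoothing are all assumptions made for illustration.

```python
import numpy as np


def fit_node_histogram(background, bins=32):
    """Summarize one node's background activations as (counts, edges).

    Hypothetical helper: after this call the raw activations can be discarded.
    """
    counts, edges = np.histogram(np.asarray(background, dtype=float), bins=bins)
    return counts, edges


def histogram_p_value(x, counts, edges):
    """Approximate right-tail empirical p-value of activation x.

    Counts every background activation in the bin containing x, or in any
    higher bin, as "at least as extreme" (a bin-level approximation).
    """
    total = counts.sum()
    if x < edges[0]:
        tail = total          # every background activation is above x
    elif x > edges[-1]:
        tail = 0              # no background activation is above x
    else:
        # Index of the bin containing x; the last bin is closed on the right.
        idx = min(np.searchsorted(edges, x, side="right") - 1, len(counts) - 1)
        tail = counts[idx:].sum()
    # +1 smoothing keeps the p-value strictly positive (an assumed convention).
    return (tail + 1) / (total + 1)


background = np.arange(100, dtype=float)
counts, edges = fit_node_histogram(background, bins=10)
p_high = histogram_p_value(1000.0, counts, edges)   # far above the background
p_low = histogram_p_value(-5.0, counts, edges)      # far below the background
```

Only `counts` and `edges` need to be kept per node, which is the memory and privacy argument made in the abstract; the accuracy of the approximation depends on the number of bins.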

Weakly Supervised Detection of Hallucinations in LLM Activations

Dec 05, 2023

Abstract:We propose an auditing method to identify whether a large language model (LLM) encodes patterns such as hallucinations in its internal states, which may propagate to downstream tasks. We introduce a weakly supervised auditing technique using a subset scanning approach to detect anomalous patterns in LLM activations from pre-trained models. Importantly, our method does not need a priori knowledge of the type of patterns. Instead, it relies on a reference dataset devoid of anomalies during testing. Further, our approach enables the identification of pivotal nodes responsible for encoding these patterns, which may offer crucial insights for fine-tuning specific sub-networks for bias mitigation. We introduce two new scanning methods to handle LLM activations for anomalous sentences that may deviate from the expected distribution in either direction. Our results confirm prior findings of BERT's limited internal capacity for encoding hallucinations, while OPT appears capable of encoding hallucination information internally. Importantly, our scanning approach, without prior exposure to false statements, performs comparably to a fully supervised out-of-distribution classifier.

Revisiting Skin Tone Fairness in Dermatological Lesion Classification

Aug 18, 2023

Abstract:Addressing fairness in lesion classification from dermatological images is crucial due to variations in how skin diseases manifest across skin tones. However, the absence of skin tone labels in public datasets hinders building a fair classifier. To date, such skin tone labels have been estimated prior to fairness analysis in independent studies using the Individual Typology Angle (ITA). Briefly, ITA calculates an angle based on pixels extracted from skin images taking into account the lightness and yellow-blue tints. These angles are then categorised into skin tones that are subsequently used to analyse fairness in skin cancer classification. In this work, we review and compare four ITA-based approaches of skin tone classification on the ISIC18 dataset, a common benchmark for assessing skin cancer classification fairness in the literature. Our analyses reveal a high disagreement among previously published studies demonstrating the risks of ITA-based skin tone estimation methods. Moreover, we investigate the causes of such large discrepancy among these approaches and find that the lack of diversity in the ISIC18 dataset limits its use as a testbed for fairness analysis. Finally, we recommend further research on robust ITA estimation and diverse dataset acquisition with skin tone annotation to facilitate conclusive fairness assessments of artificial intelligence tools in dermatology. Our code is available at https://github.com/tkalbl/RevisitingSkinToneFairness.
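The angle described above is commonly written as ITA = arctan((L* − 50) / b*) × 180/π, where L* is lightness and b* is the yellow-blue component in the CIELAB colour space. The sketch below computes it for a single pair of values. The function name is hypothetical, and how L* and b* are obtained from skin pixels (segmentation, averaging, outlier handling) is exactly where the compared approaches may differ, so that step is left out.

```python
import math


def individual_typology_angle(lightness, yellow_blue):
    """Return the ITA in degrees from CIELAB L* and b* values.

    atan2 agrees with arctan((L* - 50) / b*) whenever b* > 0 and avoids a
    division by zero when b* == 0 (an implementation choice, not from the source).
    """
    return math.degrees(math.atan2(lightness - 50.0, yellow_blue))


ita_example = individual_typology_angle(60.0, 10.0)
```

Larger angles correspond to lighter skin; the thresholds used to bin angles into skin tone categories vary between studies and are not reproduced here.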

Domain-agnostic and Multi-level Evaluation of Generative Models

Jan 20, 2023

Abstract:While the capabilities of generative models have improved substantially in different domains (images, text, graphs, molecules, etc.), their evaluation metrics largely remain based on simplified quantities or manual inspection with limited practicality. To this end, we propose a framework for Multi-level Performance Evaluation of Generative mOdels (MPEGO), which could be employed across different domains. MPEGO aims to quantify generation performance hierarchically, starting from a sub-feature-based low-level evaluation to a global features-based high-level evaluation. MPEGO offers great customizability as the employed features are entirely user-driven and can thus be highly domain/problem-specific while being arbitrarily complex (e.g., outcomes of experimental procedures). We validate MPEGO using multiple generative models across several datasets from the material discovery domain. An ablation study is conducted to study the plausibility of intermediate steps in MPEGO. Results demonstrate that MPEGO provides a flexible, user-driven, and multi-level evaluation framework, with practical insights on the generation quality. The framework, source code, and experiments will be available at https://github.com/GT4SD/mpego.

Model-free feature selection to facilitate automatic discovery of divergent subgroups in tabular data

Mar 08, 2022

Abstract:Data-centric AI emphasizes the need to clean and understand data in order to achieve trustworthy AI. Existing technologies, such as AutoML, make it easier to design and train models automatically, but there is a lack of a similar level of capabilities to extract data-centric insights. Manual stratification of tabular data by a single feature (e.g., gender) does not scale to higher feature dimensions, a limitation that could be addressed using automatic discovery of divergent subgroups. Nonetheless, these automatic discovery techniques often search across potentially exponential combinations of features, a search that could be simplified using a preceding feature selection step. Existing feature selection techniques for tabular data often involve fitting a particular model in order to select important features. However, such model-based selection is prone to model bias and spurious correlations in addition to requiring extra resources to design, fine-tune and train a model. In this paper, we propose a model-free and sparsity-based automatic feature selection (SAFS) framework to facilitate automatic discovery of divergent subgroups. Different from filter-based selection techniques, we exploit the sparsity of objective measures among feature values to rank and select features. We validated SAFS across two publicly available datasets (MIMIC-III and Allstate Claims) and compared it with six existing feature selection methods. SAFS achieves a reduction of feature selection time by a factor of 81x and 104x, averaged across the existing methods in the MIMIC-III and Claims datasets respectively. SAFS-selected features are also shown to achieve competitive detection performance, e.g., 18.3% of features selected by SAFS in the Claims dataset detected divergent samples similar to those detected by using the full feature set with a Jaccard similarity of 0.95 but with a 16x reduction in detection time.
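The Jaccard similarity used above to compare two sets of detected samples is the size of their intersection divided by the size of their union. A minimal sketch follows; the function name and the sample identifiers are made up for illustration and are not taken from the paper.

```python
def jaccard_similarity(detected_a, detected_b):
    """Return |A ∩ B| / |A ∪ B| for two collections of sample identifiers."""
    a, b = set(detected_a), set(detected_b)
    if not a and not b:
        return 1.0  # convention assumed here: two empty sets are identical
    return len(a & b) / len(a | b)


# Hypothetical sample IDs flagged with the full feature set vs. a selected subset.
full_feature_hits = {1, 2, 3}
selected_feature_hits = {2, 3, 4}
similarity = jaccard_similarity(full_feature_hits, selected_feature_hits)
```

A value of 0.95, as reported in the abstract, means the two sets of detected samples overlap almost completely.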

Towards Creativity Characterization of Generative Models via Group-based Subset Scanning

Mar 03, 2022

Abstract:Deep generative models, such as Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), have been employed widely in computational creativity research. However, such models discourage out-of-distribution generation to avoid spurious sample generation, thereby limiting their creativity. Thus, incorporating research on human creativity into generative deep learning techniques presents an opportunity to make their outputs more compelling and human-like. As we see the emergence of generative models directed toward creativity research, a need for machine learning-based surrogate metrics to characterize creative output from these models is imperative. We propose group-based subset scanning to identify, quantify, and characterize creative processes by detecting a subset of anomalous node-activations in the hidden layers of the generative models. Our experiments on the standard image benchmarks, and their "creatively generated" variants, reveal that the proposed subset scores distribution is more useful for detecting creative processes in the activation space rather than the pixel space. Further, we found that creative samples generate larger subsets of anomalies than normal or non-creative samples across datasets. The node activations highlighted during the creative decoding process are different from those responsible for the normal sample generation. Lastly, we assess if the images from the subsets selected by our method were also found creative by human evaluators, presenting a link between creativity perception in humans and node activations within deep neural nets.

Out-of-Distribution Detection in Dermatology using Input Perturbation and Subset Scanning

Jun 02, 2021

Abstract:Recent advances in deep learning have led to breakthroughs in the development of automated skin disease classification. As we observe an increasing interest in these models in the dermatology space, it is crucial to address aspects such as the robustness towards input data distribution shifts. Current skin disease models could make incorrect inferences for test samples from different hardware devices and clinical settings or unknown disease samples, which are out-of-distribution (OOD) from the training samples. To this end, we propose a simple yet effective approach that detects these OOD samples prior to making any decision. The detection is performed via scanning in the latent space representation (e.g., activations of the inner layers of any pre-trained skin disease classifier). The input samples could also be perturbed to maximise divergence of OOD samples. We validate our OOD detection approach in two use cases: 1) identify samples collected from different protocols, and 2) detect samples from unknown disease classes. Additionally, we evaluate the performance of the proposed approach and compare it with other state-of-the-art methods. Furthermore, data-driven dermatology applications may deepen the disparity in clinical care across racial and ethnic groups since most datasets are reported to suffer from bias in skin tone distribution. Therefore, we also evaluate the fairness of these OOD detection methods across different skin tones. Our experiments resulted in competitive performance across multiple datasets in detecting OOD samples, which could be used (in the future) to design more effective transfer learning techniques prior to inferring on these samples.
