Boshi Wang

A Two-Stage ISAC Framework for Low-Altitude Economy Based on 5G NR Signals

Nov 18, 2025

Abstract:The evolution of next-generation wireless networks has spurred the vigorous development of the low-altitude economy (LAE). To support this emerging field while remaining compatible with existing network architectures, integrated sensing and communication (ISAC) based on 5G New Radio (NR) signals is regarded as a promising solution. However, merely leveraging standard 5G NR signals, such as the Synchronization Signal Block (SSB), presents fundamental limitations in sensing resolution. To address this issue, this paper proposes a two-stage coarse-to-fine sensing framework that utilizes standard 5G NR initial access signals augmented by a custom-designed sparse pilot structure (SPS) for high-precision unmanned aerial vehicle (UAV) sensing. In Stage I, we first fuse information from the SSB, Type#0-PDCCH, and system information block 1 (SIB1) to ensure the initial target detection. In Stage II, a refined estimation algorithm is introduced to overcome the resolution limitations of these signals. Inspired by sparse array theory, this stage employs a novel SPS, which is inserted into resource blocks (RBs) within the CORESET#0 bandwidth. To accurately extract the off-grid range and velocity parameters from these sparse pilots, we develop a corresponding high-resolution algorithm based on the weighted unwrapped phase (WUP) technique and the RELAX-based iterative method. Finally, the density-based spatial clustering of applications with noise (DBSCAN) algorithm is adopted to prune the redundant detections arising from beam overlap. Comprehensive simulation results demonstrate the superior estimation accuracy and computational efficiency of the proposed framework in comparison to other techniques.
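As a rough illustration of the resolution limitation mentioned in the abstract above, the sketch below applies the standard radar relation ΔR = c / (2B) to the bandwidth occupied by an SSB (240 subcarriers). The 30 kHz subcarrier spacing, the 48-RB CORESET#0 width, and the function name `range_resolution_m` are assumptions chosen for illustration; they are not taken from the paper.

```python
# Back-of-the-envelope range resolution from occupied bandwidth.
# Assumptions (not from the paper): 30 kHz subcarrier spacing and a
# 48-RB CORESET#0; range_resolution_m is a hypothetical helper name.

SPEED_OF_LIGHT_M_S = 299_792_458.0
SUBCARRIERS_PER_RB = 12


def range_resolution_m(n_subcarriers: int, scs_hz: float) -> float:
    """Classical range resolution c / (2B) for a contiguous band."""
    bandwidth_hz = n_subcarriers * scs_hz
    return SPEED_OF_LIGHT_M_S / (2.0 * bandwidth_hz)


# An SSB spans 240 subcarriers (20 RBs).
ssb_resolution = range_resolution_m(240, 30e3)

# Assumed CORESET#0 width of 48 RBs at the same spacing.
coreset0_resolution = range_resolution_m(48 * SUBCARRIERS_PER_RB, 30e3)

print(f"SSB range resolution:       {ssb_resolution:.2f} m")
print(f"CORESET#0 range resolution: {coreset0_resolution:.2f} m")
```

Under these assumptions the SSB alone resolves targets only to within roughly twenty metres, which gives some intuition for why a wider band and a dedicated high-resolution estimator are attractive for UAV sensing.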

Is the Reversal Curse a Binding Problem? Uncovering Limitations of Transformers from a Basic Generalization Failure

Apr 02, 2025

Abstract:Despite their impressive capabilities, LLMs exhibit a basic generalization failure known as the Reversal Curse, where they struggle to learn reversible factual associations. Understanding why this occurs could help identify weaknesses in current models and advance their generalization and robustness. In this paper, we conjecture that the Reversal Curse in LLMs is a manifestation of the long-standing binding problem in cognitive science, neuroscience and AI. Specifically, we identify two primary causes of the Reversal Curse stemming from transformers' limitations in conceptual binding: the inconsistency and entanglements of concept representations. We perform a series of experiments that support these conjectures. Our exploration leads to a model design based on JEPA (Joint-Embedding Predictive Architecture) that for the first time breaks the Reversal Curse without side-stepping it with specialized data augmentation or non-causal masking, and moreover, generalization could be further improved by incorporating special memory layers that support disentangled concept representations. We demonstrate that the skill of reversal unlocks a new kind of memory integration that enables models to solve large-scale arithmetic reasoning problems via parametric forward-chaining, outperforming frontier LLMs based on non-parametric memory and prolonged explicit reasoning.

ScienceAgentBench: Toward Rigorous Assessment of Language Agents for Data-Driven Scientific Discovery

Oct 07, 2024

Abstract:The advancements of large language models (LLMs) have piqued growing interest in developing LLM-based language agents to automate scientific discovery end-to-end, which has sparked both excitement and skepticism about the true capabilities of such agents. In this work, we argue that for an agent to fully automate scientific discovery, it must be able to complete all essential tasks in the workflow. Thus, we call for rigorous assessment of agents on individual tasks in a scientific workflow before making bold claims on end-to-end automation. To this end, we present ScienceAgentBench, a new benchmark for evaluating language agents for data-driven scientific discovery. To ensure the scientific authenticity and real-world relevance of our benchmark, we extract 102 tasks from 44 peer-reviewed publications in four disciplines and engage nine subject matter experts to validate them. We unify the target output for every task to a self-contained Python program file and employ an array of evaluation metrics to examine the generated programs, execution results, and costs. Each task goes through multiple rounds of manual validation by annotators and subject matter experts to ensure its annotation quality and scientific plausibility. We also propose two effective strategies to mitigate data contamination concerns. Using our benchmark, we evaluate five open-weight and proprietary LLMs, each with three frameworks: direct prompting, OpenHands, and self-debug. Given three attempts for each task, the best-performing agent can only solve 32.4% of the tasks independently and 34.3% with expert-provided knowledge. These results underscore the limited capacities of current language agents in generating code for data-driven discovery, let alone end-to-end automation for scientific research.
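To make the headline numbers above concrete, here is a minimal sketch of how a success rate over multiple attempts could be computed. It assumes a task counts as solved if at least one of its attempts succeeds, which is one plausible reading of "given three attempts" and may differ from the benchmark's actual scoring; `solved_rate` is a hypothetical name. With 102 tasks, the rounded figures 32.4% and 34.3% are arithmetically consistent with 33 and 35 solved tasks, though the abstract does not state those counts.

```python
# Toy success-rate computation over multiple attempts per task.
# Assumption: a task is "solved" if any attempt succeeds; the real
# benchmark may aggregate attempts differently.

def solved_rate(attempt_results: list[list[bool]]) -> float:
    """Percentage of tasks with at least one successful attempt."""
    solved = sum(1 for attempts in attempt_results if any(attempts))
    return 100.0 * solved / len(attempt_results)


# Four illustrative tasks with three attempts each (made-up outcomes).
toy_results = [
    [False, False, True],
    [False, False, False],
    [True, True, False],
    [False, False, False],
]
toy_rate = solved_rate(toy_results)

# Arithmetic check against the rounded percentages in the abstract.
independent_pct = round(100.0 * 33 / 102, 1)
with_knowledge_pct = round(100.0 * 35 / 102, 1)

print(toy_rate, independent_pct, with_knowledge_pct)
```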

Grokked Transformers are Implicit Reasoners: A Mechanistic Journey to the Edge of Generalization

May 27, 2024

Abstract:We study whether transformers can learn to implicitly reason over parametric knowledge, a skill that even the most capable language models struggle with. Focusing on two representative reasoning types, composition and comparison, we consistently find that transformers can learn implicit reasoning, but only through grokking, i.e., extended training far beyond overfitting. The levels of generalization also vary across reasoning types: when faced with out-of-distribution examples, transformers fail to systematically generalize for composition but succeed for comparison. We delve into the model's internals throughout training, conducting analytical experiments that reveal: 1) the mechanism behind grokking, such as the formation of the generalizing circuit and its relation to the relative efficiency of generalizing and memorizing circuits, and 2) the connection between systematicity and the configuration of the generalizing circuit. Our findings guide data and training setup to better induce implicit reasoning and suggest potential improvements to the transformer architecture, such as encouraging cross-layer knowledge sharing. Furthermore, we demonstrate that for a challenging reasoning task with a large search space, GPT-4-Turbo and Gemini-1.5-Pro based on non-parametric memory fail badly regardless of prompting styles or retrieval augmentation, while a fully grokked transformer can achieve near-perfect accuracy, showcasing the power of parametric memory for complex reasoning.
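For readers unfamiliar with the composition setting named above, the following toy sketch shows what a two-hop composed fact looks like: two atomic facts that share a bridge entity are chained to yield an inferred fact. The synthetic knowledge graph, its sizes, and the helper names `make_atomic_facts` and `compose` are all assumptions for illustration and do not reproduce the paper's data construction.

```python
import random

# Toy two-hop composition over a synthetic knowledge graph.
# Everything here (sizes, names, random construction) is an
# illustrative assumption, not the paper's dataset.

AtomicFacts = dict[tuple[int, int], int]


def make_atomic_facts(n_entities: int, n_relations: int, seed: int = 0) -> AtomicFacts:
    """Map every (head, relation) pair to a randomly chosen tail entity."""
    rng = random.Random(seed)
    return {
        (head, rel): rng.randrange(n_entities)
        for head in range(n_entities)
        for rel in range(n_relations)
    }


def compose(facts: AtomicFacts, head: int, r1: int, r2: int) -> int:
    """Follow r1 from head to a bridge entity, then r2 from the bridge."""
    bridge = facts[(head, r1)]
    return facts[(bridge, r2)]


facts = make_atomic_facts(n_entities=5, n_relations=2)
# Inferred fact (head=0, r1=0, r2=1): the answer is never stored directly,
# so a model must combine two memorized atomic facts to produce it.
answer = compose(facts, head=0, r1=0, r2=1)
print(answer)
```

Implicit reasoning in this sense means producing `answer` without writing out the bridge entity as an intermediate step.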

Beamforming Design for Double-Active-RIS-aided Communication Systems with Inter-Excitation

Mar 17, 2024

Abstract:In this paper, we investigate a double-active-reconfigurable intelligent surface (RIS)-aided downlink wireless communication system, where a multi-antenna base station (BS) serves multiple single-antenna users with both double reflection and single reflection links. Due to the signal amplification capability of active RISs, the mutual influence between active RISs, termed the "inter-excitation" effect, cannot be ignored. We therefore develop a feedback-type model to characterize the signal containing the inter-excitation effect. Based on the signal model, we formulate a weighted sum rate (WSR) maximization problem by jointly optimizing the beamforming matrix at the BS and the reflecting coefficient matrices at the two active RISs, subject to power constraints at the BS and active RISs, as well as the maximum amplification gain constraints of the active RISs. To solve this non-convex problem, we first transform the problem into a more tractable form using the fractional programming (FP) method. Then, by introducing auxiliary variables, the problem can be converted into an equivalent form that can be solved by using a low-complexity penalty dual decomposition (PDD) algorithm. Finally, simulation results indicate that it is crucial to consider the inter-excitation effect between active RISs in beamforming design for double-active-RIS-aided communication systems. Additionally, the proposed design outperforms benchmark schemes with a single active RIS and with double passive RISs in terms of achievable rate.

LLMs in the Imaginarium: Tool Learning through Simulated Trial and Error

Mar 07, 2024

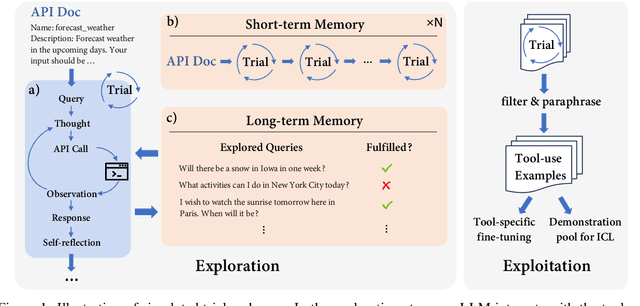

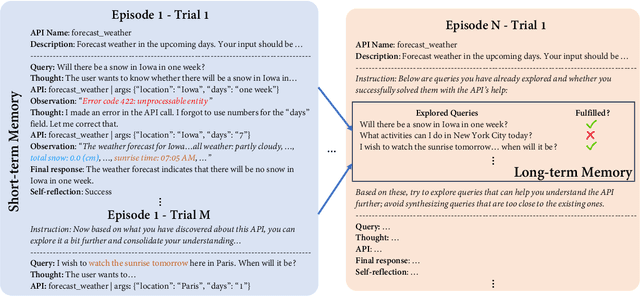

Abstract:Tools are essential for large language models (LLMs) to acquire up-to-date information and take consequential actions in external environments. Existing work on tool-augmented LLMs primarily focuses on the broad coverage of tools and the flexibility of adding new tools. However, a critical aspect that has surprisingly been understudied is simply how accurately an LLM uses tools for which it has been trained. We find that existing LLMs, including GPT-4 and open-source LLMs specifically fine-tuned for tool use, only reach a correctness rate in the range of 30% to 60%, far from reliable use in practice. We propose a biologically inspired method for tool-augmented LLMs, simulated trial and error (STE), that orchestrates three key mechanisms for successful tool use behaviors in the biological system: trial and error, imagination, and memory. Specifically, STE leverages an LLM's 'imagination' to simulate plausible scenarios for using a tool, after which the LLM interacts with the tool to learn from its execution feedback. Both short-term and long-term memory are employed to improve the depth and breadth of the exploration, respectively. Comprehensive experiments on ToolBench show that STE substantially improves tool learning for LLMs under both in-context learning and fine-tuning settings, bringing a boost of 46.7% to Mistral-Instruct-7B and enabling it to outperform GPT-4. We also show effective continual learning of tools via a simple experience replay strategy.

Reconfigurable Intelligent Surface-Aided Dual-Function Radar and Communication Systems With MU-MIMO Communication

Feb 08, 2024

Abstract:In this paper, we investigate a reconfigurable intelligent surface (RIS)-aided integrated sensing and communication (ISAC) system. Our objective is to maximize the achievable sum rate of the multi-antenna communication users through joint active and passive beamforming. Specifically, the weighted minimum mean-square error (WMMSE) method is first used to reformulate the original problem into an equivalent one. Then, we utilize an alternating optimization (AO) algorithm to decouple the optimization variables and decompose this challenging problem into two subproblems. Given reflecting coefficients, a penalty-based algorithm is utilized to deal with the non-convex radar signal-to-noise ratio (SNR) constraints. For the given beamforming matrix of the BS, we apply majorization-minimization (MM) to transform the problem into a quadratically constrained quadratic programming (QCQP) problem, which is ultimately solved using a semidefinite relaxation (SDR)-based algorithm. Simulation results illustrate the advantage of deploying RIS in the considered multi-user MIMO (MU-MIMO) ISAC systems.

Joint Beamforming Design for Double Active RIS-assisted Radar-Communication Coexistence Systems

Feb 07, 2024

Abstract:Integrated sensing and communication (ISAC) technology has been considered one of the key candidate technologies in next-generation wireless communication systems. However, when radar and communication equipment coexist in the same system, i.e., radar-communication coexistence (RCC), the interference from communication systems to radar can be large and cannot be ignored. Recently, reconfigurable intelligent surface (RIS) has been introduced into RCC systems to reduce the interference. However, the "multiplicative fading" effect introduced by passive RIS limits its performance. To tackle this issue, we consider a double active RIS-assisted RCC system, which focuses on the design of the radar's beamforming vector and the active RISs' reflecting coefficient matrices, to maximize the achievable data rate of the communication system. The considered system needs to meet the radar detection constraint and the power budgets at the radar and the RISs. Since the problem is non-convex, we propose an algorithm based on the penalty dual decomposition (PDD) framework. Specifically, we initially introduce auxiliary variables to reformulate the coupled variables into equality constraints and incorporate these constraints into the objective function through the PDD framework. Then, we decouple the equivalent problem into several subproblems by invoking the block coordinate descent (BCD) method. Furthermore, we employ the Lagrange dual method to alternately optimize these subproblems. Simulation results verify the effectiveness of the proposed algorithm. Furthermore, the results also show that under the same power budget, deploying double active RISs in RCC systems can achieve a higher data rate than systems with a single active RIS or double passive RISs.

Secure Wireless Communication in Active RIS-Assisted DFRC System

Feb 03, 2024

Abstract:This work considers a dual-functional radar and communication (DFRC) system with an active reconfigurable intelligent surface (RIS) and a potential eavesdropper. Our purpose is to maximize the secrecy rate (SR) of the system by jointly designing the beamforming matrix at the DFRC base station (BS) and the reflecting coefficients at the active RIS, subject to the signal-to-interference-plus-noise-ratio (SINR) constraint of the radar echo and the power consumption constraints at the DFRC-BS and active RIS. An alternating optimization (AO) algorithm based on semi-definite relaxation (SDR) and majorization-minimization (MM) is applied to solve the SR-maximization problem by alternately optimizing the beamforming matrix and the reflecting coefficients. Specifically, we first apply the SDR and successive convex approximation (SCA) methods to transform the two subproblems into more tractable forms, then the MM method is applied to derive a concave surrogate function and iteratively solve the subproblems. Finally, simulation results indicate that the active RIS can better confront the impact of "multiplicative fading" and outperforms traditional passive RIS in terms of both secure data rate and radar sensing performance.

How Trustworthy are Open-Source LLMs? An Assessment under Malicious Demonstrations Shows their Vulnerabilities

Nov 15, 2023

Abstract:The rapid progress in open-source Large Language Models (LLMs) is significantly driving AI development forward. However, there is still a limited understanding of their trustworthiness. Deploying these models at scale without sufficient trustworthiness can pose significant risks, highlighting the need to uncover these issues promptly. In this work, we conduct an assessment of open-source LLMs on trustworthiness, scrutinizing them across eight different aspects including toxicity, stereotypes, ethics, hallucination, fairness, sycophancy, privacy, and robustness against adversarial demonstrations. We propose an enhanced Chain of Utterances-based (CoU) prompting strategy by incorporating meticulously crafted malicious demonstrations for trustworthiness attack. Our extensive experiments encompass recent and representative series of open-source LLMs, including Vicuna, MPT, Falcon, Mistral, and Llama 2. The empirical outcomes underscore the efficacy of our attack strategy across diverse aspects. More interestingly, our result analysis reveals that models with superior performance in general NLP tasks do not always have greater trustworthiness; in fact, larger models can be more vulnerable to attacks. Additionally, models that have undergone instruction tuning, focusing on instruction following, tend to be more susceptible, although fine-tuning LLMs for safety alignment proves effective in mitigating adversarial trustworthiness attacks.
