Ashutosh Pandey

Improving Resource-Efficient Speech Enhancement via Neural Differentiable DSP Vocoder Refinement

Aug 20, 2025

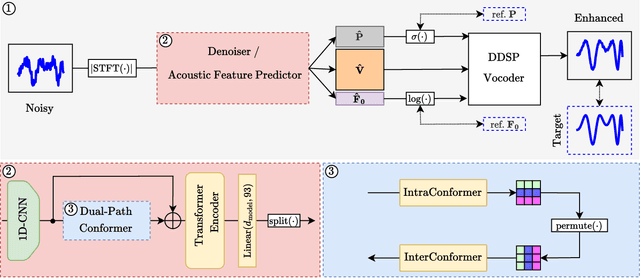

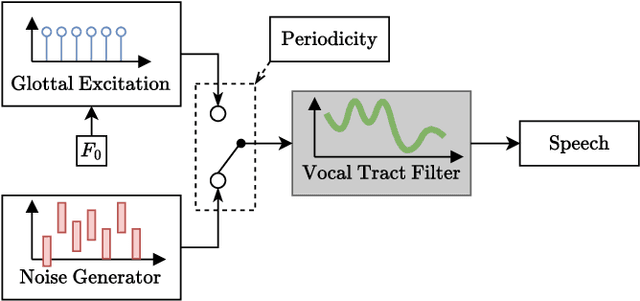

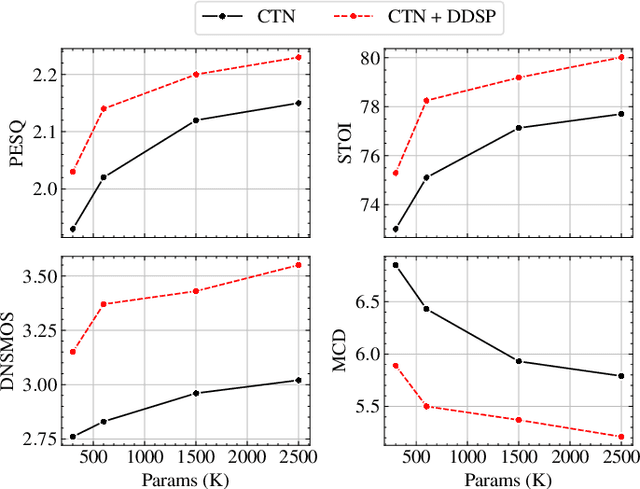

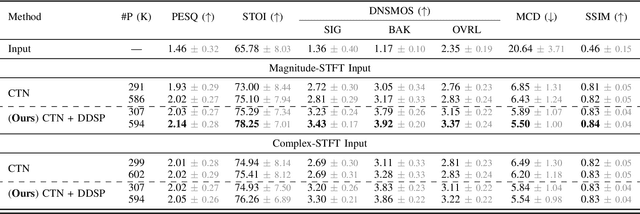

Abstract: Deploying speech enhancement (SE) systems in wearable devices, such as smart glasses, is challenging due to the limited computational resources on the device. Although deep learning methods have achieved high-quality results, their computational cost limits their feasibility on embedded platforms. This work presents an efficient end-to-end SE framework that leverages a Differentiable Digital Signal Processing (DDSP) vocoder for high-quality speech synthesis. First, a compact neural network predicts enhanced acoustic features from noisy speech: spectral envelope, fundamental frequency (F0), and periodicity. These features are fed into the DDSP vocoder to synthesize the enhanced waveform. The system is trained end-to-end with STFT and adversarial losses, enabling direct optimization at the feature and waveform levels. Experimental results show that our method improves intelligibility and quality by 4% (STOI) and 19% (DNSMOS) over strong baselines without significantly increasing computation, making it well-suited for real-time applications.
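The synthesis step described in this abstract can be illustrated with a rough harmonic-plus-noise sketch. This is not the paper's vocoder: the function name `synthesize_frame`, the use of per-harmonic amplitudes as a stand-in for the spectral envelope, and the linear mix controlled by `periodicity` are all assumptions made for illustration.

```python
import math
import random


def synthesize_frame(f0_hz, periodicity, harmonic_amps, n_samples,
                     sample_rate=16000, phase=0.0, rng=None):
    """Render one frame as a periodicity-weighted mix of harmonics and noise.

    harmonic_amps is a hypothetical stand-in for the spectral envelope
    sampled at multiples of F0. Returns (samples, end_phase) so that
    consecutive frames can stay phase-continuous.
    """
    rng = rng or random.Random(0)
    samples = []
    for _ in range(n_samples):
        # Advance the fundamental's phase by one sample.
        phase += 2.0 * math.pi * f0_hz / sample_rate
        harmonic = 0.0
        for k, amp in enumerate(harmonic_amps, start=1):
            if k * f0_hz < sample_rate / 2:  # skip harmonics above Nyquist
                harmonic += amp * math.sin(k * phase)
        noise = rng.uniform(-1.0, 1.0)
        # periodicity = 1 gives a purely voiced frame, 0 gives pure noise.
        samples.append(periodicity * harmonic + (1.0 - periodicity) * noise)
    return samples, phase


# One period of a 100 Hz tone at 16 kHz is exactly 160 samples.
frame, end_phase = synthesize_frame(100.0, 1.0, [1.0], 160)
```

Because every operation here is a sum or product of the predicted features, gradients from a waveform-level loss could in principle flow back to the feature predictor, which is the property the end-to-end training relies on.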

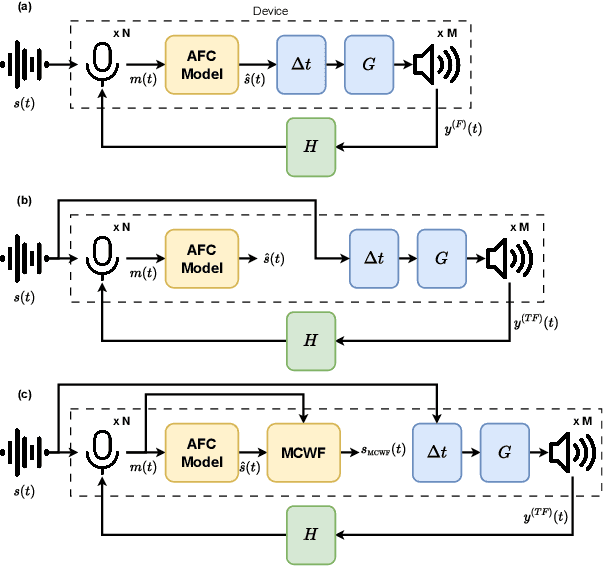

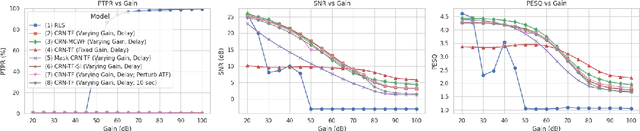

A Novel Deep Learning Framework for Efficient Multichannel Acoustic Feedback Control

May 21, 2025

Abstract: This study presents a deep-learning framework for controlling multichannel acoustic feedback in audio devices. Traditional digital signal processing methods struggle with convergence when dealing with highly correlated noise such as feedback. We introduce a Convolutional Recurrent Network that efficiently combines spatial and temporal processing, significantly improving speech enhancement at a lower computational cost. Our approach uses three training methods: In-a-Loop Training, Teacher Forcing, and a Hybrid strategy with a Multichannel Wiener Filter, optimizing performance in complex acoustic environments. This scalable framework offers a robust solution for real-world applications, making significant advances in Acoustic Feedback Control technology.

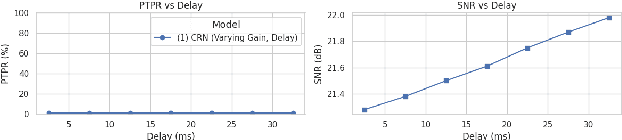

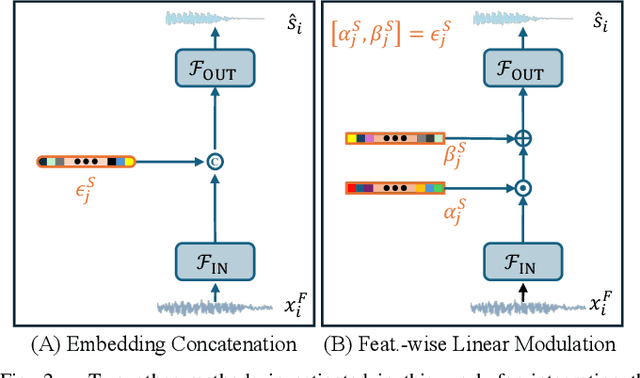

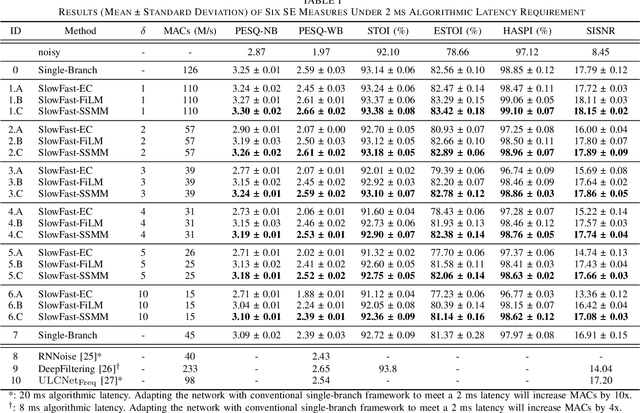

Modulating State Space Model with SlowFast Framework for Compute-Efficient Ultra Low-Latency Speech Enhancement

Nov 04, 2024

Abstract: Deep learning-based speech enhancement (SE) methods often face significant computational challenges when they must meet low-latency requirements, because of the increased number of frames to be processed. This paper introduces the SlowFast framework, which aims to reduce computation costs specifically when low-latency enhancement is needed. The framework consists of a slow branch that analyzes the acoustic environment at a low frame rate, and a fast branch that performs SE in the time domain at the higher frame rate needed to match the required latency. Specifically, the fast branch employs a state space model whose state transition process is dynamically modulated by the slow branch. Experiments on an SE task with a 2 ms algorithmic latency requirement using the Voice Bank + Demand dataset show that our approach reduces computation cost by 70% compared to a baseline single-branch network with equivalent parameters, without compromising enhancement performance. Furthermore, by leveraging the SlowFast framework, we implemented a network that achieves an algorithmic latency of just 60 μs (one sample point at 16 kHz sample rate) with a computation cost of 100 M MACs/s, while scoring a PESQ-NB of 3.12 and SI-SNR of 16.62.
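The two-rate idea can be sketched with a toy diagonal state space model whose decay factors are refreshed only once per hop. Everything specific here is an assumption: the hand-written `slow_branch` rule stands in for the paper's neural slow branch, and the two-state model, the `hop` of 32 samples, and the initial decay values are arbitrary.

```python
import math


def slow_branch(frame):
    """Hypothetical stand-in for the slow branch.

    Maps a frame of past samples to per-state decay factors in (0, 1).
    """
    energy = sum(s * s for s in frame) / len(frame)
    gate = 1.0 / (1.0 + math.exp(-energy))  # in [0.5, 1) since energy >= 0
    return [0.5 * gate, 0.9 * gate]


def slowfast_ssm(signal, hop=32):
    """Run a per-sample diagonal SSM whose transition is updated every hop."""
    decay = [0.25, 0.45]  # initial state-transition factors (arbitrary)
    state = [0.0, 0.0]
    out = []
    for t, x in enumerate(signal):
        if t > 0 and t % hop == 0:
            # Slow branch: runs once per hop and sees only past samples.
            decay = slow_branch(signal[t - hop:t])
        # Fast branch: one cheap state update per input sample.
        state = [a * h + x for a, h in zip(decay, state)]
        out.append(sum(state) / len(state))
    return out


impulse = [1.0] + [0.0] * 63
response = slowfast_ssm(impulse)
```

In this sketch the expensive analysis runs once per 32 samples while the per-sample update stays a handful of multiply-adds, which is the kind of split that lets the fast branch run at a very high frame rate.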

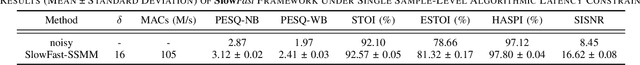

Dynamic Gated Recurrent Neural Network for Compute-efficient Speech Enhancement

Aug 22, 2024

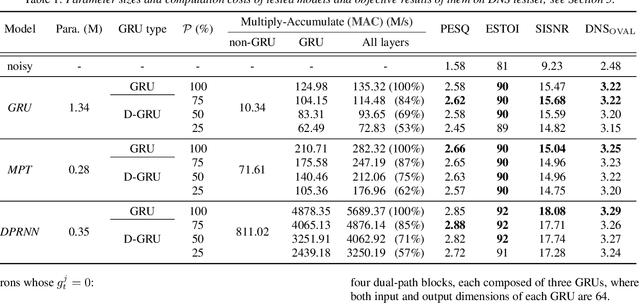

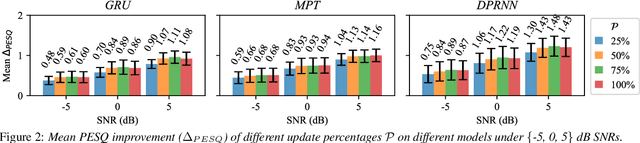

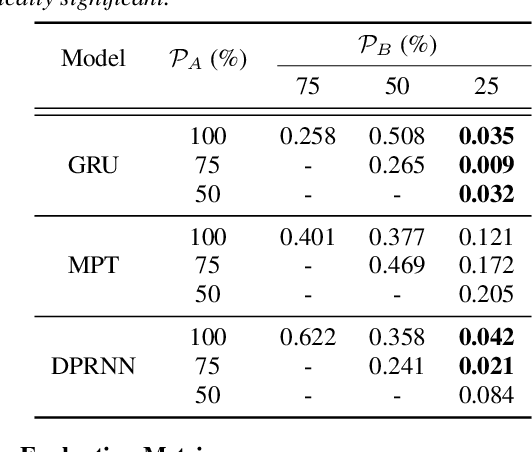

Abstract: This paper introduces a new Dynamic Gated Recurrent Neural Network (DG-RNN) for compute-efficient speech enhancement models running on resource-constrained hardware platforms. It leverages the slow evolution characteristic of RNN hidden states over steps, and updates only a selected set of neurons at each step by adding a newly proposed select gate to the RNN model. This select gate allows the computation cost of the conventional RNN to be reduced during network inference. As a realization of the DG-RNN, we further propose the Dynamic Gated Recurrent Unit (D-GRU), which does not require additional parameters. Test results obtained from several state-of-the-art compute-efficient RNN-based speech enhancement architectures using the DNS challenge dataset show that the D-GRU based model variants maintain speech intelligibility and quality metrics comparable to the baseline GRU based models, even with an average 50% reduction in GRU computes.
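The select-gate idea can be sketched with a plain tanh RNN step that recomputes only the top-k neurons and carries the rest over unchanged. This is an illustration under assumptions, not the D-GRU itself: the function `dynamic_rnn_step` is hypothetical, and the per-neuron `scores` are passed in directly because the abstract does not say how the select gate ranks neurons.

```python
import math


def dynamic_rnn_step(x, h, W, U, b, scores, k):
    """Update only the k highest-scoring neurons of a tanh RNN.

    scores is a hypothetical per-neuron ranking supplied by a select gate.
    Unselected neurons keep their previous hidden value, so only k rows of
    W and U are evaluated in this step.
    """
    selected = sorted(range(len(h)), key=lambda i: scores[i], reverse=True)[:k]
    h_new = list(h)
    for i in selected:
        pre = b[i]
        pre += sum(W[i][j] * x[j] for j in range(len(x)))
        pre += sum(U[i][j] * h[j] for j in range(len(h)))
        h_new[i] = math.tanh(pre)
    return h_new, selected


h = [0.1, 0.2, 0.3, 0.4]
W = [[0.0] for _ in range(4)]
U = [[0.0] * 4 for _ in range(4)]
b = [0.0] * 4
h_next, picked = dynamic_rnn_step([1.0], h, W, U, b, [0.9, 0.1, 0.8, 0.2], k=2)
```

With `k` set to half the hidden size, half of the matrix rows are skipped at each step, which corresponds to the order of saving the abstract reports for GRU computes.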

FoVNet: Configurable Field-of-View Speech Enhancement with Low Computation and Distortion for Smart Glasses

Aug 12, 2024

Abstract: This paper presents a novel multi-channel speech enhancement approach, FoVNet, that enables highly efficient speech enhancement within a configurable field of view (FoV) of a smart-glasses user without needing specific target-talker(s) directions. It advances over prior works by enhancing all speakers within any given FoV, with a hybrid signal processing and deep learning approach designed with high computational efficiency. The neural network component is designed with ultra-low computation (about 50 MMACS). A multi-channel Wiener filter and a post-processing module are further used to improve perceptual quality. We evaluate our algorithm with a microphone array on smart glasses, providing a configurable, efficient solution for augmented hearing on energy-constrained devices. FoVNet excels in both computational efficiency and speech quality across multiple scenarios, making it a promising solution for smart glasses applications.

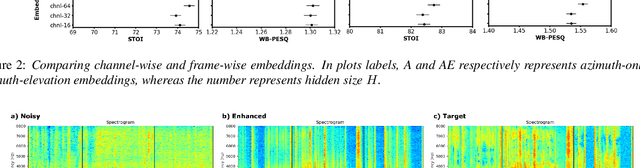

All Neural Low-latency Directional Speech Extraction

Jul 05, 2024

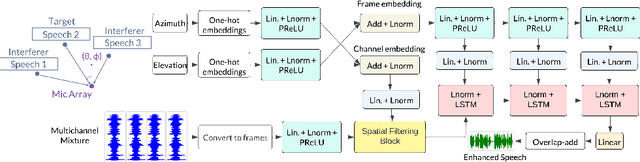

Abstract: We introduce a novel all-neural model for low-latency directional speech extraction. The model uses direction of arrival (DOA) embeddings from a predefined spatial grid, which are transformed and fused into a recurrent neural network based speech extraction model. This process enables the model to effectively extract speech from a specified DOA. Unlike previous methods that relied on hand-crafted directional features, the proposed model trains DOA embeddings from scratch using speech enhancement loss, making it suitable for low-latency scenarios. Additionally, it operates at a high frame rate, taking in the DOA with each input frame, which lets it adapt quickly to changing scenes in highly dynamic real-world scenarios. We provide extensive evaluation to demonstrate the model's efficacy in directional speech extraction, robustness to DOA mismatch, and its capability to quickly adapt to abrupt changes in DOA.

Speech enhancement deep-learning architecture for efficient edge processing

May 27, 2024

Abstract: Deep learning has become the de facto method of choice for speech enhancement tasks, with significant improvements in speech quality. However, real-time processing with reduced size and computations for low-power edge devices drastically degrades speech quality. Recently, transformer-based architectures have greatly reduced the memory requirements and provided ways to improve the model performance through local and global contexts. However, the transformer operations remain computationally heavy. In this work, we introduce a WaveUNet squeeze-excitation Res2 (WSR)-based metric generative adversarial network (WSR-MGAN) architecture that can be efficiently implemented on low-power edge devices for noise suppression tasks while maintaining speech quality. We utilize multi-scale features using Res2Net blocks that can be related to spectral content used in speech-processing tasks. In the generator, we integrate squeeze-excitation blocks (SEB) with multi-scale features for maintaining local and global contexts along with gated recurrent units (GRUs). The proposed approach is optimized through a combined loss function calculated over raw waveform, multi-resolution magnitude spectrogram, and objective metrics using a metric discriminator. Experimental results in terms of various objective metrics on VoiceBank+DEMAND and DNS-2020 challenge datasets demonstrate that the proposed speech enhancement (SE) approach outperforms the baselines and achieves state-of-the-art (SOTA) performance in the time domain.

Towards Decoupling Frontend Enhancement and Backend Recognition in Monaural Robust ASR

Mar 11, 2024

Abstract: It has been shown that the intelligibility of noisy speech can be improved by speech enhancement (SE) algorithms. However, monaural SE has not been established as an effective frontend for automatic speech recognition (ASR) in noisy conditions compared to an ASR model trained on noisy speech directly. The divide between SE and ASR impedes the progress of robust ASR systems, especially as SE has made major advances in recent years. This paper focuses on eliminating this divide with an attentive recurrent network (ARN) time-domain enhancement model and a CrossNet time-frequency domain enhancement model. The proposed systems fully decouple frontend enhancement and backend ASR trained only on clean speech. Results on the WSJ, CHiME-2, LibriSpeech, and CHiME-4 corpora demonstrate that ARN and CrossNet enhanced speech both translate to improved ASR results in noisy and reverberant environments, and generalize well to real acoustic scenarios. The proposed system outperforms the baselines trained on corrupted speech directly. Furthermore, it cuts the previous best word error rate (WER) on CHiME-2 by 28.4% relative, reaching a 5.57% WER, and achieves 3.32%/4.44% WER on single-channel CHiME-4 simulated/real test data without training on CHiME-4.

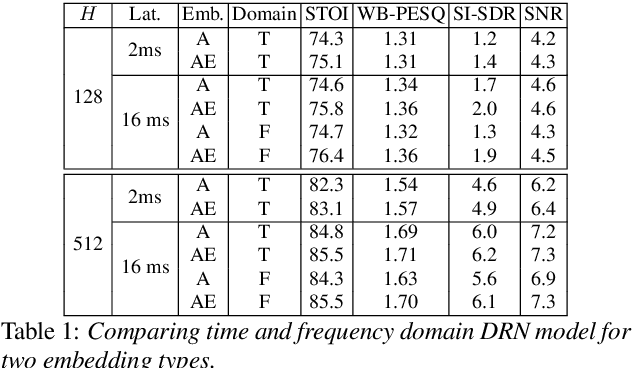

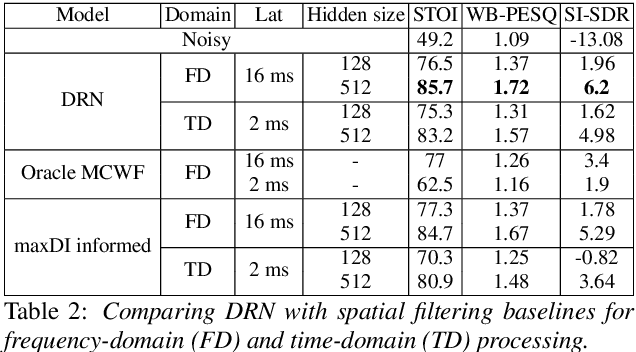

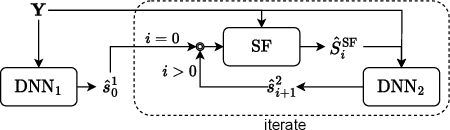

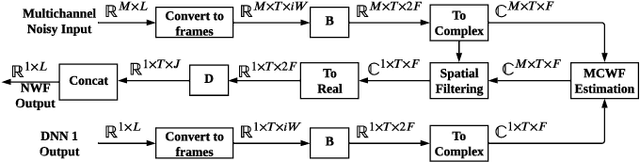

On the Importance of Neural Wiener Filter for Resource Efficient Multichannel Speech Enhancement

Jan 15, 2024

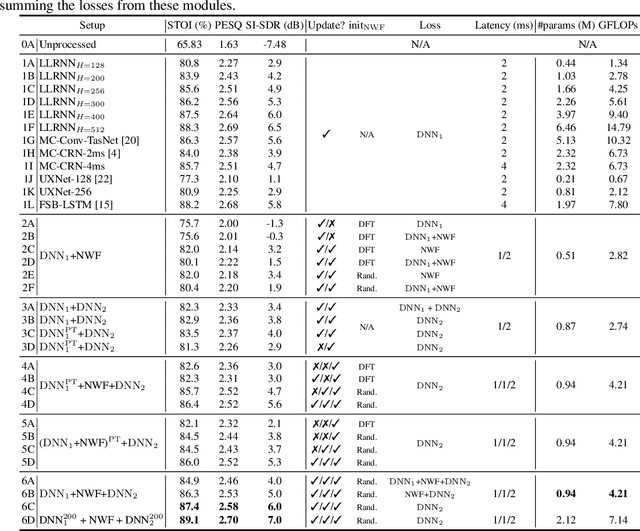

Abstract: We introduce a time-domain framework for efficient multichannel speech enhancement, emphasizing low latency and computational efficiency. This framework incorporates two compact deep neural networks (DNNs) surrounding a multichannel neural Wiener filter (NWF). The first DNN enhances the speech signal to estimate NWF coefficients, while the second DNN refines the output from the NWF. The NWF, while conceptually similar to the traditional frequency-domain Wiener filter, undergoes a training process optimized for low-latency speech enhancement, involving fine-tuning of both analysis and synthesis transforms. Our research results illustrate that the NWF output, having minimal nonlinear distortions, attains performance levels akin to those of the first DNN, deviating from conventional Wiener filter paradigms. Training all components jointly outperforms sequential training, despite its simplicity. Consequently, this framework achieves superior performance with fewer parameters and reduced computational demands, making it a compelling solution for resource-efficient multichannel speech enhancement.

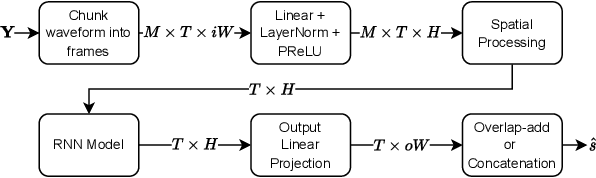

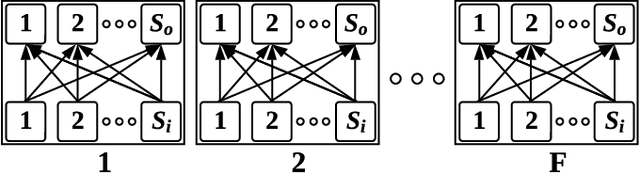

Decoupled Spatial and Temporal Processing for Resource Efficient Multichannel Speech Enhancement

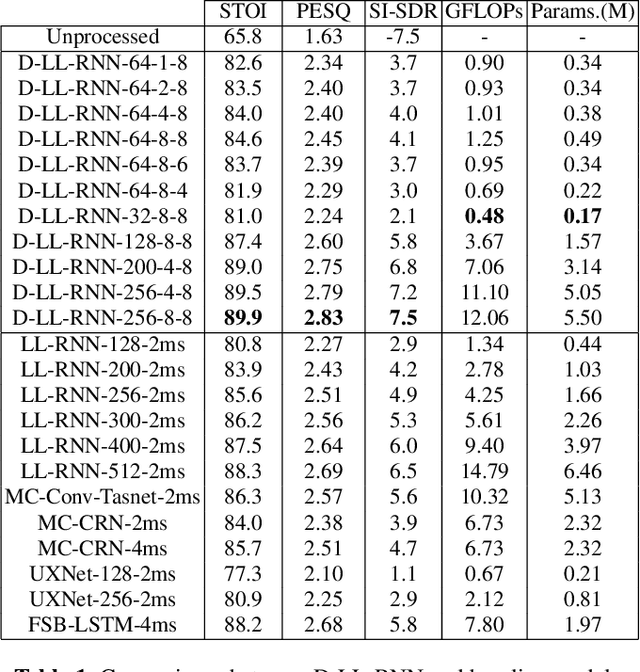

Jan 15, 2024

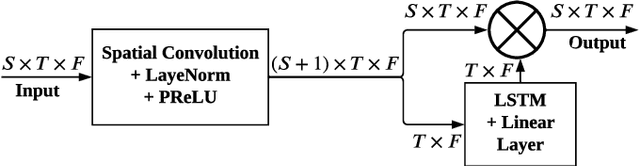

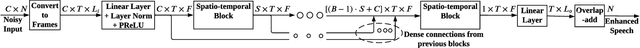

Abstract: We present a novel model designed for resource-efficient multichannel speech enhancement in the time domain, with a focus on low latency, a lightweight design, and low computational requirements. The proposed model incorporates explicit spatial and temporal processing within deep neural network (DNN) layers. Inspired by frequency-dependent multichannel filtering, our spatial filtering process applies multiple trainable filters to each hidden unit across the spatial dimension, resulting in a multichannel output. The temporal processing is applied over a single-channel output stream from the spatial processing using a Long Short-Term Memory (LSTM) network. The output from the temporal processing stage is then further integrated into the spatial dimension through elementwise multiplication. This explicit separation of spatial and temporal processing results in a resource-efficient network design. Empirical findings from our experiments show that our proposed model significantly outperforms robust baseline models while demanding far fewer parameters and computations, and achieves an ultra-low algorithmic latency of just 2 milliseconds.