Zhonghao Shi

HRIBench: Benchmarking Vision-Language Models for Real-Time Human Perception in Human-Robot Interaction

Jun 25, 2025

Abstract:Real-time human perception is crucial for effective human-robot interaction (HRI). Large vision-language models (VLMs) offer promising generalizable perceptual capabilities but often suffer from high latency, which negatively impacts user experience and limits VLM applicability in real-world scenarios. To systematically study VLM capabilities in human perception for HRI and performance-latency trade-offs, we introduce HRIBench, a visual question-answering (VQA) benchmark designed to evaluate VLMs across a diverse set of human perceptual tasks critical for HRI. HRIBench covers five key domains: (1) non-verbal cue understanding, (2) verbal instruction understanding, (3) human-robot object relationship understanding, (4) social navigation, and (5) person identification. To construct HRIBench, we collected data from real-world HRI environments to curate questions for non-verbal cue understanding, and leveraged publicly available datasets for the remaining four domains. We curated 200 VQA questions for each domain, resulting in a total of 1000 questions for HRIBench. We then conducted a comprehensive evaluation of both state-of-the-art closed-source and open-source VLMs (N=11) on HRIBench. Our results show that, despite their generalizability, current VLMs still struggle with core perceptual capabilities essential for HRI. Moreover, none of the models within our experiments demonstrated a satisfactory performance-latency trade-off suitable for real-time deployment, underscoring the need for future research on developing smaller, low-latency VLMs with improved human perception capabilities. HRIBench and our results can be found in this GitHub repository: https://github.com/interaction-lab/HRIBench.
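One way to reason about the performance-latency trade-off mentioned in the abstract is to look for models that are not dominated on both axes at once (a Pareto front). The sketch below is only an illustration of that idea: the `ModelResult` type, the `pareto_front` helper, the model names, and all numbers are hypothetical and are not taken from HRIBench or its repository.

```python
from typing import NamedTuple


class ModelResult(NamedTuple):
    name: str
    accuracy: float   # fraction of VQA questions answered correctly
    latency_s: float  # mean seconds per question


def pareto_front(results: list[ModelResult]) -> list[ModelResult]:
    """Return the models that no other model dominates.

    A model is dominated if another model is at least as accurate and at
    least as fast, and strictly better on one of the two measures.
    """
    front = []
    for r in results:
        dominated = any(
            o.accuracy >= r.accuracy
            and o.latency_s <= r.latency_s
            and (o.accuracy > r.accuracy or o.latency_s < r.latency_s)
            for o in results
        )
        if not dominated:
            front.append(r)
    # Sort from fastest to slowest for easier reading.
    return sorted(front, key=lambda r: r.latency_s)


# Hypothetical numbers for illustration only; not results from the paper.
results = [
    ModelResult("model_a", 0.70, 4.0),
    ModelResult("model_b", 0.55, 0.8),
    ModelResult("model_c", 0.50, 2.5),
    ModelResult("model_d", 0.70, 6.0),
]
front = pareto_front(results)
print([r.name for r in front])
```

Under these made-up numbers, `model_c` and `model_d` drop out because another model is at least as accurate and faster. Whether any model on the front is fast enough for real-time use is a separate question that depends on the application's latency budget.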

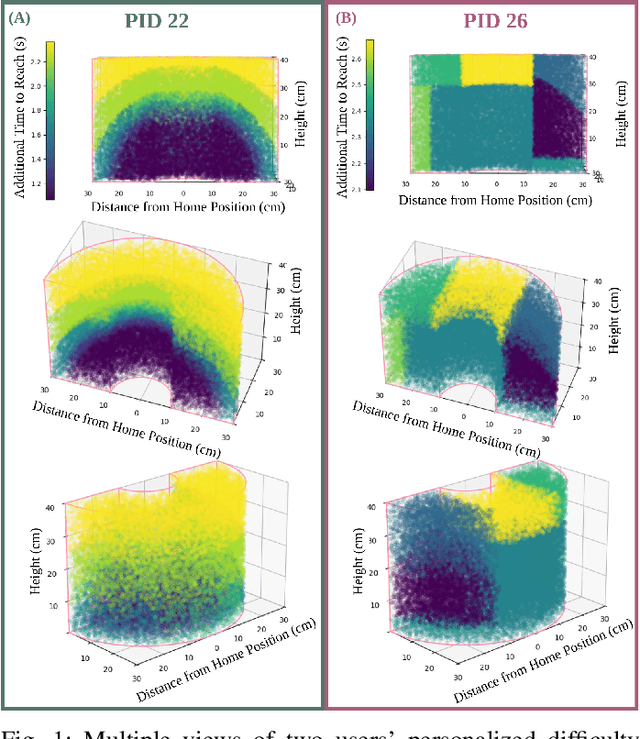

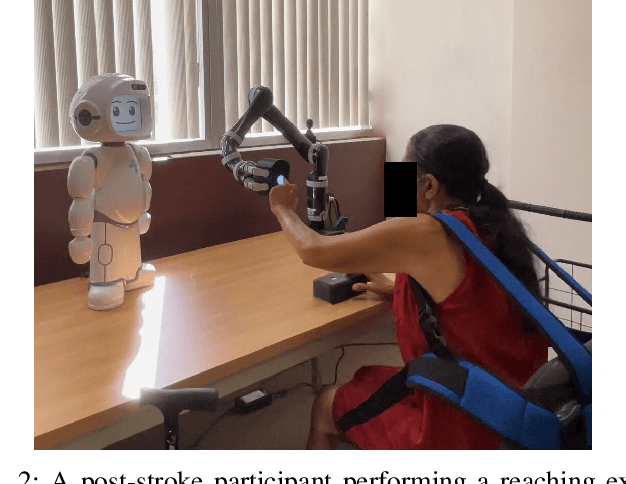

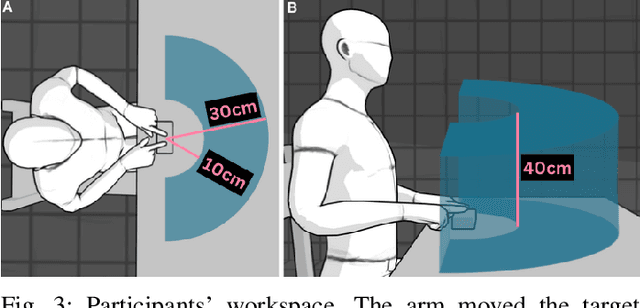

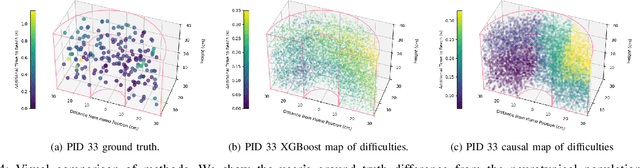

Modeling Personalized Difficulty of Rehabilitation Exercises Using Causal Trees

May 07, 2025

Abstract:Rehabilitation robots are often used in game-like interactions for rehabilitation to increase a person's motivation to complete rehabilitation exercises. By adjusting exercise difficulty for a specific user throughout the exercise interaction, robots can maximize both the user's rehabilitation outcomes and their motivation throughout the exercise. Previous approaches have assumed exercises have generic difficulty values that apply to all users equally; however, we identified that stroke survivors have varied and unique perceptions of exercise difficulty. For example, some stroke survivors found reaching vertically more difficult than reaching farther but lower, while others found reaching farther more challenging than reaching vertically. In this paper, we formulate a causal tree-based method to calculate exercise difficulty based on the user's performance. We find that this approach accurately models exercise difficulty and provides a readily interpretable model of why that exercise is difficult for both users and caretakers.

Improving User Experience in Preference-Based Optimization of Reward Functions for Assistive Robots

Nov 17, 2024

Abstract:Assistive robots interact with humans and must adapt to different users' preferences to be effective. An easy and effective technique to learn non-expert users' preferences is through rankings of robot behaviors, for example, robot movement trajectories or gestures. Existing techniques focus on generating trajectories for users to rank that maximize the outcome of the preference learning process. However, the generated trajectories do not appear to reflect the user's preference over repeated interactions. In this work, we design an algorithm to generate trajectories for users to rank that we call Covariance Matrix Adaptation Evolution Strategies with Information Gain (CMA-ES-IG). CMA-ES-IG prioritizes the user's experience of the preference learning process. We show that users find our algorithm more intuitive and easier to use than previous approaches across both physical and social robot tasks. This project's code is hosted at github.com/interaction-lab/CMA-ES-IG

How Can Large Language Models Enable Better Socially Assistive Human-Robot Interaction: A Brief Survey

Apr 05, 2024

Abstract:Socially assistive robots (SARs) have shown great success in providing personalized cognitive-affective support for user populations with special needs such as older adults, children with autism spectrum disorder (ASD), and individuals with mental health challenges. The large body of work on SAR demonstrates its potential to provide at-home support that complements clinic-based interventions delivered by mental health professionals, making these interventions more effective and accessible. However, there are still several major technical challenges that hinder SAR-mediated interactions and interventions from reaching human-level social intelligence and efficacy. With the recent advances in large language models (LLMs), there is an increased potential for novel applications within the field of SAR that can significantly expand the current capabilities of SARs. However, incorporating LLMs introduces new risks and ethical concerns that have not yet been encountered, and must be carefully addressed to safely deploy these more advanced systems. In this work, we aim to conduct a brief survey on the use of LLMs in SAR technologies, and discuss the potentials and risks of applying LLMs to the following three major technical challenges of SAR: 1) natural language dialog; 2) multimodal understanding; 3) LLMs as robot policies.

Evaluating and Personalizing User-Perceived Quality of Text-to-Speech Voices for Delivering Mindfulness Meditation with Different Physical Embodiments

Jan 07, 2024

Abstract:Mindfulness-based therapies have been shown to be effective in improving mental health, and technology-based methods have the potential to expand the accessibility of these therapies. To enable real-time personalized content generation for mindfulness practice in these methods, high-quality computer-synthesized text-to-speech (TTS) voices are needed to provide verbal guidance and respond to user performance and preferences. However, the user-perceived quality of state-of-the-art TTS voices has not yet been evaluated for administering mindfulness meditation, which requires emotional expressiveness. In addition, work has not yet been done to study the effect of physical embodiment and personalization on the user-perceived quality of TTS voices for mindfulness. To that end, we designed a two-phase human subject study. In Phase 1, an online Mechanical Turk between-subject study (N=471) evaluated 3 (feminine, masculine, child-like) state-of-the-art TTS voices with 2 (feminine, masculine) human therapists' voices in 3 different physical embodiment settings (no agent, conversational agent, socially assistive robot) with remote participants. Building on findings from Phase 1, in Phase 2, an in-person within-subject study (N=94), we used a novel framework we developed for personalizing TTS voices based on user preferences, and evaluated user-perceived quality compared to best-rated non-personalized voices from Phase 1. We found that the best-rated human voice was perceived better than all TTS voices; the emotional expressiveness and naturalness of TTS voices were poorly rated, while users were satisfied with the clarity of TTS voices. Surprisingly, by allowing users to fine-tune TTS voice features, the user-personalized TTS voices could perform almost as well as human voices, suggesting user personalization could be a simple and very effective tool to improve user-perceived quality of TTS voice.

Designing a Socially Assistive Robot to Support Older Adults with Low Vision

Jan 06, 2024

Abstract:Socially assistive robots (SARs) have shown great promise in supplementing and augmenting interventions to support the physical and mental well-being of older adults. However, past work has not yet explored the potential of applying SAR to lower the barriers of long-term low vision rehabilitation (LVR) interventions for older adults. In this work, we present a user-informed design process to validate the motivation and identify major design principles for developing SAR for long-term LVR. To evaluate user-perceived usefulness and acceptance of SAR in this novel domain, we performed a two-phase study through user surveys. First, a group (n=38) of older adults with LV completed a mailed-in survey. Next, a new group (n=13) of older adults with LV saw an in-clinic SAR demo and then completed the survey. The study participants reported that SARs would be useful, trustworthy, easy to use, and enjoyable while providing socio-emotional support to augment LVR interventions. The in-clinic demo group reported significantly more positive opinions of the SAR's capabilities than did the baseline survey group that used mailed-in forms without the SAR demo.

MaSS: Multi-attribute Selective Suppression

Oct 18, 2022

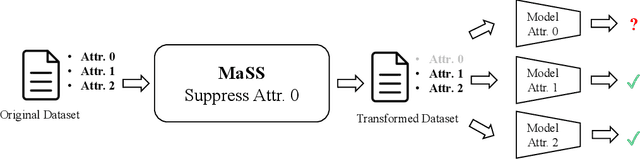

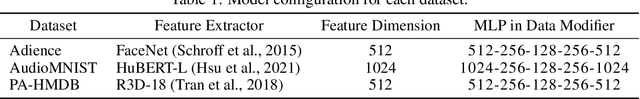

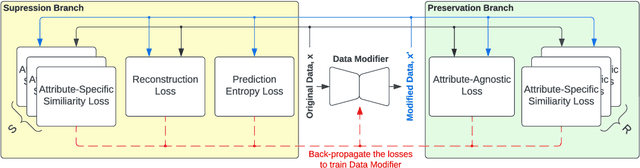

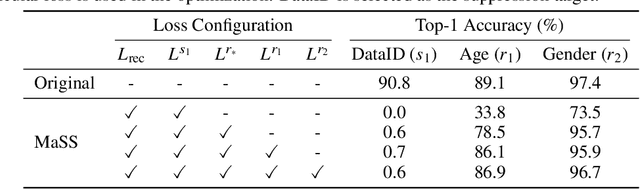

Abstract:The recent rapid advances in machine learning technologies largely depend on the vast richness of data available today, in terms of both the quantity and the rich content contained within. For example, biometric data such as images and voices could reveal people's attributes like age, gender, sentiment, and origin, whereas location/motion data could be used to infer people's activity levels, transportation modes, and life habits. Along with the new services and applications enabled by such technological advances, various governmental policies are put in place to regulate such data usage and protect people's privacy and rights. As a result, data owners often opt for simple data obfuscation (e.g., blur people's faces in images) or withholding data altogether, which leads to severe data quality degradation and greatly limits the data's potential utility. Aiming for a sophisticated mechanism which gives data owners fine-grained control while retaining the maximal degree of data utility, we propose Multi-attribute Selective Suppression, or MaSS, a general framework for performing precisely targeted data surgery to simultaneously suppress any selected set of attributes while preserving the rest for downstream machine learning tasks. MaSS learns a data modifier through adversarial games between two sets of networks, where one is aimed at suppressing selected attributes, and the other ensures the retention of the rest of the attributes via general contrastive loss as well as explicit classification metrics. We carried out an extensive evaluation of our proposed method using multiple datasets from different domains including facial images, voice audio, and video clips, and obtained promising results in MaSS' generalizability and capability of suppressing targeted attributes without negatively affecting the data's usability in other downstream ML tasks.

Personalized Affect-Aware Socially Assistive Robot Tutors Aimed at Fostering Social Grit in Children with Autism

Mar 29, 2021

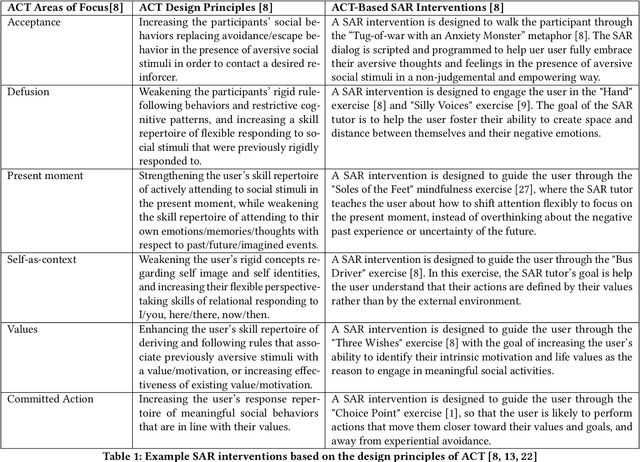

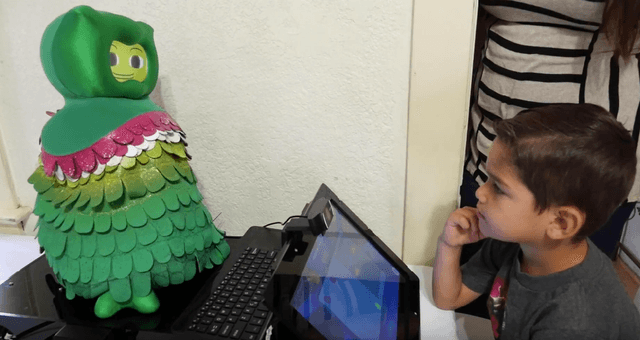

Abstract:Affect-aware socially assistive robotics (SAR) tutors have great potential to augment and democratize professional therapeutic interventions for children with autism spectrum disorders (ASD) from different socioeconomic backgrounds. However, the majority of research on SAR for ASD has been on teaching cognitive and/or social skills, not on addressing users' emotional needs for real-world social situations. To bridge that gap, this work aims to develop personalized affect-aware SAR tutors to help alleviate social anxiety and foster social grit (the growth mindset for social skill development) in children with ASD. We propose a novel paradigm to incorporate clinically validated Acceptance and Commitment Training (ACT) with personalized SAR interventions. This work paves the way toward developing personalized affect-aware SAR interventions to support the unique and diverse socio-emotional needs and challenges of children with ASD.

Toward Personalized Affect-Aware Socially Assistive Robot Tutors in Long-Term Interventions for Children with Autism

Jan 30, 2021

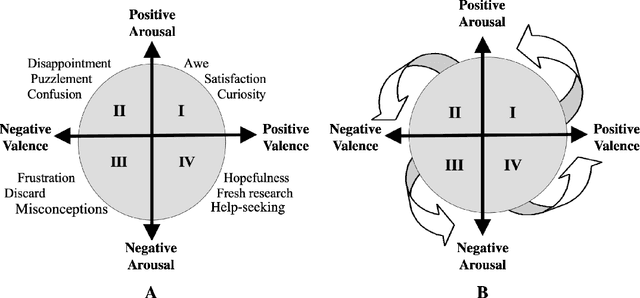

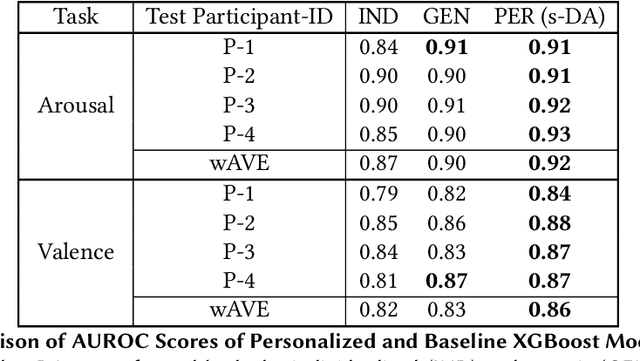

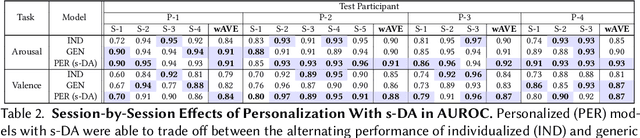

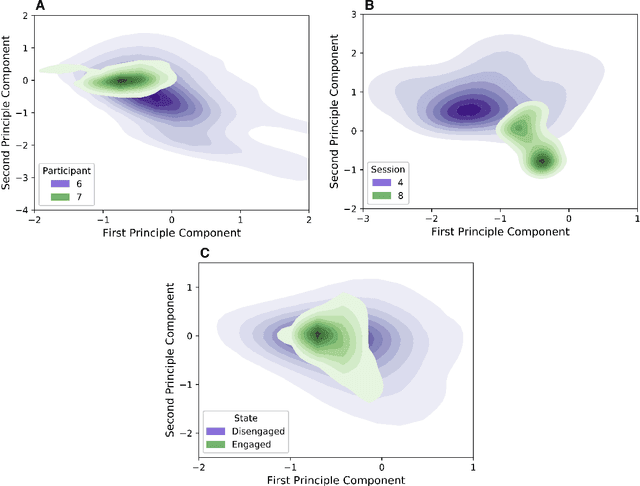

Abstract:Affect-aware socially assistive robotics (SAR) has shown great potential for augmenting interventions for children with autism spectrum disorders (ASD). However, current SAR cannot yet perceive the unique and diverse set of atypical cognitive-affective behaviors from children with ASD in an automatic and personalized fashion in long-term (multi-session) real-world interactions. To bridge this gap, this work designed and validated personalized models of arousal and valence for children with ASD using a multi-session in-home dataset of SAR interventions. By training machine learning (ML) algorithms with supervised domain adaptation (s-DA), the personalized models were able to trade off between the limited individual data and the more abundant less personal data pooled from other study participants. We evaluated the effects of personalization on a long-term multimodal dataset consisting of 4 children with ASD with a total of 19 sessions, and derived inter-rater reliability (IR) scores for binary arousal (IR = 83%) and valence (IR = 81%) labels between human annotators. Our results show that personalized Gradient Boosted Decision Trees (XGBoost) models with s-DA outperformed two non-personalized individualized and generic model baselines not only on the weighted average of all sessions, but also statistically (p < .05) across individual sessions. This work paves the way for the development of personalized autonomous SAR systems tailored toward individuals with atypical cognitive-affective and socio-emotional needs.

Modeling Engagement in Long-Term, In-Home Socially Assistive Robot Interventions for Children with Autism Spectrum Disorders

Feb 06, 2020

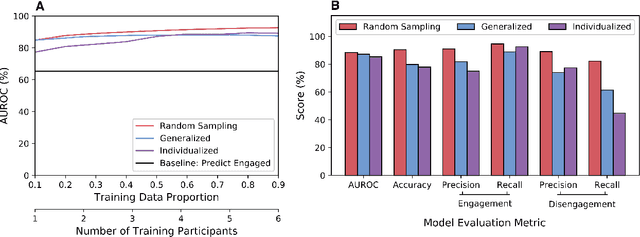

Abstract:Socially assistive robotics (SAR) has great potential to provide accessible, affordable, and personalized therapeutic interventions for children with autism spectrum disorders (ASD). However, human-robot interaction (HRI) methods are still limited in their ability to autonomously recognize and respond to behavioral cues, especially in atypical users and everyday settings. This work applies supervised machine learning algorithms to model user engagement in the context of long-term, in-home SAR interventions for children with ASD. Specifically, two types of engagement models are presented for each user: 1) generalized models trained on data from different users; and 2) individualized models trained on an early subset of the user's data. The models achieved approximately 90% accuracy (AUROC) for post hoc binary classification of engagement, despite the high variance in data observed across users, sessions, and engagement states. Moreover, temporal patterns in model predictions could be used to reliably initiate re-engagement actions at appropriate times. These results validate the feasibility and challenges of recognition and response to user disengagement in long-term, real-world HRI settings. The contributions of this work also inform the design of engaging and personalized HRI, especially for the ASD community.
