Mina Kian

Mechanistic Interpretability of Emotion Inference in Large Language Models

Feb 08, 2025

Abstract: Large language models (LLMs) show promising capabilities in predicting human emotions from text. However, the mechanisms through which these models process emotional stimuli remain largely unexplored. Our study addresses this gap by investigating how autoregressive LLMs infer emotions, showing that emotion representations are functionally localized to specific regions in the model. Our evaluation includes diverse model families and sizes and is supported by robustness checks. We then show that the identified representations are psychologically plausible by drawing on cognitive appraisal theory, a well-established psychological framework positing that emotions emerge from evaluations (appraisals) of environmental stimuli. By causally intervening on construed appraisal concepts, we steer the generation and show that the outputs align with theoretical and intuitive expectations. This work highlights a novel way to causally intervene and precisely shape emotional text generation, potentially benefiting safety and alignment in sensitive affective domains.

How Can Large Language Models Enable Better Socially Assistive Human-Robot Interaction: A Brief Survey

Apr 05, 2024

Abstract: Socially assistive robots (SARs) have shown great success in providing personalized cognitive-affective support for user populations with special needs such as older adults, children with autism spectrum disorder (ASD), and individuals with mental health challenges. The large body of work on SAR demonstrates its potential to provide at-home support that complements clinic-based interventions delivered by mental health professionals, making these interventions more effective and accessible. However, there are still several major technical challenges that hinder SAR-mediated interactions and interventions from reaching human-level social intelligence and efficacy. With the recent advances in large language models (LLMs), there is an increased potential for novel applications within the field of SAR that can significantly expand the current capabilities of SARs. However, incorporating LLMs introduces new risks and ethical concerns that have not yet been encountered and that must be carefully addressed to safely deploy these more advanced systems. In this work, we aim to conduct a brief survey on the use of LLMs in SAR technologies, and discuss the potentials and risks of applying LLMs to the following three major technical challenges of SAR: 1) natural language dialog; 2) multimodal understanding; 3) LLMs as robot policies.

Designing Robot Identity: The Role of Voice, Clothing, and Task on Robot Gender Perception

Mar 30, 2024

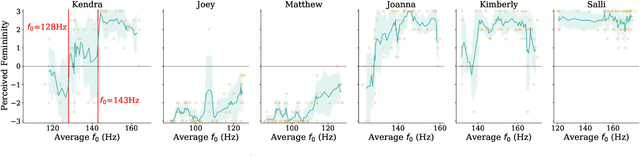

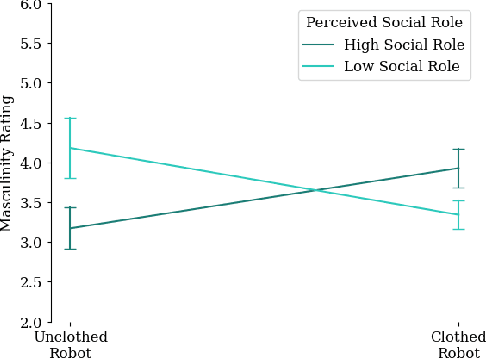

Abstract: Perceptions of gender are a significant aspect of human-human interaction, and gender has wide-reaching social implications for robots deployed in contexts where they are expected to interact with humans. This work explored two flexible modalities for communicating gender in robots (voice and appearance), and we studied their individual and combined influences on a robot's perceived gender. We evaluated the perception of a robot's gender through three video-based studies. First, we conducted a study (n=65) on the gender perception of robot voices by varying speaker identity and pitch. Second, we conducted a study (n=93) on the gender perception of robot clothing designed for two different tasks. Finally, building on the results of the first two studies, we completed a large integrative video-based study (n=273) involving two human-robot interaction tasks. We found that voice and clothing can be used to reliably establish a robot's perceived gender, and that combining these two modalities can have different effects on the robot's perceived gender. Taken together, these results inform the design of robot voices and clothing as individual and interacting components in the perceptions of robot gender.
