Uksang Yoo

Modeling Personalized Difficulty of Rehabilitation Exercises Using Causal Trees

May 07, 2025

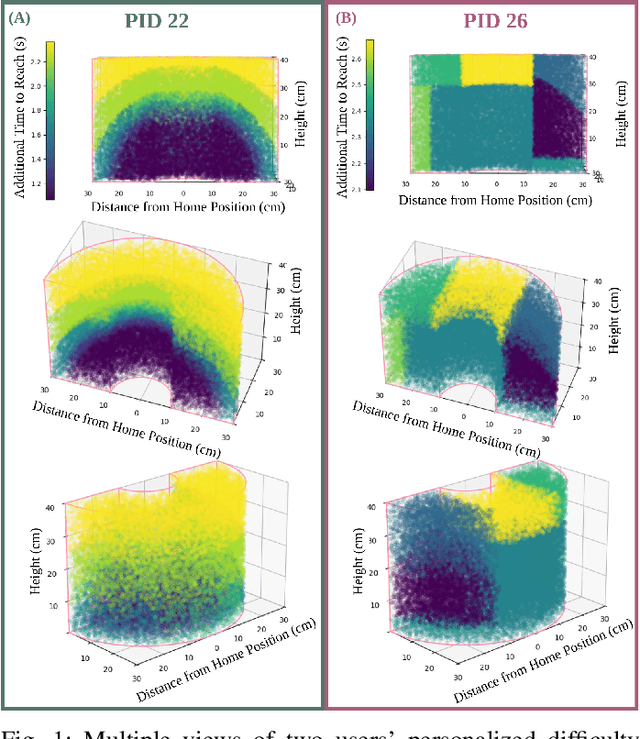

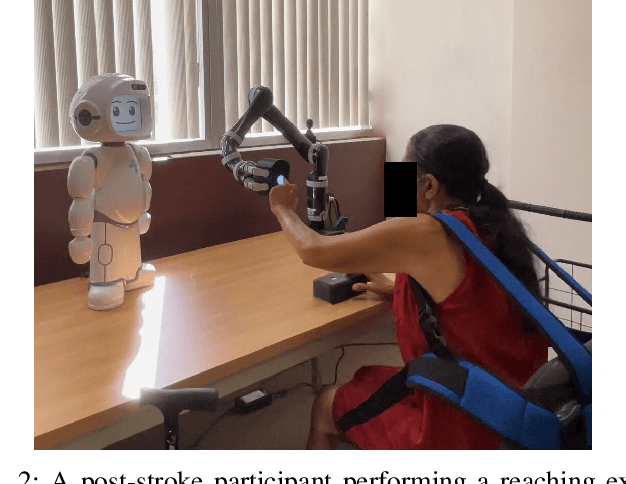

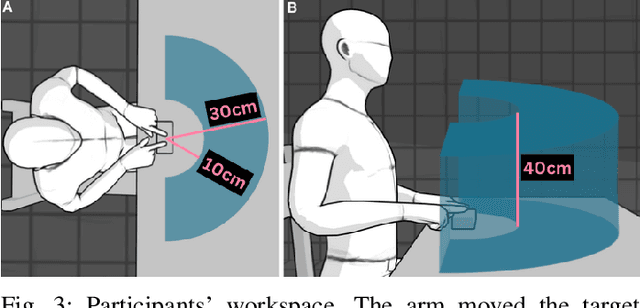

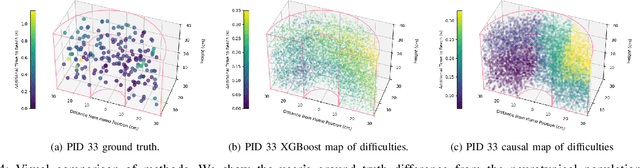

Abstract:Rehabilitation robots are often used in game-like interactions for rehabilitation to increase a person's motivation to complete rehabilitation exercises. By adjusting exercise difficulty for a specific user throughout the exercise interaction, robots can maximize both the user's rehabilitation outcomes and their motivation throughout the exercise. Previous approaches have assumed exercises have generic difficulty values that apply to all users equally; however, we identified that stroke survivors have varied and unique perceptions of exercise difficulty. For example, some stroke survivors found reaching vertically more difficult than reaching farther but lower, while others found reaching farther more challenging than reaching vertically. In this paper, we formulate a causal tree-based method to calculate exercise difficulty based on the user's performance. We find that this approach accurately models exercise difficulty and provides a readily interpretable model of why that exercise is difficult for both users and caretakers.

KineSoft: Learning Proprioceptive Manipulation Policies with Soft Robot Hands

Mar 03, 2025

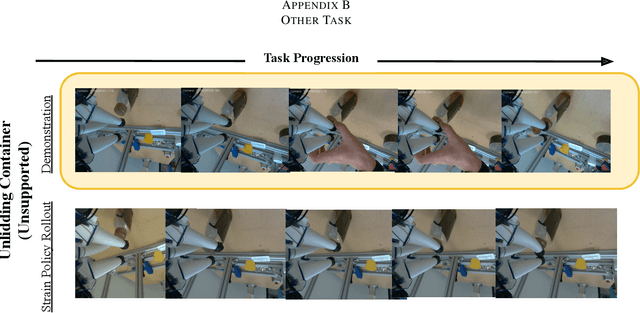

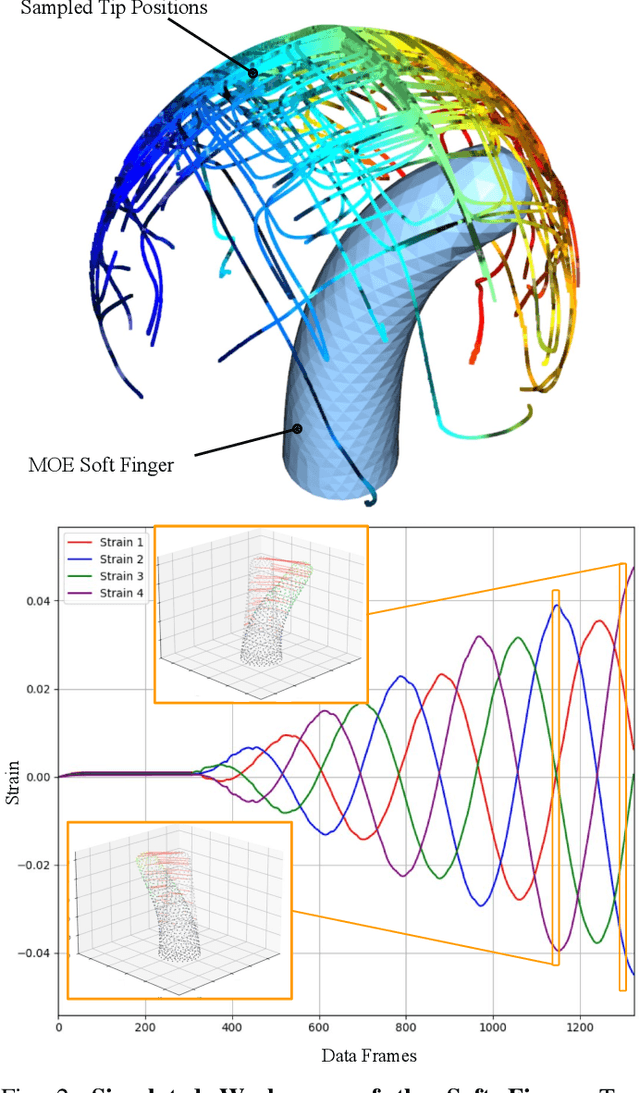

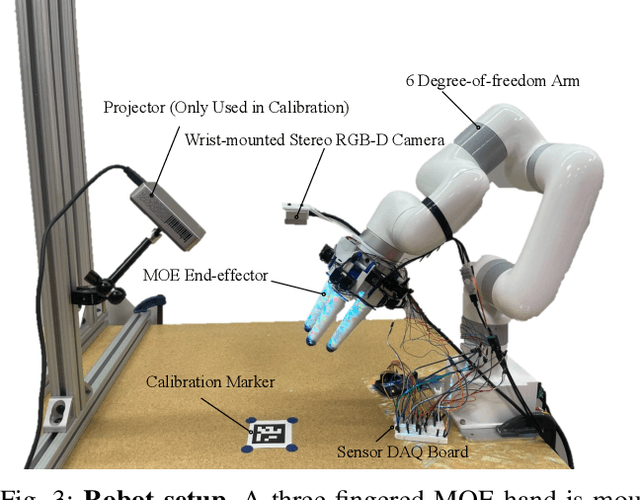

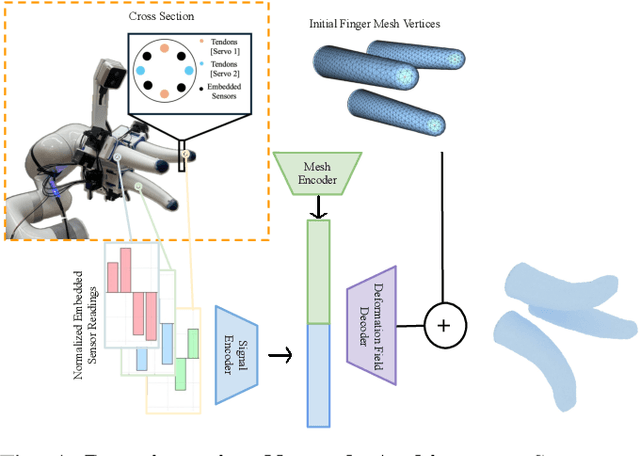

Abstract:Underactuated soft robot hands offer inherent safety and adaptability advantages over rigid systems, but developing dexterous manipulation skills remains challenging. While imitation learning shows promise for complex manipulation tasks, traditional approaches struggle with soft systems due to demonstration collection challenges and ineffective state representations. We present KineSoft, a framework enabling direct kinesthetic teaching of soft robotic hands by leveraging their natural compliance as a skill teaching advantage rather than only as a control challenge. KineSoft makes two key contributions: (1) an internal strain sensing array providing occlusion-free proprioceptive shape estimation, and (2) a shape-based imitation learning framework that uses proprioceptive feedback with a low-level shape-conditioned controller to ground diffusion-based policies. This enables human demonstrators to physically guide the robot while the system learns to associate proprioceptive patterns with successful manipulation strategies. We validate KineSoft through physical experiments, demonstrating superior shape estimation accuracy compared to baseline methods, precise shape-trajectory tracking, and higher task success rates compared to baseline imitation learning approaches.

Soft and Compliant Contact-Rich Hair Manipulation and Care

Jan 05, 2025

Abstract:Hair care robots can help address labor shortages in elderly care while enabling those with limited mobility to maintain their hair-related identity. We present MOE-Hair, a soft robot system that performs three hair-care tasks: head patting, finger combing, and hair grasping. The system features a tendon-driven soft robot end-effector (MOE) with a wrist-mounted RGBD camera, leveraging both mechanical compliance for safety and visual force sensing through deformation. In testing with a force-sensorized mannequin head, MOE achieved comparable hair-grasping effectiveness while applying significantly less force than rigid grippers. Our novel force estimation method combines visual deformation data and tendon tensions from actuators to infer applied forces, reducing sensing errors by up to 60.1% and 20.3% compared to actuator current load-only and depth image-only baselines, respectively. A user study with 12 participants demonstrated statistically significant preferences for MOE-Hair over a baseline system in terms of comfort, effectiveness, and appropriate force application. These results demonstrate the unique advantages of soft robots in contact-rich hair-care tasks, while highlighting the importance of precise force control despite the inherent compliance of the system.

SonicBoom: Contact Localization Using Array of Microphones

Dec 13, 2024

Abstract:In cluttered environments where visual sensors encounter heavy occlusion, such as in agricultural settings, tactile signals can provide crucial spatial information for the robot to locate rigid objects and maneuver around them. We introduce SonicBoom, a holistic hardware and learning pipeline that enables contact localization through an array of contact microphones. While conventional sound source localization methods effectively triangulate sources in air, localization through solid media with irregular geometry and structure presents challenges that are difficult to model analytically. We address this challenge through a feature-engineering and learning-based approach, autonomously collecting 18,000 robot interaction sound pairs to learn a mapping between acoustic signals and collision locations on the robot end effector link. By leveraging relative features between microphones, SonicBoom achieves localization errors of 0.42 cm for in-distribution interactions and maintains robust performance of 2.22 cm error even with novel objects and contact conditions. We demonstrate the system's practical utility through haptic mapping of occluded branches in mock canopy settings, showing that acoustic-based sensing can enable reliable robot navigation in visually challenging environments.

Soft Robotic Dynamic In-Hand Pen Spinning

Nov 19, 2024

Abstract:Dynamic in-hand manipulation remains a challenging task for soft robotic systems that have demonstrated advantages in safe compliant interactions but struggle with high-speed dynamic tasks. In this work, we present SWIFT, a system for learning dynamic tasks using a soft and compliant robotic hand. Unlike previous works that rely on simulation, quasi-static actions and precise object models, the proposed system learns to spin a pen through trial-and-error using only real-world data without requiring explicit prior knowledge of the pen's physical attributes. With self-labeled trials sampled from the real world, the system discovers the set of pen grasping and spinning primitive parameters that enables a soft hand to spin a pen robustly and reliably. After 130 sampled actions per object, SWIFT achieves 100% success rate across three pens with different weights and weight distributions, demonstrating the system's generalizability and robustness to changes in object properties. The results highlight the potential for soft robotic end-effectors to perform dynamic tasks including rapid in-hand manipulation. We also demonstrate that SWIFT generalizes to spinning items with different shapes and weights such as a brush and a screwdriver which we spin with 10/10 and 5/10 success rates respectively. Videos, data, and code are available at https://soft-spin.github.io.

Inclusion in Assistive Haircare Robotics: Practical and Ethical Considerations in Hair Manipulation

Nov 07, 2024

Abstract:Robot haircare systems could provide a controlled and personalized environment that is respectful of an individual's sensitivities and may offer a comfortable experience. We argue that because of hair and hairstyles' often unique importance in defining and expressing an individual's identity, we should approach the development of assistive robot haircare systems carefully while considering various practical and ethical concerns and risks. In this work, we specifically list and discuss the consideration of hair type, expression of the individual's preferred identity, cost accessibility of the system, culturally-aware robot strategies, and the associated societal risks. Finally, we discuss the planned studies that will allow us to better understand and address the concerns and considerations we outlined in this work through interactions with both haircare experts and end-users. Through these practical and ethical considerations, this work seeks to systematically organize and provide guidance for the development of inclusive and ethical robot haircare systems.

RoPotter: Toward Robotic Pottery and Deformable Object Manipulation with Structural Priors

Aug 05, 2024

Abstract:Humans are capable of continuously manipulating a wide variety of deformable objects into complex shapes. This is made possible by our intuitive understanding of material properties and mechanics of the object, for reasoning about object states even when visual perception is occluded. These capabilities allow us to perform diverse tasks ranging from cooking with dough to expressing ourselves with pottery-making. However, developing robotic systems to robustly perform similar tasks remains challenging, as current methods struggle to effectively model volumetric deformable objects and reason about the complex behavior they typically exhibit. To study the robotic systems and algorithms capable of deforming volumetric objects, we introduce a novel robotics task of continuously deforming clay on a pottery wheel. We propose a pipeline for perception and pottery skill-learning, called RoPotter, wherein we demonstrate that structural priors specific to the task of pottery-making can be exploited to simplify the pottery skill-learning process. Namely, we can project the cross-section of the clay to a plane to represent the state of the clay, reducing dimensionality. We also demonstrate a mesh-based method of occluded clay state recovery, toward robotic agents capable of continuously deforming clay. Our experiments show that by using the reduced representation with structural priors based on the deformation behaviors of the clay, RoPotter can perform the long-horizon pottery task with 44.4% lower final shape error compared to the state-of-the-art baselines.

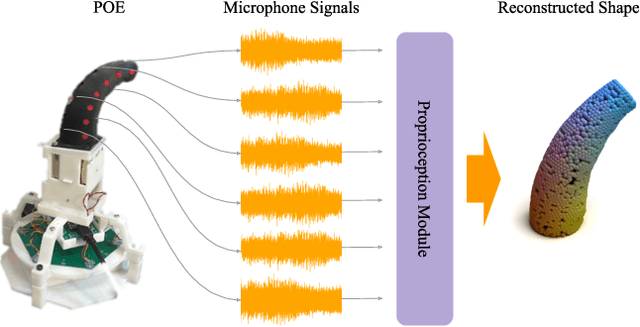

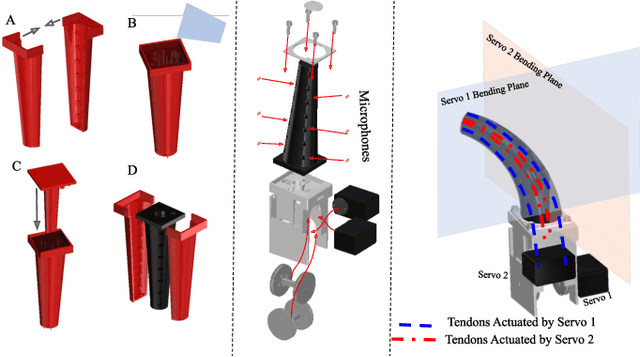

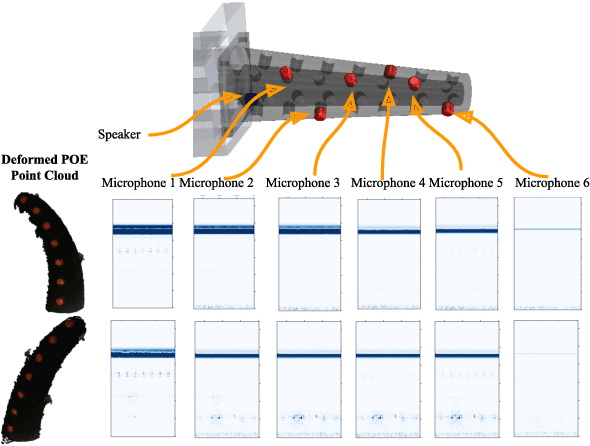

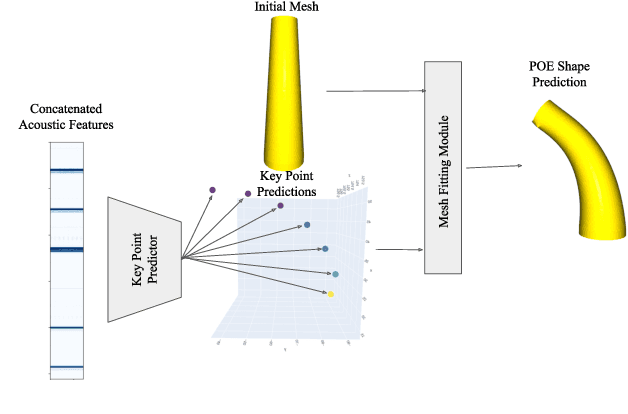

POE: Acoustic Soft Robotic Proprioception for Omnidirectional End-effectors

Jan 17, 2024

Abstract:Soft robotic shape estimation and proprioception are challenging because of soft robots' complex deformation behaviors and infinite degrees of freedom. A soft robot's continuously deforming body makes it difficult to integrate rigid sensors and to reliably estimate its shape. In this work, we present Proprioceptive Omnidirectional End-effector (POE), which has six embedded microphones across the tendon-driven soft robot's surface. We first introduce novel applications of previously proposed 3D reconstruction methods to acoustic signals from the microphones for soft robot shape proprioception. To improve the proprioception pipeline's training efficiency and model prediction consistency, we present POE-M. POE-M first predicts key point positions from the acoustic signal observations with the embedded microphone array. Then we utilize an energy-minimization method to reconstruct a physically admissible high-resolution mesh of POE given the estimated key points. We evaluate the mesh reconstruction module with simulated data and the full POE-M pipeline with real-world experiments. We demonstrate that POE-M's explicit guidance of the key points during the mesh reconstruction process provides robustness and stability to the pipeline with ablation studies. POE-M reduced the maximum Chamfer distance error by 23.10% compared to the state-of-the-art end-to-end soft robot proprioception models and achieved 4.91 mm average Chamfer distance error during evaluation.
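For readers unfamiliar with the Chamfer distance reported in the abstract above, the following is a minimal sketch of one common convention: the symmetric mean of nearest-neighbor distances between two point sets. The abstract does not state which variant the paper uses (summed vs. averaged, squared vs. unsquared), so the function name `chamfer_distance`, the averaging choice, and the toy point sets are all assumptions made for illustration.

```python
import numpy as np


def chamfer_distance(a, b):
    """Symmetric mean nearest-neighbor distance between point sets a (N, 3) and b (M, 3).

    Assumed convention: unsquared Euclidean distances, averaged per direction,
    then the two directions are averaged. Other papers may sum or square instead.
    """
    a = np.asarray(a, dtype=float)
    b = np.asarray(b, dtype=float)
    # Pairwise distance matrix of shape (N, M) via broadcasting.
    dists = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=-1)
    a_to_b = dists.min(axis=1).mean()  # each point in a to its nearest point in b
    b_to_a = dists.min(axis=0).mean()  # each point in b to its nearest point in a
    return 0.5 * (a_to_b + b_to_a)


# Toy example (hypothetical data, in mm): a reference shape and a copy shifted by 3 mm.
predicted = np.array([[0.0, 0.0, 0.0], [10.0, 0.0, 0.0], [20.0, 0.0, 0.0]])
reference = predicted + np.array([0.0, 3.0, 0.0])
print(chamfer_distance(predicted, reference))
```

The dense pairwise matrix is fine for small point sets; for high-resolution meshes or point clouds a spatial index such as a k-d tree would likely be preferable.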

Toward Zero-Shot Sim-to-Real Transfer Learning for Pneumatic Soft Robot 3D Proprioceptive Sensing

Mar 08, 2023

Abstract:Pneumatic soft robots present many advantages in manipulation tasks. Notably, their inherent compliance makes them safe and reliable in unstructured and fragile environments. However, full-body shape sensing for pneumatic soft robots is challenging because of their high degrees of freedom and complex deformation behaviors. Vision-based proprioception sensing methods relying on embedded cameras and deep learning provide a good solution to proprioception sensing by extracting the full-body shape information from the high-dimensional sensing data. But the current training data collection process makes it difficult for many applications. To address this challenge, we propose and demonstrate a robust sim-to-real pipeline that allows the collection of the soft robot's shape information in high-fidelity point cloud representation. The model trained on simulated data was evaluated with real internal camera images. The results show that the model performed with averaged Chamfer distance of 8.85 mm and tip position error of 10.12 mm even with external perturbation for a pneumatic soft robot with a length of 100.0 mm. We also demonstrated the sim-to-real pipeline's potential for exploring different configurations of visual patterns to improve vision-based reconstruction results. The code and dataset are available at https://github.com/DeepSoRo/DeepSoRoSim2Real.
