Moonyoung Lee

StageACT: Stage-Conditioned Imitation for Robust Humanoid Door Opening

Sep 16, 2025

Abstract: Humanoid robots promise to operate in everyday human environments without requiring modifications to the surroundings. Among the many skills needed, opening doors is essential, as doors are the most common gateways in built spaces and often limit where a robot can go. Door opening, however, poses unique challenges as it is a long-horizon task under partial observability, such as reasoning about the door's unobservable latch state that dictates whether the robot should rotate the handle or push the door. This ambiguity makes standard behavior cloning prone to mode collapse, yielding blended or out-of-sequence actions. We introduce StageACT, a stage-conditioned imitation learning framework that augments low-level policies with task-stage inputs. This effective addition increases robustness to partial observability, leading to higher success rates and shorter completion times. On a humanoid operating in a real-world office environment, StageACT achieves a 55% success rate on previously unseen doors, more than doubling the best baseline. Moreover, our method supports intentional behavior guidance through stage prompting, enabling recovery behaviors. These results highlight stage conditioning as a lightweight yet powerful mechanism for long-horizon humanoid loco-manipulation.

Hearing the Slide: Acoustic-Guided Constraint Learning for Fast Non-Prehensile Transport

Jun 10, 2025

Abstract: Object transport tasks are fundamental in robotic automation, emphasizing the importance of efficient and secure methods for moving objects. Non-prehensile transport can significantly improve transport efficiency, as it enables handling multiple objects simultaneously and accommodating objects unsuitable for parallel-jaw or suction grasps. Existing approaches incorporate constraints based on the Coulomb friction model, which is imprecise during fast motions where inherent mechanical vibrations occur. Imprecise constraints can cause transported objects to slide or even fall off the tray. To address this limitation, we propose a novel method to learn a friction model using acoustic sensing that maps a tray's motion profile to a dynamically conditioned friction coefficient. This learned model enables an optimization-based motion planner to adjust the friction constraint at each control step according to the planned motion at that step. In experiments, we generate time-optimized trajectories for a UR5e robot to transport various objects with constraints using both the standard Coulomb friction model and the learned friction model. Results suggest that the learned friction model reduces object displacement by up to 86.0% compared to the baseline, highlighting the effectiveness of acoustic sensing in learning real-world friction constraints.

Audio-Visual Contact Classification for Tree Structures in Agriculture

May 19, 2025

Abstract: Contact-rich manipulation tasks in agriculture, such as pruning and harvesting, require robots to physically interact with tree structures to maneuver through cluttered foliage. Identifying whether the robot is contacting rigid or soft materials is critical for the downstream manipulation policy to be safe, yet vision alone is often insufficient due to occlusion and limited viewpoints in this unstructured environment. To address this, we propose a multi-modal classification framework that fuses vibrotactile (audio) and visual inputs to identify the contact class: leaf, twig, trunk, or ambient. Our key insight is that contact-induced vibrations carry material-specific signals, making audio effective for detecting contact events and distinguishing material types, while visual features add complementary semantic cues that support more fine-grained classification. We collect training data using a hand-held sensor probe and demonstrate zero-shot generalization to a robot-mounted probe embodiment, achieving an F1 score of 0.82. These results underscore the potential of audio-visual learning for manipulation in unstructured, contact-rich environments.

SonicBoom: Contact Localization Using Array of Microphones

Dec 13, 2024

Abstract: In cluttered environments where visual sensors encounter heavy occlusion, such as in agricultural settings, tactile signals can provide crucial spatial information for the robot to locate rigid objects and maneuver around them. We introduce SonicBoom, a holistic hardware and learning pipeline that enables contact localization through an array of contact microphones. While conventional sound source localization methods effectively triangulate sources in air, localization through solid media with irregular geometry and structure presents challenges that are difficult to model analytically. We address this challenge through a feature-engineering and learning-based approach, autonomously collecting 18,000 robot interaction sound pairs to learn a mapping between acoustic signals and collision locations on the robot end effector link. By leveraging relative features between microphones, SonicBoom achieves localization errors of 0.42 cm for in-distribution interactions and maintains robust performance of 2.22 cm error even with novel objects and contact conditions. We demonstrate the system's practical utility through haptic mapping of occluded branches in mock canopy settings, showing that acoustic-based sensing can enable reliable robot navigation in visually challenging environments.

Hefty: A Modular Reconfigurable Robot for Advancing Robot Manipulation in Agriculture

Feb 28, 2024

Abstract: This paper presents a modular, reconfigurable robot platform for robot manipulation in agriculture. While robot manipulation promises great advancements in automating challenging, complex tasks that are currently best left to humans, it is also an expensive capital investment for researchers and users because it demands significantly varying robot configurations depending on the task. Modular robots provide a way to obtain multiple configurations and reduce costs by enabling incremental acquisition of only the necessary modules. The robot we present, Hefty, is designed to be modular and reconfigurable. It is designed for both researchers and end-users as a means to improve technology transfer from research to real-world application. This paper provides a detailed design and integration process, outlining the critical design decisions that enable modularity in the mobility of the robot as well as its sensor payload, power systems, computing, and fixture mounting. We demonstrate the utility of the robot by presenting five configurations used in multiple real-world agricultural robotics applications.

Task-Oriented Active Learning of Model Preconditions for Inaccurate Dynamics Models

Jan 08, 2024

Abstract: When planning with an inaccurate dynamics model, a practical strategy is to restrict planning to regions of state-action space where the model is accurate, a region known as a model precondition. Empirical real-world trajectory data is valuable for defining data-driven model preconditions regardless of the model form (analytical, simulator, learned, etc.). However, real-world data is often expensive and dangerous to collect. In order to achieve data efficiency, this paper presents an algorithm for actively selecting trajectories to learn a model precondition for an inaccurate pre-specified dynamics model. Our proposed techniques address challenges arising from the sequential nature of trajectories and the potential benefit of prioritizing task-relevant data. The experimental analysis shows how algorithmic properties affect performance in three planning scenarios: icy gridworld, simulated plant watering, and real-world plant watering. Results demonstrate an improvement of approximately 80% after only four real-world trajectories when using our proposed techniques.

Towards Robotic Tree Manipulation: Leveraging Graph Representations

Nov 13, 2023

Abstract: There is growing interest in automating agricultural tasks that require intricate and precise interaction with specialty crops, such as trees and vines. However, developing robotic solutions for crop manipulation remains a difficult challenge due to complexities involved in modeling their deformable behavior. In this study, we present a framework for learning the deformation behavior of tree-like crops under contact interaction. Our proposed method involves encoding the state of a spring-damper modeled tree crop as a graph. This representation allows us to employ graph networks to learn both a forward model for predicting resulting deformations, and a contact policy for inferring actions to manipulate tree crops. We conduct a comprehensive set of experiments in a simulated environment and demonstrate generalizability of our method on previously unseen trees. Videos can be found on the project website: https://kantor-lab.github.io/tree_gnn

Towards Autonomous Crop Monitoring: Inserting Sensors in Cluttered Environments

Nov 07, 2023

Abstract: We present a contact-based phenotyping robot platform that can autonomously insert nitrate sensors into cornstalks to proactively monitor macronutrient levels in crops. This task is challenging because inserting such sensors requires sub-centimeter precision in an environment which contains high levels of clutter, lighting variation, and occlusion. To address these challenges, we develop a robust perception-action pipeline to detect and grasp stalks, and create a custom robot gripper which mechanically aligns the sensor before inserting it into the stalk. Through experimental validation on 48 unique stalks in a cornfield in Iowa, we demonstrate our platform's capability of detecting a stalk with 94% success, grasping a stalk with 90% success, and inserting a sensor with 60% success. In addition to developing an autonomous phenotyping research platform, we share key challenges and insights obtained from deployment in the field. Our research platform is open-sourced, with additional information available at https://kantor-lab.github.io/cornbot.

3D Reconstruction-Based Seed Counting of Sorghum Panicles for Agricultural Inspection

Nov 14, 2022

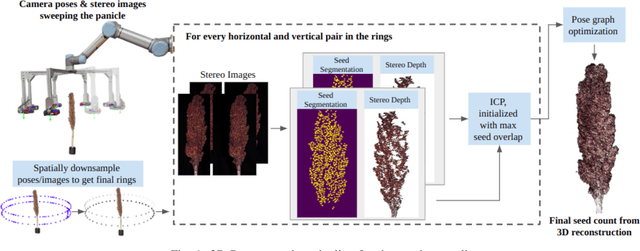

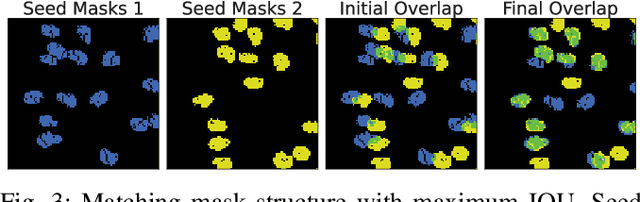

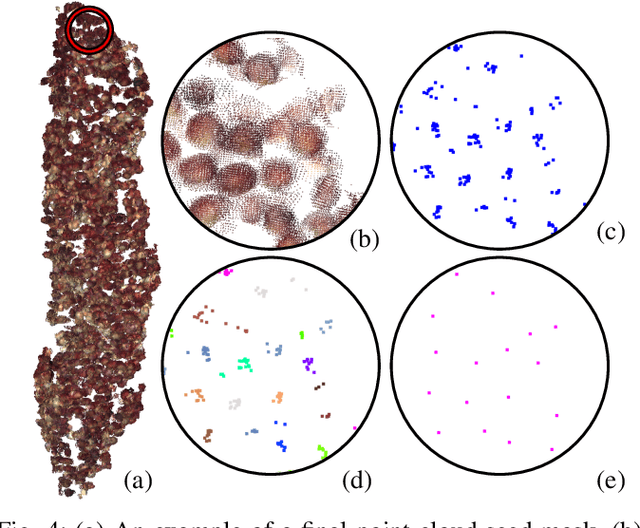

Abstract: In this paper, we present a method for creating high-quality 3D models of sorghum panicles for phenotyping in breeding experiments. This is achieved with a novel reconstruction approach that uses seeds as semantic landmarks in both 2D and 3D. To evaluate the performance, we develop a new metric for assessing the quality of reconstructed point clouds without having a ground-truth point cloud. Finally, a counting method is presented where the density of seed centers in the 3D model allows 2D counts from multiple views to be effectively combined into a whole-panicle count. We demonstrate that using this method to estimate seed count and weight for sorghum outperforms count extrapolation from 2D images, an approach used in most state-of-the-art methods for seeds and grains of comparable size.

Vision-based Relative Detection and Tracking for Teams of Micro Aerial Vehicles

Jul 17, 2022

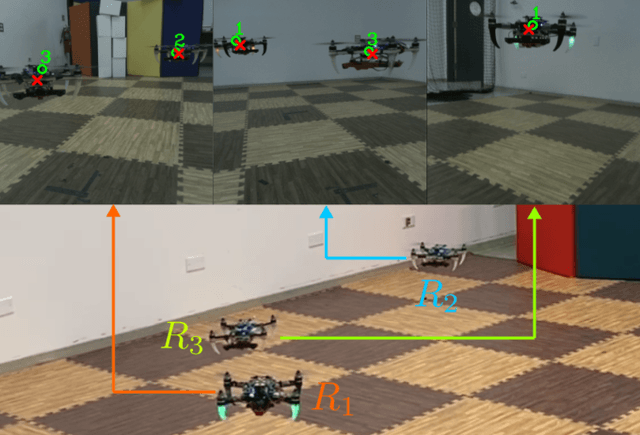

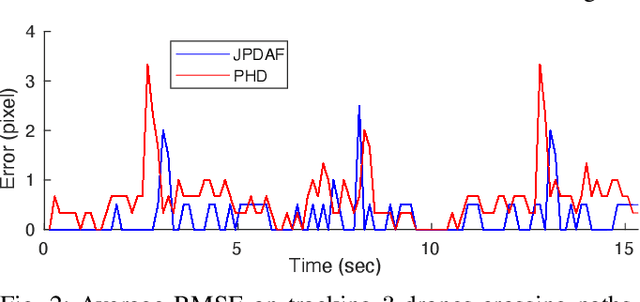

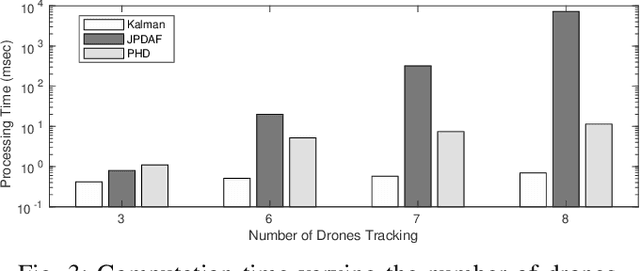

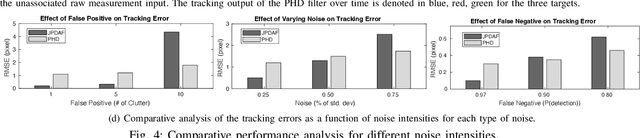

Abstract: In this paper, we address the vision-based detection and tracking problems of multiple aerial vehicles using a single camera and an Inertial Measurement Unit (IMU), as well as the corresponding perception consensus problem (i.e., uniqueness and identical IDs across all observing agents). We design several vision-based decentralized Bayesian multi-tracking filtering strategies to resolve the association between the incoming unsorted measurements obtained by a visual detector algorithm and the tracked agents. We compare their accuracy in different operating conditions as well as their scalability according to the number of agents in the team. This analysis provides useful insights about the most appropriate design choice for the given task. We further show that the proposed perception and inference pipeline, which includes a Deep Neural Network (DNN) as the visual target detector, is lightweight and capable of running concurrently with control and planning on board Size, Weight, and Power (SWaP) constrained robots. Experimental results show the effective tracking of multiple drones in various challenging scenarios such as heavy occlusions.
