Eliot Xing

Soft and Compliant Contact-Rich Hair Manipulation and Care

Jan 05, 2025

Abstract: Hair care robots can help address labor shortages in elderly care while enabling those with limited mobility to maintain their hair-related identity. We present MOE-Hair, a soft robot system that performs three hair-care tasks: head patting, finger combing, and hair grasping. The system features a tendon-driven soft robot end-effector (MOE) with a wrist-mounted RGBD camera, leveraging both mechanical compliance for safety and visual force sensing through deformation. In testing with a force-sensorized mannequin head, MOE achieved comparable hair-grasping effectiveness while applying significantly less force than rigid grippers. Our novel force estimation method combines visual deformation data and tendon tensions from actuators to infer applied forces, reducing sensing errors by up to 60.1% and 20.3% compared to actuator current load-only and depth image-only baselines, respectively. A user study with 12 participants demonstrated statistically significant preferences for MOE-Hair over a baseline system in terms of comfort, effectiveness, and appropriate force application. These results demonstrate the unique advantages of soft robots in contact-rich hair-care tasks, while highlighting the importance of precise force control despite the inherent compliance of the system.

Stabilizing Reinforcement Learning in Differentiable Multiphysics Simulation

Dec 16, 2024

Abstract: Recent advances in GPU-based parallel simulation have enabled practitioners to collect large amounts of data and train complex control policies using deep reinforcement learning (RL) on commodity GPUs. However, such successes for RL in robotics have been limited to tasks sufficiently simulated by fast rigid-body dynamics. Simulation techniques for soft bodies are several orders of magnitude slower by comparison, thereby limiting the use of RL due to sample complexity requirements. To address this challenge, this paper presents both a novel RL algorithm and a simulation platform to enable scaling RL on tasks involving rigid bodies and deformables. We introduce Soft Analytic Policy Optimization (SAPO), a maximum entropy first-order model-based actor-critic RL algorithm, which uses first-order analytic gradients from differentiable simulation to train a stochastic actor to maximize expected return and entropy. Alongside our approach, we develop Rewarped, a parallel differentiable multiphysics simulation platform that supports simulating various materials beyond rigid bodies. We re-implement challenging manipulation and locomotion tasks in Rewarped, and show that SAPO outperforms baselines over a range of tasks that involve interaction between rigid bodies, articulations, and deformables.
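
For reference, the "expected return and entropy" objective named in this abstract is commonly written in maximum entropy RL as shown below. This is the standard textbook form, given here only as an illustrative assumption: the symbols $\gamma$ (discount factor), $\alpha$ (entropy temperature), and $\mathcal{H}$ (policy entropy) do not appear in the abstract, and SAPO's exact objective may differ.

```latex
J(\pi) = \mathbb{E}_{\tau \sim \pi}\left[ \sum_{t=0}^{\infty} \gamma^{t} \Big( r(s_t, a_t) + \alpha \, \mathcal{H}\big(\pi(\cdot \mid s_t)\big) \Big) \right]
```

Setting $\alpha = 0$ recovers the usual expected-return objective.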

Self-Activating Neural Ensembles for Continual Reinforcement Learning

Dec 31, 2022

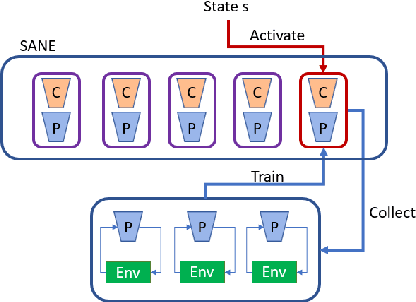

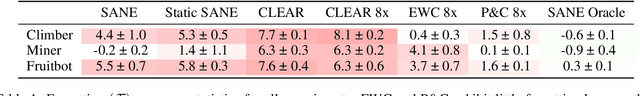

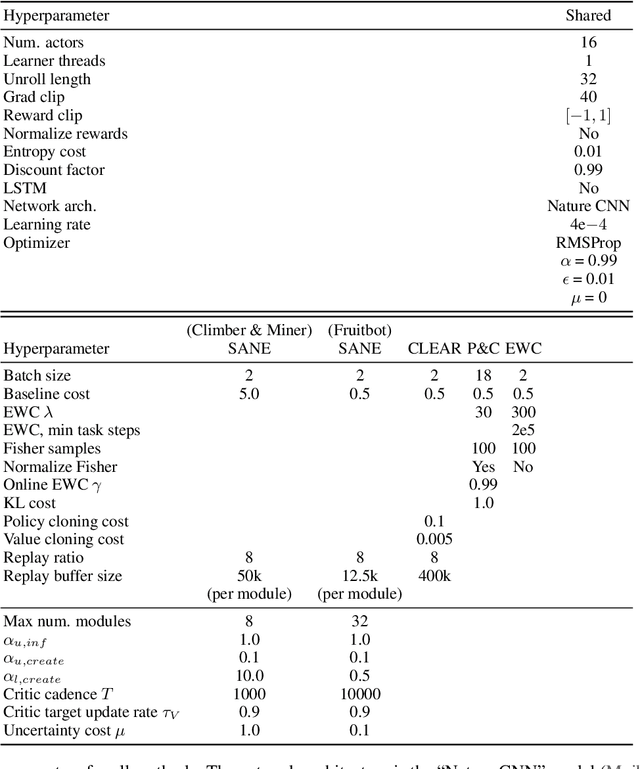

Abstract: The ability for an agent to continuously learn new skills without catastrophically forgetting existing knowledge is of critical importance for the development of generally intelligent agents. Most methods devised to address this problem depend heavily on well-defined task boundaries, and thus depend on human supervision. Our task-agnostic method, Self-Activating Neural Ensembles (SANE), uses a modular architecture designed to avoid catastrophic forgetting without making any such assumptions. At the beginning of each trajectory, a module in the SANE ensemble is activated to determine the agent's next policy. During training, new modules are created as needed and only activated modules are updated to ensure that unused modules remain unchanged. This system enables our method to retain and leverage old skills, while growing and learning new ones. We demonstrate our approach on visually rich procedurally generated environments.

* Code available here: https://github.com/AGI-Labs/continual_rl

CORA: Benchmarks, Baselines, and Metrics as a Platform for Continual Reinforcement Learning Agents

Oct 19, 2021

Abstract: Progress in continual reinforcement learning has been limited due to several barriers to entry: missing code, high compute requirements, and a lack of suitable benchmarks. In this work, we present CORA, a platform for Continual Reinforcement Learning Agents that provides benchmarks, baselines, and metrics in a single code package. The benchmarks we provide are designed to evaluate different aspects of the continual RL challenge, such as catastrophic forgetting, plasticity, ability to generalize, and sample-efficient learning. Three of the benchmarks utilize video game environments (Atari, Procgen, NetHack). The fourth benchmark, CHORES, consists of four different task sequences in a visually realistic home simulator, drawn from a diverse set of task and scene parameters. To compare continual RL methods on these benchmarks, we prepare three metrics in CORA: continual evaluation, forgetting, and zero-shot forward transfer. Finally, CORA includes a set of performant, open-source baselines of existing algorithms for researchers to use and expand on. We release CORA and hope that the continual RL community can benefit from our contributions, to accelerate the development of new continual RL algorithms.

Characterizing Multidimensional Capacitive Servoing for Physical Human-Robot Interaction

May 25, 2021

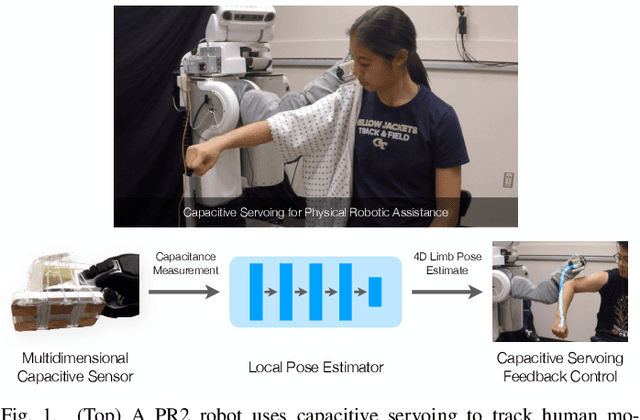

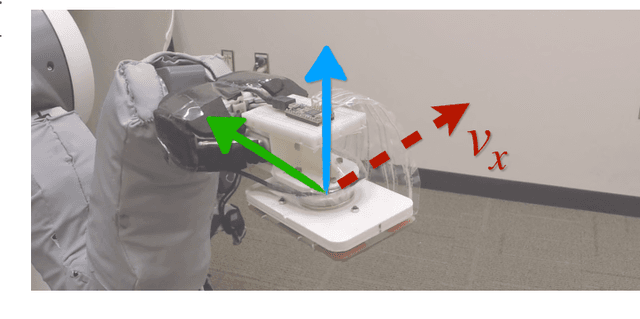

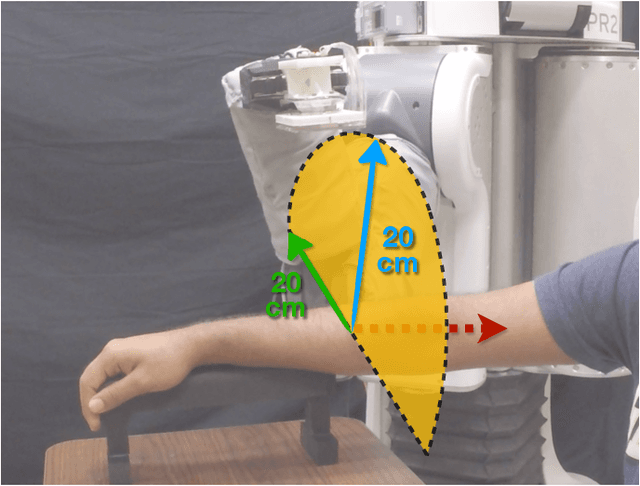

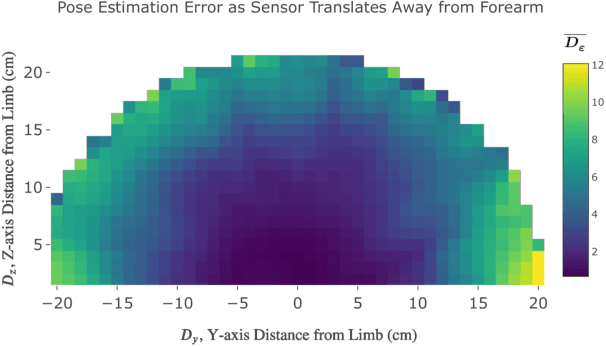

Abstract: Towards the goal of robots performing robust and intelligent physical interactions with people, it is crucial that robots are able to accurately sense the human body, follow trajectories around the body, and track human motion. This study introduces a capacitive servoing control scheme that allows a robot to sense and navigate around human limbs during close physical interactions. Capacitive servoing leverages temporal measurements from a multi-electrode capacitive sensor array mounted on a robot's end effector to estimate the relative position and orientation (pose) of a nearby human limb. Capacitive servoing then uses these human pose estimates from a data-driven pose estimator within a feedback control loop in order to maneuver the robot's end effector around the surface of a human limb. We provide a design overview of capacitive sensors for human-robot interaction and then investigate the performance and generalization of capacitive servoing through an experiment with 12 human participants. The results indicate that multidimensional capacitive servoing enables a robot's end effector to move proximally or distally along human limbs while adapting to human pose. Using a cross-validation experiment, results further show that capacitive servoing generalizes well across people with different body sizes.

Multimodal Material Classification for Robots using Spectroscopy and High Resolution Texture Imaging

Apr 02, 2020

Abstract: Material recognition can help inform robots about how to properly interact with and manipulate real-world objects. In this paper, we present a multimodal sensing technique, leveraging near-infrared spectroscopy and close-range high resolution texture imaging, that enables robots to estimate the materials of household objects. We release a dataset of high resolution texture images and spectral measurements collected from a mobile manipulator that interacted with 144 household objects. We then present a neural network architecture that learns a compact multimodal representation of spectral measurements and texture images. When generalizing material classification to new objects, we show that this multimodal representation enables a robot to recognize materials with greater performance as compared to prior state-of-the-art approaches. Finally, we present how a robot can combine this high resolution local sensing with images from the robot's head-mounted camera to achieve accurate material classification over a scene of objects on a table.
