Zhaohui Wang

Wuhan University

IFFair: Influence Function-driven Sample Reweighting for Fair Classification

Dec 08, 2025

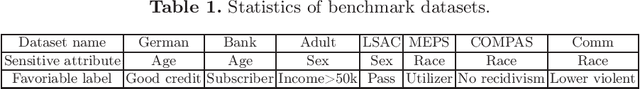

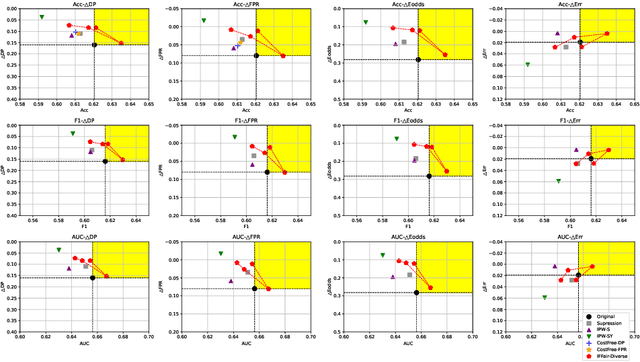

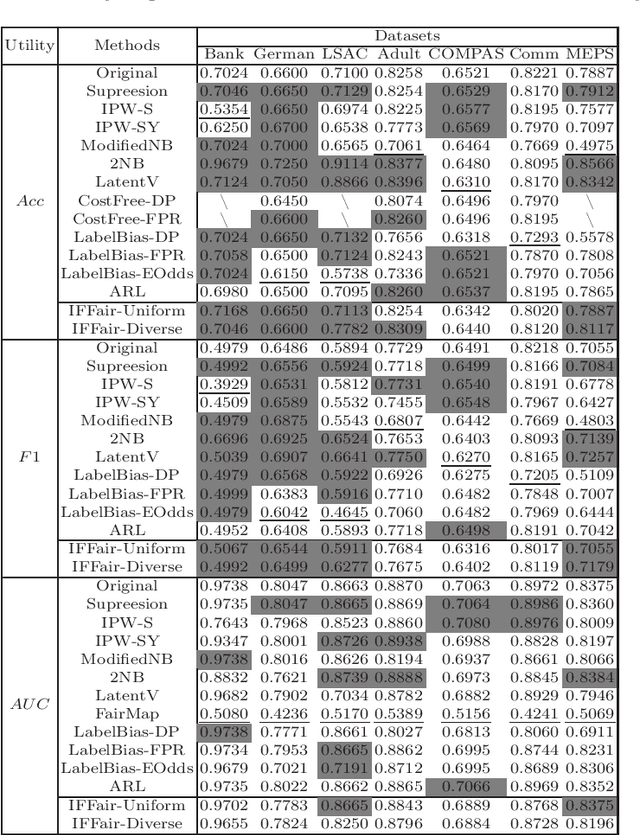

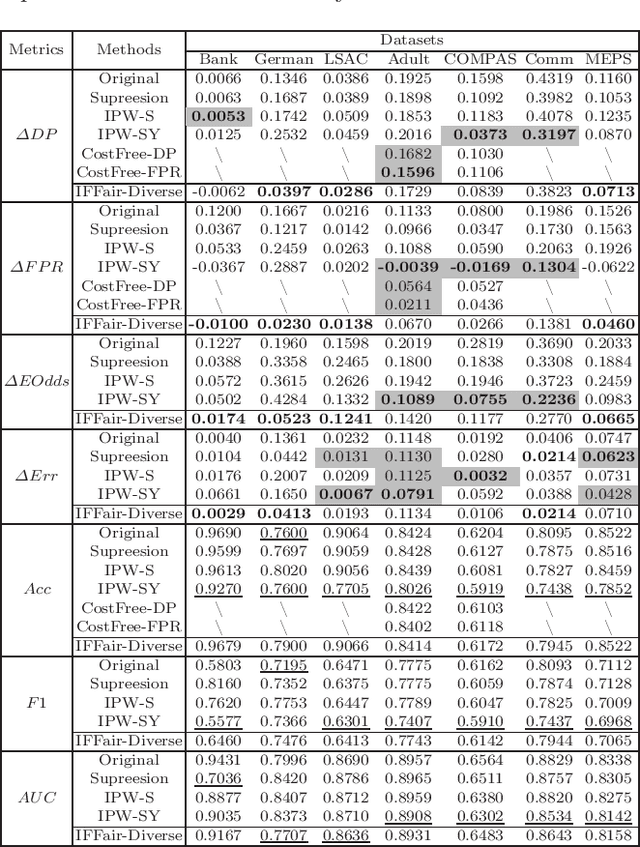

Abstract: Because machine learning has significantly improved efficiency and convenience in society, it is increasingly used to assist or replace human decision-making. However, the data-driven paradigm lets such algorithms learn and even exacerbate potential bias in samples, resulting in discriminatory decisions against certain unprivileged groups, depriving them of the right to equal treatment, and thus damaging social well-being and hindering the development of related applications. Therefore, we propose IFFair, a pre-processing method based on the influence function. Compared with other fairness optimization approaches, IFFair uses only the influence disparity of training samples on different groups as guidance to dynamically adjust the sample weights during training, without modifying the network structure, data features or decision boundaries. To evaluate the validity of IFFair, we conduct experiments on multiple real-world datasets and metrics. The experimental results show that our approach mitigates bias on multiple accepted metrics in the classification setting, including demographic parity, equalized odds, equality of opportunity and error rate parity, without conflicts. They also demonstrate that IFFair achieves a better trade-off between multiple utility and fairness metrics than previous pre-processing methods.
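Two of the group-fairness metrics named in the abstract can be illustrated with a minimal sketch. This is not the IFFair implementation; the function names, the binary group encoding (1 = privileged, 0 = unprivileged) and the toy data are assumptions made for illustration only.

```python
import numpy as np

def demographic_parity_diff(y_pred, group):
    """Absolute gap in positive-prediction rate between the two groups."""
    y_pred, group = np.asarray(y_pred), np.asarray(group)
    return abs(y_pred[group == 1].mean() - y_pred[group == 0].mean())

def equal_opportunity_diff(y_true, y_pred, group):
    """Absolute gap in true-positive rate between the two groups."""
    y_true, y_pred, group = map(np.asarray, (y_true, y_pred, group))
    positives = y_true == 1
    tpr_privileged = y_pred[positives & (group == 1)].mean()
    tpr_unprivileged = y_pred[positives & (group == 0)].mean()
    return abs(tpr_privileged - tpr_unprivileged)

# Toy data (hypothetical): 8 samples, 4 per group.
y_true = [1, 1, 0, 0, 1, 1, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0]
group = [1, 1, 1, 1, 0, 0, 0, 0]

dp = demographic_parity_diff(y_pred, group)
eo = equal_opportunity_diff(y_true, y_pred, group)
print(dp, eo)
```

A value of 0 for either metric would mean the classifier treats both groups identically under that criterion; a reweighting method would aim to drive such gaps down while preserving accuracy.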

R3GS: Gaussian Splatting for Robust Reconstruction and Relocalization in Unconstrained Image Collections

May 21, 2025

Abstract: We propose R3GS, a robust reconstruction and relocalization framework tailored for unconstrained datasets. Our method uses a hybrid representation during training. Each anchor combines a global feature from a convolutional neural network (CNN) with a local feature encoded by the multiresolution hash grids [2]. Subsequently, several shallow multi-layer perceptrons (MLPs) predict the attributes of each Gaussian, including color, opacity, and covariance. To mitigate the adverse effects of transient objects on the reconstruction process, we fine-tune a lightweight human detection network. Once fine-tuned, this network generates a visibility map that efficiently generalizes to other transient objects (such as posters, banners, and cars) with minimal need for further adaptation. Additionally, to address the challenges posed by sky regions in outdoor scenes, we propose an effective sky-handling technique that incorporates a depth prior as a constraint. This allows the infinitely distant sky to be represented on the surface of a large-radius sky sphere, significantly reducing floaters caused by errors in sky reconstruction. Furthermore, we introduce a novel relocalization method that remains robust to changes in lighting conditions while estimating the camera pose of a given image within the reconstructed 3DGS scene. As a result, R3GS significantly enhances rendering fidelity, improves both training and rendering efficiency, and reduces storage requirements. Our method achieves state-of-the-art performance compared to baseline methods on in-the-wild datasets. The code will be made open-source following the acceptance of the paper.

* 7 pages, 4 figures

Real-time Ad retrieval via LLM-generative Commercial Intention for Sponsored Search Advertising

Apr 02, 2025

Abstract: The integration of Large Language Models (LLMs) with retrieval systems has shown promising potential in retrieving documents (docs) or advertisements (ads) for a given query. Existing LLM-based retrieval methods generate numeric or content-based DocIDs to retrieve docs/ads. However, the one-to-few mapping between numeric IDs and docs, along with the time-consuming content extraction, leads to semantic inefficiency and limits scalability in large-scale corpora. In this paper, we propose the Real-time Ad REtrieval (RARE) framework, which leverages LLM-generated text called Commercial Intentions (CIs) as an intermediate semantic representation to directly retrieve ads for queries in real time. These CIs are generated by a customized LLM injected with commercial knowledge, enhancing its domain relevance. Each CI corresponds to multiple ads, yielding a lightweight and scalable set of CIs. RARE has been implemented in a real-world online system, handling daily search volumes in the hundreds of millions. The online implementation has yielded significant benefits: a 5.04% increase in consumption, a 6.37% rise in Gross Merchandise Volume (GMV), a 1.28% enhancement in click-through rate (CTR) and a 5.29% increase in shallow conversions. Extensive offline experiments show RARE's superiority over ten competitive baselines in four major categories.

MAFT: Efficient Model-Agnostic Fairness Testing for Deep Neural Networks via Zero-Order Gradient Search

Dec 28, 2024

Abstract: Deep neural networks (DNNs) have shown powerful performance in various applications and are increasingly being used in decision-making systems. However, concerns about fairness in DNNs persist. Some efficient white-box testing methods for individual fairness have been proposed. Nevertheless, the development of black-box methods has stagnated, and the performance of existing methods is far behind that of white-box methods. In this paper, we propose a novel black-box individual fairness testing method called Model-Agnostic Fairness Testing (MAFT). By leveraging MAFT, practitioners can effectively identify and address discrimination in DL models, regardless of the specific algorithm or architecture employed. Our approach adopts lightweight procedures such as gradient estimation and attribute perturbation rather than non-trivial procedures like symbolic execution, rendering it significantly more scalable and applicable than existing methods. We demonstrate that MAFT achieves the same effectiveness as state-of-the-art white-box methods whilst improving the applicability to large-scale networks. Compared to existing black-box approaches, our approach demonstrates distinguished performance in discovering fairness violations in terms of effectiveness (approximately 14.69 times) and efficiency (approximately 32.58 times).
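The idea of estimating a gradient from black-box queries alone can be sketched with central finite differences. This is a generic zero-order estimator, not necessarily the one MAFT uses; `estimate_gradient`, the step size `h` and the stand-in model `black_box` are all assumptions for illustration.

```python
import numpy as np

def estimate_gradient(f, x, h=1e-4):
    """Approximate the gradient of f at x using only function evaluations."""
    x = np.asarray(x, dtype=float)
    grad = np.zeros_like(x)
    for i in range(x.size):
        step = np.zeros_like(x)
        step[i] = h
        # Central difference along coordinate i: two queries per feature.
        grad[i] = (f(x + step) - f(x - step)) / (2 * h)
    return grad

def black_box(x):
    """Hypothetical stand-in for a model's output probability."""
    return 1.0 / (1.0 + np.exp(-(2.0 * x[0] - 3.0 * x[1])))

x0 = np.array([0.5, 0.2])
g = estimate_gradient(black_box, x0)

# Analytic gradient of the stand-in model, used only to check the estimate.
p = black_box(x0)
g_exact = p * (1.0 - p) * np.array([2.0, -3.0])
print(g, g_exact)
```

The estimator needs only query access to the model's outputs, which is what makes this kind of search applicable when weights and architecture are unavailable; the cost is two queries per input feature.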

PiSSA: Principal Singular Values and Singular Vectors Adaptation of Large Language Models

Apr 14, 2024

Abstract: As the parameters of LLMs expand, the computational cost of fine-tuning the entire model becomes prohibitive. To address this challenge, we introduce a PEFT method, Principal Singular values and Singular vectors Adaptation (PiSSA), which optimizes a significantly reduced parameter space while achieving or surpassing the performance of full-parameter fine-tuning. PiSSA is inspired by Intrinsic SAID, which suggests that pre-trained, over-parametrized models inhabit a space of low intrinsic dimension. Consequently, PiSSA represents a matrix $W$ within the model by the product of two trainable matrices $A$ and $B$, plus a residual matrix $W^{res}$ for error correction. SVD is employed to factorize $W$, and the principal singular values and vectors of $W$ are utilized to initialize $A$ and $B$. The residual singular values and vectors initialize the residual matrix $W^{res}$, which is kept frozen during fine-tuning. Notably, PiSSA shares the same architecture with LoRA. However, LoRA approximates $\Delta W$ through the product of two matrices, $A$, initialized with Gaussian noise, and $B$, initialized with zeros, while PiSSA initializes $A$ and $B$ with principal singular values and vectors of the original matrix $W$. PiSSA can better approximate the outcomes of full-parameter fine-tuning at the beginning by changing the essential parts while freezing the "noisy" parts. In comparison, LoRA freezes the original matrix and updates the "noise". This distinction enables PiSSA to converge much faster than LoRA and also achieve better performance in the end. Due to the same architecture, PiSSA inherits many of LoRA's advantages, such as parameter efficiency and compatibility with quantization. Leveraging a fast SVD method, the initialization of PiSSA takes only a few seconds, so switching from LoRA to PiSSA incurs negligible cost.
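The initialization described in the abstract can be sketched in NumPy as follows. This is an illustration, not the authors' code: the function name `pissa_init`, the rank `r`, the toy matrix size, and the choice to split each singular value evenly (as a square root) between $A$ and $B$ are assumptions, and an exact SVD is used here in place of the fast SVD the abstract mentions.

```python
import numpy as np

def pissa_init(W, r):
    """Split W into a trainable rank-r product A @ B and a frozen residual."""
    U, S, Vt = np.linalg.svd(W, full_matrices=False)
    sqrt_s = np.sqrt(S[:r])
    # Principal components initialize the trainable factors.
    A = U[:, :r] * sqrt_s            # shape (m, r)
    B = sqrt_s[:, None] * Vt[:r]     # shape (r, n)
    # Remaining components form the residual, frozen during fine-tuning.
    W_res = (U[:, r:] * S[r:]) @ Vt[r:]
    return A, B, W_res

rng = np.random.default_rng(0)
W = rng.standard_normal((6, 4))      # toy weight matrix (hypothetical size)
A, B, W_res = pissa_init(W, r=2)

# At initialization the decomposition reproduces W up to rounding error.
print(np.allclose(A @ B + W_res, W))
```

Because $AB + W^{res}$ equals $W$ at the start, the adapted model begins from the pre-trained function, as it does under LoRA, but the trainable factors carry the largest singular directions rather than noise and zeros.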

CoSSegGaussians: Compact and Swift Scene Segmenting 3D Gaussians with Dual Feature Fusion

Jan 30, 2024

Abstract: We propose Compact and Swift Segmenting 3D Gaussians (CoSSegGaussians), a method for compact 3D-consistent scene segmentation at fast rendering speed with only RGB images as input. Previous NeRF-based segmentation methods have relied on time-consuming neural scene optimization. While recent 3D Gaussian Splatting has notably improved speed, existing Gaussian-based segmentation methods struggle to produce compact masks, especially in zero-shot segmentation. This issue probably stems from their straightforward assignment of learnable parameters to each Gaussian, resulting in a lack of robustness against cross-view inconsistent 2D machine-generated labels. Our method aims to address this problem by employing a Dual Feature Fusion Network as the Gaussians' segmentation field. Specifically, we first optimize 3D Gaussians under RGB supervision. After Gaussian Locating, DINO features extracted from images are applied through explicit unprojection and are further combined with spatial features from the efficient point cloud processing network. Feature aggregation is utilized to fuse them in a global-to-local strategy for compact segmentation features. Experimental results show that our model outperforms baselines on both semantic and panoptic zero-shot segmentation tasks while requiring less than 10% of the inference time of NeRF-based methods. Code and more results will be available at https://David-Dou.github.io/CoSSegGaussians

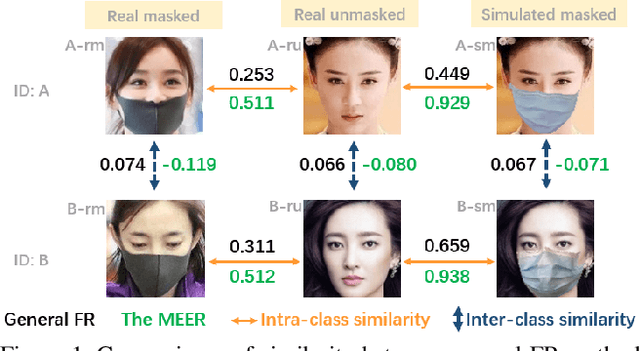

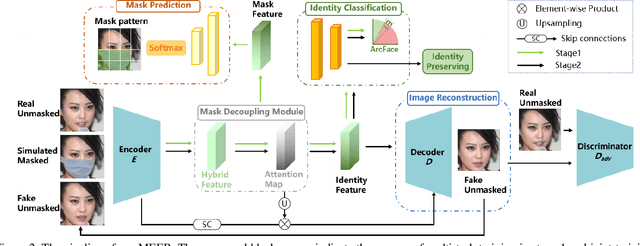

Seeing through the Mask: Multi-task Generative Mask Decoupling Face Recognition

Nov 20, 2023

Abstract: The outbreak of the COVID-19 pandemic has made people wear masks more frequently than ever. Current general face recognition systems suffer from serious performance degradation when encountering occluded scenes. The potential reason is that face features are corrupted by occlusions on key facial regions. To tackle this problem, previous works either extract identity-related embeddings on the feature level by additional mask prediction, or restore the occluded facial part by generative models. However, the former lacks visual results for model interpretation, while the latter suffers from artifacts which may affect downstream recognition. Therefore, this paper proposes a Multi-task gEnerative mask dEcoupling face Recognition (MEER) network to jointly handle these two tasks, which can learn occlusion-irrelevant and identity-related representations while achieving unmasked face synthesis. We first present a novel mask decoupling module to disentangle mask and identity information, which makes the network obtain purer identity features from visible facial components. Then, an unmasked face is restored by a joint-training strategy, which will be further used to refine the recognition network with an id-preserving loss. Experiments on masked face recognition benchmarks with realistic and synthetic occlusions demonstrate that MEER can outperform the state-of-the-art methods.

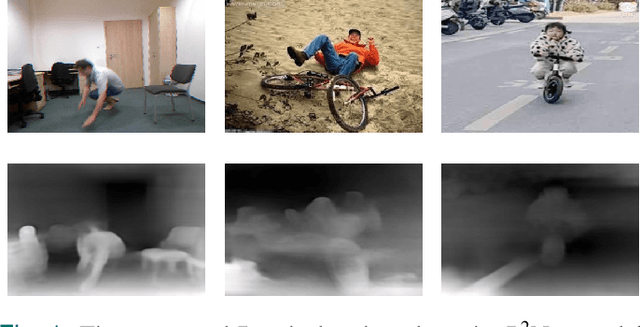

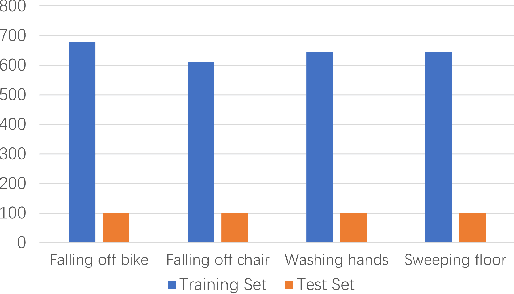

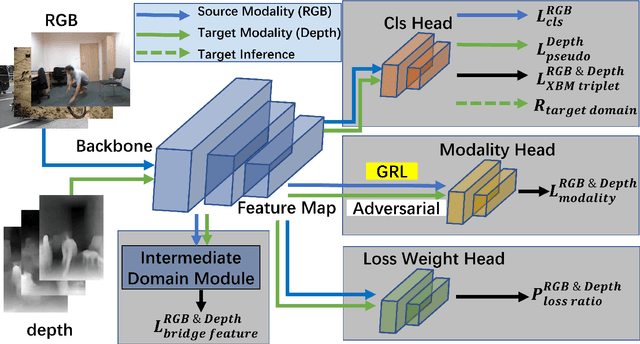

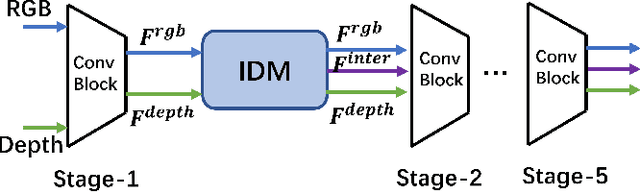

Towards Privacy-Supporting Fall Detection via Deep Unsupervised RGB2Depth Adaptation

Aug 23, 2023

Abstract: Fall detection is a vital task in health monitoring, as it allows the system to trigger an alert and thereby enables faster interventions when a person experiences a fall. Although most previous approaches rely on standard RGB video data, such detailed appearance-aware monitoring poses significant privacy concerns. Depth sensors, on the other hand, are better at preserving privacy as they merely capture the distance of objects from the sensor or camera, omitting color and texture information. In this paper, we introduce a privacy-supporting solution that makes the RGB-trained model applicable in the depth domain and utilizes depth data at test time for fall detection. To achieve cross-modal fall detection, we present an unsupervised RGB to Depth (RGB2Depth) cross-modal domain adaptation approach that leverages labelled RGB data and unlabelled depth data during training. Our proposed pipeline incorporates an intermediate domain module for feature bridging, modality adversarial loss for modality discrimination, classification loss for pseudo-labeled depth data and labeled source data, triplet loss that considers both source and target domains, and a novel adaptive loss weight adjustment method for improved coordination among various losses. Our approach achieves state-of-the-art results in the unsupervised RGB2Depth domain adaptation task for fall detection. Code is available at https://github.com/1015206533/privacy_supporting_fall_detection.

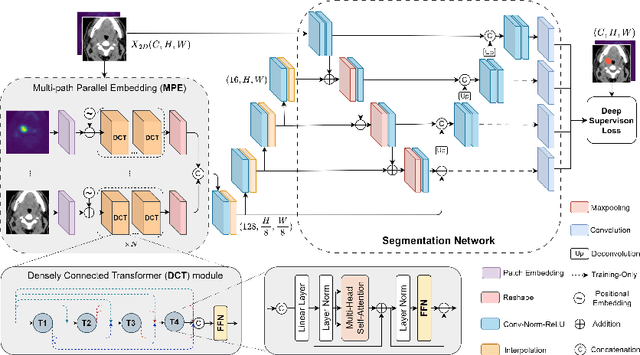

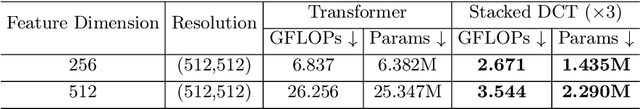

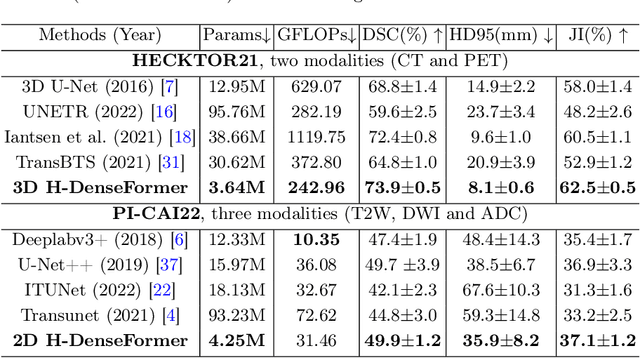

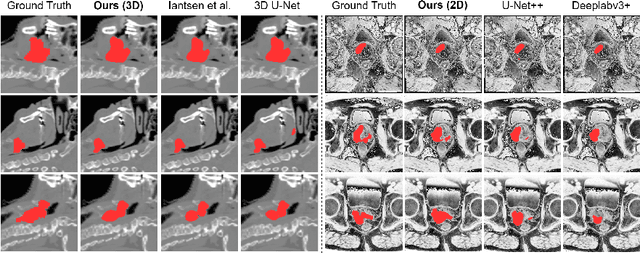

H-DenseFormer: An Efficient Hybrid Densely Connected Transformer for Multimodal Tumor Segmentation

Jul 04, 2023

Abstract: Recently, deep learning methods have been widely used for tumor segmentation of multimodal medical images with promising results. However, most existing methods are limited by insufficient representational ability, specific modality number and high computational complexity. In this paper, we propose a hybrid densely connected network for tumor segmentation, named H-DenseFormer, which combines the representational power of the Convolutional Neural Network (CNN) and the Transformer structures. Specifically, H-DenseFormer integrates a Transformer-based Multi-path Parallel Embedding (MPE) module that can take an arbitrary number of modalities as input to extract the fusion features from different modalities. Then, the multimodal fusion features are delivered to different levels of the encoder to enhance multimodal learning representation. Besides, we design a lightweight Densely Connected Transformer (DCT) block to replace the standard Transformer block, thus significantly reducing computational complexity. We conduct extensive experiments on two public multimodal datasets, HECKTOR21 and PI-CAI22. The experimental results show that our proposed method outperforms the existing state-of-the-art methods while having lower computational complexity. The source code is available at https://github.com/shijun18/H-DenseFormer.

V-Cloak: Intelligibility-, Naturalness- & Timbre-Preserving Real-Time Voice Anonymization

Oct 27, 2022

Abstract: Voice data generated on instant messaging or social media applications contains unique user voiceprints that may be abused by malicious adversaries for identity inference or identity theft. Existing voice anonymization techniques, e.g., signal processing and voice conversion/synthesis, suffer from degradation of perceptual quality. In this paper, we develop a voice anonymization system, named V-Cloak, which attains real-time voice anonymization while preserving the intelligibility, naturalness and timbre of the audio. Our designed anonymizer features a one-shot generative model that modulates the features of the original audio at different frequency levels. We train the anonymizer with a carefully designed loss function. Apart from the anonymity loss, we further incorporate the intelligibility loss and the psychoacoustics-based naturalness loss. The anonymizer can realize untargeted and targeted anonymization to achieve the anonymity goals of unidentifiability and unlinkability. We have conducted extensive experiments on four datasets, i.e., LibriSpeech (English), AISHELL (Chinese), CommonVoice (French) and CommonVoice (Italian), five Automatic Speaker Verification (ASV) systems (including two DNN-based, two statistical and one commercial ASV), and eleven Automatic Speech Recognition (ASR) systems (for different languages). Experimental results confirm that V-Cloak outperforms five baselines in terms of anonymity performance. We also demonstrate that V-Cloak trained only on the VoxCeleb1 dataset against ECAPA-TDNN ASV and DeepSpeech2 ASR has transferable anonymity against other ASVs and cross-language intelligibility for other ASRs. Furthermore, we verify the robustness of V-Cloak against various de-noising techniques and adaptive attacks. Hopefully, V-Cloak may provide a cloak for us in a prism world.
