Yuji Matsumoto

Better Generalizing to Unseen Concepts: An Evaluation Framework and An LLM-Based Auto-Labeled Pipeline for Biomedical Concept Recognition

Jan 23, 2026Abstract:Generalization to unseen concepts is a central challenge due to the scarcity of human annotations in Mention-agnostic Biomedical Concept Recognition (MA-BCR). This work makes two key contributions to systematically address this issue. First, we propose an evaluation framework built on hierarchical concept indices and novel metrics to measure generalization. Second, we explore LLM-based Auto-Labeled Data (ALD) as a scalable resource, creating a task-specific pipeline for its generation. Our research unequivocally shows that while LLM-generated ALD cannot fully substitute for manual annotations, it is a valuable resource for improving generalization, successfully providing models with the broader coverage and structural knowledge needed to approach recognizing unseen concepts. Code and datasets are available at https://github.com/bio-ie-tool/hi-ald.

Post Persona Alignment for Multi-Session Dialogue Generation

Jun 13, 2025Abstract:Multi-session persona-based dialogue generation presents challenges in maintaining long-term consistency and generating diverse, personalized responses. While large language models (LLMs) excel in single-session dialogues, they struggle to preserve persona fidelity and conversational coherence across extended interactions. Existing methods typically retrieve persona information before response generation, which can constrain diversity and result in generic outputs. We propose Post Persona Alignment (PPA), a novel two-stage framework that reverses this process. PPA first generates a general response based solely on dialogue context, then retrieves relevant persona memories using the response as a query, and finally refines the response to align with the speaker's persona. This post-hoc alignment strategy promotes naturalness and diversity while preserving consistency and personalization. Experiments on multi-session LLM-generated dialogue data demonstrate that PPA significantly outperforms prior approaches in consistency, diversity, and persona relevance, offering a more flexible and effective paradigm for long-term personalized dialogue generation.

MA-COIR: Leveraging Semantic Search Index and Generative Models for Ontology-Driven Biomedical Concept Recognition

May 19, 2025Abstract:Recognizing biomedical concepts in the text is vital for ontology refinement, knowledge graph construction, and concept relationship discovery. However, traditional concept recognition methods, relying on explicit mention identification, often fail to capture complex concepts not explicitly stated in the text. To overcome this limitation, we introduce MA-COIR, a framework that reformulates concept recognition as an indexing-recognition task. By assigning semantic search indexes (ssIDs) to concepts, MA-COIR resolves ambiguities in ontology entries and enhances recognition efficiency. Using a pretrained BART-based model fine-tuned on small datasets, our approach reduces computational requirements to facilitate adoption by domain experts. Furthermore, we incorporate large language models (LLMs)-generated queries and synthetic data to improve recognition in low-resource settings. Experimental results on three scenarios (CDR, HPO, and HOIP) highlight the effectiveness of MA-COIR in recognizing both explicit and implicit concepts without the need for mention-level annotations during inference, advancing ontology-driven concept recognition in biomedical domain applications. Our code and constructed data are available at https://github.com/sl-633/macoir-master.

Recent Trends in Personalized Dialogue Generation: A Review of Datasets, Methodologies, and Evaluations

May 28, 2024

Abstract:Enhancing user engagement through personalization in conversational agents has gained significance, especially with the advent of large language models that generate fluent responses. Personalized dialogue generation, however, is multifaceted and varies in its definition -- ranging from instilling a persona in the agent to capturing users' explicit and implicit cues. This paper seeks to systemically survey the recent landscape of personalized dialogue generation, including the datasets employed, methodologies developed, and evaluation metrics applied. Covering 22 datasets, we highlight benchmark datasets and newer ones enriched with additional features. We further analyze 17 seminal works from top conferences between 2021-2023 and identify five distinct types of problems. We also shed light on recent progress by LLMs in personalized dialogue generation. Our evaluation section offers a comprehensive summary of assessment facets and metrics utilized in these works. In conclusion, we discuss prevailing challenges and envision prospect directions for future research in personalized dialogue generation.

A Dataset for Pharmacovigilance in German, French, and Japanese: Annotating Adverse Drug Reactions across Languages

Mar 27, 2024

Abstract:User-generated data sources have gained significance in uncovering Adverse Drug Reactions (ADRs), with an increasing number of discussions occurring in the digital world. However, the existing clinical corpora predominantly revolve around scientific articles in English. This work presents a multilingual corpus of texts concerning ADRs gathered from diverse sources, including patient fora, social media, and clinical reports in German, French, and Japanese. Our corpus contains annotations covering 12 entity types, four attribute types, and 13 relation types. It contributes to the development of real-world multilingual language models for healthcare. We provide statistics to highlight certain challenges associated with the corpus and conduct preliminary experiments resulting in strong baselines for extracting entities and relations between these entities, both within and across languages.

Unsupervised Paraphrasing of Multiword Expressions

Jun 02, 2023Abstract:We propose an unsupervised approach to paraphrasing multiword expressions (MWEs) in context. Our model employs only monolingual corpus data and pre-trained language models (without fine-tuning), and does not make use of any external resources such as dictionaries. We evaluate our method on the SemEval 2022 idiomatic semantic text similarity task, and show that it outperforms all unsupervised systems and rivals supervised systems.

Is In-hospital Meta-information Useful for Abstractive Discharge Summary Generation?

Mar 10, 2023

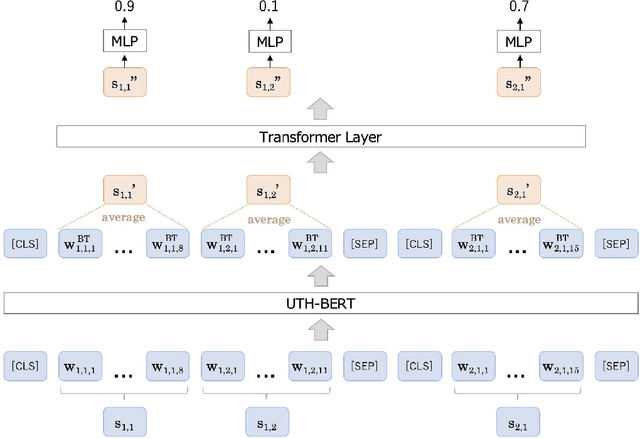

Abstract:During the patient's hospitalization, the physician must record daily observations of the patient and summarize them into a brief document called "discharge summary" when the patient is discharged. Automated generation of discharge summary can greatly relieve the physicians' burden, and has been addressed recently in the research community. Most previous studies of discharge summary generation using the sequence-to-sequence architecture focus on only inpatient notes for input. However, electric health records (EHR) also have rich structured metadata (e.g., hospital, physician, disease, length of stay, etc.) that might be useful. This paper investigates the effectiveness of medical meta-information for summarization tasks. We obtain four types of meta-information from the EHR systems and encode each meta-information into a sequence-to-sequence model. Using Japanese EHRs, meta-information encoded models increased ROUGE-1 by up to 4.45 points and BERTScore by 3.77 points over the vanilla Longformer. Also, we found that the encoded meta-information improves the precisions of its related terms in the outputs. Our results showed the benefit of the use of medical meta-information.

Switching to Discriminative Image Captioning by Relieving a Bottleneck of Reinforcement Learning

Dec 06, 2022Abstract:Discriminativeness is a desirable feature of image captions: captions should describe the characteristic details of input images. However, recent high-performing captioning models, which are trained with reinforcement learning (RL), tend to generate overly generic captions despite their high performance in various other criteria. First, we investigate the cause of the unexpectedly low discriminativeness and show that RL has a deeply rooted side effect of limiting the output words to high-frequency words. The limited vocabulary is a severe bottleneck for discriminativeness as it is difficult for a model to describe the details beyond its vocabulary. Then, based on this identification of the bottleneck, we drastically recast discriminative image captioning as a much simpler task of encouraging low-frequency word generation. Hinted by long-tail classification and debiasing methods, we propose methods that easily switch off-the-shelf RL models to discriminativeness-aware models with only a single-epoch fine-tuning on the part of the parameters. Extensive experiments demonstrate that our methods significantly enhance the discriminativeness of off-the-shelf RL models and even outperform previous discriminativeness-aware methods with much smaller computational costs. Detailed analysis and human evaluation also verify that our methods boost the discriminativeness without sacrificing the overall quality of captions.

Exploring Optimal Granularity for Extractive Summarization of Unstructured Health Records: Analysis of the Largest Multi-Institutional Archive of Health Records in Japan

Sep 20, 2022

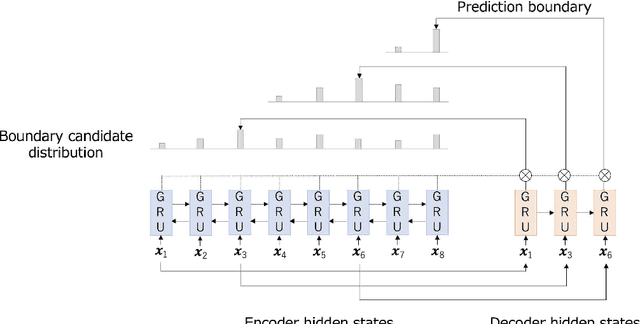

Abstract:Automated summarization of clinical texts can reduce the burden of medical professionals. "Discharge summaries" are one promising application of the summarization, because they can be generated from daily inpatient records. Our preliminary experiment suggests that 20-31% of the descriptions in discharge summaries overlap with the content of the inpatient records. However, it remains unclear how the summaries should be generated from the unstructured source. To decompose the physician's summarization process, this study aimed to identify the optimal granularity in summarization. We first defined three types of summarization units with different granularities to compare the performance of the discharge summary generation: whole sentences, clinical segments, and clauses. We defined clinical segments in this study, aiming to express the smallest medically meaningful concepts. To obtain the clinical segments, it was necessary to automatically split the texts in the first stage of the pipeline. Accordingly, we compared rule-based methods and a machine learning method, and the latter outperformed the formers with an F1 score of 0.846 in the splitting task. Next, we experimentally measured the accuracy of extractive summarization using the three types of units, based on the ROUGE-1 metric, on a multi-institutional national archive of health records in Japan. The measured accuracies of extractive summarization using whole sentences, clinical segments, and clauses were 31.91, 36.15, and 25.18, respectively. We found that the clinical segments yielded higher accuracy than sentences and clauses. This result indicates that summarization of inpatient records demands finer granularity than sentence-oriented processing. Although we used only Japanese health records, it can be interpreted as follows: physicians extract "concepts of medical significance" from patient records and recombine them ...

Unsupervised Lexical Substitution with Decontextualised Embeddings

Sep 17, 2022

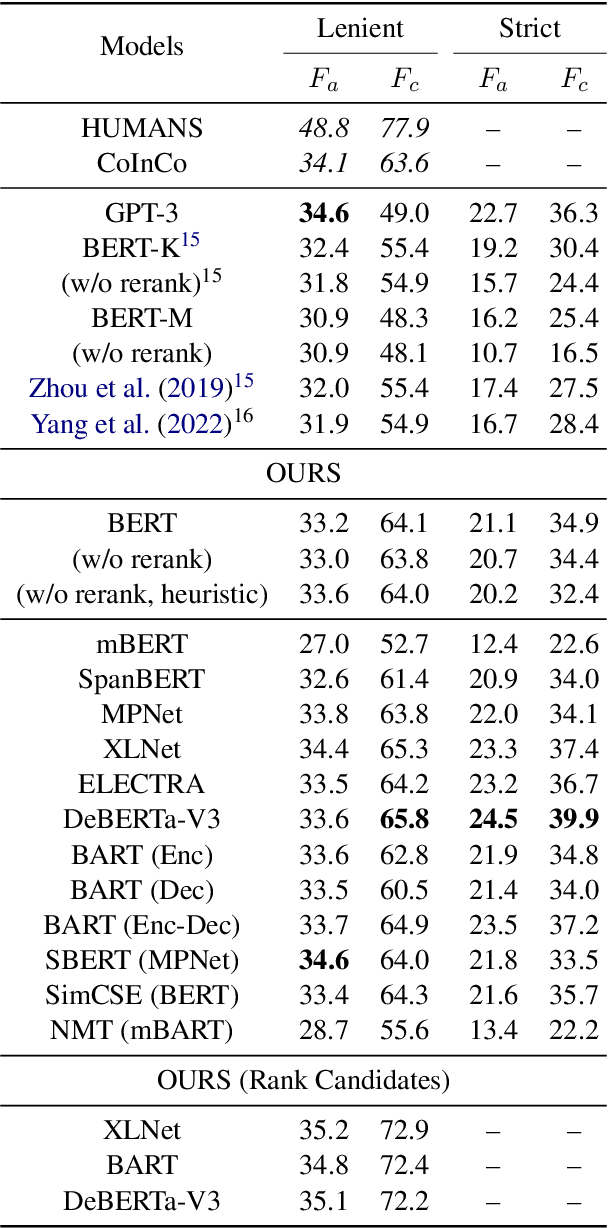

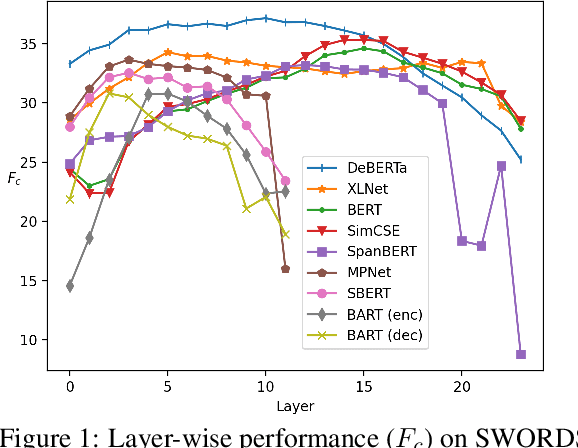

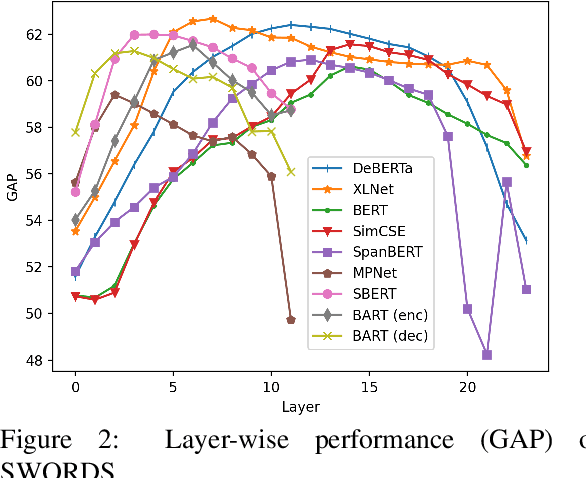

Abstract:We propose a new unsupervised method for lexical substitution using pre-trained language models. Compared to previous approaches that use the generative capability of language models to predict substitutes, our method retrieves substitutes based on the similarity of contextualised and decontextualised word embeddings, i.e. the average contextual representation of a word in multiple contexts. We conduct experiments in English and Italian, and show that our method substantially outperforms strong baselines and establishes a new state-of-the-art without any explicit supervision or fine-tuning. We further show that our method performs particularly well at predicting low-frequency substitutes, and also generates a diverse list of substitute candidates, reducing morphophonetic or morphosyntactic biases induced by article-noun agreement.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge