Yuchang Zhu

What Matters in LLM-generated Data: Diversity and Its Effect on Model Fine-Tuning

Jun 24, 2025

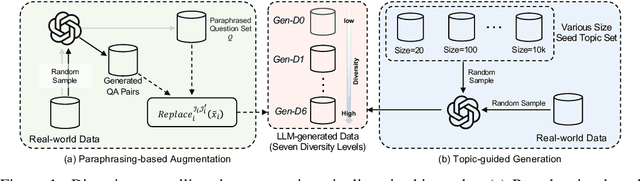

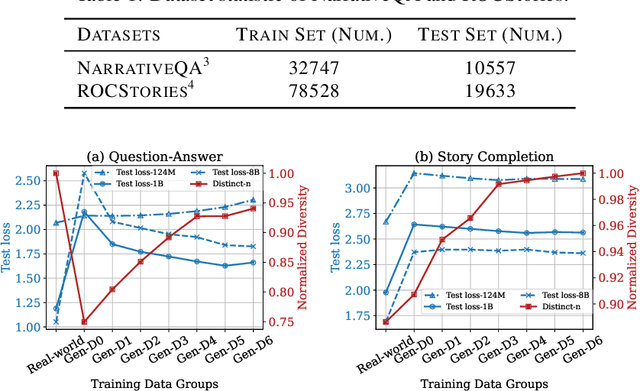

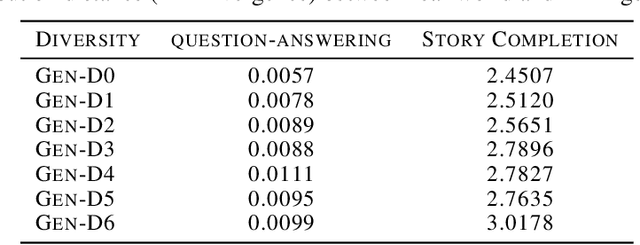

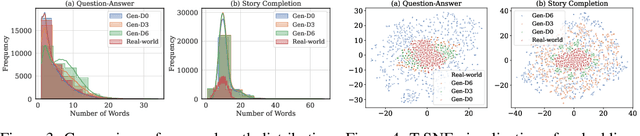

Abstract: With the remarkable generative capabilities of large language models (LLMs), using LLM-generated data to train downstream models has emerged as a promising approach to mitigate data scarcity in specific domains and reduce time-consuming annotations. However, recent studies have highlighted a critical issue: iterative training on self-generated data results in model collapse, where model performance degrades over time. Despite extensive research on the implications of LLM-generated data, these works often neglect the importance of data diversity, a key factor in data quality. In this work, we aim to understand the implications of the diversity of LLM-generated data on downstream model performance. Specifically, we explore how varying levels of diversity in LLM-generated data affect downstream model performance. Additionally, we investigate the performance of models trained on data that mixes different proportions of LLM-generated data, which we refer to as synthetic data. Our experimental results show that, with minimal distribution shift, moderately diverse LLM-generated data can enhance model performance in scenarios with insufficient labeled data, whereas highly diverse generated data has a negative impact. We hope our empirical findings will offer valuable guidance for future studies on LLMs as data generators.

GT-SVQ: A Linear-Time Graph Transformer for Node Classification Using Spiking Vector Quantization

Apr 16, 2025

Abstract: Graph Transformers (GTs), which simultaneously integrate message-passing and self-attention mechanisms, have achieved promising empirical results in some graph prediction tasks. Although these approaches show the potential of Transformers in capturing long-range graph topology information, their quadratic complexity and high computing energy consumption severely limit the scalability of GTs on large-scale graphs. Recently, Spiking Neural Networks (SNNs), a class of brain-inspired neural networks, have facilitated the development of graph representation learning methods with lower computational and storage overhead through their unique event-driven spiking neurons. Inspired by these characteristics, we propose a linear-time Graph Transformer using Spiking Vector Quantization (GT-SVQ) for node classification. GT-SVQ reconstructs codebooks based on rate coding outputs from spiking neurons, and injects the codebooks into self-attention blocks to aggregate global information in linear complexity. In addition, spiking vector quantization effectively alleviates codebook collapse and the reliance on complex machinery (distance measure, auxiliary loss, etc.) present in previous vector quantization-based graph learning methods. In experiments, we compare GT-SVQ with other state-of-the-art baselines on node classification datasets ranging from small to large. Experimental results show that GT-SVQ achieves competitive performance on most datasets while maintaining up to 130x faster inference speed compared to other GTs.

Are Large Language Models In-Context Graph Learners?

Feb 19, 2025

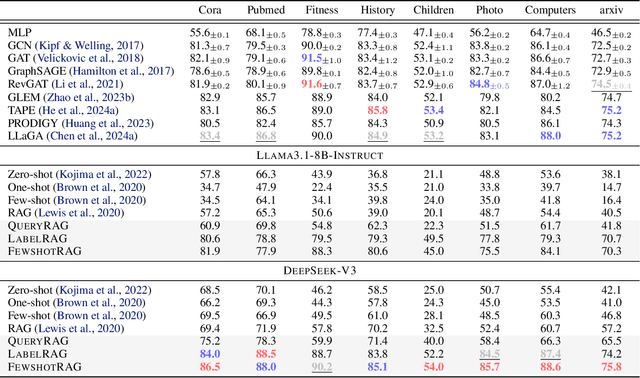

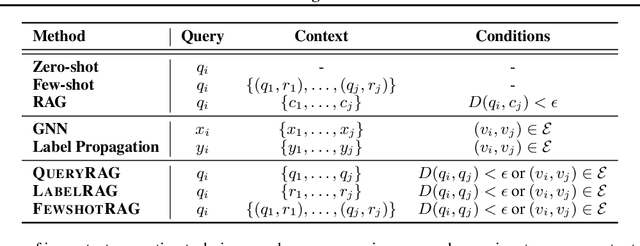

Abstract: Large language models (LLMs) have demonstrated remarkable in-context reasoning capabilities across a wide range of tasks, particularly with unstructured inputs such as language or images. However, LLMs struggle to handle structured data, such as graphs, due to their lack of understanding of non-Euclidean structures. As a result, without additional fine-tuning, their performance significantly lags behind that of graph neural networks (GNNs) in graph learning tasks. In this paper, we show that learning on graph data can be conceptualized as a retrieval-augmented generation (RAG) process, where specific instances (e.g., nodes or edges) act as queries, and the graph itself serves as the retrieved context. Building on this insight, we propose a series of RAG frameworks to enhance the in-context learning capabilities of LLMs for graph learning tasks. Comprehensive evaluations demonstrate that our proposed RAG frameworks significantly improve LLM performance on graph-based tasks, particularly in scenarios where a pretrained LLM must be used without modification or accessed via an API.
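The query-and-retrieved-context framing can be illustrated with a small prompt builder. This is a minimal sketch under stated assumptions, not one of the paper's RAG frameworks: the function name `build_graph_prompt`, the adjacency and text dictionaries, and the prompt wording are all hypothetical.

```python
from typing import Dict, List, Optional


def build_graph_prompt(
    query: str,
    edges: Dict[str, List[str]],
    texts: Dict[str, str],
    labels: Dict[str, Optional[str]],
    classes: List[str],
    max_neighbors: int = 5,
) -> str:
    """Treat the target node as the query and its neighbors as retrieved context.

    All names and the prompt layout are illustrative assumptions.
    """
    # "Retrieval" here is simply a lookup of the query node's direct neighbors.
    neighbors = edges.get(query, [])[:max_neighbors]
    lines = [
        "Classify the target node into one of: " + ", ".join(classes) + ".",
        "",
        "Retrieved neighbors:",
    ]
    for node in neighbors:
        label = labels.get(node) or "unknown"
        lines.append(f"- {node}: {texts[node]} (label: {label})")
    lines += [
        "",
        f"Target node {query}: {texts[query]}",
        "Answer with a single class name.",
    ]
    return "\n".join(lines)
```

The resulting string can be sent to any pretrained LLM without modifying the model, which matches the API-only setting the abstract highlights.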

Measuring Diversity in Synthetic Datasets

Feb 12, 2025

Abstract: Large language models (LLMs) are widely adopted to generate synthetic datasets for various natural language processing (NLP) tasks, such as text classification and summarization. However, accurately measuring the diversity of these synthetic datasets, an aspect crucial for robust model performance, remains a significant challenge. In this paper, we introduce DCScore, a novel method for measuring synthetic dataset diversity from a classification perspective. Specifically, DCScore formulates diversity evaluation as a sample classification task, leveraging mutual relationships among samples. We further provide theoretical verification of the diversity-related axioms satisfied by DCScore, highlighting its role as a principled diversity evaluation method. Experimental results on synthetic datasets reveal that DCScore enjoys a stronger correlation with multiple diversity pseudo-truths of evaluated datasets, underscoring its effectiveness. Moreover, both empirical and theoretical evidence demonstrate that DCScore substantially reduces computational costs compared to existing approaches. Code is available at: https://github.com/BlueWhaleLab/DCScore.
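One way to read "diversity evaluation as a sample classification task" is sketched below: each sample is classified against all samples in the dataset, and the score sums the probability that each sample is assigned to itself. This is an illustrative interpretation of the abstract, not the reference implementation; the cosine kernel, the `temperature` parameter, and the function names are assumptions, and the linked repository should be consulted for the actual method.

```python
import math
from typing import List, Sequence


def cosine(u: Sequence[float], v: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def classification_diversity(
    embeddings: List[Sequence[float]], temperature: float = 0.1
) -> float:
    """Sum over samples of the softmax probability of 'classifying' a sample as itself.

    Assumed formulation: near-duplicates share probability mass, so n identical
    samples score about 1, while n well-separated samples score close to n.
    """
    n = len(embeddings)
    score = 0.0
    for i in range(n):
        logits = [cosine(embeddings[i], embeddings[j]) / temperature for j in range(n)]
        top = max(logits)  # subtract the max for numerical stability
        exps = [math.exp(x - top) for x in logits]
        score += exps[i] / sum(exps)
    return score
```

Under this sketch the score can be read as an effective number of distinct samples, which is why duplicated data adds little to it.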

Revisiting and Benchmarking Graph Autoencoders: A Contrastive Learning Perspective

Oct 14, 2024

Abstract: Graph autoencoders (GAEs) are self-supervised learning models that can learn meaningful representations of graph-structured data by reconstructing the input graph from a low-dimensional latent space. Over the past few years, GAEs have gained significant attention in academia and industry. In particular, the recent advent of GAEs with masked autoencoding schemes marks a significant advancement in graph self-supervised learning research. While numerous GAEs have been proposed, the underlying mechanisms of GAEs are not well understood, and a comprehensive benchmark for GAEs is still lacking. In this work, we bridge the gap between GAEs and contrastive learning by establishing conceptual and methodological connections. We revisit the GAEs studied in previous works and demonstrate how contrastive learning principles can be applied to GAEs. Motivated by these insights, we introduce lrGAE (left-right GAE), a general and powerful GAE framework that leverages contrastive learning principles to learn meaningful representations. Our proposed lrGAE not only facilitates a deeper understanding of GAEs but also sets a new benchmark for GAEs across diverse graph-based learning tasks. The source code for lrGAE, including the baselines and all the code for reproducing the results, is publicly available at https://github.com/EdisonLeeeee/lrGAE.
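Reconstructing a graph from a latent space is commonly done with an inner-product decoder, as in the classic GAE formulation. The sketch below shows that standard decoder with a binary cross-entropy reconstruction loss; it is not lrGAE itself, and the function names and the toy embeddings used with it are assumptions.

```python
import math
from typing import List


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def edge_probability(z_i: List[float], z_j: List[float]) -> float:
    """Inner-product decoder: similar latent vectors imply a likely edge."""
    return sigmoid(sum(a * b for a, b in zip(z_i, z_j)))


def reconstruction_loss(z: List[List[float]], adj: List[List[int]]) -> float:
    """Mean binary cross-entropy over all unordered node pairs (i < j)."""
    eps = 1e-12  # clip probabilities to keep log() finite
    n = len(z)
    total, count = 0.0, 0
    for i in range(n):
        for j in range(i + 1, n):
            p = min(max(edge_probability(z[i], z[j]), eps), 1.0 - eps)
            target = adj[i][j]
            total -= target * math.log(p) + (1 - target) * math.log(1.0 - p)
            count += 1
    return total / count if count else 0.0
```

Viewed this way, connected pairs act like positive pairs and unconnected pairs like negatives, which is one intuition for the link to contrastive learning that the abstract describes.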

One Fits All: Learning Fair Graph Neural Networks for Various Sensitive Attributes

Jun 19, 2024

Abstract: Recent studies have highlighted fairness issues in Graph Neural Networks (GNNs), where they produce discriminatory predictions against specific protected groups categorized by sensitive attributes such as race and age. While various efforts to enhance GNN fairness have made significant progress, these approaches are often tailored to specific sensitive attributes. Consequently, they necessitate retraining the model from scratch to accommodate changes in the sensitive attribute requirement, resulting in high computational costs. To gain deeper insights into this issue, we approach the graph fairness problem from a causal modeling perspective, where we identify the confounding effect induced by the sensitive attribute as the underlying reason. Motivated by this observation, we formulate the fairness problem in graphs from an invariant learning perspective, which aims to learn invariant representations across environments. Accordingly, we propose a graph fairness framework based on invariant learning, namely FairINV, which enables the training of fair GNNs to accommodate various sensitive attributes within a single training session. Specifically, FairINV incorporates sensitive attribute partition and trains fair GNNs by eliminating spurious correlations between the label and various sensitive attributes. Experimental results on several real-world datasets demonstrate that FairINV significantly outperforms state-of-the-art fairness approaches, underscoring its effectiveness. Our code is available via: https://github.com/ZzoomD/FairINV/.

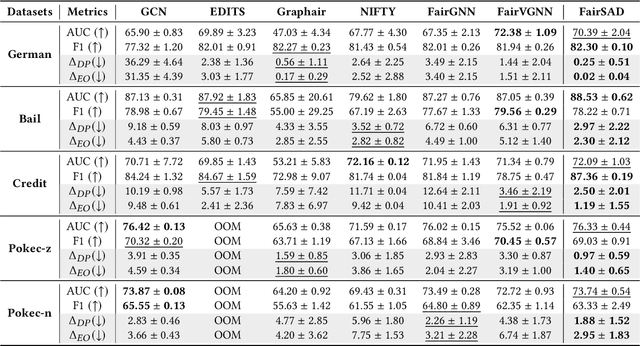

Fair Graph Representation Learning via Sensitive Attribute Disentanglement

May 11, 2024

Abstract: Group fairness for Graph Neural Networks (GNNs), which emphasizes algorithmic decisions neither favoring nor harming certain groups defined by sensitive attributes (e.g., race and gender), has gained considerable attention. In particular, the objective of group fairness is to ensure that the decisions made by GNNs are independent of the sensitive attribute. To achieve this objective, most existing approaches eliminate sensitive attribute information from node representations or algorithmic decisions. However, such approaches may also eliminate task-related information due to its inherent correlation with the sensitive attribute, leading to a sacrifice in utility. In this work, we focus on improving the fairness of GNNs while preserving task-related information and propose a fair GNN framework named FairSAD. Instead of eliminating sensitive attribute information, FairSAD enhances the fairness of GNNs via Sensitive Attribute Disentanglement (SAD), which separates the sensitive attribute-related information into an independent component to mitigate its impact. Additionally, FairSAD utilizes a channel masking mechanism to adaptively identify the sensitive attribute-related component and subsequently decorrelates it. Overall, FairSAD minimizes the impact of the sensitive attribute on GNN outcomes rather than eliminating sensitive attributes, thereby preserving task-related information associated with the sensitive attribute. Furthermore, experiments conducted on several real-world datasets demonstrate that FairSAD outperforms other state-of-the-art methods by a significant margin in terms of both fairness and utility performance. Our source code is available at https://github.com/ZzoomD/FairSAD.
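The independence objective stated above is often quantified with the statistical parity difference, the gap in positive-prediction rates between two sensitive groups. The sketch below computes that widely used metric; whether the paper reports exactly this quantity is an assumption, and the function name and the binary encoding of groups are illustrative.

```python
from typing import Sequence


def statistical_parity_difference(
    preds: Sequence[int], sensitive: Sequence[int]
) -> float:
    """|P(y_hat = 1 | s = 0) - P(y_hat = 1 | s = 1)| for binary predictions.

    A value of 0 means positive predictions are equally frequent in both groups.
    """
    if len(preds) != len(sensitive):
        raise ValueError("preds and sensitive must have the same length")
    group0 = [p for p, s in zip(preds, sensitive) if s == 0]
    group1 = [p for p, s in zip(preds, sensitive) if s == 1]
    if not group0 or not group1:
        raise ValueError("both sensitive groups must be non-empty")
    rate0 = sum(group0) / len(group0)
    rate1 = sum(group1) / len(group1)
    return abs(rate0 - rate1)
```

A model whose predictions are independent of the sensitive attribute drives this value toward zero, ideally without lowering accuracy on the task itself.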

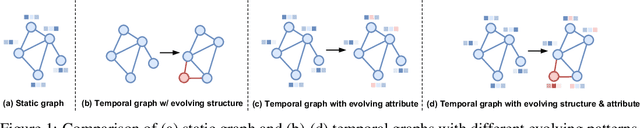

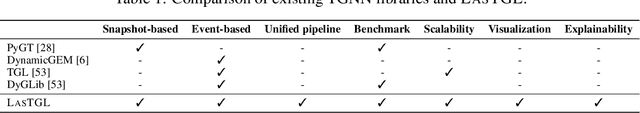

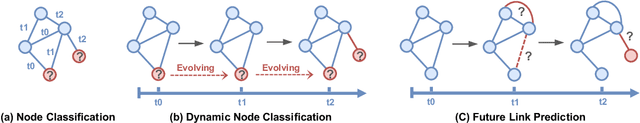

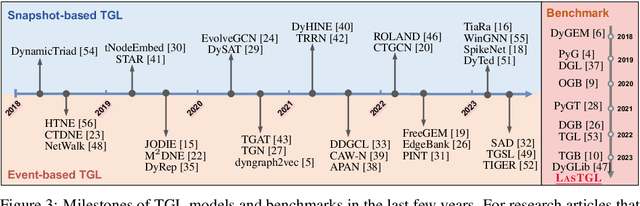

LasTGL: An Industrial Framework for Large-Scale Temporal Graph Learning

Nov 30, 2023

Abstract: Over the past few years, graph neural networks (GNNs) have become powerful and practical tools for learning on (static) graph-structured data. However, many real-world applications, such as social networks and e-commerce, involve temporal graphs where nodes and edges are dynamically evolving. Temporal graph neural networks (TGNNs) have progressively emerged as an extension of GNNs to address time-evolving graphs and have gradually become a trending research topic in both academia and industry. Advancing research and application in such an emerging field necessitates the development of new tools to compose TGNN models and unify their different schemes for dealing with temporal graphs. In this work, we introduce LasTGL, an industrial framework that integrates unified and extensible implementations of common temporal graph learning algorithms for various advanced tasks. The purpose of LasTGL is to provide the essential building blocks for solving temporal graph learning tasks, focusing on the guiding principles of user-friendliness and quick prototyping on which PyTorch is based. In particular, LasTGL provides comprehensive temporal graph datasets, TGNN models and utilities along with well-documented tutorials, making it suitable for absolute beginners and expert deep learning practitioners alike.

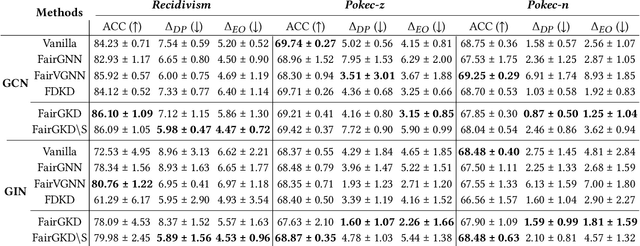

The Devil is in the Data: Learning Fair Graph Neural Networks via Partial Knowledge Distillation

Nov 29, 2023

Abstract: Graph neural networks (GNNs) are increasingly used in many high-stakes tasks, and as a result, their fairness has drawn growing attention. GNNs have been shown to be unfair as they tend to make discriminatory decisions toward certain demographic groups, divided by sensitive attributes such as gender and race. While recent works have been devoted to improving their fairness performance, they often require accessible demographic information. This greatly limits their applicability in real-world scenarios due to legal restrictions. To address this problem, we present a demographic-agnostic method to learn fair GNNs via knowledge distillation, namely FairGKD. Our work is motivated by the empirical observation that training GNNs on partial data (i.e., only node attributes or topology data) can improve their fairness, albeit at the cost of utility. To make a balanced trade-off between fairness and utility performance, we employ a set of fairness experts (i.e., GNNs trained on different partial data) to construct the synthetic teacher, which distills fairer yet informative knowledge to guide the learning of the GNN student. Experiments on several benchmark datasets demonstrate that FairGKD, which does not require access to demographic information, significantly improves the fairness of GNNs while maintaining their utility.

Hetero$^2$Net: Heterophily-aware Representation Learning on Heterogeneous Graphs

Oct 18, 2023

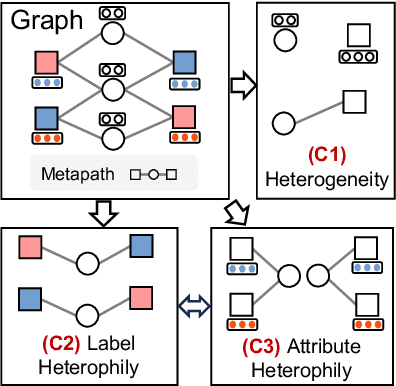

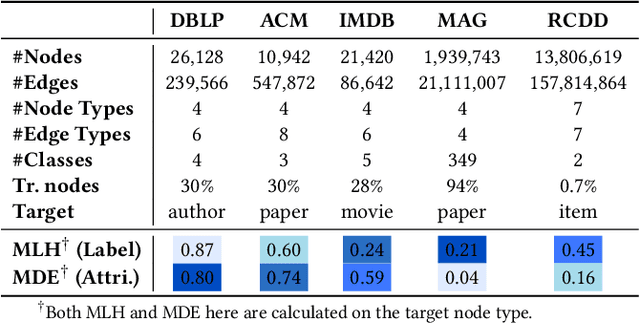

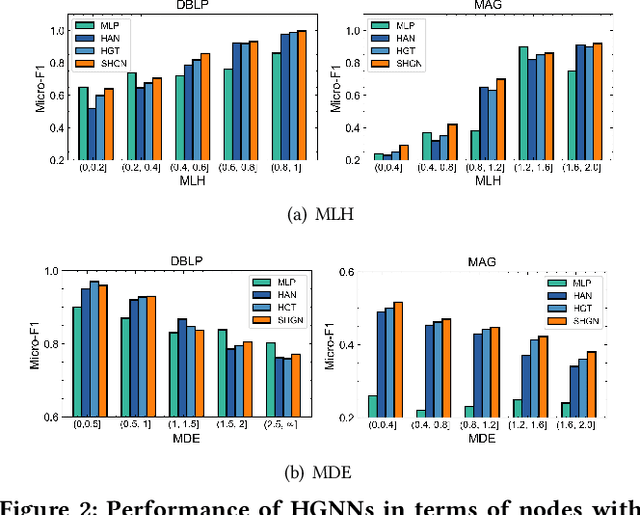

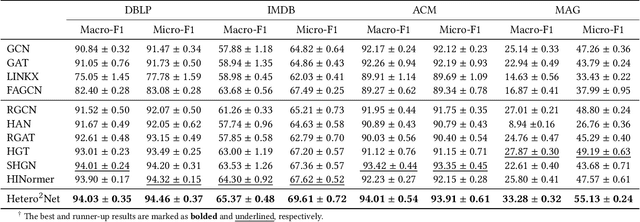

Abstract: Real-world graphs are typically complex, exhibiting heterogeneity in the global structure, as well as strong heterophily within local neighborhoods. While a growing body of literature has revealed the limitations of common graph neural networks (GNNs) in handling homogeneous graphs with heterophily, little work has been conducted on investigating the heterophily properties in the context of heterogeneous graphs. To bridge this research gap, we identify the heterophily in heterogeneous graphs using metapaths and propose two practical metrics to quantitatively describe the levels of heterophily. Through in-depth investigations on several real-world heterogeneous graphs exhibiting varying levels of heterophily, we have observed that heterogeneous graph neural networks (HGNNs), which inherit many mechanisms from GNNs designed for homogeneous graphs, fail to generalize to heterogeneous graphs with heterophily or low levels of homophily. To address the challenge, we present Hetero$^2$Net, a heterophily-aware HGNN that incorporates both masked metapath prediction and masked label prediction tasks to effectively and flexibly handle both homophilic and heterophilic heterogeneous graphs. We evaluate the performance of Hetero$^2$Net on five real-world heterogeneous graph benchmarks with varying levels of heterophily. The results demonstrate that Hetero$^2$Net outperforms strong baselines in the semi-supervised node classification task, providing valuable insights into effectively handling more complex heterogeneous graphs.
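To make the idea of measuring heterophily through metapaths concrete, the sketch below computes a simple homophily ratio over node pairs connected by a two-hop metapath (for example paper-author-paper). It is an illustrative stand-in, not either of the two metrics proposed in the paper; the function names and the dictionary-based graph encoding are assumptions.

```python
from typing import Dict, Set, Tuple


def metapath_pairs(target_to_mid: Dict[str, Set[str]]) -> Set[Tuple[str, str]]:
    """Pairs of target nodes that share an intermediate node (target-mid-target)."""
    mid_to_targets: Dict[str, Set[str]] = {}
    for target, mids in target_to_mid.items():
        for mid in mids:
            mid_to_targets.setdefault(mid, set()).add(target)
    pairs: Set[Tuple[str, str]] = set()
    for targets in mid_to_targets.values():
        ordered = sorted(targets)
        for i, a in enumerate(ordered):
            for b in ordered[i + 1:]:
                pairs.add((a, b))  # stored once per unordered pair
    return pairs


def metapath_homophily(
    target_to_mid: Dict[str, Set[str]], labels: Dict[str, str]
) -> float:
    """Fraction of metapath-connected pairs with the same label (0.0 if no pairs)."""
    pairs = metapath_pairs(target_to_mid)
    if not pairs:
        return 0.0
    same = sum(1 for a, b in pairs if labels[a] == labels[b])
    return same / len(pairs)
```

A ratio near 1 indicates a homophilic metapath-induced graph, while a low ratio indicates heterophily along that metapath.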
