Yuanyu Wan

KeepLoRA: Continual Learning with Residual Gradient Adaptation

Jan 27, 2026

Abstract:Continual learning for pre-trained vision-language models requires balancing three competing objectives: retaining pre-trained knowledge, preserving knowledge from a sequence of learned tasks, and maintaining the plasticity to acquire new knowledge. This paper presents a simple but effective approach called KeepLoRA to effectively balance these objectives. We first analyze the knowledge retention mechanism within the model parameter space and find that general knowledge is mainly encoded in the principal subspace, while task-specific knowledge is encoded in the residual subspace. Motivated by this finding, KeepLoRA learns new tasks by restricting LoRA parameter updates in the residual subspace to prevent interfering with previously learned capabilities. Specifically, we infuse knowledge for a new task by projecting its gradient onto a subspace orthogonal to both the principal subspace of pre-trained model and the dominant directions of previous task features. Our theoretical and empirical analyses confirm that KeepLoRA balances the three objectives and achieves state-of-the-art performance. The implementation code is available at https://github.com/MaolinLuo/KeepLoRA.
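The projection step mentioned in the abstract can be illustrated with a minimal sketch. It assumes the protected directions (the principal subspace and the dominant feature directions of earlier tasks) are already available as an orthonormal basis, and it works on a flat vector rather than on LoRA weight matrices. The function name `project_to_residual` and the toy numbers are hypothetical and are not taken from the KeepLoRA code.

```python
def project_to_residual(grad, basis):
    """Remove from `grad` its component along each vector of an orthonormal basis.

    What remains lies in the orthogonal complement of span(basis).
    """
    residual = list(grad)
    for u in basis:
        # Coefficient of the current residual along the protected direction u.
        coeff = sum(r * ui for r, ui in zip(residual, u))
        residual = [r - coeff * ui for r, ui in zip(residual, u)]
    return residual


# Toy example: the first two coordinate axes are treated as protected directions.
protected = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
grad = [0.5, -2.0, 3.0]
residual_grad = project_to_residual(grad, protected)
```

In this toy case only the third coordinate of the gradient survives, so an update along `residual_grad` leaves the protected directions untouched.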

Deep But Reliable: Advancing Multi-turn Reasoning for Thinking with Images

Dec 19, 2025

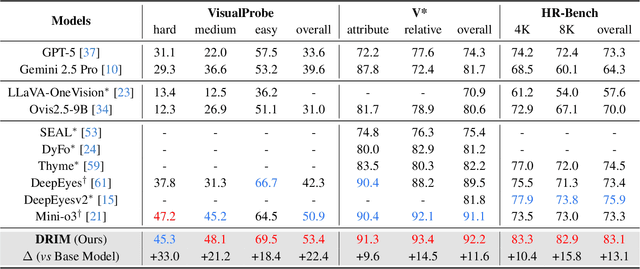

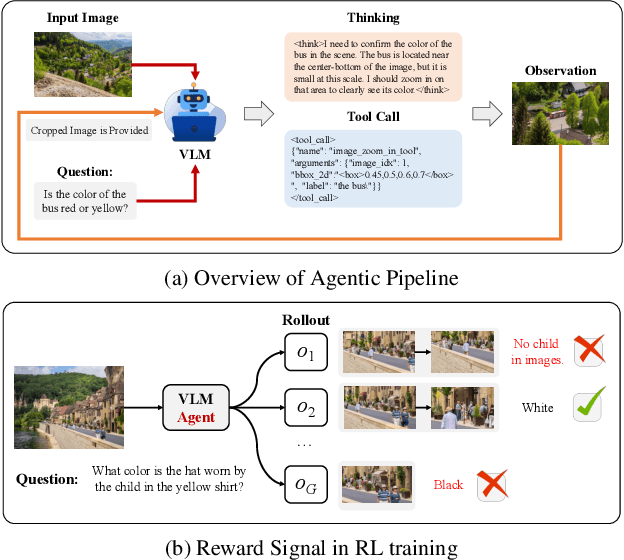

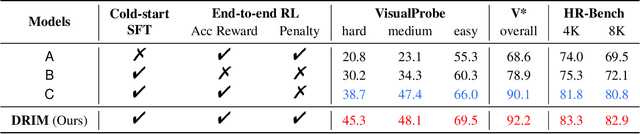

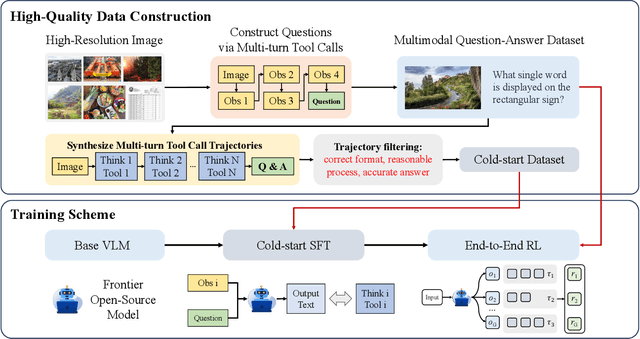

Abstract:Recent advances in large Vision-Language Models (VLMs) have exhibited strong reasoning capabilities on complex visual tasks by thinking with images in their Chain-of-Thought (CoT), which is achieved by actively invoking tools to analyze visual inputs rather than merely perceiving them. However, existing models often struggle to reflect on and correct themselves when attempting incorrect reasoning trajectories. To address this limitation, we propose DRIM, a model that enables deep but reliable multi-turn reasoning when thinking with images in its multimodal CoT. Our pipeline comprises three stages: data construction, cold-start SFT and RL. Based on a high-resolution image dataset, we construct high-difficulty and verifiable visual question-answer pairs, where solving each task requires multi-turn tool calls to reach the correct answer. In the SFT stage, we collect tool trajectories as cold-start data, guiding a multi-turn reasoning pattern. In the RL stage, we introduce redundancy-penalized policy optimization, which incentivizes the model to develop a self-reflective reasoning pattern. The basic idea is to impose judgment on reasoning trajectories and penalize those that produce incorrect answers without sufficient multi-scale exploration. Extensive experiments demonstrate that DRIM achieves superior performance on visual understanding benchmarks.

Beyond the Lower Bound: Bridging Regret Minimization and Best Arm Identification in Lexicographic Bandits

Nov 08, 2025

Abstract:In multi-objective decision-making with hierarchical preferences, lexicographic bandits provide a natural framework for optimizing multiple objectives in a prioritized order. In this setting, a learner repeatedly selects arms and observes reward vectors, aiming to maximize the reward for the highest-priority objective, then the next, and so on. While previous studies have primarily focused on regret minimization, this work bridges the gap between *regret minimization* and *best arm identification* under lexicographic preferences. We propose two elimination-based algorithms to address this joint objective. The first algorithm eliminates suboptimal arms sequentially, layer by layer, in accordance with the objective priorities, and achieves sample complexity and regret bounds comparable to those of the best single-objective algorithms. The second algorithm simultaneously leverages reward information from all objectives in each round, effectively exploiting cross-objective dependencies. Remarkably, it outperforms the known lower bound for the single-objective bandit problem, highlighting the benefit of cross-objective information sharing in the multi-objective setting. Empirical results further validate their superior performance over baselines.
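The layer-by-layer elimination idea can be sketched as a single filtering pass over empirical mean vectors. This is only an illustration of the elimination rule under simplifying assumptions: the means and a shared confidence radius are given as inputs, and no sampling or regret accounting is modeled. The name `lexicographic_filter` and the threshold of twice the radius are assumptions, not the paper's algorithm.

```python
def lexicographic_filter(means, radius):
    """Keep arms that remain plausibly optimal, one objective at a time.

    means[a][i] is the empirical mean of arm a on objective i, with
    objectives listed from highest to lowest priority.
    """
    survivors = list(range(len(means)))
    num_objectives = len(means[0])
    for obj in range(num_objectives):
        best = max(means[a][obj] for a in survivors)
        # An arm survives this layer if its confidence interval overlaps the best one.
        survivors = [a for a in survivors if means[a][obj] >= best - 2 * radius]
    return survivors


means = [[0.90, 0.20], [0.88, 0.70], [0.50, 0.95]]
kept = lexicographic_filter(means, radius=0.05)
```

Here arm 2 is dropped on the first objective even though it is best on the second, and arm 0 is dropped on the second objective, which leaves arm 1 as the lexicographically preferred candidate.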

Dataset Ownership Verification for Pre-trained Masked Models

Jul 16, 2025

Abstract:High-quality open-source datasets have emerged as a pivotal catalyst driving the swift advancement of deep learning, while facing the looming threat of potential exploitation. Protecting these datasets is of paramount importance for the interests of their owners. The verification of dataset ownership has evolved into a crucial approach in this domain; however, existing verification techniques are predominantly tailored to supervised models and contrastive pre-trained models, rendering them ill-suited for direct application to the increasingly prevalent masked models. In this work, we introduce the inaugural methodology addressing this critical, yet unresolved challenge, termed Dataset Ownership Verification for Masked Modeling (DOV4MM). The central objective is to ascertain whether a suspicious black-box model has been pre-trained on a particular unlabeled dataset, thereby assisting dataset owners in safeguarding their rights. DOV4MM is grounded in our empirical observation that when a model is pre-trained on the target dataset, the difficulty of reconstructing masked information within the embedding space exhibits a marked contrast to models not pre-trained on that dataset. We validated the efficacy of DOV4MM through ten masked image models on ImageNet-1K and four masked language models on WikiText-103. The results demonstrate that DOV4MM rejects the null hypothesis, with a $p$-value considerably below 0.05, surpassing all prior approaches. Code is available at https://github.com/xieyc99/DOV4MM.

Continuous Subspace Optimization for Continual Learning

May 17, 2025

Abstract:Continual learning aims to learn multiple tasks sequentially while preserving prior knowledge, but faces the challenge of catastrophic forgetting when acquiring new knowledge. Recently, approaches leveraging pre-trained models have gained increasing popularity to mitigate this issue, due to the strong generalization ability of foundation models. To adjust pre-trained models for new tasks, existing methods usually employ low-rank adaptation, which restricts parameter updates to a fixed low-rank subspace. However, constraining the optimization space inherently compromises the model's learning capacity, resulting in inferior performance. To address the limitation, we propose Continuous Subspace Optimization for Continual Learning (CoSO) to fine-tune the model in a series of subspaces rather than a single one. These sequential subspaces are dynamically determined through the singular value decomposition of gradients. CoSO updates the model by projecting gradients into these subspaces, ensuring memory-efficient optimization. To mitigate forgetting, the optimization subspaces of each task are set to be orthogonal to the historical task subspace. During task learning, CoSO maintains a task-specific component that captures the critical update directions associated with the current task. Upon completing a task, this component is used to update the historical task subspace, laying the groundwork for subsequent learning. Extensive experiments on multiple datasets demonstrate that CoSO significantly outperforms state-of-the-art methods, especially in challenging scenarios with long task sequences.

Quantizing Diffusion Models from a Sampling-Aware Perspective

May 04, 2025

Abstract:Diffusion models have recently emerged as the dominant approach in visual generation tasks. However, the lengthy denoising chains and the computationally intensive noise estimation networks hinder their applicability in low-latency and resource-limited environments. Previous research has endeavored to address these limitations in a decoupled manner, utilizing either advanced samplers or efficient model quantization techniques. In this study, we uncover that quantization-induced noise disrupts directional estimation at each sampling step, further distorting the precise directional estimations of higher-order samplers when solving the sampling equations through discretized numerical methods, thereby altering the optimal sampling trajectory. To attain dual acceleration with high fidelity, we propose a sampling-aware quantization strategy, wherein a Mixed-Order Trajectory Alignment technique is devised to impose a more stringent constraint on the error bounds at each sampling step, facilitating a more linear probability flow. Extensive experiments on sparse-step fast sampling across multiple datasets demonstrate that our approach preserves the rapid convergence characteristics of high-speed samplers while maintaining superior generation quality. Code will be made publicly available soon.

Revisiting Multi-Agent Asynchronous Online Optimization with Delays: the Strongly Convex Case

Mar 13, 2025

Abstract:We revisit multi-agent asynchronous online optimization with delays, where only one of the agents becomes active for making the decision at each round, and the corresponding feedback is received by all the agents after unknown delays. Although previous studies have established an $O(\sqrt{dT})$ regret bound for this problem, they assume that the maximum delay $d$ is knowable or the arrival order of feedback satisfies a special property, which may not hold in practice. In this paper, we surprisingly find that when the loss functions are strongly convex, these assumptions can be eliminated, and the existing regret bound can be significantly improved to $O(d\log T)$ meanwhile. Specifically, to exploit the strong convexity of functions, we first propose a delayed variant of the classical follow-the-leader algorithm, namely FTDL, which is very simple but requires the full information of functions as feedback. Moreover, to handle the more general case with only the gradient feedback, we develop an approximate variant of FTDL by combining it with surrogate loss functions. Experimental results show that the approximate FTDL outperforms the existing algorithm in the strongly convex case.
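A delayed follow-the-leader rule can be sketched for a toy case. The sketch assumes one-dimensional quadratic losses $f_t(x) = \frac{1}{2}(x - c_t)^2$, for which the leader over the received losses is simply the mean of the received centers, and it takes a single-learner view in which the loss of round $t$ with delay $d_t \ge 1$ arrives at the end of round $t + d_t - 1$. The function name, the delay convention, and the default decision `x0` are assumptions for illustration, not the paper's FTDL specification.

```python
def follow_the_delayed_leader(centers, delays, x0=0.0):
    """Each round, play the minimizer of all losses whose feedback has arrived."""
    num_rounds = len(centers)
    arrivals = {}  # round index -> centers whose feedback arrives at the end of that round
    for t, (c, d) in enumerate(zip(centers, delays)):
        arrivals.setdefault(t + d - 1, []).append(c)

    received_sum, received_count = 0.0, 0
    decisions = []
    for t in range(num_rounds):
        # Minimizer of the sum of received quadratics is the mean of their centers.
        x = x0 if received_count == 0 else received_sum / received_count
        decisions.append(x)
        for c in arrivals.get(t, []):
            received_sum += c
            received_count += 1
    return decisions


decisions = follow_the_delayed_leader([1.0, 3.0, 5.0, 7.0], [1, 2, 1, 1])
```

With these inputs the second loss arrives one round late, so the decision in the third round still uses only the first loss. No knowledge of the maximum delay is needed to run the rule.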

Mirror Descent Under Generalized Smoothness

Feb 02, 2025

Abstract:Smoothness is crucial for attaining fast rates in first-order optimization. However, many optimization problems in modern machine learning involve non-smooth objectives. Recent studies relax the smoothness assumption by allowing the Lipschitz constant of the gradient to grow with respect to the gradient norm, which accommodates a broad range of objectives in practice. Despite this progress, existing generalizations of smoothness are restricted to Euclidean geometry with $\ell_2$-norm and only have theoretical guarantees for optimization in the Euclidean space. In this paper, we address this limitation by introducing a new $\ell*$-smoothness concept that measures the norm of Hessian in terms of a general norm and its dual, and establish convergence for mirror-descent-type algorithms, matching the rates under the classic smoothness. Notably, we propose a generalized self-bounding property that facilitates bounding the gradients via controlling suboptimality gaps, serving as a principal component for convergence analysis. Beyond deterministic optimization, we establish an anytime convergence for stochastic mirror descent based on a new bounded noise condition that encompasses the widely adopted bounded or affine noise assumptions.
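For readers unfamiliar with mirror descent in a non-Euclidean geometry, the classical entropic variant on the probability simplex can be sketched as follows. This is the textbook update with a fixed step size, shown only to illustrate what a mirror-descent-type algorithm looks like; it does not implement the generalized smoothness condition or the step-size rules analyzed in the paper. The function name and the toy linear objective are assumptions.

```python
import math


def entropic_mirror_descent(grad_fn, x0, step, num_steps):
    """Mirror descent with the negative-entropy mirror map on the simplex.

    The update multiplies each coordinate by exp(-step * gradient) and renormalizes.
    """
    x = list(x0)
    for _ in range(num_steps):
        g = grad_fn(x)
        weights = [xi * math.exp(-step * gi) for xi, gi in zip(x, g)]
        total = sum(weights)
        x = [w / total for w in weights]
    return x


# Toy linear objective <costs, x>; its minimizer over the simplex is the vertex
# at the coordinate with the smallest cost (index 1 here).
costs = [0.3, 0.1, 0.6]
x_final = entropic_mirror_descent(lambda x: costs, [1 / 3] * 3, step=0.5, num_steps=200)
```

The iterates stay on the simplex without any explicit projection, which is the practical appeal of choosing a mirror map matched to the constraint set.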

Continuous Contrastive Learning for Long-Tailed Semi-Supervised Recognition

Oct 08, 2024

Abstract:Long-tailed semi-supervised learning poses a significant challenge in training models with limited labeled data exhibiting a long-tailed label distribution. Current state-of-the-art LTSSL approaches heavily rely on high-quality pseudo-labels for large-scale unlabeled data. However, these methods often neglect the impact of representations learned by the neural network and struggle with real-world unlabeled data, which typically follows a different distribution than labeled data. This paper introduces a novel probabilistic framework that unifies various recent proposals in long-tail learning. Our framework derives the class-balanced contrastive loss through Gaussian kernel density estimation. We introduce a continuous contrastive learning method, CCL, extending our framework to unlabeled data using reliable and smoothed pseudo-labels. By progressively estimating the underlying label distribution and optimizing its alignment with model predictions, we tackle the diverse distribution of unlabeled data in real-world scenarios. Extensive experiments across multiple datasets with varying unlabeled data distributions demonstrate that CCL consistently outperforms prior state-of-the-art methods, achieving over 4% improvement on the ImageNet-127 dataset. Our source code is available at https://github.com/zhouzihao11/CCL

Projection-Free Variance Reduction Methods for Stochastic Constrained Multi-Level Compositional Optimization

Jun 06, 2024

Abstract:This paper investigates projection-free algorithms for stochastic constrained multi-level optimization. In this context, the objective function is a nested composition of several smooth functions, and the decision set is closed and convex. Existing projection-free algorithms for solving this problem suffer from two limitations: 1) they solely focus on the gradient mapping criterion and fail to match the optimal sample complexities in unconstrained settings; 2) their analysis is exclusively applicable to non-convex functions, without considering convex and strongly convex objectives. To address these issues, we introduce novel projection-free variance reduction algorithms and analyze their complexities under different criteria. For gradient mapping, our complexities improve existing results and match the optimal rates for unconstrained problems. For the widely-used Frank-Wolfe gap criterion, we provide theoretical guarantees that align with those for single-level problems. Additionally, by using a stage-wise adaptation, we further obtain complexities for convex and strongly convex functions. Finally, numerical experiments on different tasks demonstrate the effectiveness of our methods.
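The Frank-Wolfe gap criterion can be made concrete with a deterministic, single-level sketch over the probability simplex. It is far simpler than the stochastic multi-level setting of the paper and uses no variance reduction; the function name, the step size $2/(k+2)$, and the quadratic test objective are assumptions chosen for illustration.

```python
def frank_wolfe_simplex(grad_fn, x0, num_steps):
    """Projection-free minimization over the simplex.

    Returns the final iterate and the Frank-Wolfe gap measured at the
    iterate just before the last update.
    """
    x = list(x0)
    gap = float("inf")
    for k in range(num_steps):
        g = grad_fn(x)
        # Linear minimization over the simplex picks the vertex with the smallest gradient entry.
        i_min = min(range(len(x)), key=lambda i: g[i])
        # Frank-Wolfe gap: max over vertices v of <g, x - v>.
        gap = sum(gi * xi for gi, xi in zip(g, x)) - g[i_min]
        gamma = 2.0 / (k + 2)
        x = [(1.0 - gamma) * xi for xi in x]
        x[i_min] += gamma
    return x, gap


# Toy objective 0.5 * ||x - target||^2 with the target inside the simplex.
target = [0.2, 0.5, 0.3]
x_fw, fw_gap = frank_wolfe_simplex(
    lambda x: [xi - ti for xi, ti in zip(x, target)], [1.0, 0.0, 0.0], num_steps=500
)
```

Each step only needs the index of the smallest gradient entry instead of a projection, and the gap is non-negative on the feasible set and zero exactly at stationary points, which is why it serves as a convergence criterion.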
