Mengjie Shi

Adversarial Erasing with Pruned Elements: Towards Better Graph Lottery Ticket

Aug 10, 2023

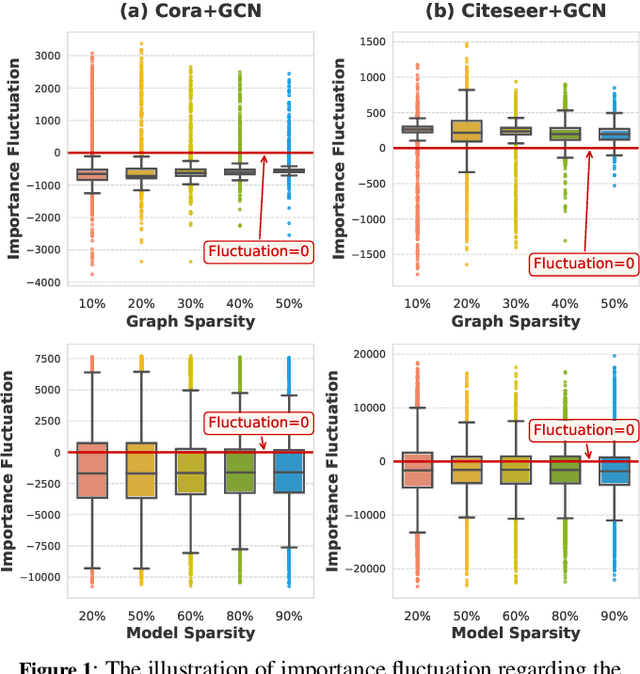

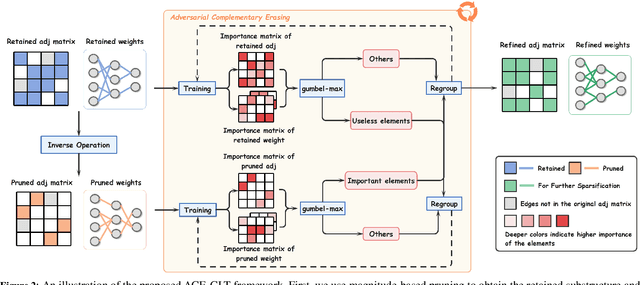

Abstract: Graph Lottery Ticket (GLT), a combination of a core subgraph and a sparse subnetwork, has been proposed to mitigate the computational cost of deep Graph Neural Networks (GNNs) on large input graphs while preserving the original performance. However, the winning GLTs in existing studies are obtained by applying iterative magnitude-based pruning (IMP) without re-evaluating or reconsidering the pruned information. This disregards the dynamic changes in the significance of edges/weights during graph/model structure pruning and thus limits the appeal of the winning tickets. In this paper, we formulate a conjecture: the pruned graph connections and model parameters contain overlooked valuable information that can be re-grouped into the GLT to enhance the final performance. Specifically, we propose an adversarial complementary erasing (ACE) framework to explore the valuable information in the pruned components, thereby developing a more powerful GLT, referred to as the ACE-GLT. The main idea is to mine valuable information from the pruned edges/weights after each round of IMP and to employ the ACE technique to refine the GLT processing. Finally, experimental results demonstrate that our ACE-GLT outperforms existing methods for searching GLTs in diverse tasks. Our code will be made publicly available.
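As a rough illustration of the IMP step the abstract refers to, the following sketch removes the lowest-magnitude fraction of the still-active entries in each round. It is not the ACE-GLT method: the function name `magnitude_prune`, the toy weights, and the pruning fraction are assumptions made for this example, and no retraining or re-evaluation of pruned elements is shown.

```python
import numpy as np

def magnitude_prune(values, mask, fraction):
    """Return a new mask with the lowest-magnitude `fraction` of active entries removed."""
    active = np.flatnonzero(mask)              # flat indices of entries still kept
    n_prune = int(len(active) * fraction)      # how many entries to drop this round
    new_mask = mask.copy().ravel()
    if n_prune > 0:
        order = np.argsort(np.abs(values.ravel()[active]))
        new_mask[active[order[:n_prune]]] = False
    return new_mask.reshape(mask.shape)

# Toy weights (hypothetical values); the same routine could be applied to edge scores.
weights = np.array([0.9, -0.05, 0.4, 0.01, -0.7, 0.2])
mask = np.ones_like(weights, dtype=bool)

for _ in range(2):                             # two pruning rounds
    mask = magnitude_prune(weights, mask, fraction=0.5)

pruned = ~mask                                 # the elements a method like ACE would revisit
```

In plain IMP the entries in `pruned` are never looked at again, which is the limitation the abstract describes.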

Learning-based sound speed reconstruction and aberration correction in linear-array photoacoustic/ultrasound imaging

Jun 19, 2023

Abstract: Photoacoustic (PA) image reconstruction involves acoustic inversion that necessitates the specification of the speed of sound (SoS) within the medium of propagation. Due to the lack of information on the spatial distribution of the SoS within heterogeneous soft tissue, a homogeneous SoS distribution (such as 1540 m/s) is typically assumed in PA image reconstruction, similar to that of ultrasound (US) imaging. Failure to compensate for the SoS variations leads to aberration artefacts, deteriorating the image quality. In this work, we developed a deep learning framework for SoS reconstruction and subsequent aberration correction in a dual-modal PA/US imaging system sharing a clinical US probe. As the PA and US data were inherently co-registered, the SoS distribution reconstructed from US channel data using deep neural networks was utilised for accurate PA image reconstruction. On a numerical and a tissue-mimicking phantom, this framework was able to significantly suppress US aberration artefacts, with a structural similarity index measure (SSIM) of up to 0.8109 and 0.8128, respectively, compared with 0.6096 and 0.5985 for the conventional approach. The networks, trained only on simulated US data, also demonstrated a good generalisation ability on data from ex vivo tissues and the wrist and fingers of healthy human volunteers, and thus could be valuable in various in vivo applications to enhance PA image reconstruction.
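To see why an assumed homogeneous SoS matters, the sketch below computes where a PA source appears when the reconstruction assumes 1540 m/s but the medium is actually slower. It is a simplified one-way, homogeneous-medium calculation, not the paper's framework; the function name and the 1480 m/s value are assumptions chosen for illustration.

```python
def apparent_depth_m(true_depth_m, true_sos, assumed_sos=1540.0):
    """Depth at which a PA source is placed when the wrong SoS is assumed."""
    time_of_flight = true_depth_m / true_sos   # one-way travel time in seconds
    return time_of_flight * assumed_sos        # depth implied by the assumed SoS

true_depth = 0.030                             # source 30 mm below the probe
apparent = apparent_depth_m(true_depth, true_sos=1480.0)
error_mm = (apparent - true_depth) * 1e3       # axial mis-localisation in millimetres
```

Under these assumptions the source is placed roughly 1.2 mm too deep, and in heterogeneous tissue the error varies across the image, which is what produces aberration artefacts.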

Spatiotemporal singular value decomposition for denoising in photoacoustic imaging with low-energy excitation light source

Jul 09, 2022

Abstract: Photoacoustic (PA) imaging is an emerging hybrid imaging modality that combines rich optical spectroscopic contrast and high ultrasonic resolution and thus holds tremendous promise for a wide range of pre-clinical and clinical applications. Compact and affordable light sources such as light-emitting diodes (LEDs) and laser diodes (LDs) are promising alternatives to the bulky and expensive solid-state laser systems that are commonly used as PA light sources. These could accelerate the clinical translation of PA technology. However, PA signals generated with these light sources are readily degraded by noise due to the low optical fluence, leading to a decreased signal-to-noise ratio (SNR) in PA images. In this work, a spatiotemporal singular value decomposition (SVD) based PA denoising method was investigated for these light sources, which usually have low fluence and high repetition rates. The proposed method leverages both spatial and temporal correlations between radiofrequency (RF) data frames. Validation was performed on simulations and on in vivo PA data acquired from human fingers (2D) and forearm (3D) using an LED-based system. Spatiotemporal SVD greatly enhanced the PA signals of blood vessels corrupted by noise while preserving a high temporal resolution to slow motions, improving the SNR of in vivo PA images by 1.1, 0.7, and 1.9 times compared to single frame-based wavelet denoising, averaging across 200 frames, and single frame without denoising, respectively. The proposed method demonstrated a processing time of around 50 µs per frame with SVD acceleration and GPU. Thus, spatiotemporal SVD is well suited to PA imaging systems with low-energy excitation light sources for real-time in vivo applications.
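A minimal sketch of the general idea behind spatiotemporal SVD denoising follows: stack the RF frames into a space-by-time matrix, keep the leading singular components, and reshape back. The fixed `rank`, the synthetic data, and the function name `svd_denoise` are assumptions for this example; the abstract does not state how the authors select components or accelerate the decomposition.

```python
import numpy as np

def svd_denoise(frames, rank):
    """Low-rank filter over frames shaped (n_frames, n_samples, n_channels)."""
    n_frames = frames.shape[0]
    casorati = frames.reshape(n_frames, -1).T          # rows: space, columns: time
    u, s, vt = np.linalg.svd(casorati, full_matrices=False)
    s[rank:] = 0.0                                     # discard the weaker components
    denoised = (u * s) @ vt
    return denoised.T.reshape(frames.shape)

# Synthetic test data: a static signal repeated over 20 frames plus Gaussian noise.
rng = np.random.default_rng(0)
signal = np.outer(np.sin(np.linspace(0, 6 * np.pi, 64)), np.hanning(16))
clean = np.repeat(signal[None], 20, axis=0)
noisy = clean + rng.normal(scale=0.5, size=clean.shape)

denoised = svd_denoise(noisy, rank=1)
err_before = np.mean((noisy - clean) ** 2)
err_after = np.mean((denoised - clean) ** 2)
```

Because the signal is correlated across frames while the noise is not, most of the signal energy concentrates in the first few singular components, so truncation lowers the error on this toy data.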

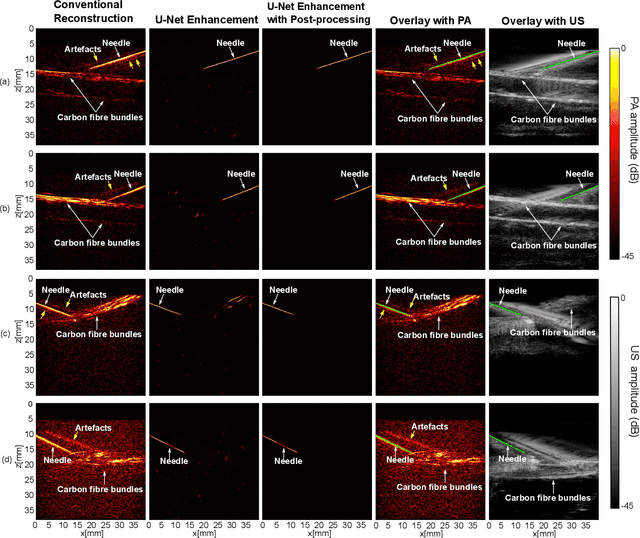

Improving needle visibility in LED-based photoacoustic imaging using deep learning with semi-synthetic datasets

Nov 15, 2021

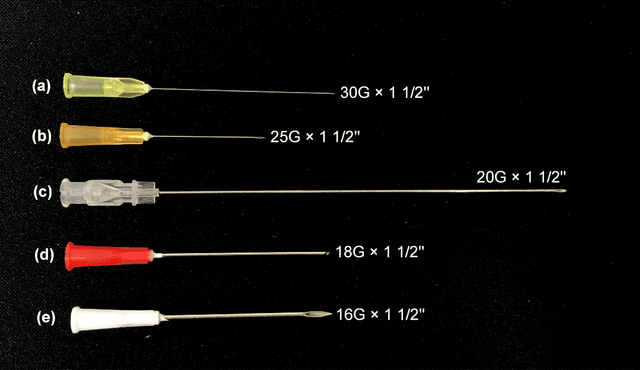

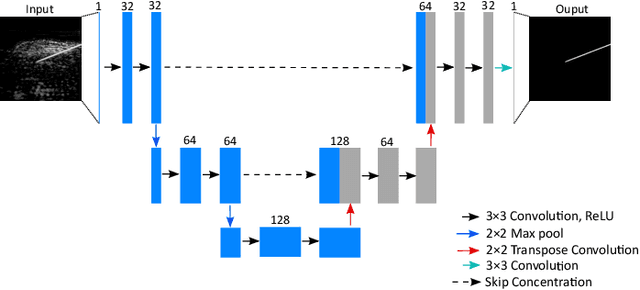

Abstract: Photoacoustic imaging has shown great potential for guiding minimally invasive procedures by accurate identification of critical tissue targets and invasive medical devices (such as metallic needles). Recently, the use of light-emitting diodes (LEDs) as the excitation light sources has further accelerated its clinical translation owing to their high affordability and portability. However, needle visibility in LED-based photoacoustic imaging is compromised, primarily due to the low optical fluence. In this work, we propose a deep learning framework based on a modified U-Net to improve the visibility of clinical metallic needles with an LED-based photoacoustic and ultrasound imaging system. This framework included the generation of semi-synthetic training datasets combining simulated data to represent features from the needles and in vivo measurements for the tissue background. Evaluation of the trained neural network was performed with needle insertions into a blood-vessel-mimicking phantom, pork joint tissue ex vivo, and measurements on human volunteers. This deep learning-based framework substantially improved the needle visibility in photoacoustic imaging in vivo compared to conventional reconstructions by suppressing background noise and image artefacts, achieving ~1.9 times higher signal-to-noise ratios and an increase of the intersection over union (IoU) from 16.4% to 61.9%. Thus, the proposed framework could be helpful for reducing complications during percutaneous needle insertions by accurate identification of clinical needles in photoacoustic imaging.
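For readers unfamiliar with the IoU metric quoted above, here is a small sketch of how it is typically computed on binary masks. The masks below are made-up toy data, and the function name `iou` is an assumption; the abstract does not describe how the authors thresholded their images.

```python
import numpy as np

def iou(pred, target):
    """Intersection over union of two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    target = np.asarray(target, dtype=bool)
    union = np.logical_or(pred, target).sum()
    if union == 0:
        return 1.0                                # both masks empty: treat as perfect overlap
    return np.logical_and(pred, target).sum() / union

# Hypothetical needle masks on an 8 x 8 grid.
target = np.zeros((8, 8), dtype=bool)
target[3:5, 1:7] = True                           # ground-truth needle, 12 pixels
pred = np.zeros((8, 8), dtype=bool)
pred[3:6, 2:7] = True                             # predicted needle, 15 pixels

score = iou(pred, target)                         # 10 shared pixels / 17 in the union
```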
