Adrien E. Desjardins

Improving needle visibility in LED-based photoacoustic imaging using deep learning with semi-synthetic datasets

Nov 15, 2021

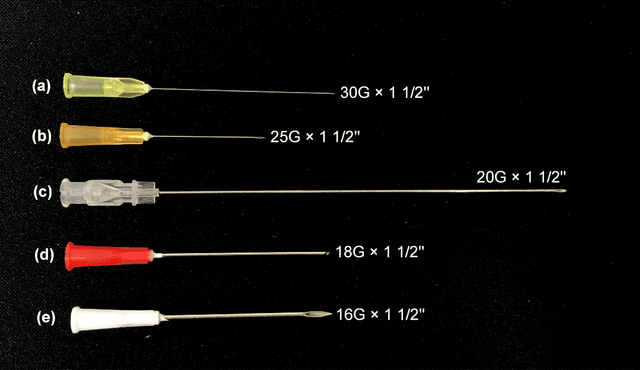

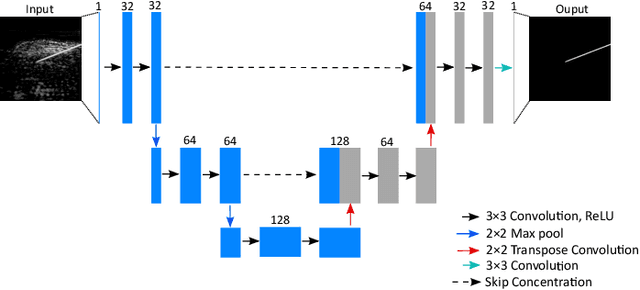

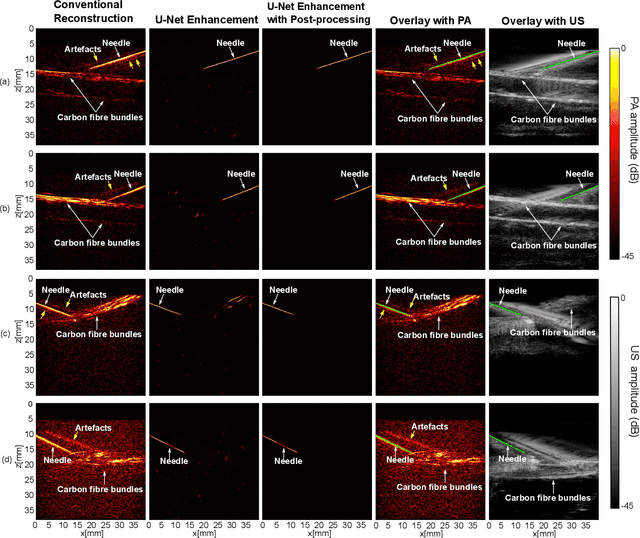

Abstract: Photoacoustic imaging has shown great potential for guiding minimally invasive procedures by accurately identifying critical tissue targets and invasive medical devices (such as metallic needles). Recently, the use of light-emitting diodes (LEDs) as excitation light sources has further accelerated its clinical translation, owing to the high affordability and portability of LEDs. However, needle visibility in LED-based photoacoustic imaging is compromised, primarily due to the low optical fluence of LEDs. In this work, we propose a deep learning framework based on a modified U-Net to improve the visibility of clinical metallic needles with an LED-based photoacoustic and ultrasound imaging system. This framework includes the generation of semi-synthetic training datasets that combine simulated data, representing features from the needles, with in vivo measurements, representing the tissue background. The trained neural network was evaluated with needle insertions into a blood-vessel-mimicking phantom and into pork joint tissue ex vivo, and with measurements on human volunteers. Compared to conventional reconstructions, this deep learning-based framework substantially improved needle visibility in photoacoustic imaging in vivo by suppressing background noise and image artefacts, achieving ~1.9 times higher signal-to-noise ratios and an increase in the intersection over union (IoU) from 16.4% to 61.9%. Thus, the proposed framework could help reduce complications during percutaneous needle insertions by enabling accurate identification of clinical needles in photoacoustic imaging.
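The abstract reports needle visibility with two metrics: intersection over union (IoU) and signal-to-noise ratio (SNR). The sketch below shows one common way to compute them on 2D images with NumPy. IoU is the standard ratio of overlapping to combined pixels in two binary needle masks. The SNR definition used here (mean amplitude inside a needle region divided by the standard deviation of a background region) is an assumption for illustration; the paper's exact definition and region choices are not given in the abstract. The function names `iou` and `snr` are hypothetical.

```python
import numpy as np


def iou(pred_mask: np.ndarray, true_mask: np.ndarray) -> float:
    """Intersection over union of two binary masks.

    Returns 1.0 when both masks are empty (nothing to segment, nothing predicted).
    """
    pred = pred_mask.astype(bool)
    true = true_mask.astype(bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:
        return 1.0
    intersection = np.logical_and(pred, true).sum()
    return float(intersection / union)


def snr(image: np.ndarray, signal_mask: np.ndarray, background_mask: np.ndarray) -> float:
    """Linear SNR (assumed definition): mean amplitude in the needle region
    divided by the standard deviation (ddof=0) of the background region."""
    signal = image[signal_mask.astype(bool)]
    background = image[background_mask.astype(bool)]
    return float(signal.mean() / background.std())


if __name__ == "__main__":
    # Toy 4x4 masks: prediction covers row 1; ground truth covers part of row 1 plus one pixel in row 2.
    pred = np.zeros((4, 4), dtype=bool)
    pred[1, :] = True
    true = np.zeros((4, 4), dtype=bool)
    true[1, 1:] = True
    true[2, 0] = True
    print(iou(pred, true))  # 3 overlapping pixels / 5 pixels in the union

    # Toy image: row 0 is the "needle", row 1 is the "background".
    img = np.array([[10.0, 10.0, 10.0, 10.0], [1.0, 3.0, 1.0, 3.0]])
    sig = np.zeros_like(img, dtype=bool)
    sig[0] = True
    bg = np.zeros_like(img, dtype=bool)
    bg[1] = True
    print(snr(img, sig, bg))
```

Under these assumptions, a "~1.9 times higher" SNR would correspond to the ratio `snr(network_output, ...) / snr(conventional_reconstruction, ...)` evaluated with the same needle and background regions, and the IoU values would compare a thresholded needle segmentation against a reference mask.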
