Chang Yao

Learner-Tailored Program Repair: A Solution Generator with Iterative Edit-Driven Retrieval Enhancement

Jan 13, 2026

Abstract: With the development of large language models (LLMs) in the field of programming, intelligent programming coaching systems have gained widespread attention. However, most research focuses on repairing the buggy code of programming learners without providing the underlying causes of the bugs. To address this gap, we introduce a novel task, namely LPR (Learner-Tailored Program Repair). We then propose a novel and effective framework, the Learner-Tailored Solution Generator, to enhance program repair while offering the bug descriptions for the buggy code. In the first stage, we utilize a repair solution retrieval framework to construct a solution retrieval database and then employ an edit-driven code retrieval approach to retrieve valuable solutions, guiding LLMs in identifying and fixing the bugs in buggy code. In the second stage, we propose a solution-guided program repair method, which fixes the code and provides explanations under the guidance of retrieval solutions. Moreover, we propose an Iterative Retrieval Enhancement method that utilizes evaluation results of the generated code to iteratively optimize the retrieval direction and explore more suitable repair strategies, improving performance in practical programming coaching scenarios. The experimental results show that our approach outperforms a set of baselines by a large margin, validating the effectiveness of our framework for the newly proposed LPR task.

CoLA: Collaborative Low-Rank Adaptation

May 21, 2025

Abstract: The scaling law of Large Language Models (LLMs) reveals a power-law relationship, showing diminishing returns on performance as model scale increases. While training LLMs from scratch is resource-intensive, fine-tuning a pre-trained model for specific tasks has become a practical alternative. Full fine-tuning (FFT) achieves strong performance; however, it is computationally expensive and inefficient. Parameter-efficient fine-tuning (PEFT) methods, like LoRA, have been proposed to address these challenges by freezing the pre-trained model and adding lightweight task-specific modules. LoRA, in particular, has proven effective, but its application to multi-task scenarios is limited by interference between tasks. Recent approaches, such as Mixture-of-Experts (MoE) and asymmetric LoRA, have aimed to mitigate these issues but still struggle with sample scarcity and noise interference due to their fixed structure. In response, we propose CoLA, a more flexible LoRA architecture with an efficient initialization scheme, and introduce three collaborative strategies to enhance performance by better utilizing the quantitative relationships between matrices $A$ and $B$. Our experiments demonstrate the effectiveness and robustness of CoLA, outperforming existing PEFT methods, especially in low-sample scenarios. Our data and code are fully publicly available at https://github.com/zyy-2001/CoLA.

Boosting MLLM Reasoning with Text-Debiased Hint-GRPO

Mar 31, 2025

Abstract: MLLM reasoning has drawn widespread research for its excellent problem-solving capability. Current reasoning methods fall into two types: PRM, which supervises the intermediate reasoning steps, and ORM, which supervises the final results. Recently, DeepSeek-R1 has challenged the traditional view that PRM outperforms ORM by demonstrating strong generalization performance using an ORM method (i.e., GRPO). However, current GRPO algorithms for MLLMs still struggle to handle challenging and complex multimodal reasoning tasks (e.g., mathematical reasoning). In this work, we reveal two problems that impede the performance of GRPO on the MLLM: low data utilization and text-bias. Low data utilization means that GRPO cannot acquire positive rewards to update the MLLM on difficult samples, and text-bias is a phenomenon in which the MLLM bypasses the image condition and relies solely on the text condition for generation after GRPO training. To tackle these problems, this work proposes Hint-GRPO, which improves data utilization by adaptively providing hints for samples of varying difficulty, and text-bias calibration, which mitigates text-bias by calibrating the token prediction logits with the image condition at test time. Experiment results on three base MLLMs across eleven datasets demonstrate that our proposed methods advance the reasoning capability of the original MLLM by a large margin, exhibiting superior performance to existing MLLM reasoning methods. Our code is available at https://github.com/hqhQAQ/Hint-GRPO.

Less is More: Adaptive Program Repair with Bug Localization and Preference Learning

Mar 09, 2025

Abstract: Automated Program Repair (APR) is a task to automatically generate patches for the buggy code. However, most research focuses on generating correct patches while ignoring the consistency between the fixed code and the original buggy code. How to conduct adaptive bug fixing and generate patches with minimal modifications have seldom been investigated. To bridge this gap, we first introduce a novel task, namely AdaPR (Adaptive Program Repair). We then propose a two-stage approach AdaPatcher (Adaptive Patch Generator) to enhance program repair while maintaining the consistency. In the first stage, we utilize a Bug Locator with self-debug learning to accurately pinpoint bug locations. In the second stage, we train a Program Modifier to ensure consistency between the post-modified fixed code and the pre-modified buggy code. The Program Modifier is enhanced with a location-aware repair learning strategy to generate patches based on identified buggy lines, a hybrid training strategy for selective reference and an adaptive preference learning to prioritize fewer changes. The experimental results show that our approach outperforms a set of baselines by a large margin, validating the effectiveness of our two-stage framework for the newly proposed AdaPR task.

CoDe: Communication Delay-Tolerant Multi-Agent Collaboration via Dual Alignment of Intent and Timeliness

Jan 09, 2025

Abstract: Communication has been widely employed to enhance multi-agent collaboration. Previous research has typically assumed delay-free communication, a strong assumption that is challenging to meet in practice. However, real-world agents suffer from channel delays, receiving messages sent at different time points, termed Asynchronous Communication, leading to cognitive biases and breakdowns in collaboration. This paper first defines two communication delay settings in MARL and emphasizes their harm to collaboration. To handle the above delays, this paper proposes a novel framework, Communication Delay-tolerant Multi-Agent Collaboration (CoDe). First, CoDe learns an intent representation as messages through future action inference, reflecting the stable future behavioral trends of the agents. Then, CoDe devises a dual alignment mechanism of intent and timeliness to strengthen the fusion process of asynchronous messages. In this way, agents can extract the long-term intent of others, even from delayed messages, and selectively utilize the most recent messages that are relevant to their intent. Experimental results demonstrate that CoDe outperforms baseline algorithms in three MARL benchmarks without delay and exhibits robustness under fixed and time-varying delays.

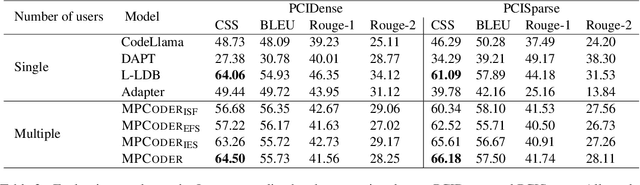

MPCODER: Multi-user Personalized Code Generator with Explicit and Implicit Style Representation Learning

Jun 25, 2024

Abstract: Large Language Models (LLMs) have demonstrated great potential for assisting developers in their daily development. However, most research focuses on generating correct code; how to use LLMs to generate personalized code has seldom been investigated. To bridge this gap, we propose MPCoder (Multi-user Personalized Code Generator) to generate personalized code for multiple users. To better learn coding style features, we utilize explicit coding style residual learning to capture the syntax code style standards and implicit style learning to capture the semantic code style conventions. We train a multi-user style adapter to better differentiate the implicit feature representations of different users through contrastive learning, ultimately enabling personalized code generation for multiple users. We further propose a novel evaluation metric for estimating similarities between codes of different coding styles. The experimental results show the effectiveness of our approach for this novel task.

Improved Regret for Bandit Convex Optimization with Delayed Feedback

Feb 14, 2024

Abstract: We investigate bandit convex optimization (BCO) with delayed feedback, where only the loss value of the action is revealed under an arbitrary delay. Previous studies have established a regret bound of $O(T^{3/4}+d^{1/3}T^{2/3})$ for this problem, where $d$ is the maximum delay, by simply feeding delayed loss values to the classical bandit gradient descent (BGD) algorithm. In this paper, we develop a novel algorithm to enhance the regret, which carefully exploits the delayed bandit feedback via a blocking update mechanism. Our analysis first reveals that the proposed algorithm can decouple the joint effect of the delays and bandit feedback on the regret, and improve the regret bound to $O(T^{3/4}+\sqrt{dT})$ for convex functions. Compared with the previous result, our regret matches the $O(T^{3/4})$ regret of BGD in the non-delayed setting for a larger amount of delay, i.e., $d=O(\sqrt{T})$, instead of $d=O(T^{1/4})$. Furthermore, we consider the case with strongly convex functions, and prove that the proposed algorithm can enjoy a better regret bound of $O(T^{2/3}\log^{1/3}T+d\log T)$. Finally, we show that in a special case with unconstrained action sets, it can be simply extended to achieve a regret bound of $O(\sqrt{T\log T}+d\log T)$ for strongly convex and smooth functions.
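
As a reader-side check (not taken from the paper itself), the two delay thresholds quoted in the abstract follow from asking when each delay-dependent term is dominated by $T^{3/4}$:

$$
\begin{aligned}
d^{1/3}T^{2/3} \le T^{3/4} &\iff d^{1/3} \le T^{1/12} \iff d \le T^{1/4},\\
\sqrt{dT} \le T^{3/4} &\iff dT \le T^{3/2} \iff d \le \sqrt{T}.
\end{aligned}
$$

So the earlier bound stays at $O(T^{3/4})$ only for $d=O(T^{1/4})$, while the improved bound does so for any $d=O(\sqrt{T})$.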

Improved Projection-free Online Continuous Submodular Maximization

May 29, 2023

Abstract: We investigate the problem of online learning with monotone and continuous DR-submodular reward functions, which has received great attention recently. To efficiently handle this problem, especially in the case with complicated decision sets, previous studies have proposed an efficient projection-free algorithm called Mono-Frank-Wolfe (Mono-FW) using $O(T)$ gradient evaluations and linear optimization steps in total. However, it only attains a $(1-1/e)$-regret bound of $O(T^{4/5})$. In this paper, we propose an improved projection-free algorithm, namely POBGA, which reduces the regret bound to $O(T^{3/4})$ while keeping the same computational complexity as Mono-FW. Instead of modifying Mono-FW, our key idea is to make a novel combination of a projection-based algorithm called online boosting gradient ascent, an infeasible projection technique, and a blocking technique. Furthermore, we consider the decentralized setting and develop a variant of POBGA, which not only reduces the current best regret bound of efficient projection-free algorithms for this setting from $O(T^{4/5})$ to $O(T^{3/4})$, but also reduces the total communication complexity from $O(T)$ to $O(\sqrt{T})$.

Non-stationary Online Convex Optimization with Arbitrary Delays

May 20, 2023

Abstract: Online convex optimization (OCO) with arbitrary delays, in which gradients or other information of functions could be arbitrarily delayed, has received increasing attention recently. Different from previous studies that focus on stationary environments, this paper investigates the delayed OCO in non-stationary environments, and aims to minimize the dynamic regret with respect to any sequence of comparators. To this end, we first propose a simple algorithm, namely DOGD, which performs a gradient descent step for each delayed gradient according to their arrival order. Despite its simplicity, our novel analysis shows that DOGD can attain an $O(\sqrt{dT}(P_T+1))$ dynamic regret bound in the worst case, where $d$ is the maximum delay, $T$ is the time horizon, and $P_T$ is the path length of comparators. More importantly, in case delays do not change the arrival order of gradients, it can automatically reduce the dynamic regret to $O(\sqrt{S}(1+P_T))$, where $S$ is the sum of delays. Furthermore, we develop an improved algorithm, which can reduce those dynamic regret bounds achieved by DOGD to $O(\sqrt{dT(P_T+1)})$ and $O(\sqrt{S(1+P_T)})$, respectively. The essential idea is to run multiple DOGD with different learning rates, and utilize a meta-algorithm to track the best one based on their delayed performance. Finally, we demonstrate that our improved algorithm is optimal in both cases by deriving a matching lower bound.
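
The DOGD idea described in the abstract (one gradient descent step per delayed gradient, applied in arrival order) might be sketched roughly as follows. This is an illustrative sketch, not the authors' implementation: the function names `delayed_ogd` and `project_l2_ball`, the fixed step size `eta`, the L2-ball decision set, and the delay convention (a gradient queried in round `t` arrives at the end of round `t + delays[t] - 1`) are all assumptions made for the example.

```python
import numpy as np

def project_l2_ball(x, radius):
    """Euclidean projection onto the L2 ball of the given radius (assumed decision set)."""
    norm = np.linalg.norm(x)
    return x if norm <= radius else x * (radius / norm)

def delayed_ogd(grad_fns, delays, dim, eta=0.1, radius=1.0):
    """Hypothetical sketch of gradient descent with delayed gradients.

    grad_fns[t](x) returns the gradient of round t's loss at x.
    delays[t] >= 1; the gradient queried in round t becomes available
    at the end of round t + delays[t] - 1 (assumed convention).
    Returns the list of decisions played in each round.
    """
    arrivals = {}          # arrival round -> gradients, stored in query order
    y = np.zeros(dim)      # current iterate
    played = []
    for t in range(len(grad_fns)):
        x_t = y.copy()
        played.append(x_t)
        # Query the gradient now; it only becomes usable after its delay.
        g = grad_fns[t](x_t)
        arrivals.setdefault(t + delays[t] - 1, []).append(g)
        # Apply one projected descent step per gradient arriving this round.
        for g_arrived in arrivals.pop(t, []):
            y = project_l2_ball(y - eta * g_arrived, radius)
    return played

# Usage: quadratic losses 0.5 * ||x - c||^2 with every gradient delayed by 3 rounds.
c = np.array([0.5, 0.0])
decisions = delayed_ogd([lambda x: x - c] * 5, [3] * 5, dim=2, eta=0.5)
```

In this sketch, gradients that would arrive after the final round are simply never applied, and ties among gradients arriving in the same round are broken by query order; both are choices of the example rather than claims about the paper.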

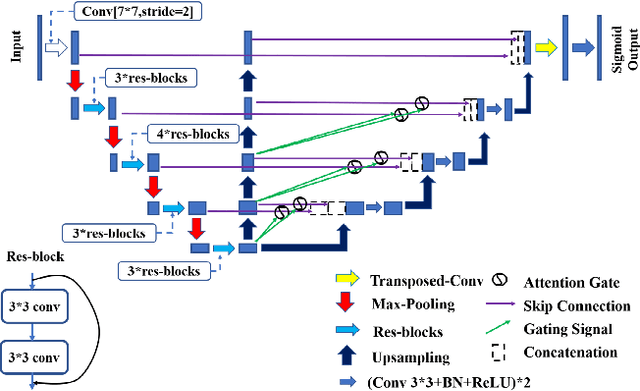

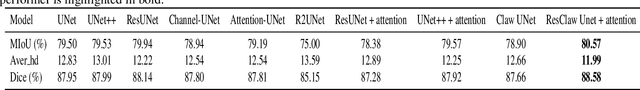

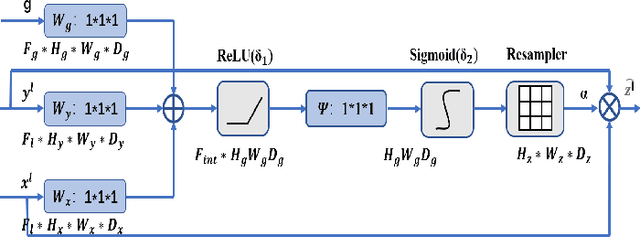

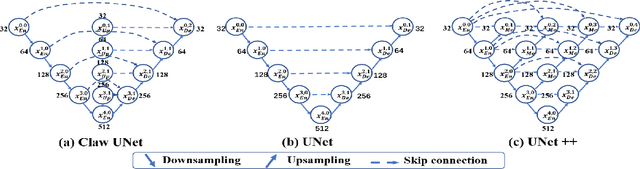

Claw U-Net: A Unet-based Network with Deep Feature Concatenation for Scleral Blood Vessel Segmentation

Oct 20, 2020

Abstract: Sturge-Weber syndrome (SWS) is a vascular malformation disease, and it may cause blindness if the patient's condition is severe. Clinical results show that SWS can be divided into two types based on the characteristics of scleral blood vessels. Therefore, how to accurately segment scleral blood vessels has become a significant problem in computer-aided diagnosis. In this research, we propose to continuously upsample the bottom layer's feature maps to preserve image details, and design a novel Claw UNet based on UNet for scleral blood vessel segmentation. Specifically, the residual structure is used to increase the number of network layers in the feature extraction stage to learn deeper features. In the decoding stage, by fusing the features of the encoding, upsampling, and decoding parts, Claw UNet can achieve effective segmentation in the fine-grained regions of scleral blood vessels. To effectively extract small blood vessels, we use the attention mechanism to calculate the attention coefficient of each position in images. Claw UNet outperforms other UNet-based networks on a scleral blood vessel image dataset.
