Yichen Xiao

MiniMax-Speech: Intrinsic Zero-Shot Text-to-Speech with a Learnable Speaker Encoder

May 12, 2025

Abstract:We introduce MiniMax-Speech, an autoregressive Transformer-based Text-to-Speech (TTS) model that generates high-quality speech. A key innovation is our learnable speaker encoder, which extracts timbre features from a reference audio without requiring its transcription. This enables MiniMax-Speech to produce highly expressive speech with timbre consistent with the reference in a zero-shot manner, while also supporting one-shot voice cloning with exceptionally high similarity to the reference voice. In addition, the overall quality of the synthesized audio is enhanced through the proposed Flow-VAE. Our model supports 32 languages and demonstrates excellent performance across multiple objective and subjective evaluation metrics. Notably, it achieves state-of-the-art (SOTA) results on objective voice cloning metrics (Word Error Rate and Speaker Similarity) and has secured the top position on the public TTS Arena leaderboard. Another key strength of MiniMax-Speech, granted by the robust and disentangled representations from the speaker encoder, is its extensibility without modifying the base model, enabling various applications such as: arbitrary voice emotion control via LoRA; text to voice (T2V) by synthesizing timbre features directly from text description; and professional voice cloning (PVC) by fine-tuning timbre features with additional data. We encourage readers to visit https://minimax-ai.github.io/tts_tech_report for more examples.

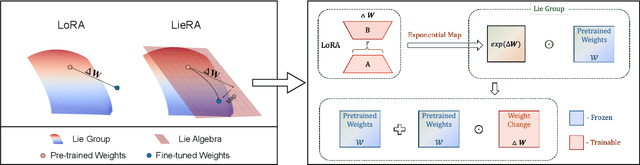

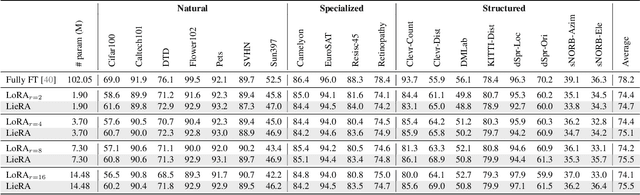

Generalized Tensor-based Parameter-Efficient Fine-Tuning via Lie Group Transformations

Apr 01, 2025

Abstract:Adapting pre-trained foundation models for diverse downstream tasks is a core practice in artificial intelligence. However, the wide range of tasks and high computational costs make full fine-tuning impractical. To overcome this, parameter-efficient fine-tuning (PEFT) methods like LoRA have emerged and are becoming a growing research focus. Despite the success of these methods, they are primarily designed for linear layers, focusing on two-dimensional matrices while largely ignoring higher-dimensional parameter spaces like convolutional kernels. Moreover, directly applying these methods to higher-dimensional parameter spaces often disrupts their structural relationships. Given the rapid advancements in matrix-based PEFT methods, rather than designing a specialized strategy, we propose a generalization that extends matrix-based PEFT methods to higher-dimensional parameter spaces without compromising their structural properties. Specifically, we treat parameters as elements of a Lie group, with updates modeled as perturbations in the corresponding Lie algebra. These perturbations are mapped back to the Lie group through the exponential map, ensuring smooth, consistent updates that preserve the inherent structure of the parameter space. Extensive experiments on computer vision and natural language processing validate the effectiveness and versatility of our approach, demonstrating clear improvements over existing methods.
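To make the Lie-group idea concrete, here is a minimal sketch of one possible instance. It assumes the simplest case: weights live in the group of nonzero reals under elementwise multiplication, the perturbation in the Lie algebra is a low-rank product `B @ A` (as in LoRA), and the exponential map is an elementwise `exp`. The function names and this choice of group are illustrative assumptions, not necessarily the formulation used in the paper. A 2-D weight is used for brevity; an elementwise update applies to tensors of any shape.

```python
import math

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def lie_update(weight, b, a):
    """Return weight * exp(b @ a), computed elementwise.

    Assumption (not from the source): the group is the nonzero reals under
    elementwise multiplication, so the exponential map is an elementwise exp.
    """
    delta = matmul(b, a)  # low-rank perturbation in the Lie algebra
    return [[w * math.exp(d) for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]

W = [[0.5, -1.0], [2.0, 0.25]]  # frozen pre-trained weights (toy values)
B = [[0.1], [-0.2]]             # trainable factor, shape 2 x 1
A = [[1.0, 2.0]]                # trainable factor, shape 1 x 2

W_zero = lie_update(W, [[0.0], [0.0]], A)  # zero perturbation
W_new = lie_update(W, B, A)                # small multiplicative update
```

With a zero perturbation the update is the identity, and because `exp` is always positive, every updated weight keeps the sign of the original. This is one simple sense in which a multiplicative update can preserve structure that an additive update does not.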

Spiking Vision Transformer with Saccadic Attention

Feb 18, 2025

Abstract:The combination of Spiking Neural Networks (SNNs) and Vision Transformers (ViTs) holds potential for achieving both energy efficiency and high performance, particularly suitable for edge vision applications. However, a significant performance gap still exists between SNN-based ViTs and their ANN counterparts. Here, we first analyze why SNN-based ViTs suffer from limited performance and identify a mismatch between the vanilla self-attention mechanism and spatio-temporal spike trains. This mismatch results in degraded spatial relevance and limited temporal interactions. To address these issues, we draw inspiration from biological saccadic attention mechanisms and introduce an innovative Saccadic Spike Self-Attention (SSSA) method. Specifically, in the spatial domain, SSSA employs a novel spike distribution-based method to effectively assess the relevance between Query and Key pairs in SNN-based ViTs. Temporally, SSSA employs a saccadic interaction module that dynamically focuses on selected visual areas at each timestep and significantly enhances whole scene understanding through temporal interactions. Building on the SSSA mechanism, we develop an SNN-based Vision Transformer (SNN-ViT). Extensive experiments across various visual tasks demonstrate that SNN-ViT achieves state-of-the-art performance with linear computational complexity. The effectiveness and efficiency of the SNN-ViT highlight its potential for power-critical edge vision applications.

Mixed-Precision Graph Neural Quantization for Low Bit Large Language Models

Jan 30, 2025

Abstract:Post-Training Quantization (PTQ) is pivotal for deploying large language models (LLMs) within resource-limited settings by significantly reducing resource demands. However, existing PTQ strategies underperform at low bit levels (< 3 bits) due to the significant difference between the quantized and original weights. To enhance the quantization performance at low bit widths, we introduce a Mixed-precision Graph Neural PTQ (MG-PTQ) approach, employing a graph neural network (GNN) module to capture dependencies among weights and adaptively assign quantization bit-widths. Through the information propagation of the GNN module, our method more effectively captures dependencies among target weights, leading to a more accurate assessment of weight importance and optimized allocation of quantization strategies. Extensive experiments on the WikiText2 and C4 datasets demonstrate that our MG-PTQ method outperforms the previous state-of-the-art PTQ method, GPTQ, setting new benchmarks for quantization performance under low-bit conditions.

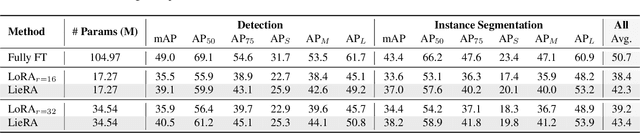

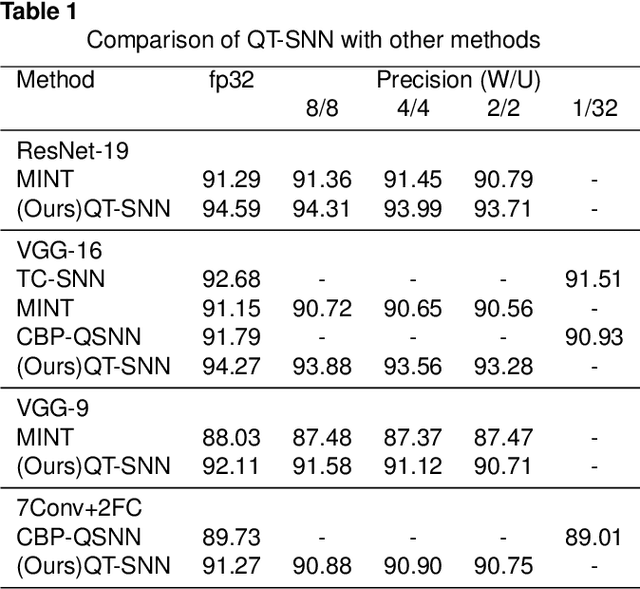

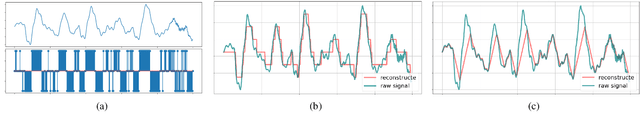

Ternary Spike-based Neuromorphic Signal Processing System

Jul 07, 2024

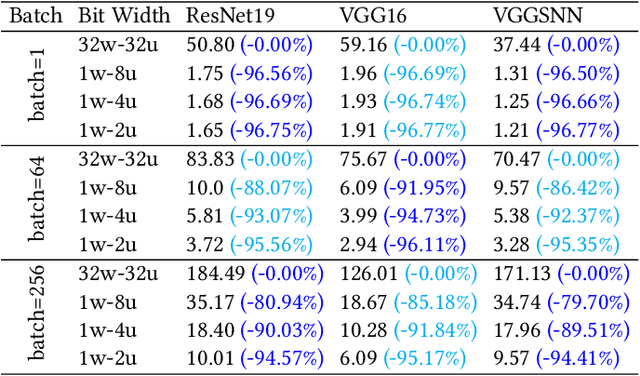

Abstract:Deep Neural Networks (DNNs) have been successfully implemented across various signal processing fields, resulting in significant enhancements in performance. However, DNNs generally require substantial computational resources, leading to significant economic costs and posing challenges for their deployment on resource-constrained edge devices. In this study, we take advantage of spiking neural networks (SNNs) and quantization technologies to develop an energy-efficient and lightweight neuromorphic signal processing system. Our system is characterized by two principal innovations: a threshold-adaptive encoding (TAE) method and a quantized ternary SNN (QT-SNN). The TAE method can efficiently encode time-varying analog signals into sparse ternary spike trains, thereby reducing energy and memory demands for signal processing. QT-SNN, compatible with ternary spike trains from the TAE method, quantizes both membrane potentials and synaptic weights to reduce memory requirements while maintaining performance. Extensive experiments are conducted on two typical signal-processing tasks: speech and electroencephalogram recognition. The results demonstrate that our neuromorphic signal processing system achieves state-of-the-art (SOTA) performance with a 94% reduced memory requirement. Furthermore, through theoretical energy consumption analysis, our system shows 7.5x energy saving compared to other SNN works. The efficiency and efficacy of the proposed system highlight its potential as a promising avenue for energy-efficient signal processing.
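The abstract does not spell out how TAE works, so the following is only a hypothetical sketch of what encoding an analog signal into a sparse ternary spike train with an adaptive threshold could look like. The delta-style scheme, the function name `ternary_encode`, and the parameters `grow`, `decay`, and `floor` are all assumptions made for illustration, not the authors' method.

```python
def ternary_encode(signal, threshold=0.1, grow=1.5, decay=0.9, floor=0.05):
    """Encode samples into spikes in {-1, 0, +1} with an adaptive threshold.

    Hypothetical scheme: a spike fires when the sample departs from the
    running reconstruction by more than the threshold; the threshold grows
    after a spike and decays (down to `floor`) when no spike fires.
    """
    spikes = []
    recon = 0.0  # running reconstruction of the signal from past spikes
    for x in signal:
        diff = x - recon
        if diff > threshold:
            spike = 1
        elif diff < -threshold:
            spike = -1
        else:
            spike = 0
        recon += spike * threshold  # step toward the signal by the current threshold
        threshold = threshold * grow if spike else max(floor, threshold * decay)
        spikes.append(spike)
    return spikes

spikes = ternary_encode([0.0, 0.0, 0.3, 0.3, 0.0, -0.4, -0.4])
flat = ternary_encode([0.0] * 5)  # a constant signal produces no spikes
```

In this sketch, flat stretches of the signal produce zeros, which is where the sparsity comes from, while each nonzero spike carries a sign and therefore needs only a ternary symbol.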

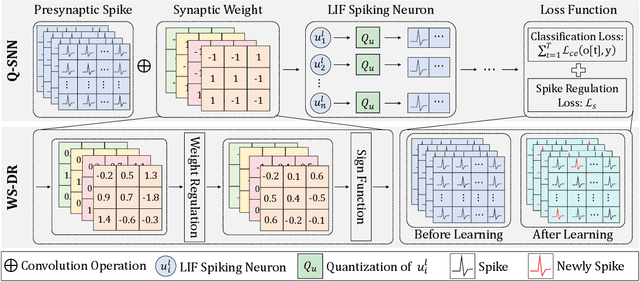

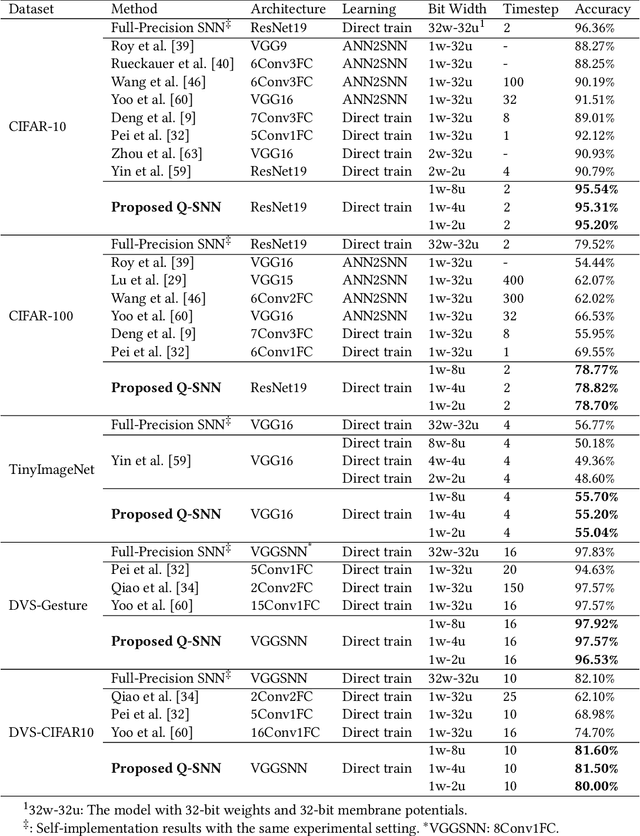

Q-SNNs: Quantized Spiking Neural Networks

Jun 19, 2024

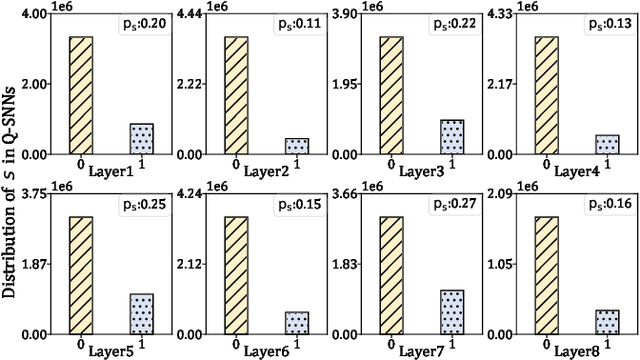

Abstract:Brain-inspired Spiking Neural Networks (SNNs) leverage sparse spikes to represent information and process them in an asynchronous event-driven manner, offering an energy-efficient paradigm for the next generation of machine intelligence. However, the current focus within the SNN community prioritizes accuracy optimization through the development of large-scale models, limiting their viability in resource-constrained and low-power edge devices. To address this challenge, we introduce a lightweight and hardware-friendly Quantized SNN (Q-SNN) that applies quantization to both synaptic weights and membrane potentials. By significantly compressing these two key elements, the proposed Q-SNNs substantially reduce both memory usage and computational complexity. Moreover, to prevent the performance degradation caused by this compression, we present a new Weight-Spike Dual Regulation (WS-DR) method inspired by information entropy theory. Experimental evaluations on various datasets, including static and neuromorphic, demonstrate that our Q-SNNs outperform existing methods in terms of both model size and accuracy. These state-of-the-art results in efficiency and efficacy suggest that the proposed method can significantly improve edge intelligent computing.
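As a rough illustration of quantizing both synaptic weights and membrane potentials, the sketch below applies a uniform symmetric quantizer inside a single leaky integrate-and-fire update. The quantizer, the neuron model, the hard reset, and all parameter names are assumptions chosen for brevity; the WS-DR method from the abstract is not represented here.

```python
def quantize(value, bits, max_abs):
    """Round value onto a signed uniform grid with 2**(bits - 1) - 1 positive levels."""
    levels = 2 ** (bits - 1) - 1
    clipped = max(-max_abs, min(max_abs, value))
    step = max_abs / levels
    return round(clipped / step) * step

def lif_step(potential, inputs, weights, threshold=1.0, leak=0.5, bits=4):
    """One leaky integrate-and-fire step with quantized weights and potential.

    Hypothetical neuron: returns (new_potential, spike) and hard-resets
    the potential to 0.0 after a spike.
    """
    q_weights = [quantize(w, bits, 1.0) for w in weights]
    current = sum(w * s for w, s in zip(q_weights, inputs))
    potential = quantize(leak * potential + current, bits, 2.0 * threshold)
    if potential >= threshold:
        return 0.0, 1
    return potential, 0

fired = lif_step(0.0, [1, 1], [0.8, 0.8], bits=2)  # strong input
quiet = lif_step(0.0, [0, 0], [0.8, 0.8])          # no input spikes
```

With `bits=2` the grid collapses to three values (`-max_abs`, `0`, `+max_abs`), which shows how aggressively a low bit-width compresses each stored number.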

Beyond Single-Event Extraction: Towards Efficient Document-Level Multi-Event Argument Extraction

May 03, 2024

Abstract:Recent mainstream event argument extraction methods process each event in isolation, resulting in inefficient inference and ignoring the correlations among multiple events. To address these limitations, here we propose a multiple-event argument extraction model DEEIA (Dependency-guided Encoding and Event-specific Information Aggregation), capable of extracting arguments from all events within a document simultaneously. The proposed DEEIA model employs a multi-event prompt mechanism, comprising DE and EIA modules. The DE module is designed to improve the correlation between prompts and their corresponding event contexts, whereas the EIA module provides event-specific information to improve contextual understanding. Extensive experiments show that our method achieves new state-of-the-art performance on four public datasets (RAMS, WikiEvents, MLEE, and ACE05), while significantly reducing inference time compared to the baselines. Further analyses demonstrate the effectiveness of the proposed modules.
