Xuning Yang

Inference-Time Policy Steering through Human Interactions

Nov 25, 2024

Abstract: Generative policies trained with human demonstrations can autonomously accomplish multimodal, long-horizon tasks. However, during inference, humans are often removed from the policy execution loop, limiting the ability to guide a pre-trained policy towards a specific sub-goal or trajectory shape among multiple predictions. Naive human intervention may inadvertently exacerbate distribution shift, leading to constraint violations or execution failures. To better align policy output with human intent without inducing out-of-distribution errors, we propose an Inference-Time Policy Steering (ITPS) framework that leverages human interactions to bias the generative sampling process, rather than fine-tuning the policy on interaction data. We evaluate ITPS across three simulated and real-world benchmarks, testing three forms of human interaction and associated alignment distance metrics. Among six sampling strategies, our proposed stochastic sampling with diffusion policy achieves the best trade-off between alignment and distribution shift. Videos are available at https://yanweiw.github.io/itps/.

Aim My Robot: Precision Local Navigation to Any Object

Nov 22, 2024

Abstract: Existing navigation systems mostly consider a run a "success" when the robot comes within a 1 m radius of the goal. This precision is insufficient for emerging applications where the robot needs to be positioned precisely relative to an object for downstream tasks, such as docking, inspection, and manipulation. To this end, we design and implement Aim-My-Robot (AMR), a local navigation system that enables a robot to reach any object in its vicinity at the desired relative pose, with centimeter-level precision. AMR achieves high precision and robustness by leveraging multi-modal perception and precise action prediction, and is trained on large-scale photorealistic data generated in simulation. AMR shows strong sim2real transfer and can adapt to different robot kinematics and unseen objects with little to no fine-tuning.

Fast Explicit-Input Assistance for Teleoperation in Clutter

Feb 04, 2024

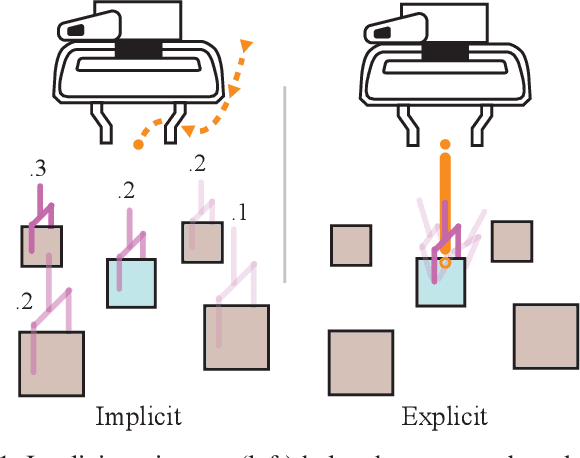

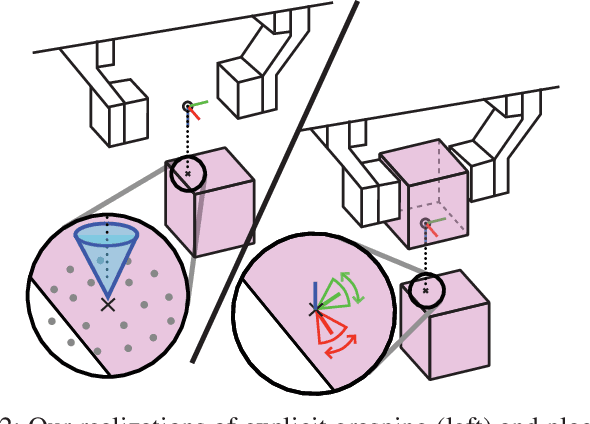

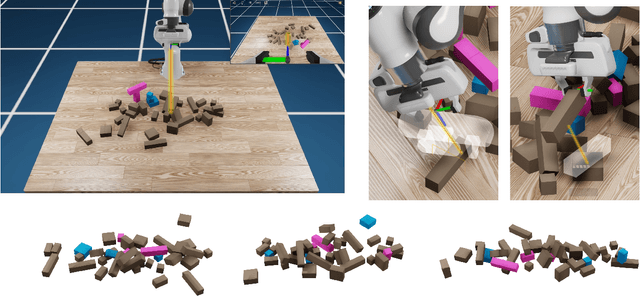

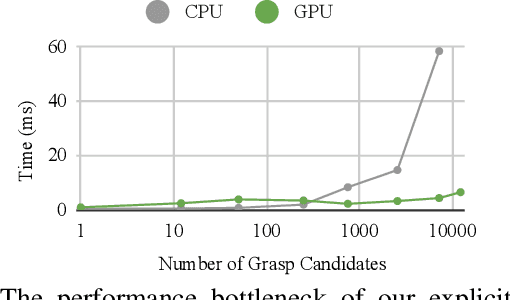

Abstract: The performance of prediction-based assistance for robot teleoperation degrades in unseen or goal-rich environments due to incorrect or quickly-changing intent inferences. Poor predictions can confuse operators or cause them to change their control input to implicitly signal their goal, resulting in unnatural movement. We present a new assistance algorithm and interface for robotic manipulation where an operator can explicitly communicate a manipulation goal by pointing the end-effector. Rapid optimization and parallel collision checking in a local region around the pointing target enable direct, interactive control over grasp and place pose candidates. We compare the explicit pointing interface to an implicit inference-based assistance scheme in a within-subjects user study (N=20) where participants teleoperate a simulated robot to complete a multi-step singulation and stacking task in cluttered environments. We find that operators prefer the explicit interface, which improved completion time, pick and place success rates, and NASA TLX scores. Our code is available at https://github.com/NVlabs/fast-explicit-teleop

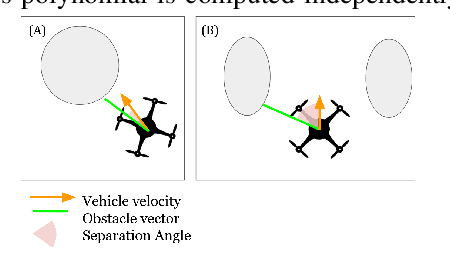

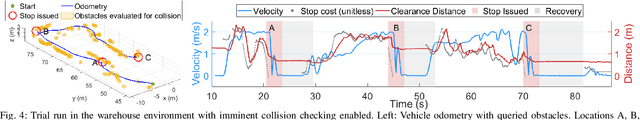

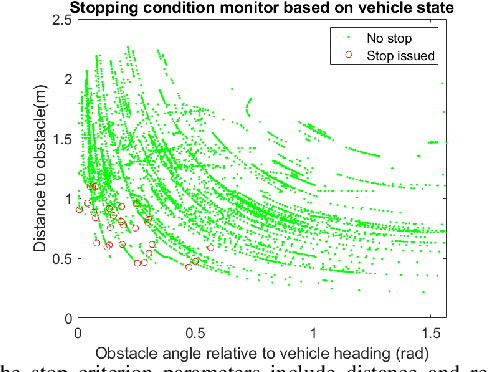

An imminent collision monitoring system with safe stopping interventions for autonomous aerial flights

Jun 17, 2022

Abstract: Collision avoidance requires tradeoffs in planning time horizons. Depending on the planner, safety cannot always be guaranteed in uncertain environments given map updates. To mitigate situations where the planner leads the vehicle into a state of collision or the vehicle reaches a point where no trajectories are feasible, we propose a continuous collision checking algorithm. The imminent collision checking system continuously monitors vehicle safety, and plans a safe trajectory that leads the vehicle to a stop within the observed map. We test our proposed pipeline alongside teleoperated navigation in real-life experiments, and in simulated random-forest and warehouse environments where we show that with our method, we are able to mitigate collisions with a success rate of at least 90%.

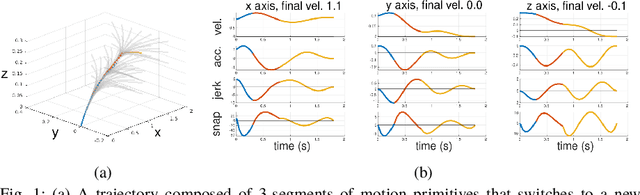

Fast and Agile Vision-Based Flight with Teleoperation and Collision Avoidance on a Multirotor

May 31, 2019

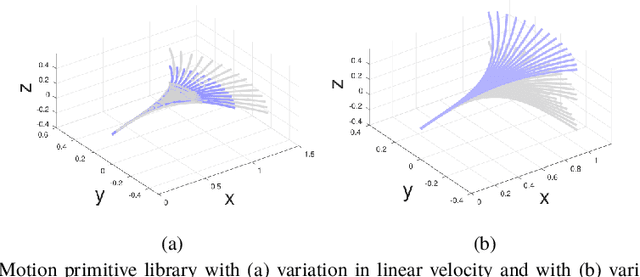

Abstract: We present a multirotor architecture capable of aggressive autonomous flight and collision-free teleoperation in unstructured, GPS-denied environments. The proposed system enables aggressive and safe autonomous flight around clutter by integrating recent advancements in visual-inertial state estimation and teleoperation. Our teleoperation framework maps user inputs onto smooth and dynamically feasible motion primitives. Collision-free trajectories are ensured by querying a locally consistent map that is incrementally constructed from forward-facing depth observations. Our system enables a non-expert operator to safely navigate a multirotor around obstacles at speeds of 10 m/s. We achieve autonomous flights at speeds exceeding 12 m/s and accelerations exceeding 12 m/s^2 in a series of outdoor field experiments that validate our approach.
