Curtis Boirum

Carnegie Mellon University

Autonomous Cave Surveying with an Aerial Robot

Mar 31, 2020

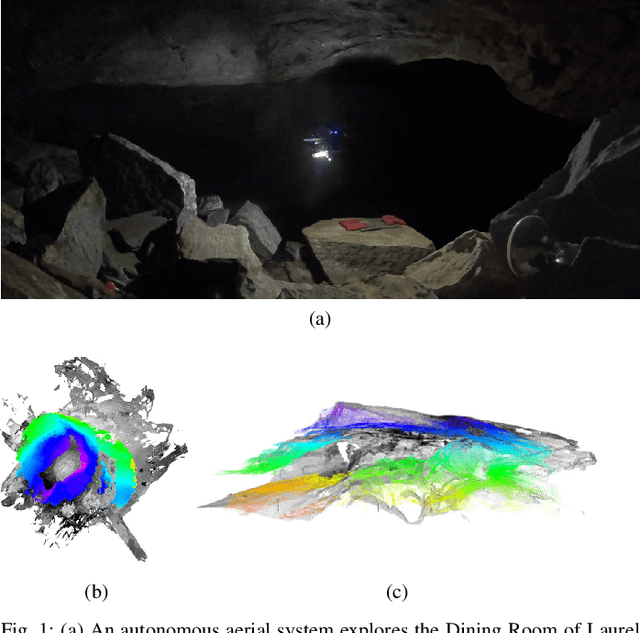

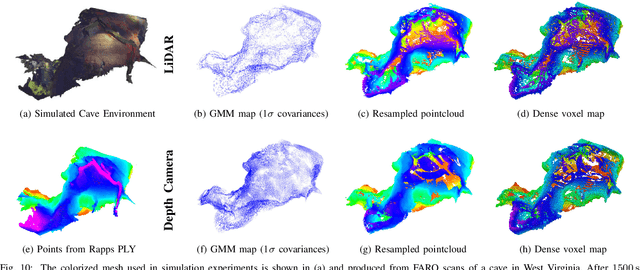

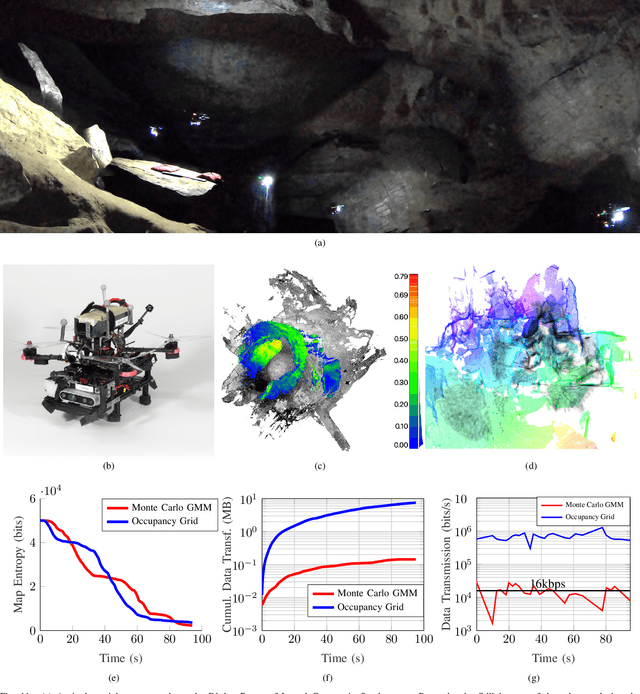

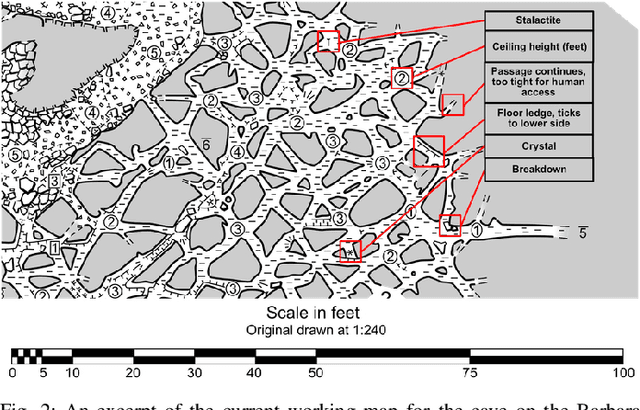

Abstract: This paper presents a method for cave surveying in complete darkness with an autonomous aerial vehicle equipped with a depth camera for mapping, a downward-facing camera for state estimation, and forward- and downward-facing lights. Traditional methods of cave surveying are labor-intensive and dangerous: surveyors risk hypothermia when collecting data over extended periods in cold, damp environments, risk injury when operating in darkness over rocky or muddy terrain, and face the potential structural instability of the subterranean environment. Robots could be leveraged to reduce risk to human surveyors, but undeveloped caves are challenging operating environments because their communications infrastructure is low-bandwidth or nonexistent. The potential for communications dropouts motivates autonomy in this context. Because the topography of the environment may not be known a priori, it is advantageous for human operators to receive real-time feedback of high-resolution map data that encodes both large and small passageways. Given this capability, directed exploration, in which human operators transmit guidance to the autonomous robot to prioritize certain leads over others, becomes feasible. Few state-of-the-art, high-resolution perceptual modeling techniques quantify the time required to transfer the model across low-bandwidth, high-reliability communications channels such as radio. To bridge this gap, this work compactly represents sensor observations as Gaussian mixture models and maintains a local occupancy grid map for a motion planner that greedily maximizes an information-theoretic objective function. The methodology is extensively evaluated in long-duration simulations on an embedded PC and deployed on an aerial system in Laurel Caverns, a commercially owned and operated cave in southwestern Pennsylvania, USA.
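To make the bandwidth argument concrete, the sketch below compares the payload of a raw depth point cloud with that of a Gaussian mixture summary and converts each to an ideal transfer time. It is a back-of-the-envelope illustration, not the authors' encoding. It assumes float32 storage and 3D components with full covariance (one weight, three mean entries, and the six unique entries of the symmetric covariance, so 10 floats per component). The function names, frame size, component count, and link rate are all hypothetical.

```python
FLOAT32_BYTES = 4


def gmm_bytes(n_components: int, dim: int = 3) -> int:
    """Payload of a GMM: weight, mean, and the unique entries of a symmetric covariance."""
    floats_per_component = 1 + dim + dim * (dim + 1) // 2
    return n_components * floats_per_component * FLOAT32_BYTES


def point_cloud_bytes(n_points: int, dim: int = 3) -> int:
    """Payload of a raw point cloud stored as float32 coordinates."""
    return n_points * dim * FLOAT32_BYTES


def transfer_seconds(n_bytes: int, bandwidth_bps: float) -> float:
    """Ideal transfer time, ignoring protocol overhead, dropouts, and retransmissions."""
    return n_bytes * 8 / bandwidth_bps


if __name__ == "__main__":
    cloud = point_cloud_bytes(640 * 480)  # hypothetical dense depth frame
    mixture = gmm_bytes(100)              # hypothetical number of mixture components
    link = 100_000                        # hypothetical radio link rate, bits per second
    print(f"point cloud: {cloud} B, {transfer_seconds(cloud, link):.1f} s")
    print(f"GMM:         {mixture} B, {transfer_seconds(mixture, link):.2f} s")
```

With these hypothetical numbers, one 640×480 frame as a point cloud is 3,686,400 bytes (about 295 s at 100 kbit/s), while a 100-component mixture is 4,000 bytes (0.32 s). The real ratio depends on how many components are needed to model a given scene at the desired fidelity.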

Fast and Agile Vision-Based Flight with Teleoperation and Collision Avoidance on a Multirotor

May 31, 2019

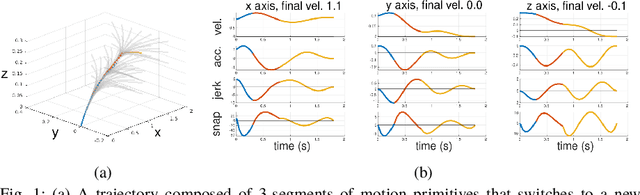

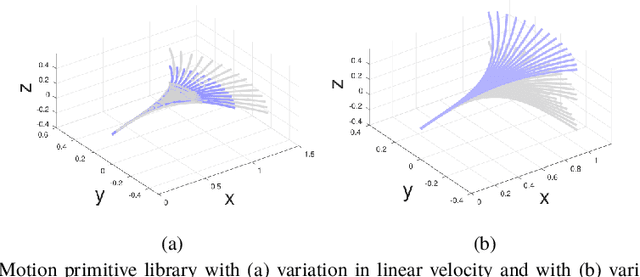

Abstract: We present a multirotor architecture capable of aggressive autonomous flight and collision-free teleoperation in unstructured, GPS-denied environments. The proposed system enables aggressive yet safe autonomous flight around clutter by integrating recent advances in visual-inertial state estimation and teleoperation. Our teleoperation framework maps user inputs onto smooth and dynamically feasible motion primitives. Collision-free trajectories are ensured by querying a locally consistent map that is incrementally constructed from forward-facing depth observations. Our system enables a non-expert operator to safely navigate a multirotor around obstacles at speeds of 10 m/s. In a series of outdoor field experiments that validate our approach, we achieve autonomous flights at speeds exceeding 12 m/s and accelerations exceeding 12 m/s².
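The idea of mapping an operator command onto a motion primitive and rejecting it if it collides can be illustrated with a deliberately simplified sketch. It assumes a velocity-tracking primitive: starting from the current position and velocity, the vehicle approaches the commanded velocity with its acceleration norm capped at `a_max`, the trajectory is forward-simulated at a fixed time step, and every sample is queried against a local map. The names `primitive` and `is_collision_free`, the acceleration-only limit, and the `occupied` callback standing in for the locally consistent map are all hypothetical. The paper's actual primitive family and map query may differ, and a real system would also bound jerk and account for the vehicle's radius.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


def primitive(p0: Vec3, v0: Vec3, v_cmd: Vec3, a_max: float,
              horizon: float, dt: float) -> List[Vec3]:
    """Forward-simulate a hypothetical velocity-tracking primitive.

    Each step moves the velocity toward v_cmd with the change capped at
    a_max * dt (acceleration norm <= a_max), then integrates position.
    """
    p = list(p0)
    v = list(v0)
    samples: List[Vec3] = [(p[0], p[1], p[2])]
    for _ in range(int(round(horizon / dt))):
        dv = [c - vi for c, vi in zip(v_cmd, v)]
        norm = math.sqrt(sum(d * d for d in dv))
        if norm > 0.0:
            scale = min(a_max * dt, norm) / norm  # clip the velocity change
            dv = [d * scale for d in dv]
        v = [vi + d for vi, d in zip(v, dv)]
        p = [pi + vi * dt for pi, vi in zip(p, v)]
        samples.append((p[0], p[1], p[2]))
    return samples


def is_collision_free(samples: List[Vec3], occupied: Callable[[Vec3], bool]) -> bool:
    """Reject the primitive if any sampled position lies in occupied space."""
    return not any(occupied(s) for s in samples)


def wall(q: Vec3) -> bool:
    """Hypothetical local map: everything beyond x = 5 m is occupied."""
    return q[0] > 5.0


if __name__ == "__main__":
    start, rest, cmd = (0.0, 0.0, 0.0), (0.0, 0.0, 0.0), (10.0, 0.0, 0.0)
    long_traj = primitive(start, rest, cmd, a_max=12.0, horizon=1.0, dt=0.1)
    short_traj = primitive(start, rest, cmd, a_max=12.0, horizon=0.5, dt=0.1)
    print("1.0 s horizon safe:", is_collision_free(long_traj, wall))
    print("0.5 s horizon safe:", is_collision_free(short_traj, wall))
```

With the hypothetical wall at x = 5 m, a 10 m/s forward command with a 12 m/s² cap is rejected over a 1 s horizon (the vehicle would reach about x = 6.3 m) but accepted over 0.5 s (about x = 1.8 m). A teleoperation layer built along these lines could then shorten or stop the primitive rather than execute it.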
