Xinhua Wang

RoboMIND 2.0: A Multimodal, Bimanual Mobile Manipulation Dataset for Generalizable Embodied Intelligence

Dec 31, 2025

Abstract:While data-driven imitation learning has revolutionized robotic manipulation, current approaches remain constrained by the scarcity of large-scale, diverse real-world demonstrations. Consequently, the ability of existing models to generalize across long-horizon bimanual tasks and mobile manipulation in unstructured environments remains limited. To bridge this gap, we present RoboMIND 2.0, a comprehensive real-world dataset comprising over 310K dual-arm manipulation trajectories collected across six distinct robot embodiments and 739 complex tasks. Crucially, to support research in contact-rich and spatially extended tasks, the dataset incorporates 12K tactile-enhanced episodes and 20K mobile manipulation trajectories. Complementing this physical data, we construct high-fidelity digital twins of our real-world environments, releasing an additional 20K-trajectory simulated dataset to facilitate robust sim-to-real transfer. To fully exploit the potential of RoboMIND 2.0, we propose the MIND-2 system, a hierarchical dual-system framework optimized via offline reinforcement learning. MIND-2 integrates a high-level semantic planner (MIND-2-VLM), which decomposes abstract natural language instructions into grounded subgoals, with a low-level Vision-Language-Action executor (MIND-2-VLA), which generates precise, proprioception-aware motor actions.

Larger Hausdorff Dimension in Scanning Pattern Facilitates Mamba-Based Methods in Low-Light Image Enhancement

Oct 29, 2025

Abstract:We propose an innovative enhancement to the Mamba framework by increasing the Hausdorff dimension of its scanning pattern through a novel Hilbert Selective Scan mechanism. This mechanism explores the feature space more effectively, capturing intricate fine-scale details and improving overall coverage. As a result, it mitigates information inconsistencies while refining spatial locality to better capture subtle local interactions without sacrificing the model's ability to handle long-range dependencies. Extensive experiments on publicly available benchmarks demonstrate that our approach significantly improves both the quantitative metrics and qualitative visual fidelity of existing Mamba-based low-light image enhancement methods, all while reducing computational resource consumption and shortening inference time. We believe that this refined strategy not only advances the state-of-the-art in low-light image enhancement but also holds promise for broader applications in fields that leverage Mamba-based techniques.
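The abstract does not spell out how the Hilbert Selective Scan is implemented, so the following is only a minimal sketch of the underlying idea: visiting the cells of a square feature grid in Hilbert-curve order instead of row-by-row raster order. The function names (`hilbert_index_to_xy`, `hilbert_scan_order`, `max_step`) are hypothetical, and the grid side is assumed to be a power of two. The sketch uses the standard index-to-coordinate conversion for the Hilbert curve and compares the largest jump between consecutive cells under each ordering.

```python
def _rotate(s, x, y, rx, ry):
    """Rotate/flip a quadrant of side s so that sub-curves connect end to end."""
    if ry == 0:
        if rx == 1:
            x = s - 1 - x
            y = s - 1 - y
        x, y = y, x
    return x, y


def hilbert_index_to_xy(n, d):
    """Map position d along the Hilbert curve to (x, y) on an n x n grid.

    Assumes n is a power of two (an assumption of this sketch).
    """
    x = y = 0
    t = d
    s = 1
    while s < n:
        rx = 1 & (t // 2)
        ry = 1 & (t ^ rx)
        x, y = _rotate(s, x, y, rx, ry)
        x += s * rx
        y += s * ry
        t //= 4
        s *= 2
    return x, y


def hilbert_scan_order(n):
    """Return all n*n grid cells in the order a Hilbert-curve scan visits them."""
    return [hilbert_index_to_xy(n, d) for d in range(n * n)]


def max_step(order):
    """Largest Manhattan distance between consecutive cells in a scan order."""
    return max(
        abs(x1 - x0) + abs(y1 - y0)
        for (x0, y0), (x1, y1) in zip(order, order[1:])
    )


# Compare against a plain row-by-row (raster) scan on a 4 x 4 grid.
hilbert = hilbert_scan_order(4)
raster = [(x, y) for y in range(4) for x in range(4)]
hilbert_max_step = max_step(hilbert)
raster_max_step = max_step(raster)
```

In the Hilbert ordering every consecutive pair of cells is spatially adjacent, whereas the raster scan jumps across the grid at the end of each row. This is one way to read the abstract's claim about refined spatial locality; how the paper integrates the ordering into Mamba's selective scan is not shown here.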

RoboMIND: Benchmark on Multi-embodiment Intelligence Normative Data for Robot Manipulation

Dec 18, 2024

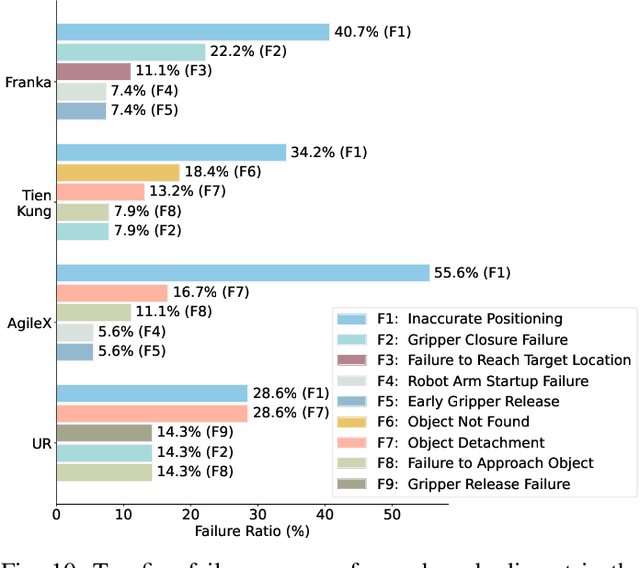

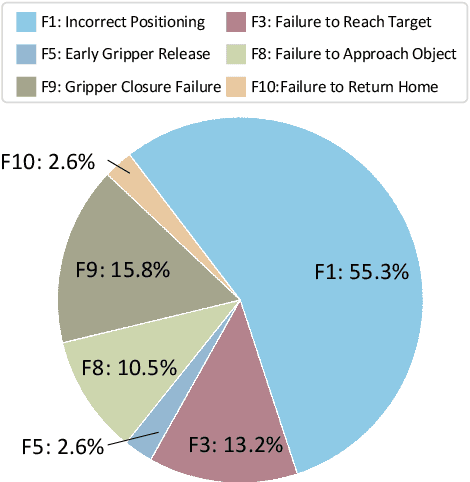

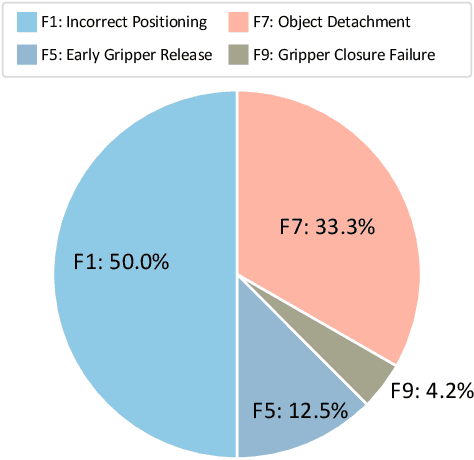

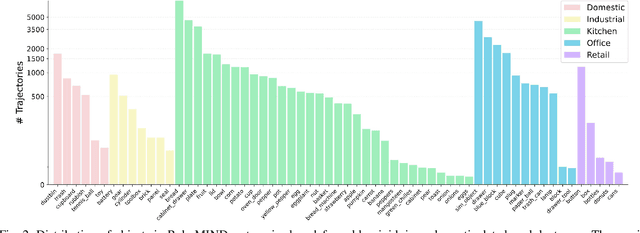

Abstract:Developing robust and general-purpose robotic manipulation policies is a key goal in the field of robotics. To achieve effective generalization, it is essential to construct comprehensive datasets that encompass a large number of demonstration trajectories and diverse tasks. Unlike vision or language data that can be collected from the Internet, robotic datasets require detailed observations and manipulation actions, necessitating significant investment in hardware-software infrastructure and human labor. While existing works have focused on assembling various individual robot datasets, there remains a lack of a unified data collection standard and insufficient diversity in tasks, scenarios, and robot types. In this paper, we introduce RoboMIND (Multi-embodiment Intelligence Normative Data for Robot manipulation), featuring 55k real-world demonstration trajectories across 279 diverse tasks involving 61 different object classes. RoboMIND is collected through human teleoperation and encompasses comprehensive robotic-related information, including multi-view RGB-D images, proprioceptive robot state information, end effector details, and linguistic task descriptions. To ensure dataset consistency and reliability during policy learning, RoboMIND is built on a unified data collection platform and standardized protocol, covering four distinct robotic embodiments. We provide a thorough quantitative and qualitative analysis of RoboMIND across multiple dimensions, offering detailed insights into the diversity of our datasets. In our experiments, we conduct extensive real-world testing with four state-of-the-art imitation learning methods, demonstrating that training with RoboMIND data results in a high manipulation success rate and strong generalization. Our project is at https://x-humanoid-robomind.github.io/.

Unbiased and Robust: External Attention-enhanced Graph Contrastive Learning for Cross-domain Sequential Recommendation

Oct 17, 2023

Abstract:Cross-domain sequential recommenders (CSRs) are gaining considerable research attention as they can capture user sequential preference by leveraging side information from multiple domains. However, these works typically follow an ideal setup, i.e., different domains obey similar data distribution, which ignores the bias brought by asymmetric interaction densities (a.k.a. the inter-domain density bias). Besides, the frequently adopted mechanism (e.g., the self-attention network) in sequence encoder only focuses on the interactions within a local view, which overlooks the global correlations between different training batches. To this end, we propose an External Attention-enhanced Graph Contrastive Learning framework, namely EA-GCL. Specifically, to remove the impact of the inter-domain density bias, an auxiliary Self-Supervised Learning (SSL) task is attached to the traditional graph encoder under a multi-task learning manner. To robustly capture users' behavioral patterns, we develop an external attention-based sequence encoder that contains an MLP-based memory-sharing structure. Unlike the self-attention mechanism, such a structure can effectively alleviate the bias interference from the batch-based training scheme. Extensive experiments on two real-world datasets demonstrate that EA-GCL outperforms several state-of-the-art baselines on CSR tasks. The source codes and relevant datasets are available at https://github.com/HoupingY/EA-GCL.

Automated Prompting for Non-overlapping Cross-domain Sequential Recommendation

Apr 09, 2023

Abstract:Cross-domain Recommendation (CR) has been extensively studied in recent years to alleviate the data sparsity issue in recommender systems by utilizing different domain information. In this work, we focus on the more general Non-overlapping Cross-domain Sequential Recommendation (NCSR) scenario. NCSR is challenging because there are no overlapped entities (e.g., users and items) between domains, and there is only users' implicit feedback and no content information. Previous CR methods cannot solve NCSR well, since (1) they either need extra content to align domains or need explicit domain alignment constraints to reduce the domain discrepancy from domain-invariant features, (2) they pay more attention to users' explicit feedback (i.e., users' rating data) and cannot well capture their sequential interaction patterns, (3) they usually do a single-target cross-domain recommendation task and seldom investigate the dual-target ones. Considering the above challenges, we propose Prompt Learning-based Cross-domain Recommender (PLCR), an automated prompting-based recommendation framework for the NCSR task. Specifically, to address the challenge (1), PLCR resorts to learning domain-invariant and domain-specific representations via its prompt learning component, where the domain alignment constraint is discarded. For challenges (2) and (3), PLCR introduces a pre-trained sequence encoder to learn users' sequential interaction patterns, and conducts a dual-learning target with a separation constraint to enhance recommendations in both domains. Our empirical study on two sub-collections of Amazon demonstrates the advance of PLCR compared with some related SOTA methods.

Towards Lightweight Cross-domain Sequential Recommendation via External Attention-enhanced Graph Convolution Network

Feb 07, 2023

Abstract:Cross-domain Sequential Recommendation (CSR) is an emerging yet challenging task that depicts the evolution of behavior patterns for overlapped users by modeling their interactions from multiple domains. Existing studies on CSR mainly focus on using composite or in-depth structures that achieve significant improvement in accuracy but bring a huge burden to the model training. Moreover, to learn the user-specific sequence representations, existing works usually adopt the global relevance weighting strategy (e.g., self-attention mechanism), which has quadratic computational complexity. In this work, we introduce a lightweight external attention-enhanced GCN-based framework, namely LEA-GCN, to solve the above challenges. Specifically, by only keeping the neighborhood aggregation component and using the Single-Layer Aggregating Protocol (SLAP), our lightweight GCN encoder captures the collaborative filtering signals of the items from both domains more efficiently. To further lighten the framework structure and aggregate the user-specific sequential pattern, we devise a novel dual-channel External Attention (EA) component, which calculates the correlation among all items via a lightweight linear structure. Extensive experiments are conducted on two real-world datasets, demonstrating that LEA-GCN requires a smaller volume and less training time without affecting the accuracy compared with several state-of-the-art methods.
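The abstract does not describe the dual-channel EA component in detail, so the sketch below shows only the generic single-channel external attention idea that the name refers to: item features attend to a small shared memory instead of to each other, which makes the cost linear in sequence length. The function name, the memory size, and the double-normalization step (softmax over items, then L1 normalization over memory slots) are assumptions taken from the general external attention literature, not from LEA-GCN itself.

```python
import numpy as np


def external_attention(features, mem_key, mem_value):
    """Generic external attention (illustrative, not the LEA-GCN component).

    features:  (n, d) item representations for one sequence
    mem_key:   (s, d) shared external key memory (learned in a real model)
    mem_value: (s, d) shared external value memory (learned in a real model)
    Cost is O(n * s * d): linear in the sequence length n.
    """
    scores = features @ mem_key.T                         # (n, s)
    scores = scores - scores.max(axis=0, keepdims=True)   # numerical stability
    attn = np.exp(scores)
    attn = attn / attn.sum(axis=0, keepdims=True)         # softmax over items
    attn = attn / (attn.sum(axis=1, keepdims=True) + 1e-9)  # L1 norm over slots
    return attn @ mem_value                               # (n, d)


# Random stand-ins for learned parameters and inputs (hypothetical sizes).
rng = np.random.default_rng(0)
seq_features = rng.normal(size=(5, 8))   # 5 items, 8-dim embeddings
memory_key = rng.normal(size=(4, 8))     # 4 memory slots
memory_value = rng.normal(size=(4, 8))
attended = external_attention(seq_features, memory_key, memory_value)
```

Because the two memories are shared across all sequences rather than computed per sequence, no n x n attention matrix is ever formed, in contrast to self-attention.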

Reinforcement Learning-enhanced Shared-account Cross-domain Sequential Recommendation

Jun 16, 2022

Abstract:Shared-account Cross-domain Sequential Recommendation (SCSR) is an emerging yet challenging task that simultaneously considers the shared-account and cross-domain characteristics in the sequential recommendation. Existing works on SCSR are mainly based on Recurrent Neural Network (RNN) and Graph Neural Network (GNN) but they ignore the fact that although multiple users share a single account, it is mainly occupied by one user at a time. This observation motivates us to learn a more accurate user-specific account representation by attentively focusing on its recent behaviors. Furthermore, though existing works endow lower weights to irrelevant interactions, they may still dilute the domain information and impede the cross-domain recommendation. To address the above issues, we propose a reinforcement learning-based solution, namely RL-ISN, which consists of a basic cross-domain recommender and a reinforcement learning-based domain filter. Specifically, to model the account representation in the shared-account scenario, the basic recommender first clusters users' mixed behaviors as latent users, and then leverages an attention model over them to conduct user identification. To reduce the impact of irrelevant domain information, we formulate the domain filter as a hierarchical reinforcement learning task, where a high-level task is utilized to decide whether to revise the whole transferred sequence or not, and if it does, a low-level task is further performed to determine whether to remove each interaction within it or not. To evaluate the performance of our solution, we conduct extensive experiments on two real-world datasets, and the experimental results demonstrate the superiority of our RL-ISN method compared with the state-of-the-art recommendation methods.

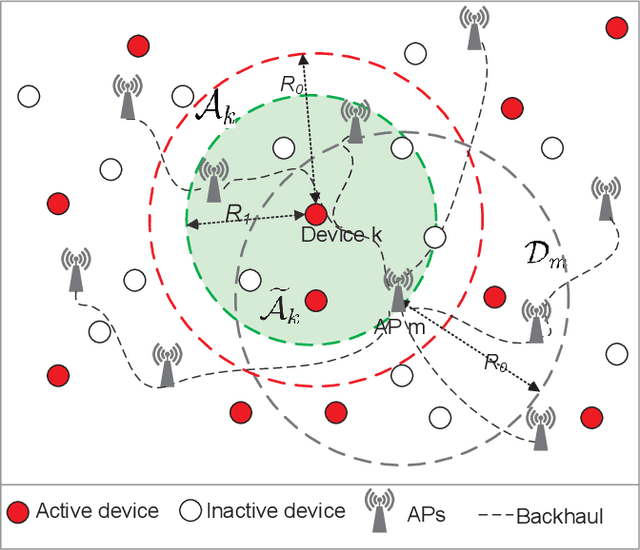

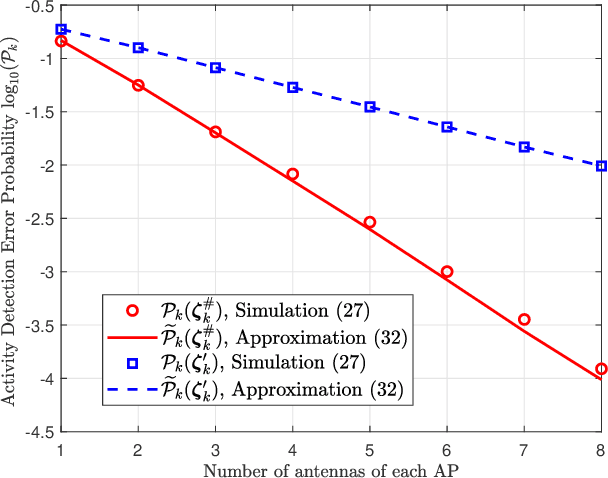

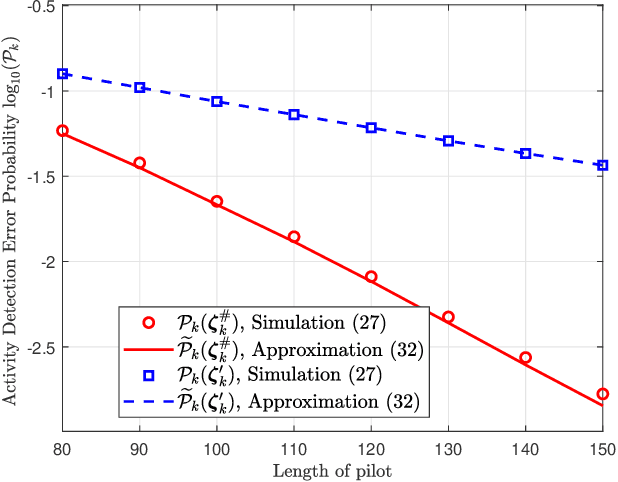

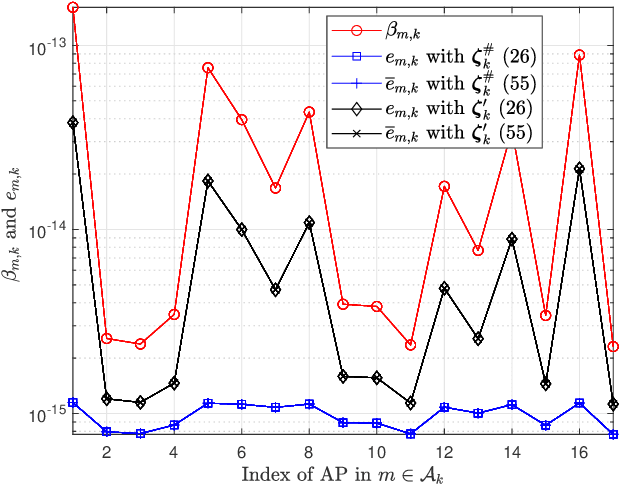

Two-Stage Channel Estimation Approach for Cell-Free IoT With Massive Random Access

Sep 28, 2021

Abstract:We investigate the activity detection and channel estimation issues for cell-free Internet of Things (IoT) networks with massive random access. In each time slot, only partial devices are active and communicate with neighboring access points (APs) using non-orthogonal random pilot sequences. Different from the centralized processing in cellular networks, the activity detection and channel estimation in cell-free IoT is more challenging due to the distributed and user-centric architecture. We propose a two-stage approach to detect the random activities of devices and estimate their channel states. In the first stage, the activity of each device is jointly detected by its adjacent APs based on the vector approximate message passing (Vector AMP) algorithm. In the second stage, each AP re-estimates the channel using the linear minimum mean square error (LMMSE) method based on the detected activities to improve the channel estimation accuracy. We derive closed-form expressions for the activity detection error probability and the mean-squared channel estimation errors for a typical device. Finally, we analyze the performance of the entire cell-free IoT network in terms of coverage probability. Simulation results validate the derived closed-form expressions and show that the cell-free IoT significantly outperforms the collocated massive MIMO and small-cell schemes in terms of coverage probability.

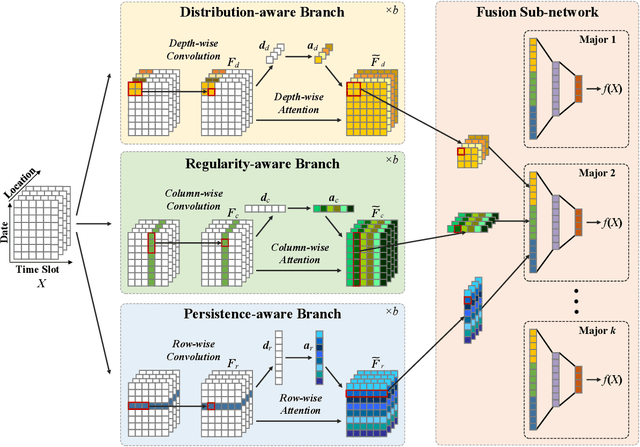

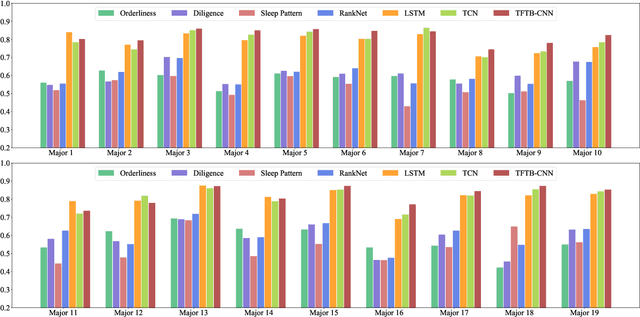

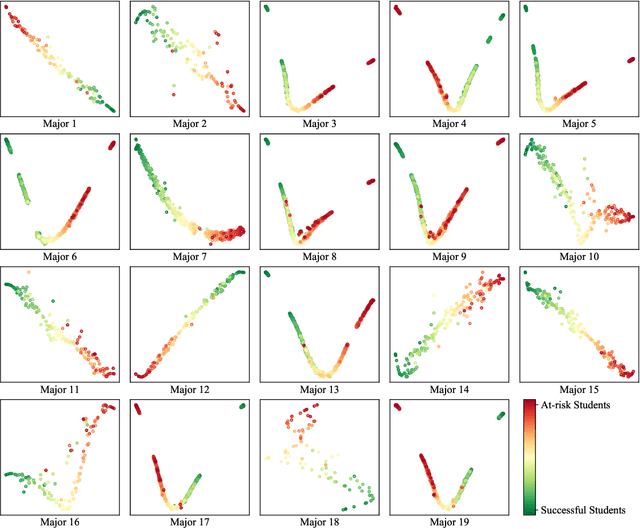

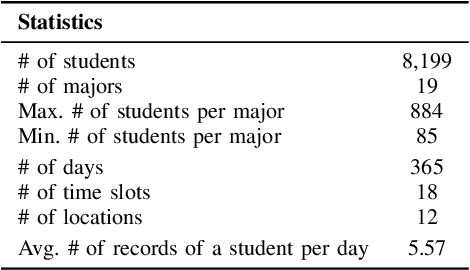

Tri-Branch Convolutional Neural Networks for Top-$k$ Focused Academic Performance Prediction

Jul 22, 2021

Abstract:Academic performance prediction aims to leverage student-related information to predict their future academic outcomes, which is beneficial to numerous educational applications, such as personalized teaching and academic early warning. In this paper, we address the problem by analyzing students' daily behavior trajectories, which can be comprehensively tracked with campus smartcard records. Different from previous studies, we propose a novel Tri-Branch CNN architecture, which is equipped with row-wise, column-wise, and depth-wise convolution and attention operations, to capture the characteristics of persistence, regularity, and temporal distribution of student behavior in an end-to-end manner, respectively. Also, we cast academic performance prediction as a top-$k$ ranking problem, and introduce a top-$k$ focused loss to ensure the accuracy of identifying academically at-risk students. Extensive experiments were carried out on a large-scale real-world dataset, and we show that our approach substantially outperforms recently proposed methods for academic performance prediction. For the sake of reproducibility, our codes have been released at https://github.com/ZongJ1111/Academic-Performance-Prediction.

Topic representation: finding more representative words in topic models

Oct 23, 2018

Abstract:The top word list, i.e., the top-M words with the highest marginal probability in a given topic, is the standard topic representation in topic models. Most recent automatic topic labeling algorithms and popular topic quality metrics are based on it. However, we find empirically that the words in this type of top word list are not always representative. The objective of this paper is to find more representative top word lists for topics. To achieve this, we rerank the words in a given topic by further considering the marginal probability of words over every other topic. The reranked list of top-M words serves as a novel topic representation for topic models. We investigate three reranking methodologies, using (1) standard deviation weight, (2) standard deviation weight with topic size, and (3) chi-square ($\chi^2$) statistic selection. Experimental results on real-world collections indicate that our representations can extract more representative words for topics, agreeing with human judgements.
