Xiandong Meng

Content Adaptive based Motion Alignment Framework for Learned Video Compression

Dec 15, 2025

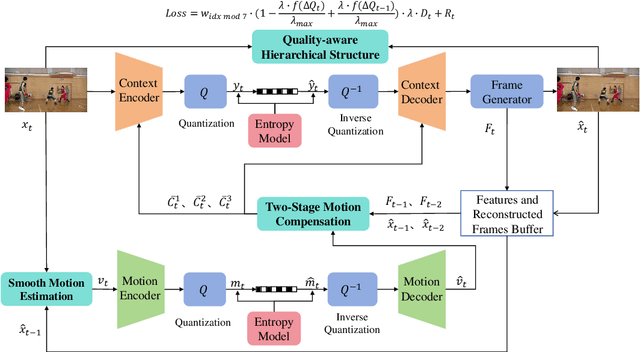

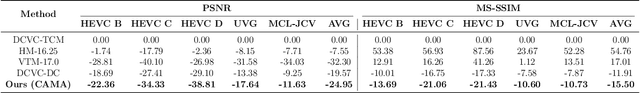

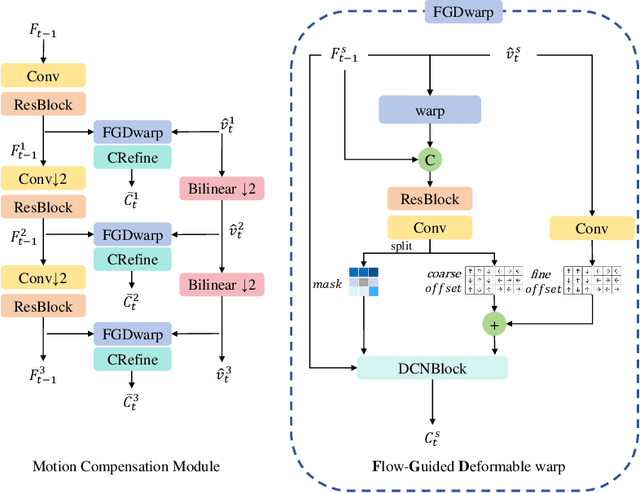

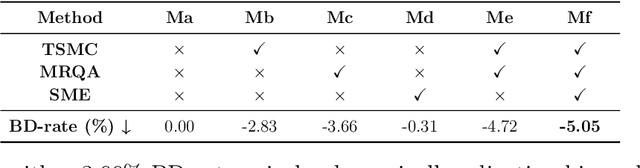

Abstract: Recent advances in end-to-end video compression have shown promising results owing to their unified end-to-end learning optimization. However, such generalized frameworks often lack content-specific adaptation, leading to suboptimal compression performance. To address this, this paper proposes a content-adaptive motion alignment framework (CAMA) that improves performance by adapting encoding strategies to diverse content characteristics. Specifically, we first introduce a two-stage flow-guided deformable warping mechanism that refines motion compensation with coarse-to-fine offset prediction and mask modulation, enabling precise feature alignment. Second, we propose a multi-reference quality-aware strategy that adjusts distortion weights based on reference quality and applies them to hierarchical training to reduce error propagation. Third, we integrate a training-free module that downsamples frames according to motion magnitude and resolution to obtain smooth motion estimation. Experimental results on standard test datasets demonstrate that CAMA achieves significant improvements over state-of-the-art Neural Video Compression models, with 24.95% BD-rate (PSNR) savings over our baseline model DCVC-TCM, while also outperforming the reproduced DCVC-DC and the traditional codec HM-16.25.

L-STEC: Learned Video Compression with Long-term Spatio-Temporal Enhanced Context

Dec 14, 2025

Abstract: Neural Video Compression has emerged in recent years, with condition-based frameworks outperforming traditional codecs. However, most existing methods rely solely on the previous frame's features to predict temporal context, leading to two critical issues. First, the short reference window misses long-term dependencies and fine texture details. Second, propagating only feature-level information accumulates errors over frames, causing prediction inaccuracies and loss of subtle textures. To address these, we propose the Long-term Spatio-Temporal Enhanced Context (L-STEC) method. We first extend the reference chain with LSTM to capture long-term dependencies. We then incorporate warped spatial context from the pixel domain, fusing spatio-temporal information through a multi-receptive field network to better preserve reference details. Experimental results show that L-STEC significantly improves compression by enriching contextual information, achieving 37.01% bitrate savings in PSNR and 31.65% in MS-SSIM compared to DCVC-TCM, outperforming both VTM-17.0 and DCVC-FM and establishing new state-of-the-art performance.

Feature-aligned Motion Transformation for Efficient Dynamic Point Cloud Compression

Sep 18, 2025

Abstract: Dynamic point clouds are widely used in applications such as immersive reality, robotics, and autonomous driving. Efficient compression largely depends on accurate motion estimation and compensation, yet the irregular structure and significant local variations of point clouds make this task highly challenging. Current methods often rely on explicit motion estimation, whose encoded vectors struggle to capture intricate dynamics and fail to fully exploit temporal correlations. To overcome these limitations, we introduce a Feature-aligned Motion Transformation (FMT) framework for dynamic point cloud compression. FMT replaces explicit motion vectors with a spatiotemporal alignment strategy that implicitly models continuous temporal variations, using aligned features as temporal context within a latent-space conditional encoding framework. Furthermore, we design a random access (RA) reference strategy that enables bidirectional motion referencing and layered encoding, thereby supporting frame-level parallel compression. Extensive experiments demonstrate that our method surpasses D-DPCC and AdaDPCC in both encoding and decoding efficiency, while also achieving BD-Rate reductions of 20% and 9.4%, respectively. These results highlight the effectiveness of FMT in jointly improving compression efficiency and processing performance.

Knowledge Graph-Infused Fine-Tuning for Structured Reasoning in Large Language Models

Aug 20, 2025

Abstract: This paper addresses the problems of missing reasoning chains and insufficient entity-level semantic understanding in large language models when dealing with tasks that require structured knowledge. It proposes a fine-tuning algorithm framework based on knowledge graph injection. The method builds on pretrained language models and introduces structured graph information for auxiliary learning. A graph neural network is used to encode entities and their relations, constructing a graph-based semantic representation. A fusion mechanism is then designed to jointly model the knowledge graph embeddings with the contextual representations from the language model. To enhance the robustness of knowledge integration, a gating mechanism is introduced to dynamically balance the contributions of linguistic semantics and structural knowledge. This effectively mitigates conflicts between different representational spaces. During training, a joint loss function is constructed to account for both task performance and structural alignment objectives. This helps improve the accuracy of entity prediction and semantic reasoning. The study also includes a series of systematic sensitivity experiments. It evaluates the effects of learning rate, graph coverage, and structural perturbations on model performance. The results further validate the effectiveness and stability of the proposed method across tasks such as entity recognition, question answering, and language generation. Experimental findings show that the proposed structure-aware fine-tuning framework significantly enhances the model's ability to represent complex semantic units. It demonstrates better semantic consistency and contextual logic modeling in scenarios involving structural reasoning and entity extraction.
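
The gating idea in the abstract above can be illustrated with a minimal sketch. This is not the paper's implementation: it assumes a common element-wise gate of the form g = sigmoid(W[h_text; h_graph] + b), with the fused vector g * h_text + (1 - g) * h_graph. The function name `gated_fusion`, its parameters, and the toy vectors are hypothetical and chosen only for illustration.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping a real score to (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def gated_fusion(h_text, h_graph, gate_weights, gate_bias):
    """Blend a text vector and a graph vector with a learned gate.

    Assumed form (hypothetical, not taken from the paper):
      gate_i  = sigmoid(gate_weights[i] . [h_text; h_graph] + gate_bias[i])
      fused_i = gate_i * h_text[i] + (1 - gate_i) * h_graph[i]
    """
    concat = list(h_text) + list(h_graph)
    fused = []
    for i, (t, g) in enumerate(zip(h_text, h_graph)):
        score = sum(w * x for w, x in zip(gate_weights[i], concat)) + gate_bias[i]
        gate = sigmoid(score)
        fused.append(gate * t + (1.0 - gate) * g)
    return fused


# Toy example: with all-zero gate parameters the gate is exactly 0.5,
# so the output is the plain average of the two representations.
h_text = [1.0, 0.0]
h_graph = [0.0, 1.0]
zero_weights = [[0.0] * 4 for _ in range(2)]
zero_bias = [0.0, 0.0]
fused = gated_fusion(h_text, h_graph, zero_weights, zero_bias)
```

In a trained model the gate parameters would be learned, so each dimension could lean toward the linguistic or the structural representation depending on the input.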

Clinical NLP with Attention-Based Deep Learning for Multi-Disease Prediction

Jul 02, 2025

Abstract: This paper addresses the challenges posed by the unstructured nature and high-dimensional semantic complexity of electronic health record texts. A deep learning method based on attention mechanisms is proposed to achieve unified modeling for information extraction and multi-label disease prediction. The study is conducted on the MIMIC-IV dataset. A Transformer-based architecture is used to perform representation learning over clinical text. Multi-layer self-attention mechanisms are employed to capture key medical entities and their contextual relationships. A Sigmoid-based multi-label classifier is then applied to predict multiple disease labels. The model incorporates a context-aware semantic alignment mechanism, enhancing its representational capacity in typical medical scenarios such as label co-occurrence and sparse information. To comprehensively evaluate model performance, a series of experiments were conducted, including baseline comparisons, hyperparameter sensitivity analysis, data perturbation studies, and noise injection tests. Results demonstrate that the proposed method consistently outperforms representative existing approaches across multiple performance metrics. The model maintains strong generalization under varying data scales, interference levels, and model depth configurations. The framework developed in this study offers an efficient algorithmic foundation for processing real-world clinical texts and presents practical significance for multi-label medical text modeling tasks.
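
As a rough illustration of what a sigmoid-based multi-label classifier head does, the sketch below applies an independent sigmoid to each label's logit and keeps every label whose probability clears a threshold. The label names, logits, the 0.5 threshold, and the function `predict_labels` are assumptions made for this example, not details from the paper.

```python
import math


def sigmoid(x: float) -> float:
    """Logistic function mapping a logit to a probability in (0, 1)."""
    return 1.0 / (1.0 + math.exp(-x))


def predict_labels(logits, label_names, threshold=0.5):
    """Return every label whose independent sigmoid probability >= threshold.

    Unlike softmax, the per-label probabilities do not compete with each
    other, so zero, one, or several labels can be predicted at once.
    """
    probs = [sigmoid(z) for z in logits]
    return [name for name, p in zip(label_names, probs) if p >= threshold]


# Hypothetical labels and logits for a single clinical note.
labels = ["diabetes", "hypertension", "asthma"]
logits = [2.0, 0.3, -1.5]
predicted = predict_labels(logits, labels)
```

Because each label is scored independently, co-occurring diseases can be predicted together, which is the property that makes this kind of head suitable for multi-label tasks.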

Motion Matters: Compact Gaussian Streaming for Free-Viewpoint Video Reconstruction

May 22, 2025

Abstract: 3D Gaussian Splatting (3DGS) has emerged as a high-fidelity and efficient paradigm for online free-viewpoint video (FVV) reconstruction, offering viewers rapid responsiveness and immersive experiences. However, existing online methods face prohibitive storage requirements, primarily because point-wise modeling fails to exploit motion properties. To address this limitation, we propose a novel Compact Gaussian Streaming (ComGS) framework that leverages the locality and consistency of motion in dynamic scenes and models object-consistent Gaussian point motion through a keypoint-driven motion representation. By transmitting only the keypoint attributes, this framework provides a more storage-efficient solution. Specifically, we first identify a sparse set of motion-sensitive keypoints localized within motion regions using a viewspace gradient difference strategy. Equipped with these keypoints, we propose an adaptive motion-driven mechanism that predicts a spatial influence field for propagating keypoint motion to neighboring Gaussian points with similar motion. Moreover, ComGS adopts an error-aware correction strategy for key frame reconstruction that selectively refines erroneous regions and mitigates error accumulation without unnecessary overhead. Overall, ComGS achieves a remarkable storage reduction of over 159× compared to 3DGStream and 14× compared to the SOTA method QUEEN, while maintaining competitive visual fidelity and rendering speed. Our code will be released.

Fourier123: One Image to High-Quality 3D Object Generation with Hybrid Fourier Score Distillation

May 31, 2024

Abstract: Single image-to-3D generation is pivotal for crafting controllable 3D assets. Given its underconstrained nature, we leverage geometric priors from a 3D novel view generation diffusion model and appearance priors from a 2D image generation method to guide the optimization process. We note that a disparity exists between the training datasets of 2D and 3D diffusion models, leading to their outputs showing marked differences in appearance. Specifically, 2D models tend to deliver more detailed visuals, whereas 3D models produce consistent yet over-smooth results across different views. Hence, we optimize a set of 3D Gaussians using 3D priors in the spatial domain to ensure geometric consistency, while exploiting 2D priors in the frequency domain through the Fourier transform for higher visual quality. This 2D-3D hybrid Fourier Score Distillation objective function (dubbed hy-FSD) can be integrated into existing 3D generation methods, yielding significant performance improvements. With this technique, we further develop an image-to-3D generation pipeline, named Fourier123, to create high-quality 3D objects within one minute. Extensive experiments demonstrate that Fourier123 excels in efficient generation, with rapid convergence and visually friendly results.

Diffusion-Based Hierarchical Image Steganography

May 19, 2024

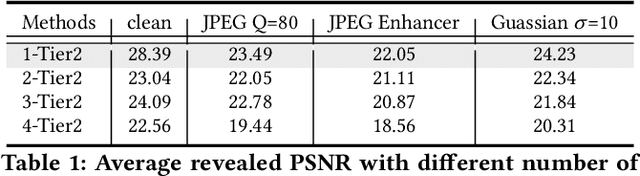

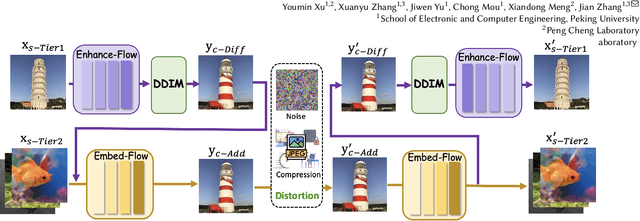

Abstract: This paper introduces Hierarchical Image Steganography (HIS), a novel method that enhances the security and capacity of embedding multiple images into a single container using diffusion models. HIS assigns varying levels of robustness to images based on their importance, ensuring enhanced protection against manipulation. It adaptively exploits the robustness of the Diffusion Model alongside the reversibility of the Flow Model. The integration of Embed-Flow and Enhance-Flow improves embedding efficiency and image recovery quality, respectively, setting HIS apart from conventional multi-image steganography techniques. This structure can autonomously generate a container image, thereby securely and efficiently concealing multiple images and text. Rigorous subjective and objective evaluations underscore the method's advantages in analytical resistance, robustness, and capacity, illustrating its broad applicability in content safeguarding and privacy protection.

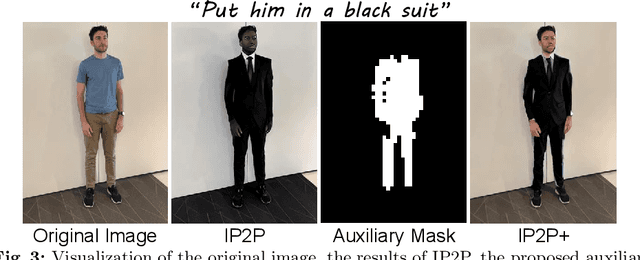

Neural Video Fields Editing

Dec 12, 2023

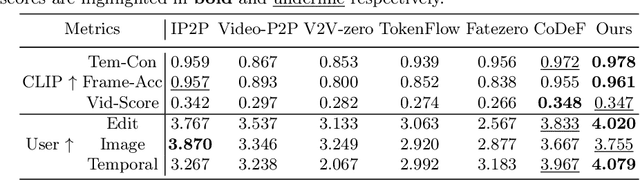

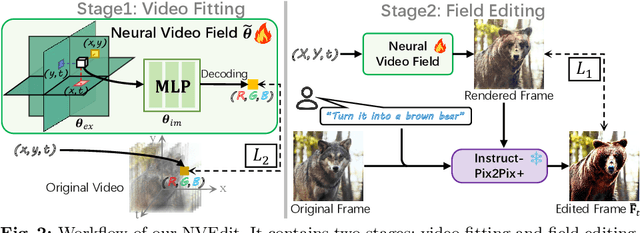

Abstract: Diffusion models have revolutionized text-driven video editing. However, applying these methods to real-world editing encounters two significant challenges: (1) the rapid increase in graphics memory demand as the number of frames grows, and (2) the inter-frame inconsistency in edited videos. To this end, we propose NVEdit, a novel text-driven video editing framework designed to mitigate memory overhead and improve editing consistency for real-world long videos. Specifically, we construct a neural video field, powered by a tri-plane and a sparse grid, to enable encoding long videos with hundreds of frames in a memory-efficient manner. Next, we update the video field through off-the-shelf Text-to-Image (T2I) models to impart text-driven editing effects. A progressive optimization strategy is developed to preserve original temporal priors. Importantly, both the neural video field and the T2I model are adaptable and replaceable, thus inspiring future research. Experiments demonstrate that our approach successfully edits hundreds of frames with impressive inter-frame consistency.

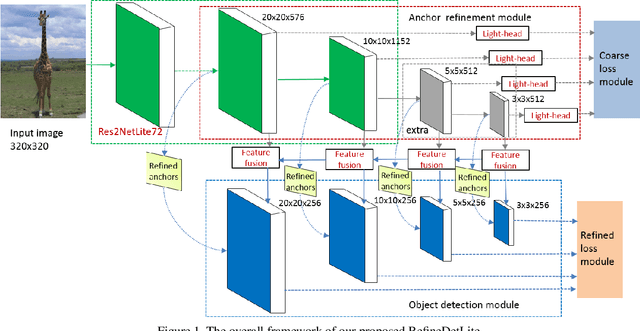

RefineDetLite: A Lightweight One-stage Object Detection Framework for CPU-only Devices

Nov 20, 2019

Abstract: Previous state-of-the-art real-time object detectors have been reported on GPUs, which are extremely expensive for processing massive data and in resource-restricted scenarios. Therefore, high-efficiency object detectors for CPU-only devices are urgently needed in industry. The floating-point operations (FLOPs) of networks are not strictly proportional to the running speed on CPU devices, which inspires the design of a genuinely "fast" and "accurate" object detector. After investigating the gaps between classification networks and detection backbones, and following the design principles of efficient networks, we propose a lightweight residual-like backbone with large receptive fields and wide dimensions for low-level features, which are crucial for detection tasks. Correspondingly, we also design a light-head detection part to match the backbone capability. Furthermore, by analyzing the drawbacks of current one-stage detector training strategies, we also propose three orthogonal training strategies: an IOU-guided loss, a class-aware weighting method, and a balanced multi-task training approach. Without bells and whistles, our proposed RefineDetLite achieves 26.8 mAP on the MSCOCO benchmark at a speed of 130 ms/pic on a single-thread CPU. The detection accuracy can be further increased to 29.6 mAP by integrating all the proposed training strategies, without an apparent speed drop.
