Wenshuo Chen

Guided Path Sampling: Steering Diffusion Models Back on Track with Principled Path Guidance

Dec 28, 2025

Abstract:Iterative refinement methods based on a denoising-inversion cycle are powerful tools for enhancing the quality and control of diffusion models. However, their effectiveness is critically limited when combined with standard Classifier-Free Guidance (CFG). We identify a fundamental limitation: CFG's extrapolative nature systematically pushes the sampling path off the data manifold, causing the approximation error to diverge and undermining the refinement process. To address this, we propose Guided Path Sampling (GPS), a new paradigm for iterative refinement. GPS replaces unstable extrapolation with a principled, manifold-constrained interpolation, ensuring the sampling path remains on the data manifold. We theoretically prove that this correction transforms the error series from unbounded amplification to strictly bounded, guaranteeing stability. Furthermore, we devise an optimal scheduling strategy that dynamically adjusts guidance strength, aligning semantic injection with the model's natural coarse-to-fine generation process. Extensive experiments on modern backbones like SDXL and Hunyuan-DiT show that GPS outperforms existing methods in both perceptual quality and complex prompt adherence. For instance, GPS achieves a superior ImageReward of 0.79 and HPS v2 of 0.2995 on SDXL, while improving overall semantic alignment accuracy on GenEval to 57.45%. Our work establishes that path stability is a prerequisite for effective iterative refinement, and GPS provides a robust framework to achieve it.
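The contrast between extrapolation and interpolation in the abstract above can be illustrated with the standard CFG combination rule. This is a minimal sketch of ordinary CFG only, not the GPS method, whose manifold constraint and schedule the abstract does not spell out. The function name `cfg_combine` and the toy vectors are assumptions made for illustration.

```python
import numpy as np

def cfg_combine(eps_uncond, eps_cond, w):
    """Standard CFG rule: eps_uncond + w * (eps_cond - eps_uncond)."""
    return eps_uncond + w * (eps_cond - eps_uncond)

# Toy noise predictions (hypothetical values, not model outputs).
eps_uncond = np.array([0.0, 0.0])
eps_cond = np.array([1.0, 2.0])

# w > 1 extrapolates past the conditional prediction.
extrapolated = cfg_combine(eps_uncond, eps_cond, w=7.5)

# 0 <= w <= 1 interpolates between the two predictions.
interpolated = cfg_combine(eps_uncond, eps_cond, w=0.6)

# Distance from the unconditional prediction, relative to the conditional one.
ratio_extra = np.linalg.norm(extrapolated - eps_uncond) / np.linalg.norm(eps_cond - eps_uncond)
ratio_inter = np.linalg.norm(interpolated - eps_uncond) / np.linalg.norm(eps_cond - eps_uncond)
```

With a weight above 1 the combined prediction lies outside the segment joining the two model outputs, which is the extrapolative behavior the abstract refers to; a weight in [0, 1] keeps it on that segment.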

RMDM: Radio Map Diffusion Model with Physics Informed

Jan 31, 2025

Abstract:With the rapid development of wireless communication technology, the efficient utilization of spectrum resources, optimization of communication quality, and intelligent communication have become critical. Radio map reconstruction is essential for enabling advanced applications, yet challenges such as complex signal propagation and sparse data hinder accurate reconstruction. To address these issues, we propose the **Radio Map Diffusion Model (RMDM)**, a physics-informed framework that integrates **Physics-Informed Neural Networks (PINNs)** to incorporate constraints like the **Helmholtz equation**. RMDM employs a dual U-Net architecture: the first ensures physical consistency by minimizing PDE residuals, boundary conditions, and source constraints, while the second refines predictions via diffusion-based denoising. By leveraging physical laws, RMDM significantly enhances accuracy, robustness, and generalization. Experiments demonstrate that RMDM outperforms state-of-the-art methods, achieving **NMSE of 0.0031** and **RMSE of 0.0125** under the Static RM (SRM) setting, and **NMSE of 0.0047** and **RMSE of 0.0146** under the Dynamic RM (DRM) setting. These results establish a novel paradigm for integrating physics-informed and data-driven approaches in radio map reconstruction, particularly under sparse data conditions.

Free-T2M: Frequency Enhanced Text-to-Motion Diffusion Model With Consistency Loss

Jan 30, 2025

Abstract:Rapid progress in text-to-motion generation has been largely driven by diffusion models. However, existing methods focus solely on temporal modeling, thereby overlooking frequency-domain analysis. We identify two key phases in motion denoising: the **semantic planning stage** and the **fine-grained improving stage**. To address these phases effectively, we propose **Fre**quency **e**nhanced **t**ext-**to**-**m**otion diffusion model (**Free-T2M**), incorporating stage-specific consistency losses that enhance the robustness of static features and improve fine-grained accuracy. Extensive experiments demonstrate the effectiveness of our method. Specifically, on StableMoFusion, our method reduces the FID from **0.189** to **0.051**, establishing a new SOTA performance within the diffusion architecture. These findings highlight the importance of incorporating frequency-domain insights into text-to-motion generation for more precise and robust results.

Learning New Concepts, Remembering the Old: A Novel Continual Learning

Nov 25, 2024

Abstract:Concept Bottleneck Models (CBMs) enhance model interpretability by introducing human-understandable concepts within the architecture. However, existing CBMs assume static datasets, limiting their ability to adapt to real-world, continuously evolving data streams. To address this, we define a novel concept-incremental and class-incremental continual learning task for CBMs, enabling models to accumulate new concepts and classes over time while retaining previously learned knowledge. To achieve this, we propose CONceptual Continual Incremental Learning (CONCIL), a framework that prevents catastrophic forgetting by reformulating concept and decision layer updates as linear regression problems, thus eliminating the need for gradient-based updates. CONCIL requires only recursive matrix operations, making it computationally efficient and suitable for real-time and large-scale data applications. Experimental results demonstrate that CONCIL achieves "absolute knowledge memory" and outperforms traditional CBM methods in concept- and class-incremental settings, establishing a new benchmark for continual learning in CBMs.
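The idea of replacing gradient-based updates with recursive matrix operations on a linear regression problem can be sketched with generic recursive ridge regression. This is an illustrative sketch under that assumption, not CONCIL's actual formulation; the names `init_state` and `rls_update`, the regularization value, and the random data are all hypothetical.

```python
import numpy as np

def init_state(dim_in, dim_out, reg=1e-2):
    # P is the inverse of the regularized autocorrelation matrix (reg * I
    # before any data arrives); W holds the linear-layer weights.
    P = np.eye(dim_in) / reg
    W = np.zeros((dim_in, dim_out))
    return P, W

def rls_update(P, W, X, Y):
    # Woodbury identity: (A + X^T X)^-1 = P - P X^T (I + X P X^T)^-1 X P
    K = np.linalg.solve(np.eye(X.shape[0]) + X @ P @ X.T, X @ P)
    P_new = P - P @ X.T @ K
    # Correct the weights using only the residual on the new batch.
    W_new = W + P_new @ X.T @ (Y - X @ W)
    return P_new, W_new

rng = np.random.default_rng(0)
X_all = rng.normal(size=(20, 4))
Y_all = rng.normal(size=(20, 2))
reg = 1e-2

# Feed the data in two phases, as in an incremental setting.
P, W = init_state(4, 2, reg)
for X, Y in [(X_all[:12], Y_all[:12]), (X_all[12:], Y_all[12:])]:
    P, W = rls_update(P, W, X, Y)

# Reference: ridge regression solved once on all data.
W_batch = np.linalg.solve(X_all.T @ X_all + reg * np.eye(4), X_all.T @ Y_all)
```

In this sketch the recursively updated weights match the joint solution on all data up to numerical precision, so earlier batches do not need to be revisited.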

Guarding the Gate: ConceptGuard Battles Concept-Level Backdoors in Concept Bottleneck Models

Nov 25, 2024

Abstract:The increasing complexity of AI models, especially in deep learning, has raised concerns about transparency and accountability, particularly in high-stakes applications like medical diagnostics, where opaque models can undermine trust. Explainable Artificial Intelligence (XAI) aims to address these issues by providing clear, interpretable models. Among XAI techniques, Concept Bottleneck Models (CBMs) enhance transparency by using high-level semantic concepts. However, CBMs are vulnerable to concept-level backdoor attacks, which inject hidden triggers into these concepts, leading to undetectable anomalous behavior. To address this critical security gap, we introduce ConceptGuard, a novel defense framework specifically designed to protect CBMs from concept-level backdoor attacks. ConceptGuard employs a multi-stage approach, including concept clustering based on text distance measurements and a voting mechanism among classifiers trained on different concept subgroups, to isolate and mitigate potential triggers. Our contributions are threefold: (i) we present ConceptGuard as the first defense mechanism tailored for concept-level backdoor attacks in CBMs; (ii) we provide theoretical guarantees that ConceptGuard can effectively defend against such attacks within a certain trigger size threshold, ensuring robustness; and (iii) we demonstrate that ConceptGuard maintains the high performance and interpretability of CBMs, crucial for trustworthiness. Through comprehensive experiments and theoretical proofs, we show that ConceptGuard significantly enhances the security and trustworthiness of CBMs, paving the way for their secure deployment in critical applications.

Towards Multi-dimensional Explanation Alignment for Medical Classification

Oct 28, 2024

Abstract:The lack of interpretability in the field of medical image analysis has significant ethical and legal implications. Existing interpretable methods in this domain encounter several challenges, including dependency on specific models, difficulties in understanding and visualization, as well as issues related to efficiency. To address these limitations, we propose a novel framework called Med-MICN (Medical Multi-dimensional Interpretable Concept Network). Med-MICN provides interpretability alignment for various angles, including neural symbolic reasoning, concept semantics, and saliency maps, which are superior to current interpretable methods. Its advantages include high prediction accuracy, interpretability across multiple dimensions, and automation through an end-to-end concept labeling process that reduces the need for extensive human training effort when working with new datasets. To demonstrate the effectiveness and interpretability of Med-MICN, we apply it to four benchmark datasets and compare it with baselines. The results clearly demonstrate the superior performance and interpretability of our Med-MICN.

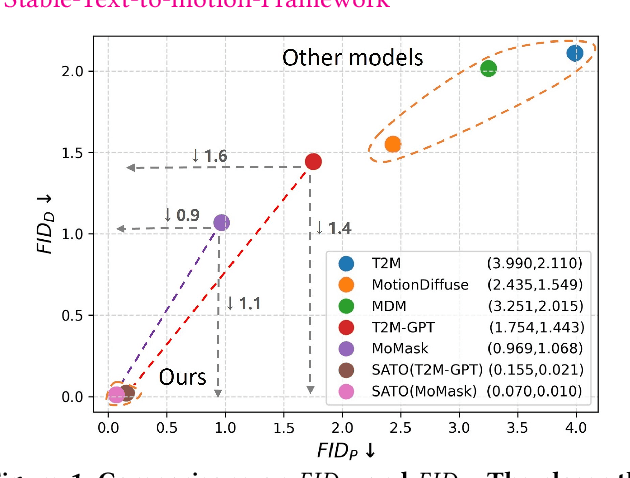

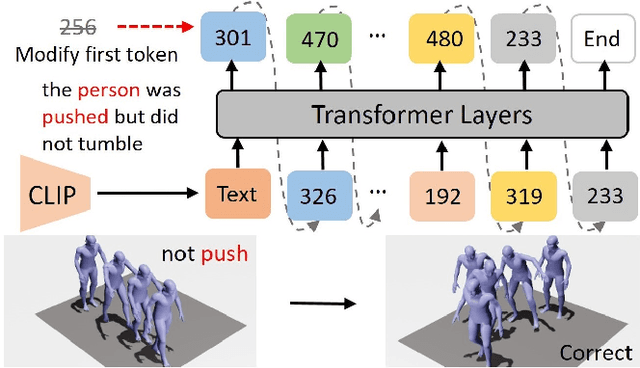

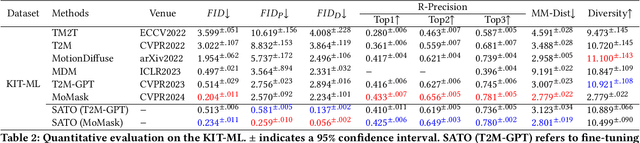

SATO: Stable Text-to-Motion Framework

May 02, 2024

Abstract:Is the Text to Motion model robust? Recent advancements in Text to Motion models primarily stem from more accurate predictions of specific actions. However, the text modality typically relies solely on pre-trained Contrastive Language-Image Pretraining (CLIP) models. Our research has uncovered a significant issue with the text-to-motion model: its predictions often exhibit inconsistent outputs, resulting in vastly different or even incorrect poses when presented with semantically similar or identical text inputs. In this paper, we undertake an analysis to elucidate the underlying causes of this instability, establishing a clear link between the unpredictability of model outputs and the erratic attention patterns of the text encoder module. Consequently, we introduce a formal framework aimed at addressing this issue, which we term the Stable Text-to-Motion Framework (SATO). SATO consists of three modules, dedicated respectively to stable attention, stable prediction, and balancing the trade-off between accuracy and robustness. We present a methodology for constructing an SATO that satisfies the stability of attention and prediction. To verify the stability of the model, we introduce a new textual synonym perturbation dataset based on HumanML3D and KIT-ML. Results show that SATO is significantly more stable against synonyms and other slight perturbations while maintaining high accuracy.

Decomposed Human Motion Prior for Video Pose Estimation via Adversarial Training

May 31, 2023

Abstract:Estimating human pose from video is a task that receives considerable attention due to its applicability in numerous 3D fields. The complexity of prior knowledge of human body movements poses a challenge to neural network models in the task of regressing keypoints. In this paper, we address this problem by incorporating motion prior in an adversarial way. Different from previous methods, we propose to decompose the holistic motion prior into joint motion priors, making it easier for neural networks to learn from prior knowledge and thereby boosting performance on the task. We also utilize a novel regularization loss to balance the accuracy and smoothness introduced by the motion prior. Our method achieves 9% lower PA-MPJPE and 29% lower acceleration error than previous methods tested on 3DPW. The estimator proves its robustness by achieving impressive performance on an in-the-wild dataset.

Gen-L-Video: Multi-Text to Long Video Generation via Temporal Co-Denoising

May 29, 2023

Abstract:Leveraging large-scale image-text datasets and advancements in diffusion models, text-driven generative models have made remarkable strides in the field of image generation and editing. This study explores the potential of extending the text-driven ability to the generation and editing of multi-text conditioned long videos. Current methodologies for video generation and editing, while innovative, are often confined to extremely short videos (typically less than 24 frames) and are limited to a single text condition. These constraints significantly limit their applications given that real-world videos usually consist of multiple segments, each bearing different semantic information. To address this challenge, we introduce a novel paradigm dubbed as Gen-L-Video, capable of extending off-the-shelf short video diffusion models for generating and editing videos comprising hundreds of frames with diverse semantic segments without introducing additional training, all while preserving content consistency. We have implemented three mainstream text-driven video generation and editing methodologies and extended them to accommodate longer videos imbued with a variety of semantic segments with our proposed paradigm. Our experimental outcomes reveal that our approach significantly broadens the generative and editing capabilities of video diffusion models, offering new possibilities for future research and applications. The code is available at https://github.com/G-U-N/Gen-L-Video.
