Haozhe Jia

Guided Path Sampling: Steering Diffusion Models Back on Track with Principled Path Guidance

Dec 28, 2025

Abstract:Iterative refinement methods based on a denoising-inversion cycle are powerful tools for enhancing the quality and control of diffusion models. However, their effectiveness is critically limited when combined with standard Classifier-Free Guidance (CFG). We identify a fundamental limitation: CFG's extrapolative nature systematically pushes the sampling path off the data manifold, causing the approximation error to diverge and undermining the refinement process. To address this, we propose Guided Path Sampling (GPS), a new paradigm for iterative refinement. GPS replaces unstable extrapolation with a principled, manifold-constrained interpolation, ensuring the sampling path remains on the data manifold. We theoretically prove that this correction transforms the error series from unbounded amplification to strictly bounded, guaranteeing stability. Furthermore, we devise an optimal scheduling strategy that dynamically adjusts guidance strength, aligning semantic injection with the model's natural coarse-to-fine generation process. Extensive experiments on modern backbones like SDXL and Hunyuan-DiT show that GPS outperforms existing methods in both perceptual quality and complex prompt adherence. For instance, GPS achieves a superior ImageReward of 0.79 and HPS v2 of 0.2995 on SDXL, while improving overall semantic alignment accuracy on GenEval to 57.45%. Our work establishes that path stability is a prerequisite for effective iterative refinement, and GPS provides a robust framework to achieve it.
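To make the extrapolation claim concrete, here is a minimal sketch of the standard CFG combination rule, which is well known independently of this abstract. The names `guided_noise` and `inside_segment` and the toy two-element vectors are assumptions for illustration. The interpolation case shown is only a plain convex combination (weight between 0 and 1); it is not the manifold-constrained rule that GPS defines, which the abstract does not spell out.

```python
def guided_noise(eps_uncond, eps_cond, w):
    """Standard CFG combination: eps_uncond + w * (eps_cond - eps_uncond)."""
    return [u + w * (c - u) for u, c in zip(eps_uncond, eps_cond)]


def inside_segment(point, a, b):
    """True if every coordinate of `point` lies between the matching coordinates of a and b."""
    return all(min(x, y) <= p <= max(x, y) for p, x, y in zip(point, a, b))


# Toy noise predictions (hypothetical values, not model outputs).
eps_uncond = [0.0, 1.0]
eps_cond = [1.0, 3.0]

extrapolated = guided_noise(eps_uncond, eps_cond, 7.5)  # w > 1: extrapolation
interpolated = guided_noise(eps_uncond, eps_cond, 0.5)  # 0 <= w <= 1: interpolation

print(extrapolated, inside_segment(extrapolated, eps_uncond, eps_cond))
print(interpolated, inside_segment(interpolated, eps_uncond, eps_cond))
```

With a weight of 7.5 the combined prediction leaves the segment between the two model outputs, while a weight of 0.5 stays inside it.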

Super-Resolution Enhancement of Medical Images Based on Diffusion Model: An Optimization Scheme for Low-Resolution Gastric Images

Dec 22, 2025

Abstract:Capsule endoscopy has enabled minimally invasive gastrointestinal imaging, but its clinical utility is limited by the inherently low resolution of captured images due to hardware, power, and transmission constraints. This limitation hampers the identification of fine-grained mucosal textures and subtle pathological features essential for early diagnosis. This work investigates a diffusion-based super-resolution framework to enhance capsule endoscopy images in a data-driven and anatomically consistent manner. We adopt the SR3 (Super-Resolution via Repeated Refinement) framework built upon Denoising Diffusion Probabilistic Models (DDPMs) to learn a probabilistic mapping from low-resolution to high-resolution images. Unlike GAN-based approaches that often suffer from training instability and hallucination artifacts, diffusion models provide stable likelihood-based training and improved structural fidelity. The HyperKvasir dataset, a large-scale publicly available gastrointestinal endoscopy dataset, is used for training and evaluation. Quantitative results demonstrate that the proposed method significantly outperforms bicubic interpolation and GAN-based super-resolution methods such as ESRGAN, achieving PSNR of 27.5 dB and SSIM of 0.65 for a baseline model, and improving to 29.3 dB and 0.71 with architectural enhancements including attention mechanisms. Qualitative results show improved preservation of anatomical boundaries, vascular patterns, and lesion structures. These findings indicate that diffusion-based super-resolution is a promising approach for enhancing non-invasive medical imaging, particularly in capsule endoscopy where image resolution is fundamentally constrained.
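As a reminder of what the reported PSNR values measure, the sketch below implements the textbook definition, 10 * log10(MAX^2 / MSE). It is not the paper's evaluation code: the function name `psnr`, the flat-list image representation, and the `max_value` default are assumptions for illustration.

```python
import math


def psnr(reference, estimate, max_value=255.0):
    """Peak signal-to-noise ratio in dB between two equally sized pixel lists."""
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images: PSNR is unbounded
    return 10.0 * math.log10(max_value ** 2 / mse)


# Hypothetical 4-pixel "images" with intensities in [0, 1].
reference = [0.0, 0.5, 1.0, 0.25]
estimate = [0.1, 0.4, 0.9, 0.35]
print(round(psnr(reference, estimate, max_value=1.0), 2))
```

Every pixel in the toy example is off by 0.1, so the MSE is 0.01 and the PSNR is about 20 dB; higher values mean the estimate is closer to the reference.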

RMDM: Radio Map Diffusion Model with Physics Informed

Jan 31, 2025

Abstract:With the rapid development of wireless communication technology, the efficient utilization of spectrum resources, optimization of communication quality, and intelligent communication have become critical. Radio map reconstruction is essential for enabling advanced applications, yet challenges such as complex signal propagation and sparse data hinder accurate reconstruction. To address these issues, we propose the **Radio Map Diffusion Model (RMDM)**, a physics-informed framework that integrates **Physics-Informed Neural Networks (PINNs)** to incorporate constraints like the **Helmholtz equation**. RMDM employs a dual U-Net architecture: the first ensures physical consistency by minimizing PDE residuals, boundary conditions, and source constraints, while the second refines predictions via diffusion-based denoising. By leveraging physical laws, RMDM significantly enhances accuracy, robustness, and generalization. Experiments demonstrate that RMDM outperforms state-of-the-art methods, achieving **NMSE of 0.0031** and **RMSE of 0.0125** under the Static RM (SRM) setting, and **NMSE of 0.0047** and **RMSE of 0.0146** under the Dynamic RM (DRM) setting. These results establish a novel paradigm for integrating physics-informed and data-driven approaches in radio map reconstruction, particularly under sparse data conditions.
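The abstract reports NMSE and RMSE without defining them. The sketch below uses one common convention, assumed here rather than taken from the paper: NMSE as the squared error normalized by the energy of the ground truth, and RMSE as the square root of the mean squared error. The function names and sample values are hypothetical.

```python
import math


def nmse(truth, pred):
    """Normalized MSE: sum of squared errors divided by the energy of the ground truth."""
    err = sum((t - p) ** 2 for t, p in zip(truth, pred))
    return err / sum(t ** 2 for t in truth)


def rmse(truth, pred):
    """Root mean squared error."""
    err = sum((t - p) ** 2 for t, p in zip(truth, pred))
    return math.sqrt(err / len(truth))


# Hypothetical radio-map samples (arbitrary units).
truth = [1.0, 2.0, 2.0]
pred = [1.0, 2.0, 1.0]
print(nmse(truth, pred), rmse(truth, pred))
```

Other normalizations exist (for example, dividing by the variance of the ground truth), so reported numbers are only comparable under the same definition.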

Free-T2M: Frequency Enhanced Text-to-Motion Diffusion Model With Consistency Loss

Jan 30, 2025

Abstract:Rapid progress in text-to-motion generation has been largely driven by diffusion models. However, existing methods focus solely on temporal modeling, thereby overlooking frequency-domain analysis. We identify two key phases in motion denoising: the **semantic planning stage** and the **fine-grained improving stage**. To address these phases effectively, we propose **Fre**quency **e**nhanced **t**ext-**to**-**m**otion diffusion model (**Free-T2M**), incorporating stage-specific consistency losses that enhance the robustness of static features and improve fine-grained accuracy. Extensive experiments demonstrate the effectiveness of our method. Specifically, on StableMoFusion, our method reduces the FID from **0.189** to **0.051**, establishing a new SOTA performance within the diffusion architecture. These findings highlight the importance of incorporating frequency-domain insights into text-to-motion generation for more precise and robust results.

DCTdiff: Intriguing Properties of Image Generative Modeling in the DCT Space

Dec 19, 2024

Abstract:This paper explores image modeling from the frequency space and introduces DCTdiff, an end-to-end diffusion generative paradigm that efficiently models images in the discrete cosine transform (DCT) space. We investigate the design space of DCTdiff and reveal the key design factors. Experiments on different frameworks (UViT, DiT), generation tasks, and various diffusion samplers demonstrate that DCTdiff outperforms pixel-based diffusion models regarding generative quality and training efficiency. Remarkably, DCTdiff can seamlessly scale up to high-resolution generation without using the latent diffusion paradigm. Finally, we illustrate several intriguing properties of DCT image modeling. For example, we provide a theoretical proof of why "image diffusion can be seen as spectral autoregression", bridging the gap between diffusion and autoregressive models. The effectiveness of DCTdiff and the introduced properties suggest a promising direction for image modeling in the frequency space. The code is at https://github.com/forever208/DCTdiff.
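For readers unfamiliar with the DCT, the following sketch computes an orthonormal 1D DCT-II in plain Python and shows how a smooth signal concentrates its energy in the lowest frequencies. It is a generic illustration of the transform, not code from the DCTdiff repository; `dct2_ortho` and the 8-sample ramp are assumptions for illustration.

```python
import math


def dct2_ortho(signal):
    """Orthonormal 1D DCT-II, computed directly from the definition (O(n^2))."""
    n = len(signal)
    coeffs = []
    for k in range(n):
        scale = math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)
        total = sum(x * math.cos(math.pi * (i + 0.5) * k / n)
                    for i, x in enumerate(signal))
        coeffs.append(scale * total)
    return coeffs


# A smooth ramp stands in for a slowly varying row of pixels.
ramp = [float(i) for i in range(8)]
coeffs = dct2_ortho(ramp)

# The orthonormal transform preserves energy (Parseval).
energy = sum(c * c for c in coeffs)
low_freq_share = sum(c * c for c in coeffs[:2]) / energy
print(round(energy, 6), round(low_freq_share, 4))
```

For this ramp, the first two coefficients carry more than 99% of the energy, which is the kind of compaction that makes frequency-space representations compact for natural images.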

Block-wise LoRA: Revisiting Fine-grained LoRA for Effective Personalization and Stylization in Text-to-Image Generation

Mar 12, 2024

Abstract:The objective of personalization and stylization in text-to-image is to instruct a pre-trained diffusion model to analyze new concepts introduced by users and incorporate them into expected styles. Recently, parameter-efficient fine-tuning (PEFT) approaches have been widely adopted to address this task and have greatly propelled the development of this field. Despite their popularity, existing efficient fine-tuning methods still struggle to achieve effective personalization and stylization in T2I generation. To address this issue, we propose block-wise Low-Rank Adaptation (LoRA) to perform fine-grained fine-tuning for different blocks of SD, which can generate images faithful to input prompts and target identity and also with desired style. Extensive experiments demonstrate the effectiveness of the proposed method.
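As background for the low-rank adaptation the abstract builds on, the sketch below applies the usual LoRA update W' = W + (alpha / r) * B A to a single weight matrix. The abstract does not describe how the block-wise variant assigns adapters to blocks of SD, so none of that is modeled here; the function names, the scaling convention, and the tiny matrices are assumptions for illustration.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b)))
             for j in range(len(b[0]))]
            for i in range(len(a))]


def lora_merge(weight, lora_b, lora_a, alpha):
    """Return weight + (alpha / r) * (lora_b @ lora_a), where r is the adapter rank."""
    rank = len(lora_a)
    delta = matmul(lora_b, lora_a)
    scale = alpha / rank
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(weight, delta)]


# Frozen 2x2 weight and a rank-1 adapter (hypothetical values).
weight = [[1.0, 0.0], [0.0, 1.0]]
lora_b = [[1.0], [2.0]]   # shape (d_out, r)
lora_a = [[3.0, 4.0]]     # shape (r, d_in)
merged = lora_merge(weight, lora_b, lora_a, alpha=1.0)
print(merged)
```

Only `lora_b` and `lora_a` would be trained in this setup; the base weight stays frozen, which is what makes the approach parameter-efficient.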

DisControlFace: Disentangled Control for Personalized Facial Image Editing

Dec 11, 2023

Abstract:In this work, we focus on exploring explicit fine-grained control of generative facial image editing, all while generating faithful and consistent personalized facial appearances. We identify the key challenge of this task as the exploration of disentangled conditional control in the generation process, and accordingly propose a novel diffusion-based framework, named DisControlFace, comprising two decoupled components. Specifically, we leverage an off-the-shelf diffusion reconstruction model as the backbone and freeze its pre-trained weights, which helps to reduce identity shift and recover editing-unrelated details of the input image. Furthermore, we construct a parallel control network that is compatible with the reconstruction backbone to generate spatial control conditions based on estimated explicit face parameters. Finally, we reformulate the training pipeline into a masked-autoencoding form to effectively achieve disentangled training of our DisControlFace. Our DisControlFace can perform robust editing on any facial image through training on large-scale 2D in-the-wild portraits and also supports low-cost fine-tuning with few additional images to further learn diverse personalized priors of a specific person. Extensive experiments demonstrate that DisControlFace can generate realistic facial images corresponding to various face control conditions, while significantly improving the preservation of the personalized facial details.

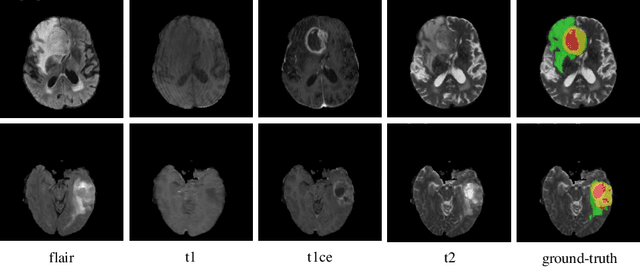

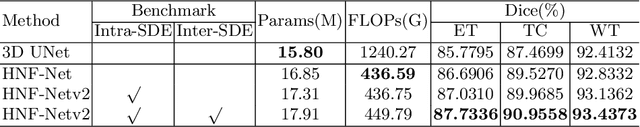

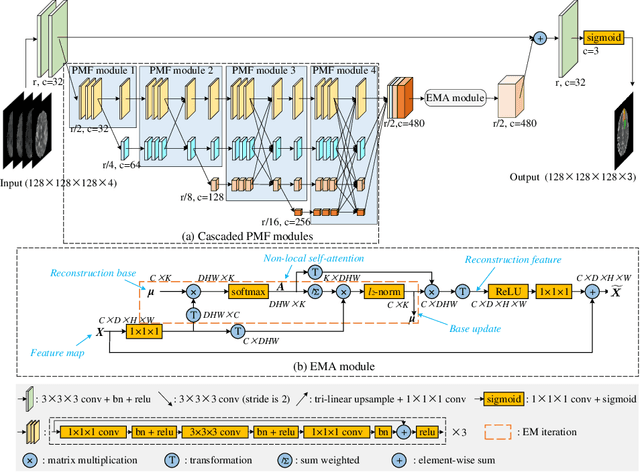

HNF-Netv2 for Brain Tumor Segmentation using multi-modal MR Imaging

Feb 10, 2022

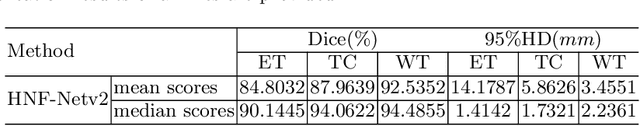

Abstract:In our previous work, i.e., HNF-Net, high-resolution feature representation and light-weight non-local self-attention mechanism are exploited for brain tumor segmentation using multi-modal MR imaging. In this paper, we extend our HNF-Net to HNF-Netv2 by adding inter-scale and intra-scale semantic discrimination enhancing blocks to further exploit global semantic discrimination for the obtained high-resolution features. We trained and evaluated our HNF-Netv2 on the multi-modal Brain Tumor Segmentation Challenge (BraTS) 2021 dataset. The result on the test set shows that our HNF-Netv2 achieved the average Dice scores of 0.878514, 0.872985, and 0.924919, as well as the Hausdorff distances (95%) of 8.9184, 16.2530, and 4.4895 for the enhancing tumor, tumor core, and whole tumor, respectively. Our method won the RSNA 2021 Brain Tumor AI Challenge Prize (Segmentation Task), which ranks 8th out of all 1250 submitted results.
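The Dice scores quoted above follow the standard overlap measure 2|A ∩ B| / (|A| + |B|). The sketch below computes it for flat binary masks; it is not the BraTS evaluation code, and the function name `dice_score`, the convention of returning 1.0 when both masks are empty, and the sample masks are assumptions for illustration.

```python
def dice_score(pred, target):
    """Dice coefficient between two binary masks given as flat lists of 0/1."""
    intersection = sum(p * t for p, t in zip(pred, target))
    total = sum(pred) + sum(target)
    if total == 0:
        return 1.0  # assumed convention: two empty masks count as a perfect match
    return 2.0 * intersection / total


# Hypothetical 4-voxel masks for one tumor sub-region.
pred = [1, 1, 0, 0]
target = [1, 0, 0, 0]
print(dice_score(pred, target))
```

Here one voxel overlaps out of three labeled in total, giving 2 * 1 / 3, or about 0.667.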

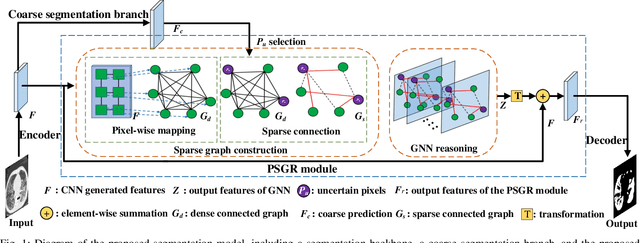

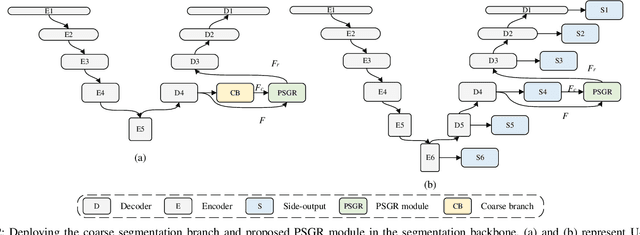

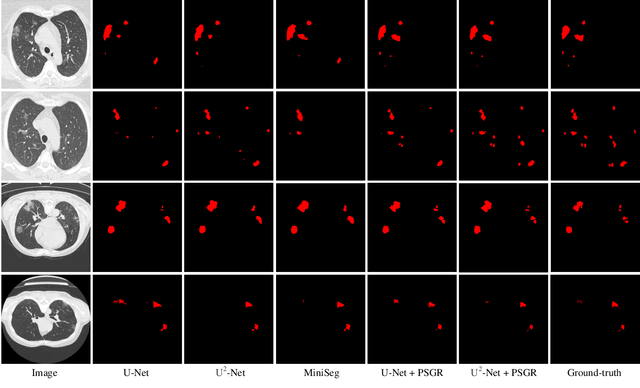

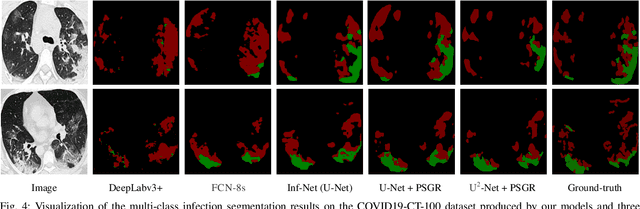

PSGR: Pixel-wise Sparse Graph Reasoning for COVID-19 Pneumonia Segmentation in CT Images

Aug 09, 2021

Abstract:Automated and accurate segmentation of the infected regions in computed tomography (CT) images is critical for the prediction of the pathological stage and treatment response of COVID-19. Several deep convolutional neural networks (DCNNs) have been designed for this task, whose performance, however, tends to be suppressed by their limited local receptive fields and insufficient global reasoning ability. In this paper, we propose a pixel-wise sparse graph reasoning (PSGR) module and insert it into a segmentation network to enhance the modeling of long-range dependencies for COVID-19 infected region segmentation in CT images. In the PSGR module, a graph is first constructed by projecting each pixel on a node based on the features produced by the segmentation backbone, and then converted into a sparsely-connected graph by keeping only K strongest connections to each uncertain pixel. The long-range information reasoning is performed on the sparsely-connected graph to generate enhanced features. The advantages of this module are two-fold: (1) the pixel-wise mapping strategy not only avoids imprecise pixel-to-node projections but also preserves the inherent information of each pixel for global reasoning; and (2) the sparsely-connected graph construction results in effective information retrieval and reduction of the noise propagation. The proposed solution has been evaluated against four widely-used segmentation models on three public datasets. The results show that the segmentation model equipped with our PSGR module can effectively segment COVID-19 infected regions in CT images, outperforming all other competing models.
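The sparsification step described in the abstract, keeping only the K strongest connections per node, can be sketched on a small dense affinity matrix as follows. This is a simplified illustration: it sparsifies every row rather than only the uncertain pixels, and the function name `keep_top_k` and the sample affinities are assumptions, not the paper's implementation.

```python
def keep_top_k(affinity, k):
    """Zero out all but the k largest entries in each row of an affinity matrix."""
    sparse = []
    for row in affinity:
        # Indices of the k strongest connections for this node.
        order = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        keep = set(order[:k])
        sparse.append([v if j in keep else 0.0 for j, v in enumerate(row)])
    return sparse


# Hypothetical affinities between 2 query pixels and 3 candidate nodes.
affinity = [
    [0.1, 0.9, 0.5],
    [0.3, 0.2, 0.8],
]
sparse_graph = keep_top_k(affinity, k=1)
print(sparse_graph)
```

Dropping weak edges in this way limits how much noise from loosely related pixels can propagate during graph reasoning, which is the benefit the abstract attributes to the sparsely-connected graph.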

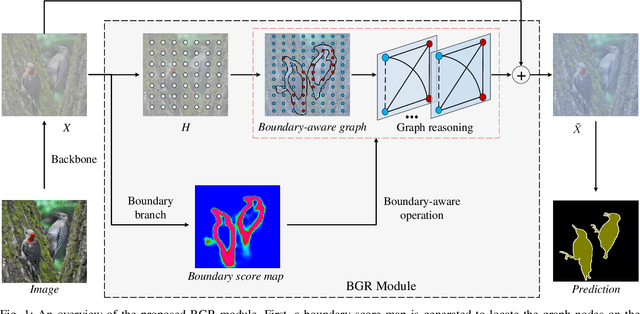

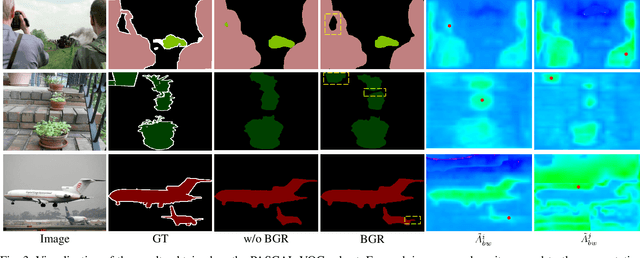

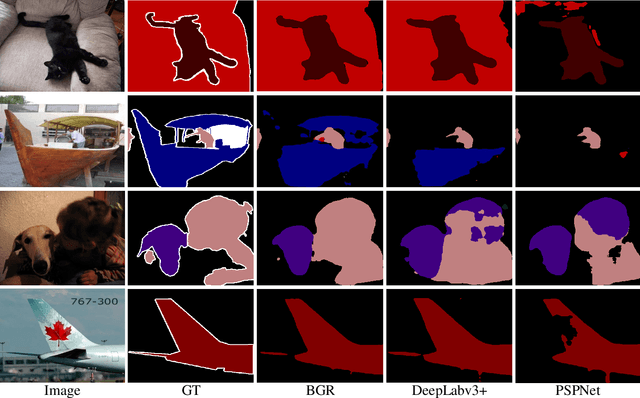

Boundary-aware Graph Reasoning for Semantic Segmentation

Aug 09, 2021

Abstract:In this paper, we propose a Boundary-aware Graph Reasoning (BGR) module to learn long-range contextual features for semantic segmentation. Rather than directly constructing the graph from the backbone features, our BGR module explores a reasonable way to incorporate erroneously segmented regions into graph construction. Motivated by the fact that most hard-to-segment pixels are distributed along boundary regions, our BGR module uses the boundary score map as prior knowledge to intensify the graph node connections and thereby guide the graph reasoning to focus on boundary regions. In addition, we employ an efficient graph convolution implementation to reduce the computational cost, which benefits the integration of our BGR module into current segmentation backbones. Extensive experiments on three challenging segmentation benchmarks demonstrate the effectiveness of our proposed BGR module for semantic segmentation.
