Weiqi Chen

Utilizing Strategic Pre-training to Reduce Overfitting: Baguan -- A Pre-trained Weather Forecasting Model

May 20, 2025

Abstract:Weather forecasting has long posed a significant challenge for humanity. While recent AI-based models have surpassed traditional numerical weather prediction (NWP) methods in global forecasting tasks, overfitting remains a critical issue due to the limited availability of real-world weather data spanning only a few decades. Unlike fields like computer vision or natural language processing, where data abundance can mitigate overfitting, weather forecasting demands innovative strategies to address this challenge with existing data. In this paper, we explore pre-training methods for weather forecasting, finding that selecting an appropriately challenging pre-training task introduces locality bias, effectively mitigating overfitting and enhancing performance. We introduce Baguan, a novel data-driven model for medium-range weather forecasting, built on a Siamese Autoencoder pre-trained in a self-supervised manner and fine-tuned for different lead times. Experimental results show that Baguan outperforms traditional methods, delivering more accurate forecasts. Additionally, the pre-trained Baguan demonstrates robust overfitting control and excels in downstream tasks, such as subseasonal-to-seasonal (S2S) modeling and regional forecasting, after fine-tuning.

GraphSubDetector: Time Series Subsequence Anomaly Detection via Density-Aware Adaptive Graph Neural Network

Nov 26, 2024

Abstract:Time series subsequence anomaly detection is an important task in a large variety of real-world applications ranging from health monitoring to AIOps, and is challenging due to the following reasons: 1) how to effectively learn complex dynamics and dependencies in time series; 2) diverse and complicated anomalous subsequences as well as the inherent variance and noise of normal patterns; 3) how to determine the proper subsequence length for effective detection, which is a required parameter for many existing algorithms. In this paper, we present a novel approach to subsequence anomaly detection, namely GraphSubDetector. First, it adaptively learns the appropriate subsequence length with a length selection mechanism that highlights the characteristics of both normal and anomalous patterns. Second, we propose a density-aware adaptive graph neural network (DAGNN), which can generate further robust representations against variance of normal data for anomaly detection by message passing between subsequences. The experimental results demonstrate the effectiveness of the proposed algorithm, which achieves superior performance on multiple time series anomaly benchmark datasets compared to state-of-the-art algorithms.

Evolving Multi-Scale Normalization for Time Series Forecasting under Distribution Shifts

Sep 29, 2024

Abstract:Complex distribution shifts are the main obstacle to achieving accurate long-term time series forecasting. Several efforts have been conducted to capture the distribution characteristics and propose adaptive normalization techniques to alleviate the influence of distribution shifts. However, these methods neglect the intricate distribution dynamics observed from various scales and the evolving functions of distribution dynamics and normalized mapping relationships. To this end, we propose a novel model-agnostic Evolving Multi-Scale Normalization (EvoMSN) framework to tackle the distribution shift problem. Flexible normalization and denormalization are proposed based on the multi-scale statistics prediction module and adaptive ensembling. An evolving optimization strategy is designed to update the forecasting model and statistics prediction module collaboratively to track the shifting distributions. We evaluate the effectiveness of EvoMSN in improving the performance of five mainstream forecasting methods on benchmark datasets and also show its superiority compared to existing advanced normalization and online learning approaches. The code is publicly available at https://github.com/qindalin/EvoMSN.

Addressing Concept Shift in Online Time Series Forecasting: Detect-then-Adapt

Mar 22, 2024

Abstract:Online updating of time series forecasting models aims to tackle the challenge of concept drift by adjusting forecasting models based on streaming data. While numerous algorithms have been developed, most of them focus on model design and updating. In practice, many of these methods struggle with continuous performance regression in the face of accumulated concept drifts over time. To address this limitation, we present a novel approach, Concept Drift Detection anD Adaptation (D3A), that first detects drifting concepts and then aggressively adapts the current model to the drifted concepts for rapid adaptation. To best harness the utility of historical data for model adaptation, we propose a data augmentation strategy introducing Gaussian noise into existing training instances. It helps mitigate the data distribution gap, a critical factor contributing to train-test performance inconsistency. The significance of our data augmentation process is verified by our theoretical analysis. Our empirical studies across six datasets demonstrate the effectiveness of D3A in improving model adaptation capability. Notably, compared to a simple Temporal Convolutional Network (TCN) baseline, D3A reduces the average Mean Squared Error (MSE) by 43.9%. For the state-of-the-art (SOTA) model, the MSE is reduced by 33.3%.
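The augmentation idea mentioned in this abstract, adding Gaussian noise to existing training instances, can be illustrated with a minimal sketch. This is not the D3A implementation: the function name `augment_with_gaussian_noise`, the parameters `sigma` and `copies`, and the toy data are all assumptions made for illustration, and the abstract does not say how the noise scale is chosen.

```python
import random


def augment_with_gaussian_noise(instances, sigma=0.1, copies=1, seed=0):
    """Return the original instances plus `copies` noisy variants of each.

    Hypothetical helper: `sigma` is the standard deviation of the
    zero-mean Gaussian noise added independently to every value.
    """
    rng = random.Random(seed)  # seeded for reproducibility
    augmented = [list(x) for x in instances]  # keep the originals first
    for _ in range(copies):
        for x in instances:
            augmented.append([v + rng.gauss(0.0, sigma) for v in x])
    return augmented


# Toy historical windows (assumed data, not from the paper).
history = [[1.0, 2.0, 3.0], [2.0, 3.0, 4.0]]
augmented = augment_with_gaussian_noise(history, sigma=0.05, copies=2)
```

Here `augmented` holds the two original windows followed by four perturbed ones; a model adapted on it sees slightly shifted versions of the historical data.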

WeatherGNN: Exploiting Complicated Relationships in Numerical Weather Prediction Bias Correction

Oct 09, 2023

Abstract:Numerical weather prediction (NWP) may be inaccurate or biased due to incomplete atmospheric physical processes, insufficient spatial-temporal resolution, and inherent uncertainty of weather. Previous studies have attempted to correct biases by using handcrafted features and domain knowledge, or by applying general machine learning models naively. They do not fully explore the complicated meteorologic interactions and spatial dependencies in the atmosphere dynamically, which limits their applicability in NWP bias-correction. Specifically, weather factors interact with each other in complex ways, and these interactions can vary regionally. In addition, the interactions between weather factors are further complicated by the spatial dependencies between regions, which are influenced by varied terrain and atmospheric motions. To address these issues, we propose WeatherGNN, an NWP bias-correction method that utilizes Graph Neural Networks (GNN) to learn meteorologic and geographic relationships in a unified framework. Our approach includes a factor-wise GNN that captures meteorological interactions within each grid (a specific location) adaptively, and a fast hierarchical GNN that captures spatial dependencies between grids dynamically. Notably, the fast hierarchical GNN achieves linear complexity with respect to the number of grids, enhancing model efficiency and scalability. Our experimental results on two real-world datasets demonstrate the superiority of WeatherGNN in comparison with other SOTA methods, with an average improvement of 40.50% on RMSE compared to the original NWP.

OneNet: Enhancing Time Series Forecasting Models under Concept Drift by Online Ensembling

Sep 22, 2023

Abstract:Online updating of time series forecasting models aims to address the concept drifting problem by efficiently updating forecasting models based on streaming data. Many algorithms are designed for online time series forecasting, with some exploiting cross-variable dependency while others assume independence among variables. Given every data assumption has its own pros and cons in online time series modeling, we propose Online ensembling Network (OneNet). It dynamically updates and combines two models, with one focusing on modeling the dependency across the time dimension and the other on cross-variate dependency. Our method incorporates a reinforcement learning-based approach into the traditional online convex programming framework, allowing for the linear combination of the two models with dynamically adjusted weights. OneNet addresses the main shortcoming of classical online learning methods that tend to be slow in adapting to the concept drift. Empirical results show that OneNet reduces online forecasting error by more than 50% compared to the State-Of-The-Art (SOTA) method. The code is available at https://github.com/yfzhang114/OneNet.
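The phrase "linear combination of the two models with dynamically adjusted weights" can be illustrated with a classical exponentiated-gradient (Hedge-style) update, a standard online convex programming scheme. This is only a sketch of that classical baseline, not OneNet itself: the reinforcement learning component described in the abstract is omitted, and the function names, the learning rate `lr`, and the toy stream are assumptions.

```python
import math


def update_weights(weights, losses, lr=0.5):
    """Exponentiated-gradient step: shrink each weight by exp(-lr * loss),
    then renormalize so the weights stay on the probability simplex."""
    scaled = [w * math.exp(-lr * l) for w, l in zip(weights, losses)]
    total = sum(scaled)
    return [s / total for s in scaled]


def combine(weights, forecasts):
    """Linear combination of the individual forecasts."""
    return sum(w * f for w, f in zip(weights, forecasts))


# Toy stream of (target, forecast_a, forecast_b); assumed data.
stream = [(1.0, 1.1, 2.0), (2.0, 2.1, 0.5), (3.0, 2.9, 4.5)]

weights = [0.5, 0.5]  # start with equal trust in both forecasters
for target, fa, fb in stream:
    prediction = combine(weights, [fa, fb])            # forecast first
    losses = [(fa - target) ** 2, (fb - target) ** 2]  # then observe losses
    weights = update_weights(weights, losses)          # and re-weight
```

In this toy stream the first forecaster is consistently closer to the target, so its weight grows at every step while the weights continue to sum to one.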

Transformers in Time Series: A Survey

Mar 07, 2022

Abstract:Transformers have achieved superior performance in many tasks in natural language processing and computer vision, which has also sparked great interest in the time series community. Among multiple advantages of Transformers, the ability to capture long-range dependencies and interactions is especially attractive for time series modeling, leading to exciting progress in various time series applications. In this paper, we systematically review Transformer schemes for time series modeling by highlighting their strengths as well as limitations through a new taxonomy to summarize existing time series Transformers from two perspectives. From the perspective of network modifications, we summarize the adaptations of module level and architecture level of the time series Transformers. From the perspective of applications, we categorize time series Transformers based on common tasks including forecasting, anomaly detection, and classification. Empirically, we perform robust analysis, model size analysis, and seasonal-trend decomposition analysis to study how Transformers perform in time series. Finally, we discuss and suggest future directions to provide useful research guidance. A corresponding resource list that will be continuously updated can be found in the GitHub repository. To the best of our knowledge, this paper is the first work to comprehensively and systematically summarize the recent advances of Transformers for modeling time series data. We hope this survey will ignite further research interest in time series Transformers.

Learning from Multiple Time Series: A Deep Disentangled Approach to Diversified Time Series Forecasting

Nov 09, 2021

Abstract:Time series forecasting is a significant problem in many applications, e.g., financial predictions and business optimization. Modern datasets can have multiple correlated time series, which are often generated with global (shared) regularities and local (specific) dynamics. In this paper, we seek to tackle such forecasting problems with DeepDGL, a deep forecasting model that disentangles dynamics into global and local temporal patterns. DeepDGL employs an encoder-decoder architecture, consisting of two encoders to learn global and local temporal patterns, respectively, and a decoder to make multi-step forecasting. Specifically, to model complicated global patterns, the vector quantization (VQ) module is introduced, allowing the global feature encoder to learn a shared codebook among all time series. To model diversified and heterogeneous local patterns, an adaptive parameter generation module enhanced by the contrastive multi-horizon coding (CMC) is proposed to generate the parameters of the local feature encoder for each individual time series, which maximizes the mutual information between the series-specific context variable and the long/short-term representations of the corresponding time series. Our experiments on several real-world datasets show that DeepDGL outperforms existing state-of-the-art models.

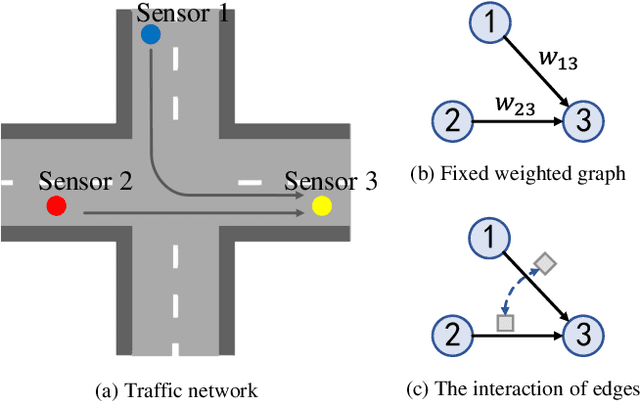

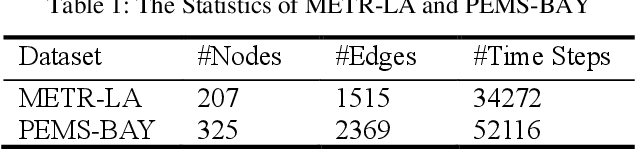

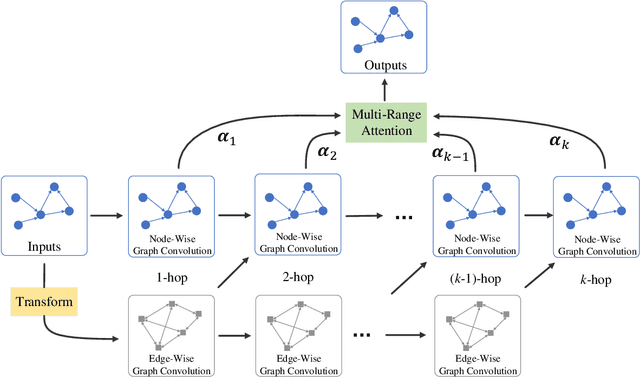

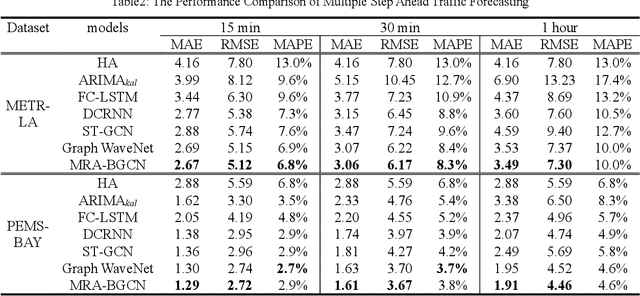

Multi-Range Attentive Bicomponent Graph Convolutional Network for Traffic Forecasting

Nov 27, 2019

Abstract:Traffic forecasting is of great importance to transportation management and public safety, and very challenging due to the complicated spatial-temporal dependency and essential uncertainty brought about by the road network and traffic conditions. Latest studies mainly focus on modeling the spatial dependency by utilizing graph convolutional networks (GCNs) throughout a fixed weighted graph. However, edges, i.e., the correlations between pair-wise nodes, are much more complicated and interact with each other. In this paper, we propose the Multi-Range Attentive Bicomponent GCN (MRA-BGCN), a novel deep learning model for traffic forecasting. We first build the node-wise graph according to the road network distance and the edge-wise graph according to various edge interaction patterns. Then, we implement the interactions of both nodes and edges using bicomponent graph convolution. The multi-range attention mechanism is introduced to aggregate information in different neighborhood ranges and automatically learn the importance of different ranges. Extensive experiments on two real-world road network traffic datasets, METR-LA and PEMS-BAY, show that our MRA-BGCN achieves the state-of-the-art results.
