Weijie Gan

Plug-and-Play Posterior Sampling for Blind Inverse Problems

May 28, 2025

Abstract: We introduce Blind Plug-and-Play Diffusion Models (Blind-PnPDM) as a novel framework for solving blind inverse problems where both the target image and the measurement operator are unknown. Unlike conventional methods that rely on explicit priors or separate parameter estimation, our approach performs posterior sampling by recasting the problem into an alternating Gaussian denoising scheme. We leverage two diffusion models as learned priors: one to capture the distribution of the target image and another to characterize the parameters of the measurement operator. This PnP integration of diffusion models ensures flexibility and ease of adaptation. Our experiments on blind image deblurring show that Blind-PnPDM outperforms state-of-the-art methods in terms of both quantitative metrics and visual fidelity. Our results highlight the effectiveness of treating blind inverse problems as a sequence of denoising subproblems while harnessing the expressive power of diffusion-based priors.

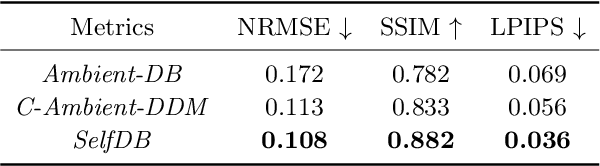

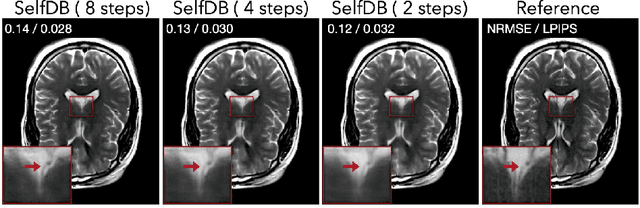

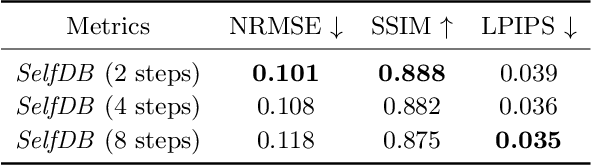

A Self-supervised Diffusion Bridge for MRI Reconstruction

Jan 06, 2025

Abstract: Diffusion bridges (DBs) are a class of diffusion models that enable faster sampling by interpolating between two paired image distributions. Training traditional DBs for image reconstruction requires high-quality reference images, which limits their applicability to settings where such references are unavailable. We propose SelfDB as a novel self-supervised method for training DBs directly on available noisy measurements without any high-quality reference images. SelfDB formulates the diffusion process by further sub-sampling the available measurements two additional times and training a neural network to reverse the corresponding degradation process, using the available measurements as the training targets. We validate SelfDB on compressed sensing MRI, showing its superior performance compared to denoising diffusion models.

A Generalizable 3D Diffusion Framework for Low-Dose and Few-View Cardiac SPECT

Dec 21, 2024

Abstract: Myocardial perfusion imaging using SPECT is widely utilized to diagnose coronary artery diseases, but image quality can be negatively affected in low-dose and few-view acquisition settings. Although various deep learning methods have been introduced to improve image quality from low-dose or few-view SPECT data, previous approaches often fail to generalize across different acquisition settings, limiting their applicability in practice. This work introduces DiffSPECT-3D, a diffusion framework for 3D cardiac SPECT imaging that effectively adapts to different acquisition settings without requiring further network re-training or fine-tuning. Using both image and projection data, a consistency strategy is proposed to ensure that diffusion sampling at each step aligns with the low-dose/few-view projection measurements, the image data, and the scanner geometry, thus enabling generalization to different low-dose/few-view settings. Incorporating anatomical spatial information from CT and a total variation constraint, we propose a 2.5D conditional strategy that allows DiffSPECT-3D to observe 3D contextual information from the entire image volume, addressing the 3D memory issues in diffusion models. We extensively evaluated the proposed method on 1,325 clinical 99mTc tetrofosmin stress/rest studies from 795 patients. Each study was reconstructed into 5 different low-count and 5 different few-view levels for model evaluation, ranging from 1% to 50% and from 1 view to 9 views, respectively. Validated against cardiac catheterization results and diagnostic comments from nuclear cardiologists, the presented results show the potential to achieve low-dose and few-view SPECT imaging without compromising clinical performance. Additionally, DiffSPECT-3D could be directly applied to full-dose SPECT images to further improve image quality, especially in a low-dose stress-first cardiac SPECT imaging protocol.

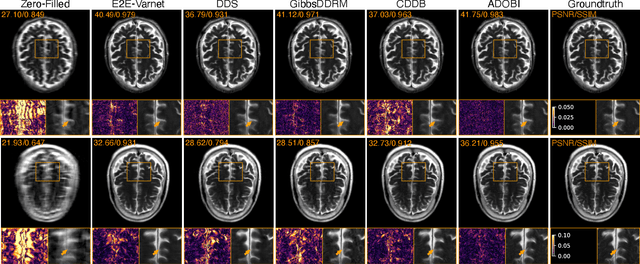

ADOBI: Adaptive Diffusion Bridge For Blind Inverse Problems with Application to MRI Reconstruction

Nov 25, 2024

Abstract: Diffusion bridges (DB) have emerged as a promising alternative to diffusion models for imaging inverse problems, achieving faster sampling by directly bridging low- and high-quality image distributions. While incorporating measurement consistency has been shown to improve performance, existing DB methods fail to maintain this consistency in blind inverse problems, where the forward model is unknown. To address this limitation, we introduce ADOBI (Adaptive Diffusion Bridge for Inverse Problems), a novel framework that adaptively calibrates the unknown forward model to enforce measurement consistency throughout sampling iterations. Our adaptation strategy allows ADOBI to achieve high-quality parallel magnetic resonance imaging (PMRI) reconstruction in only 5-10 steps. Our numerical results show that ADOBI consistently delivers state-of-the-art performance, and further advances the Pareto frontier for the perception-distortion trade-off.

Stochastic Deep Restoration Priors for Imaging Inverse Problems

Oct 02, 2024

Abstract: Deep neural networks trained as image denoisers are widely used as priors for solving imaging inverse problems. While Gaussian denoising is thought sufficient for learning image priors, we show that priors from deep models pre-trained as more general restoration operators can perform better. We introduce Stochastic deep Restoration Priors (ShaRP), a novel method that leverages an ensemble of such restoration models to regularize inverse problems. ShaRP improves upon methods using Gaussian denoiser priors by better handling structured artifacts and enabling self-supervised training even without fully sampled data. We prove ShaRP minimizes an objective function involving a regularizer derived from the score functions of minimum mean square error (MMSE) restoration operators, and theoretically analyze its convergence. Empirically, ShaRP achieves state-of-the-art performance on tasks such as magnetic resonance imaging reconstruction and single-image super-resolution, surpassing both denoiser- and diffusion-model-based methods without requiring retraining.

Pseudo-MRI-Guided PET Image Reconstruction Method Based on a Diffusion Probabilistic Model

Mar 26, 2024

Abstract: Anatomically guided PET reconstruction using MRI information has been shown to have the potential to improve PET image quality. However, these improvements are limited to PET scans with paired MRI information. In this work we employed a diffusion probabilistic model (DPM) to infer T1-weighted-MRI (deep-MRI) images from FDG-PET brain images. We then use the DPM-generated T1w-MRI to guide the PET reconstruction. The model was trained with brain FDG scans, and tested on datasets containing multiple levels of counts. Deep-MRI images appeared somewhat degraded compared to the acquired MRI images. Regarding PET image quality, volume of interest analysis in different brain regions showed that PET images reconstructed using either the acquired or the deep-MRI images had improved image quality compared to OSEM. The same conclusions were reached when analysing the decimated datasets. A subjective evaluation performed by two physicians confirmed that OSEM scored consistently worse than the MRI-guided PET images and no significant differences were observed between the MRI-guided PET images. This proof of concept shows that it is possible to infer DPM-based MRI imagery to guide the PET reconstruction, enabling the possibility of changing reconstruction parameters such as the strength of the prior on anatomically guided PET reconstruction in the absence of MRI.

Convergence of Nonconvex PnP-ADMM with MMSE Denoisers

Nov 30, 2023

Abstract: Plug-and-Play Alternating Direction Method of Multipliers (PnP-ADMM) is a widely used algorithm for solving inverse problems by integrating physical measurement models and convolutional neural network (CNN) priors. PnP-ADMM has been theoretically proven to converge for convex data-fidelity terms and nonexpansive CNNs. However, it has been observed that PnP-ADMM often empirically converges even for expansive CNNs. This paper presents a theoretical explanation for the observed stability of PnP-ADMM based on the interpretation of the CNN prior as a minimum mean-squared error (MMSE) denoiser. Our explanation parallels a similar argument recently made for the iterative shrinkage/thresholding algorithm variant of PnP (PnP-ISTA) and relies on the connection between MMSE denoisers and proximal operators. We also numerically evaluate the performance gap between PnP-ADMM using a nonexpansive DnCNN denoiser and an expansive DRUNet denoiser, thus motivating the use of expansive CNNs.
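For readers unfamiliar with the algorithm named in this abstract, the generic PnP-ADMM iteration can be sketched as below. This is a minimal illustration rather than the paper's code: it assumes a least-squares data-fidelity term with a small dense matrix `A`, and it uses a soft-thresholding function as a stand-in for the CNN denoiser. The names `pnp_admm`, `soft_threshold`, `gamma`, and `num_iters` are hypothetical and chosen only for this example.

```python
import numpy as np


def soft_threshold(v, tau):
    """Toy 'denoiser' (proximal operator of tau * ||.||_1), standing in for a CNN."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def pnp_admm(y, A, denoiser, gamma=1.0, num_iters=50):
    """Sketch of PnP-ADMM for the data-fidelity term g(x) = 0.5 * ||A x - y||^2.

    The prior step of ADMM is replaced by an arbitrary denoiser, which is
    the 'plug-and-play' idea. Assumes A is a small dense matrix.
    """
    n = A.shape[1]
    # Precompute the inverse used by the data-fidelity proximal step.
    H = np.linalg.inv(A.T @ A + np.eye(n) / gamma)
    Aty = A.T @ y
    x = np.zeros(n)
    z = np.zeros(n)
    s = np.zeros(n)  # scaled dual variable
    for _ in range(num_iters):
        # Data step: argmin_x 0.5*||A x - y||^2 + (1/(2*gamma))*||x - (z - s)||^2
        x = H @ (Aty + (z - s) / gamma)
        # Prior step: the denoiser replaces the proximal operator of the regularizer.
        z = denoiser(x + s)
        # Dual update.
        s = s + x - z
    return z


# Usage: with A = I the iteration should approach soft_threshold(y, 0.5).
A = np.eye(3)
y = np.array([3.0, -0.2, 1.0])
x_hat = pnp_admm(y, A, lambda v: soft_threshold(v, 0.5), gamma=1.0, num_iters=100)
```

Soft-thresholding is itself a proximal operator and is nonexpansive, so this toy case sits inside the classical convergence regime described in the abstract. Swapping in a learned denoiser changes only the `denoiser` argument.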

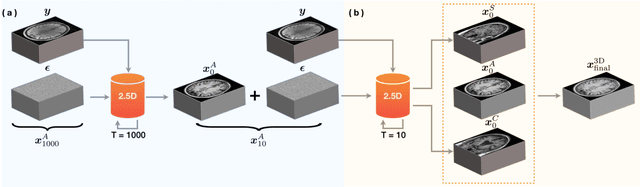

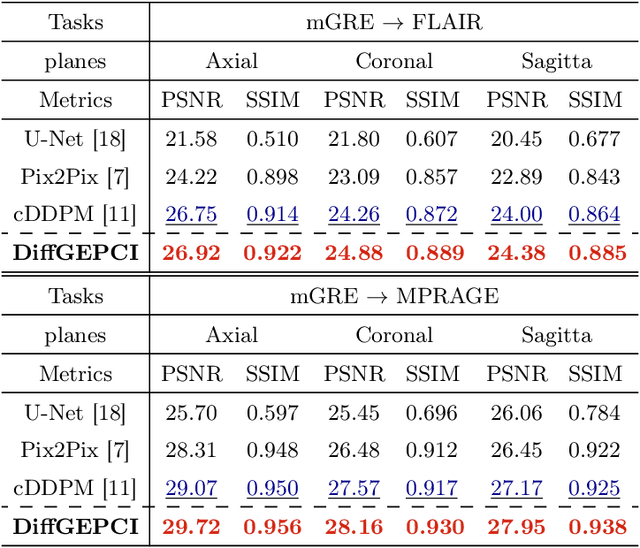

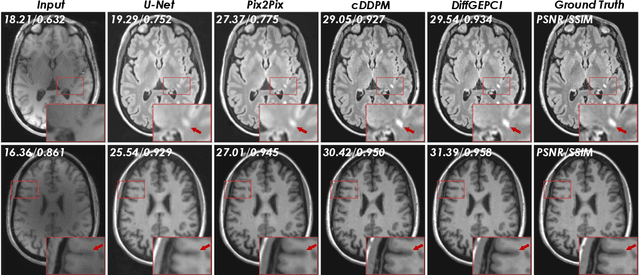

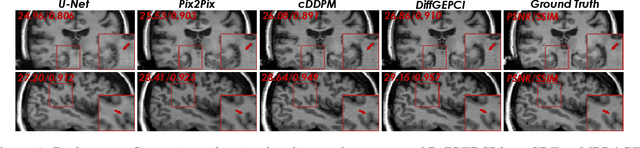

DiffGEPCI: 3D MRI Synthesis from mGRE Signals using 2.5D Diffusion Model

Nov 29, 2023

Abstract: We introduce a new framework called DiffGEPCI for cross-modality generation in magnetic resonance imaging (MRI) using a 2.5D conditional diffusion model. DiffGEPCI can synthesize high-quality Fluid Attenuated Inversion Recovery (FLAIR) and Magnetization Prepared-Rapid Gradient Echo (MPRAGE) images, without acquiring corresponding measurements, by leveraging multi-Gradient-Recalled Echo (mGRE) MRI signals as conditional inputs. DiffGEPCI operates in a two-step fashion: it initially estimates a 3D volume slice-by-slice using the axial plane and subsequently applies a refinement algorithm (referred to as 2.5D) to enhance the quality of the coronal and sagittal planes. Experimental validation on real mGRE data shows that DiffGEPCI achieves excellent performance, surpassing generative adversarial networks (GANs) and traditional diffusion models.

FLAIR: A Conditional Diffusion Framework with Applications to Face Video Restoration

Nov 26, 2023

Abstract: Face video restoration (FVR) is a challenging but important problem where one seeks to recover perceptually realistic face videos from a low-quality input. While diffusion probabilistic models (DPMs) have been shown to achieve remarkable performance for face image restoration, they often fail to preserve temporally coherent, high-quality videos, compromising the fidelity of reconstructed faces. We present a new conditional diffusion framework called FLAIR for FVR. FLAIR ensures temporal consistency across frames in a computationally efficient fashion by converting a traditional image DPM into a video DPM. The proposed conversion uses a recurrent video refinement layer and a temporal self-attention at different scales. FLAIR also uses a conditional iterative refinement process to balance the perceptual and distortion quality during inference. This process consists of two key components: a data-consistency module that analytically ensures that the generated video precisely matches its degraded observation and a coarse-to-fine image enhancement module specifically for facial regions. Our extensive experiments show the superiority of FLAIR over the current state-of-the-art (SOTA) for video super-resolution, deblurring, JPEG restoration, and space-time frame interpolation on two high-quality face video datasets.

Dose-aware Diffusion Model for 3D Ultra Low-dose PET Imaging

Nov 07, 2023

Abstract: As PET imaging is accompanied by substantial radiation exposure and cancer risk, reducing radiation dose in PET scans is an important topic. Recently, diffusion models have emerged as the new state-of-the-art generative model to generate high-quality samples and have demonstrated strong potential for various tasks in medical imaging. However, it is difficult to extend diffusion models to 3D image reconstruction due to the memory burden. Directly stacking 2D slices together to create 3D image volumes would result in severe inconsistencies between slices. Previous works either applied a penalty term along the z-axis to remove inconsistencies or reconstructed the 3D image volumes with 2 pre-trained perpendicular 2D diffusion models. Nonetheless, these previous methods failed to produce satisfactory results in challenging cases for PET image denoising. In addition to administered dose, the noise levels in PET images are affected by several other factors in clinical settings, such as scan time, patient size, and weight. Therefore, a method to simultaneously denoise PET images with different noise levels is needed. Here, we propose a dose-aware diffusion model for 3D low-dose PET imaging (DDPET) to address these challenges. The proposed DDPET method was tested on 295 patients from three different medical institutions globally with different low-dose levels. These patient data were acquired on three different commercial PET scanners, including Siemens Vision Quadra, Siemens mCT, and United Imaging Healthcare uExplorer. The proposed method demonstrated superior performance over previously proposed diffusion models for 3D imaging problems as well as models proposed for noise-aware medical image denoising. Code is available at: xxx.
