Vincent Fortuin

Data-Driven Discovery of Feature Groups in Clinical Time Series

Nov 11, 2025

Abstract:Clinical time series data are critical for patient monitoring and predictive modeling. These time series are typically multivariate and often comprise hundreds of heterogeneous features from different data sources. The grouping of features based on similarity and relevance to the prediction task has been shown to enhance the performance of deep learning architectures. However, defining these groups a priori using only semantic knowledge is challenging, even for domain experts. To address this, we propose a novel method that learns feature groups by clustering weights of feature-wise embedding layers. This approach seamlessly integrates into standard supervised training and discovers the groups that directly improve downstream performance on clinically relevant tasks. We demonstrate that our method outperforms static clustering approaches on synthetic data and achieves performance comparable to expert-defined groups on real-world medical data. Moreover, the learned feature groups are clinically interpretable, enabling data-driven discovery of task-relevant relationships between variables.

ProSpero: Active Learning for Robust Protein Design Beyond Wild-Type Neighborhoods

May 28, 2025

Abstract:Designing protein sequences of both high fitness and novelty is a challenging task in data-efficient protein engineering. Exploration beyond wild-type neighborhoods often leads to biologically implausible sequences or relies on surrogate models that lose fidelity in novel regions. Here, we propose ProSpero, an active learning framework in which a frozen pre-trained generative model is guided by a surrogate updated from oracle feedback. By integrating fitness-relevant residue selection with biologically-constrained Sequential Monte Carlo sampling, our approach enables exploration beyond wild-type neighborhoods while preserving biological plausibility. We show that our framework remains effective even when the surrogate is misspecified. ProSpero consistently outperforms or matches existing methods across diverse protein engineering tasks, retrieving sequences of both high fitness and novelty.

Sparse Gaussian Neural Processes

Apr 02, 2025

Abstract:Despite significant recent advances in probabilistic meta-learning, it is common for practitioners to avoid using deep learning models due to a comparative lack of interpretability. Instead, many practitioners simply use non-meta-models such as Gaussian processes with interpretable priors, and conduct the tedious procedure of training their model from scratch for each task they encounter. While this is justifiable for tasks with a limited number of data points, the cubic computational cost of exact Gaussian process inference renders this prohibitive when each task has many observations. To remedy this, we introduce a family of models that meta-learn sparse Gaussian process inference. Not only does this enable rapid prediction on new tasks with sparse Gaussian processes, but since our models have clear interpretations as members of the neural process family, it also allows manual elicitation of priors in a neural process for the first time. In meta-learning regimes for which the number of observed tasks is small or for which expert domain knowledge is available, this offers a crucial advantage.
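As a rough illustration of the cost argument in this abstract, the sketch below contrasts an exact Gaussian process posterior mean, whose Cholesky factorisation scales cubically in the number of observations n, with a standard inducing-point (DTC) approximation that scales as O(n m^2) for m inducing inputs. This is not the paper's meta-learned model; the function names, the RBF kernel, and the noise variance are assumptions chosen only for the example.

```python
import numpy as np

def rbf(a, b, lengthscale=1.0):
    """Squared-exponential kernel between two 1-D input arrays."""
    d = a[:, None] - b[None, :]
    return np.exp(-0.5 * (d / lengthscale) ** 2)

def exact_gp_mean(x, y, x_new, noise=0.1):
    """Exact GP posterior mean; the Cholesky factorisation costs O(n^3)."""
    K = rbf(x, x) + noise * np.eye(len(x))  # noise is a variance (assumed value)
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, y))
    return rbf(x_new, x) @ alpha

def sparse_gp_mean(x, y, x_new, z, noise=0.1, jitter=1e-10):
    """Inducing-point (DTC) posterior mean; costs O(n m^2) for m = len(z)."""
    Kuf = rbf(z, x)
    A = noise * rbf(z, z) + Kuf @ Kuf.T + jitter * np.eye(len(z))
    return rbf(x_new, z) @ np.linalg.solve(A, Kuf @ y)

# Toy data (hypothetical): noisy sine observations on a small grid.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 7.0, 8)
y = np.sin(x) + 0.1 * rng.standard_normal(8)
x_new = np.array([0.5, 3.3, 6.1])

full = exact_gp_mean(x, y, x_new)
approx = sparse_gp_mean(x, y, x_new, z=x[::2])  # 4 inducing inputs
same = sparse_gp_mean(x, y, x_new, z=x)         # inducing inputs = all data
```

When the inducing inputs coincide with the training inputs, the DTC mean reduces algebraically to the exact mean, which makes a convenient sanity check; with fewer inducing inputs it is only an approximation.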

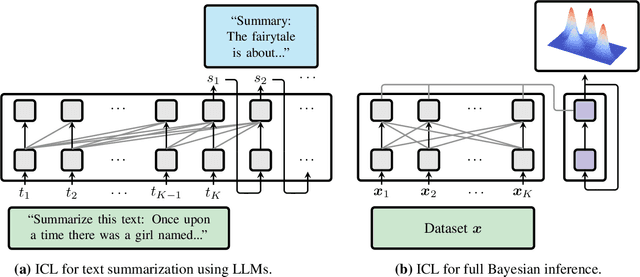

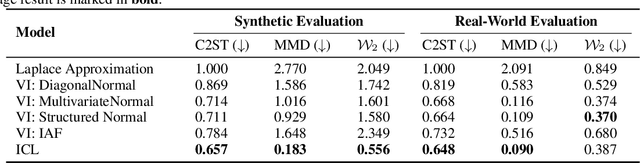

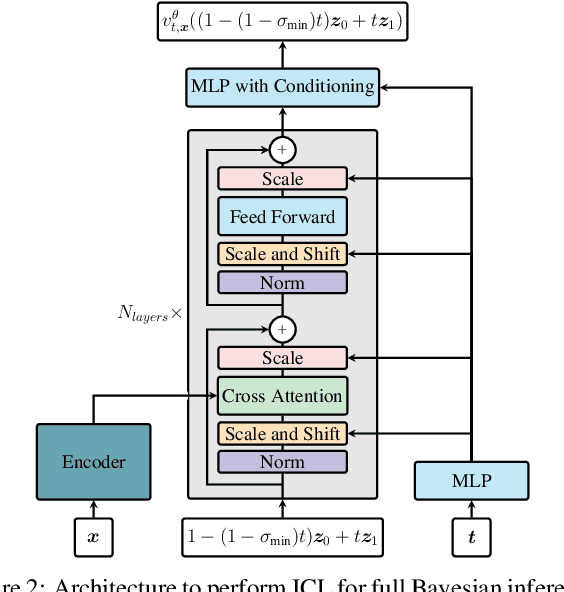

Can Transformers Learn Full Bayesian Inference in Context?

Jan 28, 2025

Abstract:Transformers have emerged as the dominant architecture in the field of deep learning, with a broad range of applications and remarkable in-context learning (ICL) capabilities. While not yet fully understood, ICL has already proved to be an intriguing phenomenon, allowing transformers to learn in context -- without requiring further training. In this paper, we further advance the understanding of ICL by demonstrating that transformers can perform full Bayesian inference for commonly used statistical models in context. More specifically, we introduce a general framework that builds on ideas from prior fitted networks and continuous normalizing flows which enables us to infer complex posterior distributions for methods such as generalized linear models and latent factor models. Extensive experiments on real-world datasets demonstrate that our ICL approach yields posterior samples that are similar in quality to state-of-the-art MCMC or variational inference methods not operating in context.

OneProt: Towards Multi-Modal Protein Foundation Models

Nov 07, 2024

Abstract:Recent AI advances have enabled multi-modal systems to model and translate diverse information spaces. Extending beyond text and vision, we introduce OneProt, a multi-modal AI for proteins that integrates structural, sequence, alignment, and binding site data. Using the ImageBind framework, OneProt aligns the latent spaces of modality encoders along protein sequences. It demonstrates strong performance in retrieval tasks and surpasses state-of-the-art methods in various downstream tasks, including metal ion binding classification, gene-ontology annotation, and enzyme function prediction. This work expands multi-modal capabilities in protein models, paving the way for applications in drug discovery, biocatalytic reaction planning, and protein engineering.

Stein Variational Newton Neural Network Ensembles

Nov 04, 2024

Abstract:Deep neural network ensembles are powerful tools for uncertainty quantification, which have recently been re-interpreted from a Bayesian perspective. However, current methods inadequately leverage second-order information of the loss landscape, despite the recent availability of efficient Hessian approximations. We propose a novel approximate Bayesian inference method that modifies deep ensembles to incorporate Stein Variational Newton updates. Our approach uniquely integrates scalable modern Hessian approximations, achieving faster convergence and more accurate posterior distribution approximations. We validate the effectiveness of our method on diverse regression and classification tasks, demonstrating superior performance with a significantly reduced number of training epochs compared to existing ensemble-based methods, while enhancing uncertainty quantification and robustness against overfitting.

Parameter-efficient Bayesian Neural Networks for Uncertainty-aware Depth Estimation

Sep 25, 2024

Abstract:State-of-the-art computer vision tasks, like monocular depth estimation (MDE), rely heavily on large, modern Transformer-based architectures. However, their application in safety-critical domains demands reliable predictive performance and uncertainty quantification. While Bayesian neural networks provide a conceptually simple approach to serve those requirements, they suffer from the high dimensionality of the parameter space. Parameter-efficient fine-tuning (PEFT) methods, in particular low-rank adaptations (LoRA), have emerged as a popular strategy for adapting large-scale models to down-stream tasks by performing parameter inference on lower-dimensional subspaces. In this work, we investigate the suitability of PEFT methods for subspace Bayesian inference in large-scale Transformer-based vision models. We show that, indeed, combining BitFit, DiffFit, LoRA, and CoLoRA, a novel LoRA-inspired PEFT method, with Bayesian inference enables more robust and reliable predictive performance in MDE.

FSP-Laplace: Function-Space Priors for the Laplace Approximation in Bayesian Deep Learning

Jul 18, 2024

Abstract:Laplace approximations are popular techniques for endowing deep networks with epistemic uncertainty estimates as they can be applied without altering the predictions of the neural network, and they scale to large models and datasets. While the choice of prior strongly affects the resulting posterior distribution, computational tractability and lack of interpretability of weight space typically limit the Laplace approximation to isotropic Gaussian priors, which are known to cause pathological behavior as depth increases. As a remedy, we directly place a prior on function space. More precisely, since Lebesgue densities do not exist on infinite-dimensional function spaces, we have to recast training as finding the so-called weak mode of the posterior measure under a Gaussian process (GP) prior restricted to the space of functions representable by the neural network. Through the GP prior, one can express structured and interpretable inductive biases, such as regularity or periodicity, directly in function space, while still exploiting the implicit inductive biases that allow deep networks to generalize. After model linearization, the training objective induces a negative log-posterior density to which we apply a Laplace approximation, leveraging highly scalable methods from matrix-free linear algebra. Our method provides improved results where prior knowledge is abundant, e.g., in many scientific inference tasks. At the same time, it stays competitive for black-box regression and classification tasks where neural networks typically excel.

Towards Dynamic Feature Acquisition on Medical Time Series by Maximizing Conditional Mutual Information

Jul 18, 2024

Abstract:Knowing which features of a multivariate time series to measure and when is a key task in medicine, wearables, and robotics. Better acquisition policies can reduce costs while maintaining or even improving the performance of downstream predictors. Inspired by the maximization of conditional mutual information, we propose an approach to train acquirers end-to-end using only the downstream loss. We show that our method outperforms a random acquisition policy, matches a model with an unrestrained budget, but does not yet overtake a static acquisition strategy. We highlight the assumptions and outline avenues for future work.
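For reference, the conditional mutual information mentioned in this abstract has a standard textbook definition, written out below. The notation is an assumption and not taken from the paper: $Y$ is the prediction target, $X_j$ a candidate feature, and $x_O$ the features observed so far. The last line shows a common greedy acquisition rule built on this quantity; it is given only as an illustration, not as the authors' training objective, which the abstract says uses the downstream loss alone.

```latex
\begin{align}
I(Y; X_j \mid x_O)
  &= \mathbb{E}_{p(y,\, x_j \mid x_O)}
     \left[ \log \frac{p(y, x_j \mid x_O)}{p(y \mid x_O)\, p(x_j \mid x_O)} \right] \\
  &= H(Y \mid x_O) - \mathbb{E}_{p(x_j \mid x_O)}\!\left[ H(Y \mid x_O, x_j) \right], \\
j^\star &= \arg\max_{j \notin O} \; I(Y; X_j \mid x_O).
\end{align}
```

The second line reads as the expected reduction in uncertainty about $Y$ from measuring $X_j$, which is why maximizing it is a natural criterion for choosing the next measurement.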

How Useful is Intermittent, Asynchronous Expert Feedback for Bayesian Optimization?

Jun 10, 2024

Abstract:Bayesian optimization (BO) is an integral part of automated scientific discovery -- the so-called self-driving lab -- where human inputs are ideally minimal or at least non-blocking. However, scientists often have strong intuition, and thus human feedback is still useful. Nevertheless, prior works in enhancing BO with expert feedback, such as by incorporating it in an offline or online but blocking (arrives at each BO iteration) manner, are incompatible with the spirit of self-driving labs. In this work, we study whether a small amount of randomly arriving expert feedback that is being incorporated in a non-blocking manner can improve a BO campaign. To this end, we run an additional, independent computing thread on top of the BO loop to handle the feedback-gathering process. The gathered feedback is used to learn a Bayesian preference model that can readily be incorporated into the BO thread, to steer its exploration-exploitation process. Experiments on toy and chemistry datasets suggest that even a small amount of intermittent, asynchronous expert feedback can be useful for improving or constraining BO. This can especially be useful for its implication in improving self-driving labs, e.g. making them more data-efficient and less costly.
