Tianrong Chen

One Layer Is Enough: Adapting Pretrained Visual Encoders for Image Generation

Dec 16, 2025

Abstract:Visual generative models (e.g., diffusion models) typically operate in compressed latent spaces to balance training efficiency and sample quality. In parallel, there has been growing interest in leveraging high-quality pre-trained visual representations, either by aligning them inside VAEs or directly within the generative model. However, adapting such representations remains challenging due to fundamental mismatches between understanding-oriented features and generation-friendly latent spaces. Representation encoders benefit from high-dimensional latents that capture diverse hypotheses for masked regions, whereas generative models favor low-dimensional latents that must faithfully preserve injected noise. This discrepancy has led prior work to rely on complex objectives and architectures. In this work, we propose FAE (Feature Auto-Encoder), a simple yet effective framework that adapts pre-trained visual representations into low-dimensional latents suitable for generation using as little as a single attention layer, while retaining sufficient information for both reconstruction and understanding. The key is to couple two separate deep decoders: one trained to reconstruct the original feature space, and a second that takes the reconstructed features as input for image generation. FAE is generic; it can be instantiated with a variety of self-supervised encoders (e.g., DINO, SigLIP) and plugged into two distinct generative families: diffusion models and normalizing flows. Across class-conditional and text-to-image benchmarks, FAE achieves strong performance. For example, on ImageNet 256x256, our diffusion model with CFG attains a near state-of-the-art FID of 1.29 (800 epochs) and 1.70 (80 epochs). Without CFG, FAE reaches the state-of-the-art FID of 1.48 (800 epochs) and 2.08 (80 epochs), demonstrating both high quality and fast learning.

Optimal Control Theoretic Neural Optimizer: From Backpropagation to Dynamic Programming

Oct 15, 2025

Abstract:Optimization of deep neural networks (DNNs) has been a driving force in the advancement of modern machine learning and artificial intelligence. With DNNs characterized by a prolonged sequence of nonlinear propagation, determining their optimal parameters given an objective naturally fits within the framework of Optimal Control Programming. Such an interpretation of DNNs as dynamical systems has proven crucial in offering a theoretical foundation for principled analysis from numerical equations to physics. In parallel to these theoretical pursuits, this paper focuses on an algorithmic perspective. Our motivating observation is the striking algorithmic resemblance between the Backpropagation algorithm for computing gradients in DNNs and the optimality conditions for dynamical systems, expressed through another backward process known as dynamic programming. Consolidating this connection, where Backpropagation admits a variational structure, solving an approximate dynamic programming up to the first-order expansion leads to a new class of optimization methods exploring higher-order expansions of the Bellman equation. The resulting optimizer, termed Optimal Control Theoretic Neural Optimizer (OCNOpt), enables rich algorithmic opportunities, including layer-wise feedback policies, game-theoretic applications, and higher-order training of continuous-time models such as Neural ODEs. Extensive experiments demonstrate that OCNOpt improves upon existing methods in robustness and efficiency while maintaining manageable computational complexity, paving new avenues for principled algorithmic design grounded in dynamical systems and optimal control theory.

Linear Preference Optimization: Decoupled Gradient Control via Absolute Regularization

Aug 20, 2025

Abstract:DPO (Direct Preference Optimization) has become a widely used offline preference optimization algorithm due to its simplicity and training stability. However, DPO is prone to overfitting and collapse. To address these challenges, we propose Linear Preference Optimization (LPO), a novel alignment framework featuring three key innovations. First, we introduce gradient decoupling by replacing the log-sigmoid function with an absolute difference loss, thereby isolating the optimization dynamics. Second, we improve stability through an offset constraint combined with a positive regularization term to preserve the chosen response quality. Third, we implement controllable rejection suppression using gradient separation with straightforward estimation and a tunable coefficient that linearly regulates the descent of the rejection probability. Through extensive experiments, we demonstrate that LPO consistently improves performance on various tasks, including general text tasks, math tasks, and text-to-speech (TTS) tasks. These results establish LPO as a robust and tunable paradigm for preference alignment, and we release the source code, models, and training data publicly.

STARFlow: Scaling Latent Normalizing Flows for High-resolution Image Synthesis

Jun 06, 2025

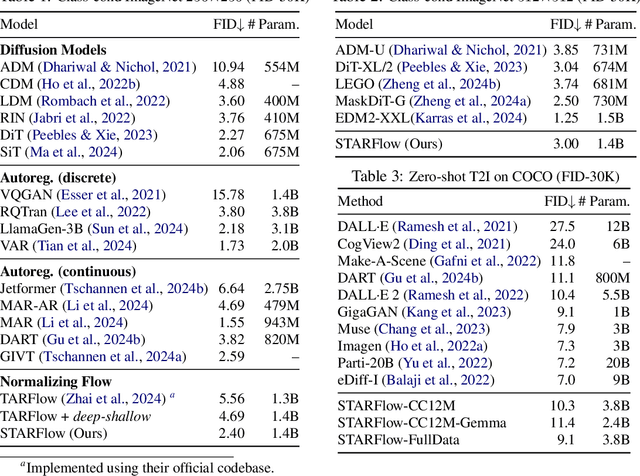

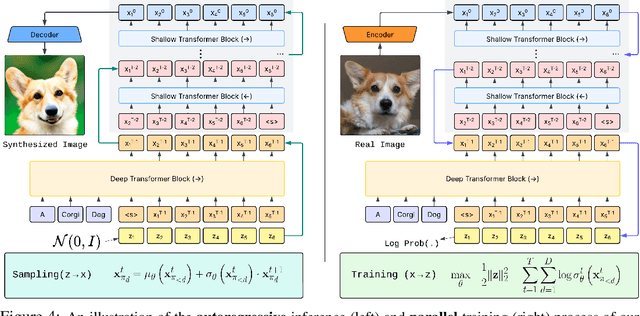

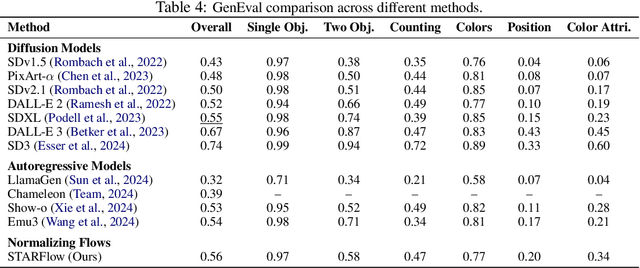

Abstract:We present STARFlow, a scalable generative model based on normalizing flows that achieves strong performance in high-resolution image synthesis. The core of STARFlow is Transformer Autoregressive Flow (TARFlow), which combines the expressive power of normalizing flows with the structured modeling capabilities of Autoregressive Transformers. We first establish the theoretical universality of TARFlow for modeling continuous distributions. Building on this foundation, we introduce several key architectural and algorithmic innovations to significantly enhance scalability: (1) a deep-shallow design, wherein a deep Transformer block captures most of the model representational capacity, complemented by a few shallow Transformer blocks that are computationally efficient yet substantially beneficial; (2) modeling in the latent space of pretrained autoencoders, which proves more effective than direct pixel-level modeling; and (3) a novel guidance algorithm that significantly boosts sample quality. Crucially, our model remains an end-to-end normalizing flow, enabling exact maximum likelihood training in continuous spaces without discretization. STARFlow achieves competitive performance in both class-conditional and text-conditional image generation tasks, approaching state-of-the-art diffusion models in sample quality. To our knowledge, this work is the first successful demonstration of normalizing flows operating effectively at this scale and resolution.

UniTTS: An end-to-end TTS system without decoupling of acoustic and semantic information

May 23, 2025

Abstract:The emergence of multi-codebook neural audio codecs such as Residual Vector Quantization (RVQ) and Group Vector Quantization (GVQ) has significantly advanced Large-Language-Model (LLM) based Text-to-Speech (TTS) systems. These codecs are crucial in separating semantic and acoustic information while efficiently harnessing semantic priors. However, since semantic and acoustic information cannot be fully aligned, a significant drawback of these methods when applied to LLM-based TTS is that large language models may have limited access to comprehensive audio information. To address this limitation, we propose DistilCodec and UniTTS, which collectively offer the following advantages: 1) This method can distill a multi-codebook audio codec into a single-codebook audio codec with 32,768 codes while achieving a near 100% utilization. 2) As DistilCodec does not employ a semantic alignment scheme, a large amount of high-quality unlabeled audio (such as audiobooks with sound effects, songs, etc.) can be incorporated during training, further expanding data diversity and broadening its applicability. 3) Leveraging the comprehensive audio information modeling of DistilCodec, we integrated three key tasks into UniTTS's pre-training framework: audio modality autoregression, text modality autoregression, and speech-text cross-modal autoregression. This allows UniTTS to accept interleaved text and speech/audio prompts while substantially preserving LLM's text capabilities. 4) UniTTS employs a three-stage training process: Pre-Training, Supervised Fine-Tuning (SFT), and Alignment. Source code and model checkpoints are publicly available at https://github.com/IDEA-Emdoor-Lab/UniTTS and https://github.com/IDEA-Emdoor-Lab/DistilCodec.

Deep Generalized Schrödinger Bridges: From Image Generation to Solving Mean-Field Games

Dec 28, 2024

Abstract:Generalized Schrödinger Bridges (GSBs) are a fundamental mathematical framework used to analyze the most likely particle evolution based on the principle of least action including kinetic and potential energy. In parallel to their well-established presence in the theoretical realms of quantum mechanics and optimal transport, this paper focuses on an algorithmic perspective, aiming to enhance practical usage. Our motivating observation is that transportation problems with the optimality structures delineated by GSBs are pervasive across various scientific domains, such as generative modeling in machine learning, mean-field games in stochastic control, and more. Exploring the intrinsic connection between the mathematical modeling of GSBs and the modern algorithmic characterization therefore presents a crucial, yet untapped, avenue. In this paper, we reinterpret GSBs as probabilistic models and demonstrate that, with a delicate mathematical tool known as the nonlinear Feynman-Kac lemma, rich algorithmic concepts, such as likelihoods, variational gaps, and temporal differences, emerge naturally from the optimality structures of GSBs. The resulting computational framework, driven by deep learning and neural networks, operates in a fully continuous state space (i.e., mesh-free) and satisfies distribution constraints, setting it apart from prior numerical solvers relying on spatial discretization or constraint relaxation. We demonstrate the efficacy of our method in generative modeling and mean-field games, highlighting its transformative applications at the intersection of mathematical modeling, stochastic processes, control, and machine learning.

Normalizing Flows are Capable Generative Models

Dec 10, 2024

Abstract:Normalizing Flows (NFs) are likelihood-based models for continuous inputs. They have demonstrated promising results on both density estimation and generative modeling tasks, but have received relatively little attention in recent years. In this work, we demonstrate that NFs are more powerful than previously believed. We present TarFlow: a simple and scalable architecture that enables highly performant NF models. TarFlow can be thought of as a Transformer-based variant of Masked Autoregressive Flows (MAFs): it consists of a stack of autoregressive Transformer blocks on image patches, alternating the autoregression direction between layers. TarFlow is straightforward to train end-to-end, and capable of directly modeling and generating pixels. We also propose three key techniques to improve sample quality: Gaussian noise augmentation during training, a post-training denoising procedure, and an effective guidance method for both class-conditional and unconditional settings. Putting these together, TarFlow sets new state-of-the-art results on likelihood estimation for images, beating the previous best methods by a large margin, and generates samples with quality and diversity comparable to diffusion models, for the first time with a stand-alone NF model. We make our code available at https://github.com/apple/ml-tarflow.
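As a rough illustration of the MAF-style mechanism the abstract refers to, the sketch below implements a single affine autoregressive transform in NumPy, with an exact log-likelihood and an exact inverse. It is not the TarFlow code: `toy_conditioner` is a hypothetical stand-in for the autoregressive Transformer block, and the function names, the 1-D input, and the standard Gaussian base distribution are assumptions made only for this example.

```python
import numpy as np


def toy_conditioner(x_prev):
    """Hypothetical stand-in for an autoregressive network.

    Maps the already-seen prefix x[:i] to a shift m and a log-scale s.
    A real model would use a learned network here.
    """
    total = x_prev.sum()  # sum of an empty prefix is 0.0
    return 0.5 * total, np.tanh(total)


def forward(x):
    """Map data x to latent z and return log|det dz/dx|."""
    z = np.empty_like(x)
    logdet = 0.0
    for i in range(len(x)):
        m, s = toy_conditioner(x[:i])
        z[i] = (x[i] - m) * np.exp(-s)
        logdet -= s  # the Jacobian is triangular with diagonal exp(-s_i)
    return z, logdet


def inverse(z):
    """Map latent z back to data x, one coordinate at a time."""
    x = np.empty_like(z)
    for i in range(len(z)):
        m, s = toy_conditioner(x[:i])  # uses only coordinates already filled in
        x[i] = z[i] * np.exp(s) + m
    return x


def log_likelihood(x):
    """Exact log p(x) under a standard Gaussian base (change of variables)."""
    z, logdet = forward(x)
    log_base = -0.5 * np.sum(z ** 2) - 0.5 * len(z) * np.log(2 * np.pi)
    return log_base + logdet


x = np.array([0.3, -1.2, 0.7])
z, _ = forward(x)
x_back = inverse(z)
```

Stacking several such transforms while reversing the coordinate order between layers corresponds to the "alternating the autoregression direction" idea mentioned in the abstract.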

Trivialized Momentum Facilitates Diffusion Generative Modeling on Lie Groups

May 25, 2024

Abstract:The generative modeling of data on manifolds is an important task, for which diffusion models in flat spaces typically need nontrivial adaptations. This article demonstrates how a technique called 'trivialization' can transfer the effectiveness of diffusion models in Euclidean spaces to Lie groups. In particular, an auxiliary momentum variable was algorithmically introduced to help transport the position variable between data distribution and a fixed, easy-to-sample distribution. Normally, this would incur further difficulty for manifold data because momentum lives in a space that changes with the position. However, our trivialization technique creates a new momentum variable that stays in a simple fixed vector space. This design, together with a manifold preserving integrator, simplifies implementation and avoids inaccuracies created by approximations such as projections to tangent space and manifold, which were typically used in prior work, hence facilitating generation with high fidelity and efficiency. The resulting method achieves state-of-the-art performance on protein and RNA torsion angle generation and sophisticated torus datasets. We also, arguably for the first time, tackle the generation of data on high-dimensional Special Orthogonal and Unitary groups, the latter essential for quantum problems.

React-OT: Optimal Transport for Generating Transition State in Chemical Reactions

Apr 20, 2024

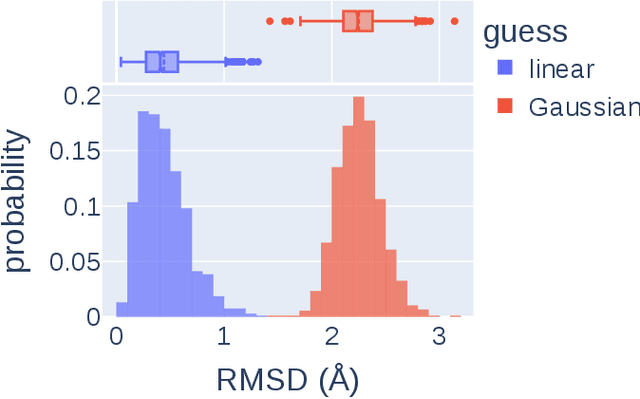

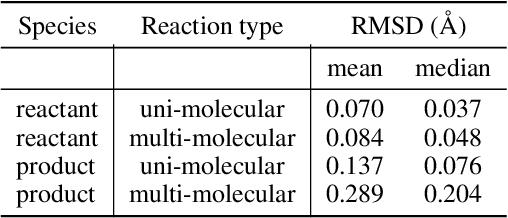

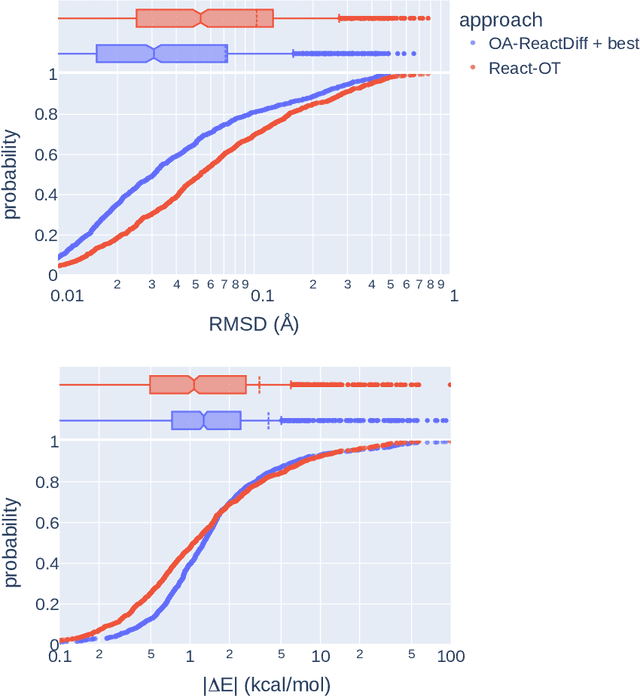

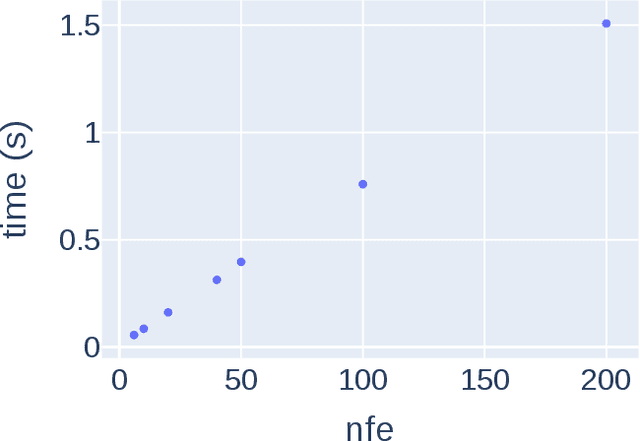

Abstract:Transition states (TSs) are transient structures that are key in understanding reaction mechanisms and designing catalysts but challenging to capture in experiments. Alternatively, many optimization algorithms have been developed to search for TSs computationally. Yet the cost of these algorithms driven by quantum chemistry methods (usually density functional theory) is still high, posing challenges for their applications in building large reaction networks for reaction exploration. Here we developed React-OT, an optimal transport approach for generating unique TS structures from reactants and products. React-OT generates highly accurate TS structures with a median structural root mean square deviation (RMSD) of 0.053 Å and median barrier height error of 1.06 kcal/mol requiring only 0.4 seconds per reaction. The RMSD and barrier height error are further improved by roughly 25% through pretraining React-OT on a large reaction dataset obtained with a lower level of theory, GFN2-xTB. We envision the great accuracy and fast inference of React-OT useful in targeting TSs when exploring chemical reactions with unknown mechanisms.

Quantum State Generation with Structure-Preserving Diffusion Model

Apr 09, 2024

Abstract:This article considers the generative modeling of the states of quantum systems, and an approach based on a denoising diffusion model is proposed. The key contribution is an algorithmic innovation that respects the physical nature of quantum states. More precisely, the commonly used density matrix representation of a mixed state has to be complex-valued Hermitian, positive semi-definite, and trace one. Generic diffusion models, or other generative methods, may not be able to generate data that strictly satisfy these structural constraints, even if all training data do. To develop a machine learning algorithm that has physics hard-wired in, we leverage the recent development of Mirror Diffusion Model and design a previously unconsidered mirror map, to enable strict structure-preserving generation. Both unconditional generation and conditional generation via classifier-free guidance are experimentally demonstrated to be efficacious, the latter even enabling the design of new quantum states when generated on unseen labels.
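The three structural constraints named in the abstract (Hermitian, positive semi-definite, trace one) can be checked numerically. The sketch below is only an illustration of those constraints, not the paper's mirror map or diffusion model; `is_density_matrix`, `random_density_matrix`, and the tolerance `tol` are hypothetical names and choices introduced for this example.

```python
import numpy as np


def is_density_matrix(rho, tol=1e-9):
    """Check that rho is Hermitian, positive semi-definite, and has trace one."""
    rho = np.asarray(rho, dtype=complex)
    if not np.allclose(rho, rho.conj().T, atol=tol):
        return False  # not Hermitian
    eigenvalues = np.linalg.eigvalsh(rho)  # real eigenvalues of a Hermitian matrix
    is_psd = eigenvalues.min() >= -tol
    has_unit_trace = abs(np.trace(rho) - 1.0) <= tol
    return bool(is_psd and has_unit_trace)


def random_density_matrix(dim, rng):
    """Build a valid mixed state as A A^dagger normalized by its trace."""
    a = rng.normal(size=(dim, dim)) + 1j * rng.normal(size=(dim, dim))
    rho = a @ a.conj().T  # Hermitian and positive semi-definite by construction
    return rho / np.trace(rho).real


rng = np.random.default_rng(0)
rho = random_density_matrix(4, rng)

# Adding unconstrained real noise, as a generic generator might, breaks the structure.
perturbed = rho + 0.5 * rng.normal(size=(4, 4))
```

Here `rho` passes the check while `perturbed` fails it, which is the kind of violation a structure-preserving generator is meant to rule out.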
