Teng Liu

UpBench: A Dynamically Evolving Real-World Labor-Market Agentic Benchmark Framework Built for Human-Centric AI

Nov 15, 2025

Abstract:As large language model (LLM) agents increasingly undertake digital work, reliable frameworks are needed to evaluate their real-world competence, adaptability, and capacity for human collaboration. Existing benchmarks remain largely static, synthetic, or domain-limited, providing limited insight into how agents perform in dynamic, economically meaningful environments. We introduce UpBench, a dynamically evolving benchmark grounded in real jobs drawn from the global Upwork labor marketplace. Each task corresponds to a verified client transaction, anchoring evaluation in genuine work activity and financial outcomes. UpBench employs a rubric-based evaluation framework, in which expert freelancers decompose each job into detailed, verifiable acceptance criteria and assess AI submissions with per-criterion feedback. This structure enables fine-grained analysis of model strengths, weaknesses, and instruction-following fidelity beyond binary pass/fail metrics. Human expertise is integrated throughout the data pipeline (from job curation and rubric construction to evaluation), ensuring fidelity to real professional standards and supporting research on human-AI collaboration. By regularly refreshing tasks to reflect the evolving nature of online work, UpBench provides a scalable, human-centered foundation for evaluating agentic systems in authentic labor-market contexts, offering a path toward a collaborative framework in which AI amplifies human capability through partnership rather than replacement.

Decadal sink-source shifts of forest aboveground carbon since 1988

Jun 13, 2025

Abstract:As enduring carbon sinks, forest ecosystems are vital to the terrestrial carbon cycle and help moderate global warming. However, the long-term dynamics of aboveground carbon (AGC) in forests and their sink-source transitions remain highly uncertain, owing to changing disturbance regimes and inconsistencies in observations, data processing, and analysis methods. Here, we derive reliable, harmonized AGC stocks and fluxes in global forests from 1988 to 2021 at high spatial resolution by integrating multi-source satellite observations with probabilistic deep learning models. Our approach simultaneously estimates AGC and associated uncertainties, showing high reliability across space and time. We find that, although global forests remained an AGC sink of 6.2 PgC over 30 years, moist tropical forests shifted to a substantial AGC source between 2001 and 2010 and, together with boreal forests, transitioned toward a source in the 2011-2021 period. Temperate, dry tropical and subtropical forests generally exhibited increasing AGC stocks, although Europe and Australia became sources after 2011. Regionally, pronounced sink-to-source transitions occurred in tropical forests over the past three decades. The interannual relationship between global atmospheric CO2 growth rates and tropical AGC flux variability became increasingly negative, reaching Pearson's r = -0.63 (p < 0.05) in the most recent decade. In the Brazilian Amazon, the contribution of deforested regions to AGC losses declined from 60% in 1989-2000 to 13% in 2011-2021, while the share from untouched areas increased from 33% to 76%. Our findings suggest a growing role of tropical forest AGC in modulating variability in the terrestrial carbon cycle, with anthropogenic climate change potentially contributing increasingly to AGC changes, particularly in previously untouched areas.

MBrain: A Multi-channel Self-Supervised Learning Framework for Brain Signals

Jun 15, 2023

Abstract:Brain signals are important quantitative data for understanding physiological activities and diseases of the human brain. Most existing studies focus on supervised learning methods, which, however, require high-cost clinical labels. In addition, the huge difference in the clinical patterns of brain signals measured by invasive (e.g., SEEG) and non-invasive (e.g., EEG) methods leads to the lack of a unified method. To handle the above issues, we propose to study a self-supervised learning (SSL) framework for brain signals that can be applied to pre-train on either SEEG or EEG data. Intuitively, brain signals, generated by the firing of neurons, are transmitted among different connecting structures in the human brain. Inspired by this, we propose MBrain to learn implicit spatial and temporal correlations between different channels (i.e., contacts of the electrode, corresponding to different brain areas) as the cornerstone for uniformly modeling different types of brain signals. Specifically, we represent the spatial correlation by a graph structure, which is built with the proposed multi-channel CPC. We theoretically prove that optimizing the goal of multi-channel CPC can lead to a better predictive representation and apply the instantaneous-time-shift prediction task based on it. Then we capture the temporal correlation by designing the delayed-time-shift prediction task. Finally, a replace-discriminative-learning task is proposed to preserve the characteristics of each channel. Extensive experiments of seizure detection on both EEG and SEEG large-scale real-world datasets demonstrate that our model outperforms several state-of-the-art time series SSL and unsupervised models, and has the ability to be deployed to clinical practice.

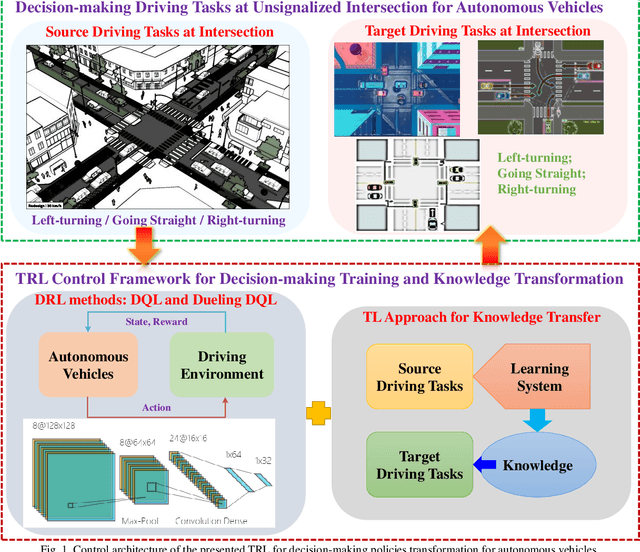

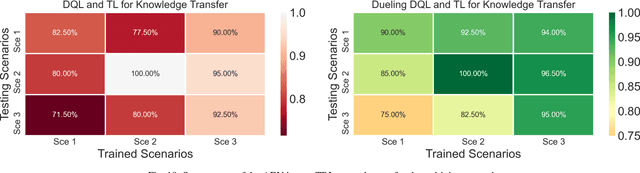

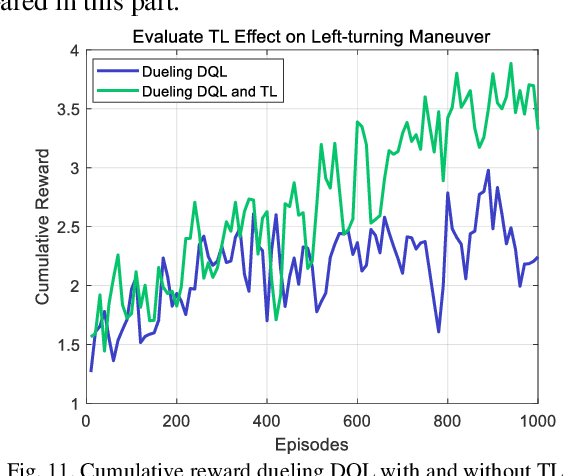

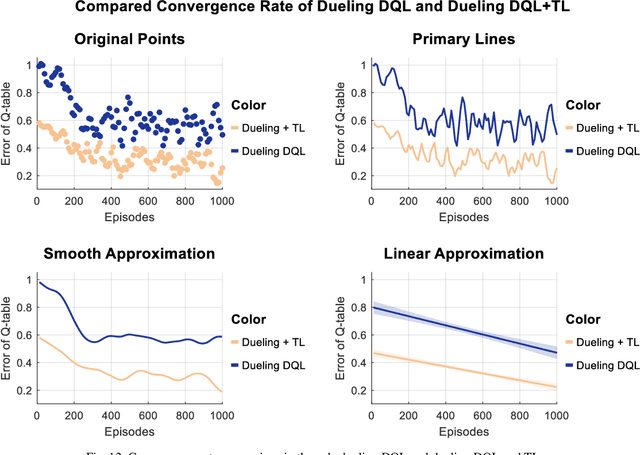

Driving Tasks Transfer in Deep Reinforcement Learning for Decision-making of Autonomous Vehicles

Oct 10, 2020

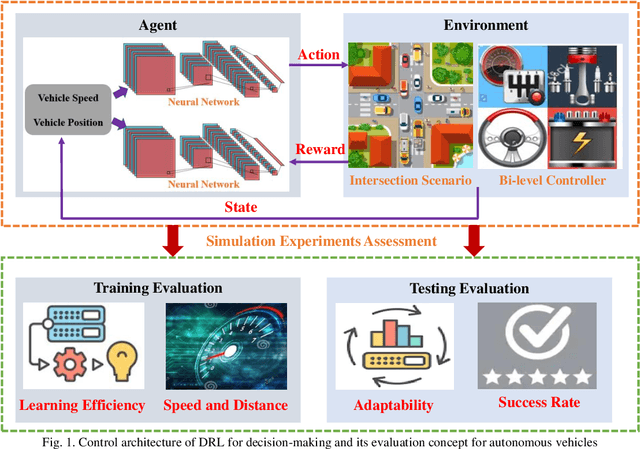

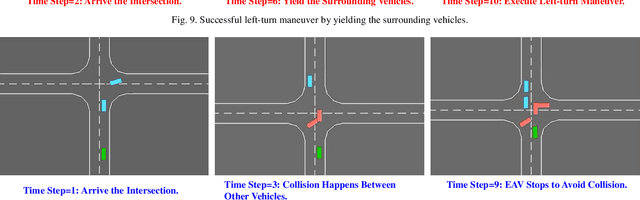

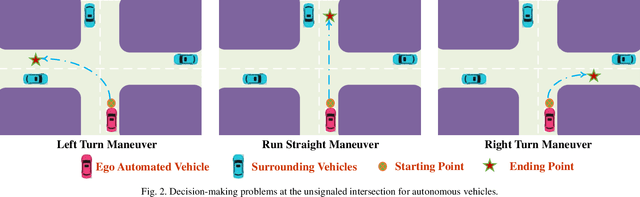

Abstract:Knowledge transfer is a promising concept to achieve real-time decision-making for autonomous vehicles. This paper constructs a transfer deep reinforcement learning framework to transform the driving tasks in intersection environments. The driving missions at the unsignalized intersection are cast into a left turn, right turn, and running straight for automated vehicles. The goal of the autonomous ego vehicle (AEV) is to drive through the intersection situation efficiently and safely. This objective encourages the studied vehicle to increase its speed while avoiding collisions with other vehicles. The decision-making policy learned from one driving task is transferred and evaluated in another driving mission. Simulation results reveal that the decision-making strategies related to similar tasks are transferable. This indicates that the presented control framework could reduce the time consumption and realize online implementation.

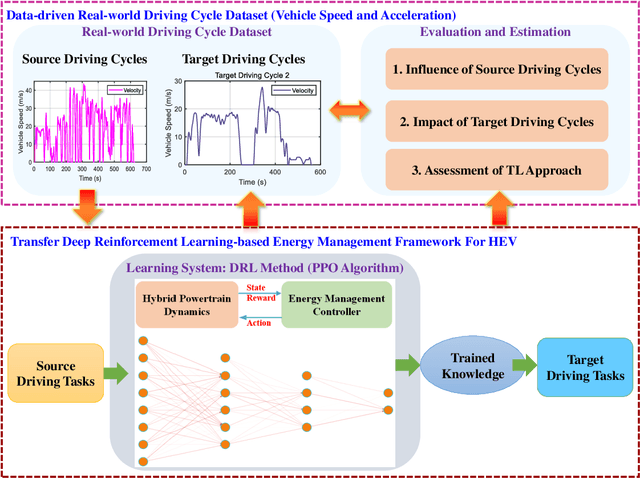

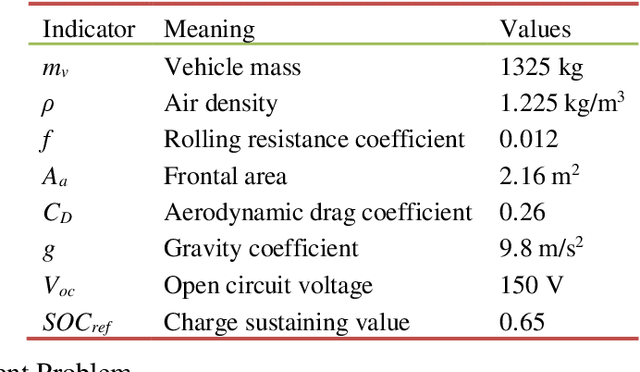

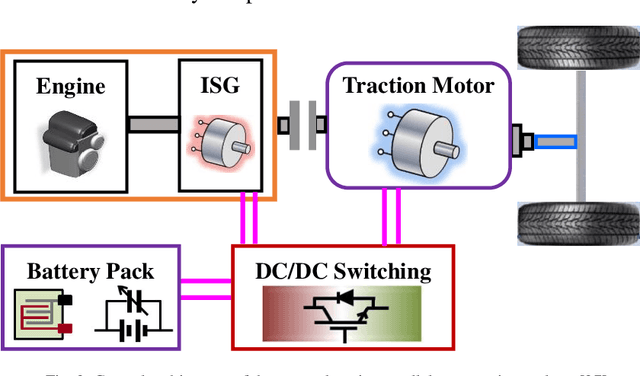

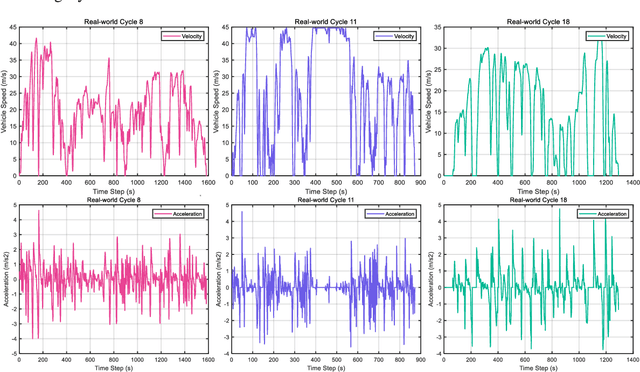

Data-Driven Transferred Energy Management Strategy for Hybrid Electric Vehicles via Deep Reinforcement Learning

Sep 20, 2020

Abstract:Real-time application of energy management strategies (EMSs) in hybrid electric vehicles (HEVs) is among the most demanding requirements facing researchers and engineers. Inspired by the excellent problem-solving capabilities of deep reinforcement learning (DRL), this paper proposes a real-time EMS that combines the DRL method with transfer learning (TL). The related EMSs are derived from and evaluated on the real-world driving cycle dataset collected by the Transportation Secure Data Center (TSDC). The concrete DRL algorithm is proximal policy optimization (PPO), which belongs to the policy gradient (PG) techniques. Specifically, many source driving cycles are utilized for training the parameters of a deep network based on PPO. The learned parameters are transferred to the target driving cycles under the TL framework. The EMSs related to the target driving cycles are estimated and compared in different training conditions. Simulation results indicate that the presented transfer DRL-based EMS could effectively reduce time consumption and guarantee control performance.

Decision-making for Autonomous Vehicles on Highway: Deep Reinforcement Learning with Continuous Action Horizon

Aug 26, 2020

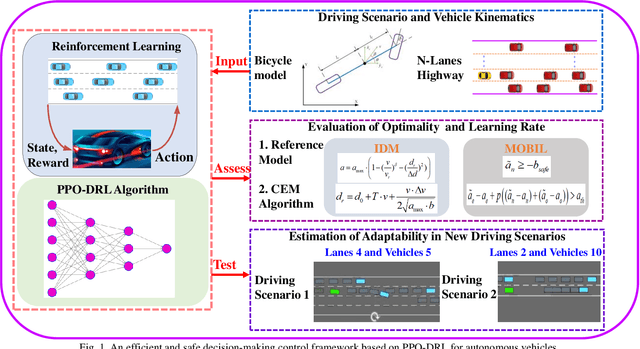

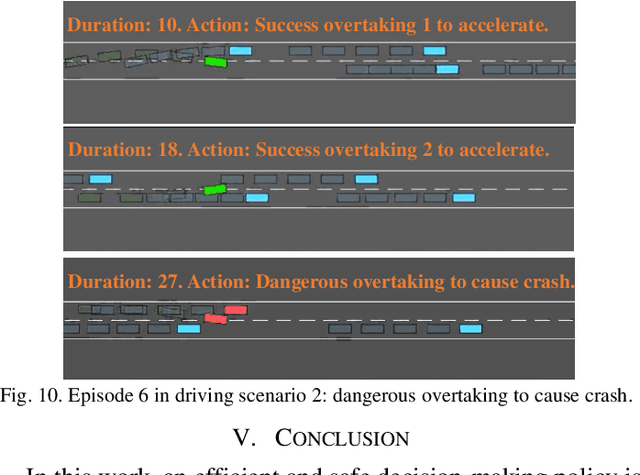

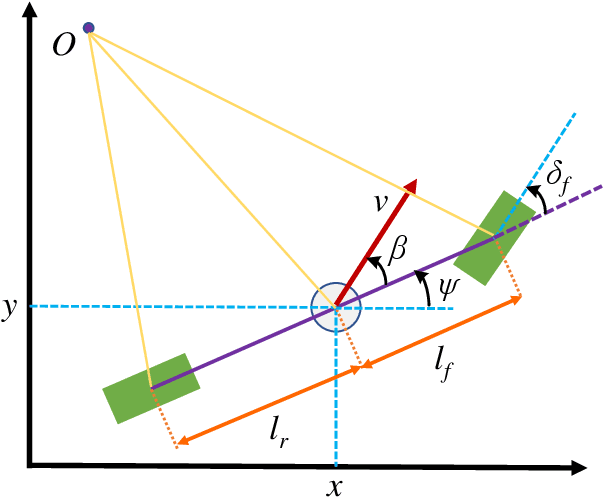

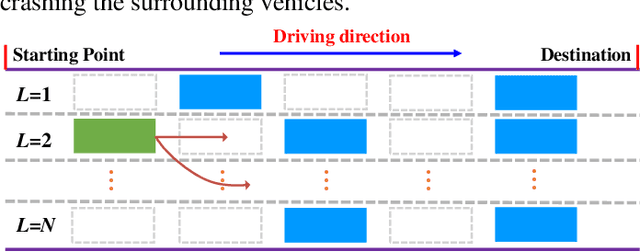

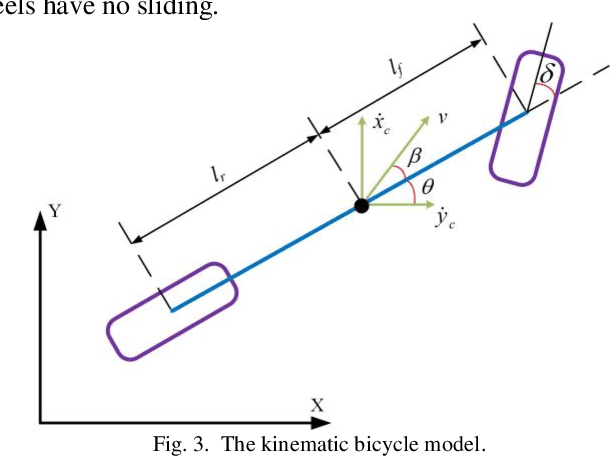

Abstract:A decision-making strategy for autonomous vehicles describes a sequence of driving maneuvers to achieve a certain navigational mission. This paper utilizes the deep reinforcement learning (DRL) method to address the continuous-horizon decision-making problem on the highway. First, the vehicle kinematics and driving scenario on the freeway are introduced. The running objective of the ego automated vehicle is to execute an efficient and smooth policy without collision. Then, the particular algorithm named proximal policy optimization (PPO)-enhanced DRL is illustrated. To overcome the challenges of slow training and sample inefficiency, the applied algorithm is designed to achieve high learning efficiency and excellent control performance. Finally, the PPO-DRL-based decision-making strategy is evaluated from multiple perspectives, including optimality, learning efficiency, and adaptability. Its potential for online application is discussed by applying it to similar driving scenarios.

Decision-making at Unsignalized Intersection for Autonomous Vehicles: Left-turn Maneuver with Deep Reinforcement Learning

Aug 14, 2020

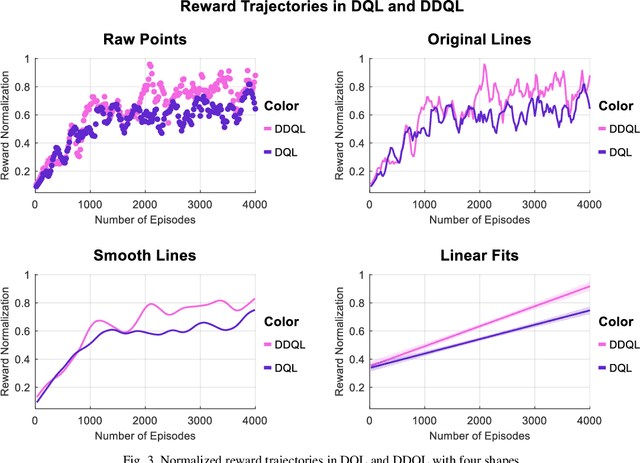

Abstract:The decision-making module enables autonomous vehicles to choose appropriate maneuvers in complex urban environments, especially at intersections. This work proposes a deep reinforcement learning (DRL) based left-turn decision-making framework at unsignalized intersections for autonomous vehicles. The objective of the studied automated vehicle is to make an efficient and safe left-turn maneuver at a four-way unsignalized intersection. The exploited DRL methods include deep Q-learning (DQL) and double DQL. Simulation results indicate that the presented decision-making strategy could effectively reduce the collision rate and improve transport efficiency. This work also reveals that the constructed left-turn control structure has great potential to be applied in real time.

A Comparative Analysis of Deep Reinforcement Learning-enabled Freeway Decision-making for Automated Vehicles

Aug 04, 2020

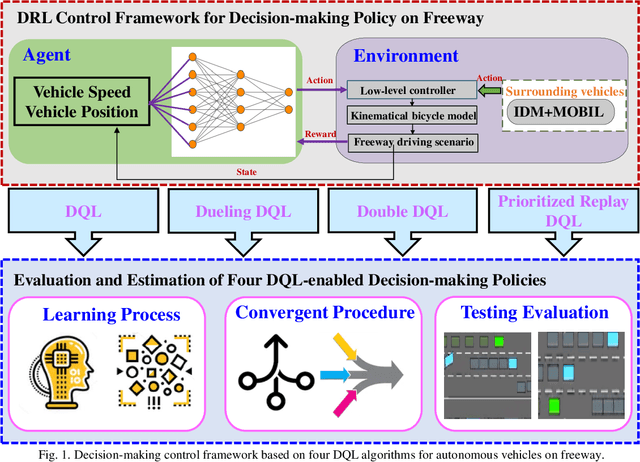

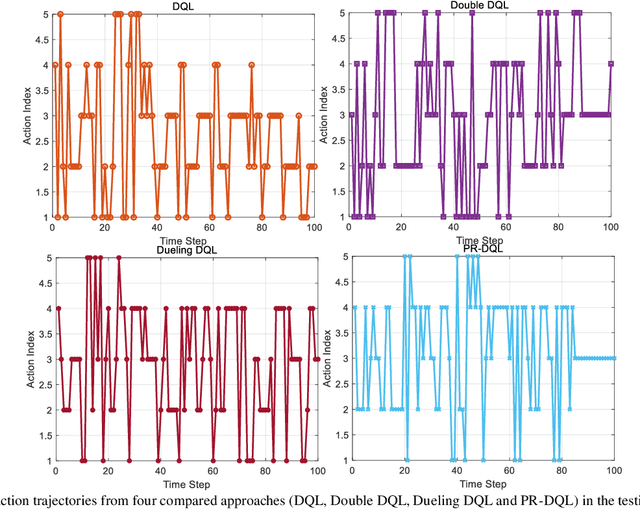

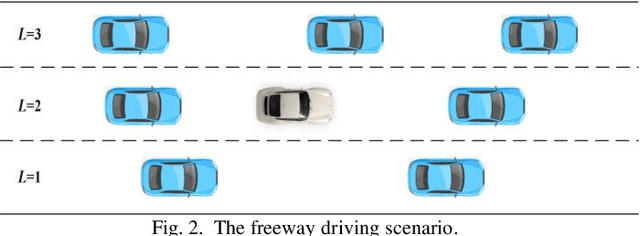

Abstract:Deep reinforcement learning (DRL) is becoming a prevalent and powerful methodology for addressing artificial intelligence problems. Owing to its tremendous potential in self-learning and self-improvement, DRL is widely applied in many research fields. This article conducts a comprehensive comparison of multiple DRL approaches on the freeway decision-making problem for autonomous vehicles. These techniques include the common deep Q learning (DQL), double DQL (DDQL), dueling DQL, and prioritized replay DQL. First, the reinforcement learning (RL) framework is introduced. As an extension, the implementations of the above-mentioned DRL methods are established mathematically. Then, the freeway driving scenario for the automated vehicles is constructed, wherein the decision-making problem is formulated as a control optimization problem. Finally, a series of simulation experiments is conducted to evaluate the control performance of these DRL-enabled decision-making strategies. A comparative analysis is carried out to connect the autonomous driving results with the learning characteristics of these DRL techniques.

Defining Digital Quadruplets in the Cyber-Physical-Social Space for Parallel Driving

Jul 26, 2020

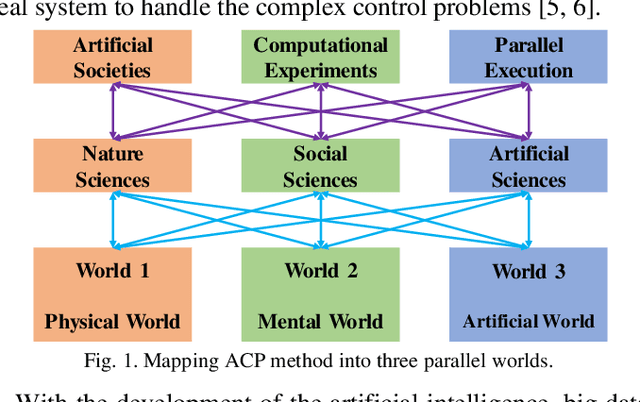

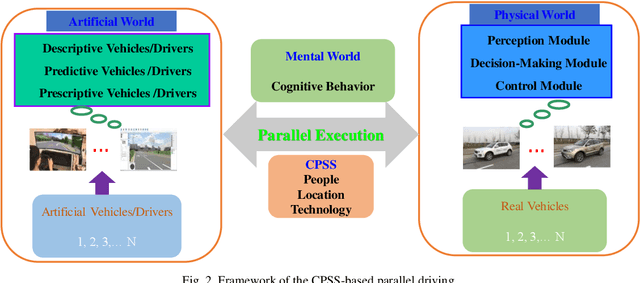

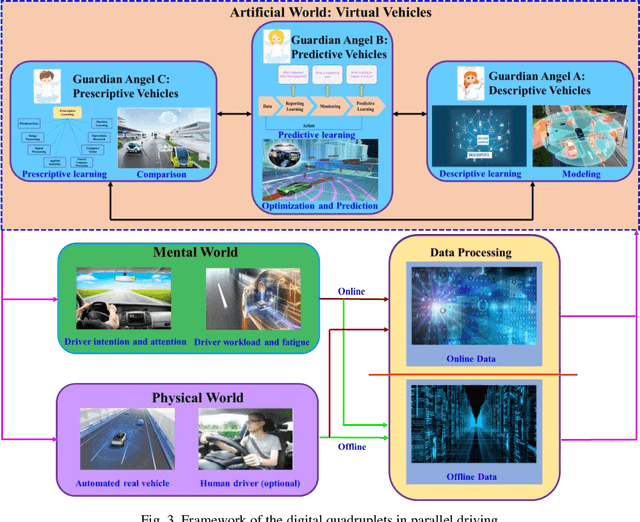

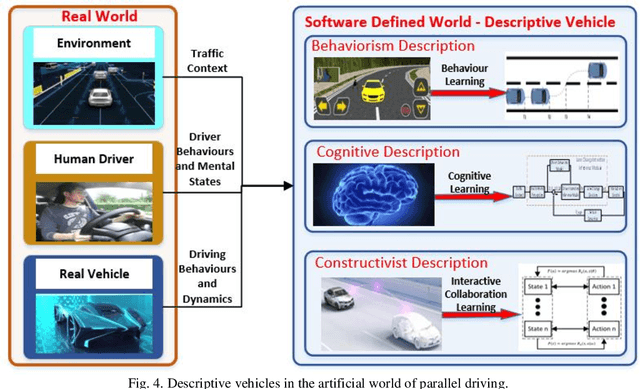

Abstract:Parallel driving is a novel framework to synthesize vehicle intelligence and transport automation. This article aims to define digital quadruplets in parallel driving. In the cyber-physical-social systems (CPSS), based on the ACP method, the names of the digital quadruplets are first given, which are descriptive, predictive, prescriptive and real vehicles. The objectives of the three virtual digital vehicles are interacting with, guiding, simulating and improving the real vehicles. Then, the three virtual components of the digital quadruplets are introduced in detail and their applications are also illustrated. Finally, the real vehicles in the parallel driving system and the research process of the digital quadruplets are depicted. The presented digital quadruplets in parallel driving are expected to make future connected automated driving safe, efficient, and synergistic.

Adaptive Energy Management for Real Driving Conditions via Transfer Reinforcement Learning

Jul 24, 2020

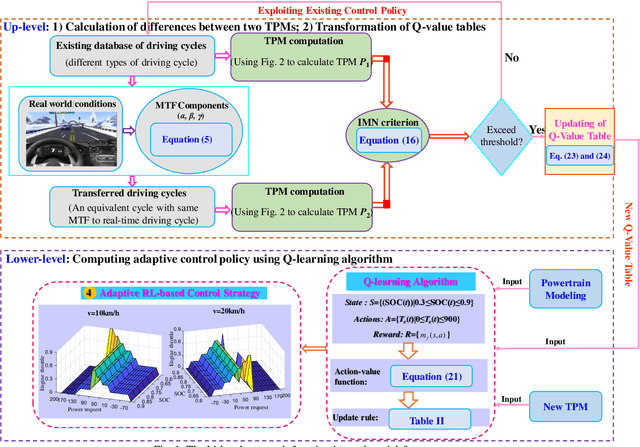

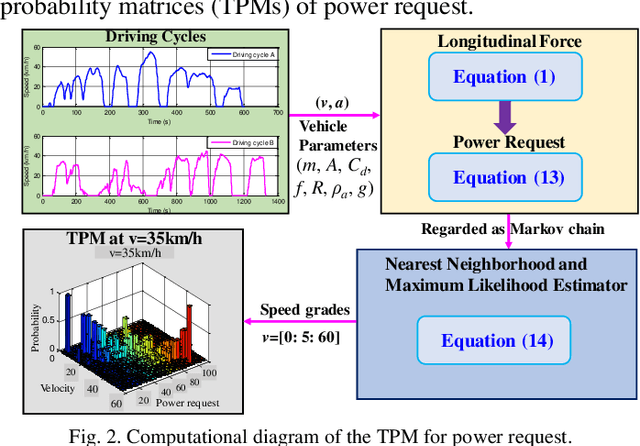

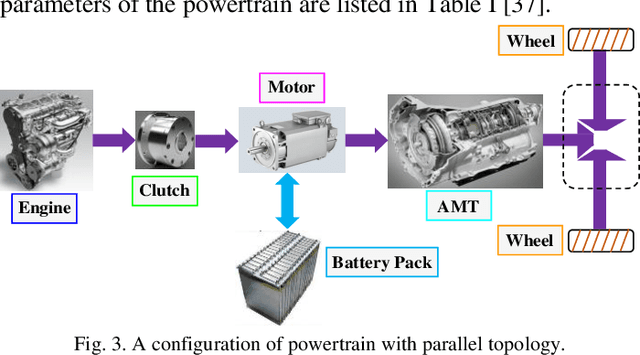

Abstract:This article proposes a transfer reinforcement learning (RL) based adaptive energy management approach for a hybrid electric vehicle (HEV) with parallel topology. This approach is bi-level. The upper level characterizes how to transform the Q-value tables in the RL framework via driving cycle transformation (DCT). Specifically, transition probability matrices (TPMs) of power request are computed for different cycles, and the induced matrix norm (IMN) is employed as a critical criterion to identify the transformation differences and to determine the alteration of the control strategy. The lower level determines how to set the corresponding control strategies with the transformed Q-value tables and TPMs by using a model-free RL algorithm. Numerical tests illustrate that the transferred performance can be tuned by the IMN value and that the transfer RL controller can achieve higher fuel economy. The comparison demonstrates that the proposed strategy exceeds the conventional RL approach in both calculation speed and control performance.
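The TPM and IMN comparison mentioned in this abstract can be illustrated with a minimal sketch. It is not the authors' implementation: the function names, the discretization of power request into integer states, the uniform fallback for unvisited states, and the choice of the induced 2-norm are all assumptions made for illustration.

```python
import numpy as np

def transition_probability_matrix(states, n_states):
    """Estimate a first-order Markov TPM from a sequence of integer states.

    `states` is a hypothetical discretized power-request trace for one driving cycle.
    """
    counts = np.zeros((n_states, n_states))
    for current, nxt in zip(states[:-1], states[1:]):
        counts[current, nxt] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    # Avoid division by zero for states that never occur as a source state.
    safe_sums = np.where(row_sums == 0, 1, row_sums)
    # Assumption of this sketch: unvisited rows fall back to a uniform distribution.
    return np.where(row_sums > 0, counts / safe_sums, 1.0 / n_states)

def induced_norm_difference(tpm_a, tpm_b):
    """Induced 2-norm (largest singular value) of the difference of two TPMs."""
    return np.linalg.norm(tpm_a - tpm_b, ord=2)

# Two made-up power-request traces, each discretized into 3 levels.
cycle_a = [0, 1, 2, 1, 0, 1, 2, 1, 0]
cycle_b = [0, 0, 1, 1, 2, 2, 1, 1, 0]

tpm_a = transition_probability_matrix(cycle_a, 3)
tpm_b = transition_probability_matrix(cycle_b, 3)
print("IMN between cycles:", induced_norm_difference(tpm_a, tpm_b))
```

A larger value indicates that the two cycles have more dissimilar transition behavior. How that value is mapped to a change in control strategy is specific to the paper and is not reproduced here.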
