Xiaolin Tang

Decision-making at Unsignalized Intersection for Autonomous Vehicles: Left-turn Maneuver with Deep Reinforcement Learning

Aug 14, 2020

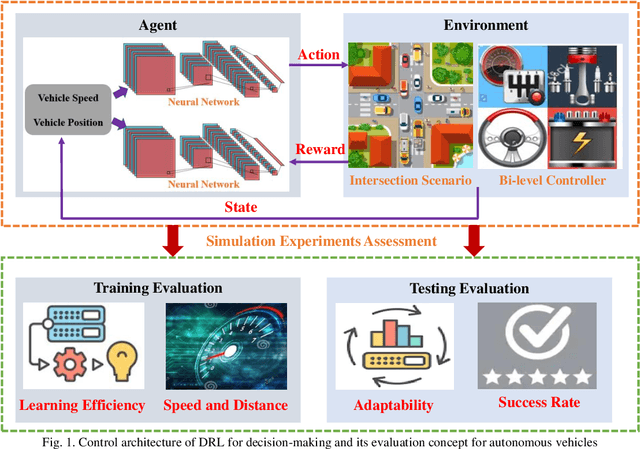

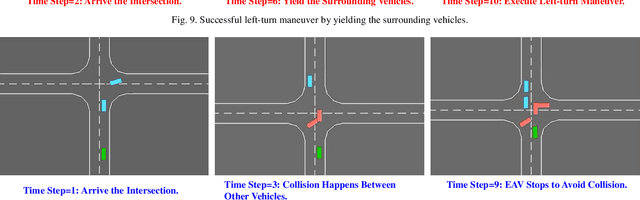

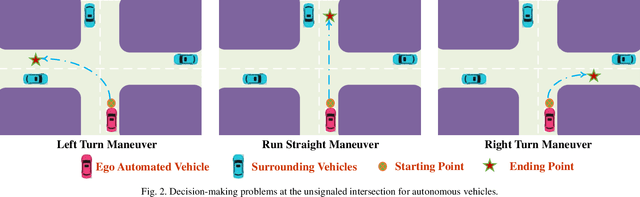

Abstract: The decision-making module enables autonomous vehicles to select appropriate maneuvers in complex urban environments, especially at intersections. This work proposes a deep reinforcement learning (DRL) based left-turn decision-making framework for autonomous vehicles at unsignalized intersections. The objective of the studied automated vehicle is to make an efficient and safe left-turn maneuver at a four-way unsignalized intersection. The DRL methods used include deep Q-learning (DQL) and double DQL. Simulation results indicate that the presented decision-making strategy can effectively reduce the collision rate and improve transport efficiency. This work also shows that the constructed left-turn control structure has great potential for real-time application.
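The difference between DQL and double DQL lies in how the bootstrap target is formed. The following is a minimal sketch, not the authors' implementation: the function names, the list-based Q-value inputs, and the default discount factor are all assumptions made for illustration. In standard DQL the target network both selects and evaluates the next action; in double DQL the online network selects the action and the target network evaluates it, which tends to reduce overestimation.

```python
def dql_target(reward, next_q_target, gamma=0.99, done=False):
    """Standard DQL target: the target network picks and scores the best next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dql_target(reward, next_q_online, next_q_target, gamma=0.99, done=False):
    """Double DQL target: the online network picks the action, the target network scores it."""
    if done:
        return reward
    # Index of the greedy action under the online network (hypothetical list input).
    best_action = max(range(len(next_q_online)), key=next_q_online.__getitem__)
    return reward + gamma * next_q_target[best_action]
```

When the two networks disagree about the best next action, the double DQL target is never larger than the DQL target computed from the same target-network values.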

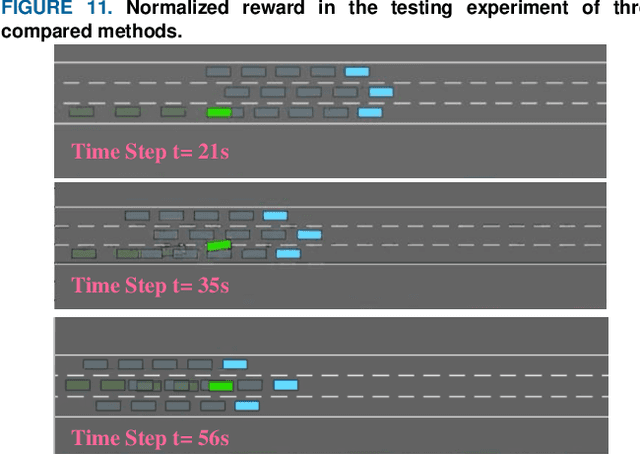

A Comparative Analysis of Deep Reinforcement Learning-enabled Freeway Decision-making for Automated Vehicles

Aug 04, 2020

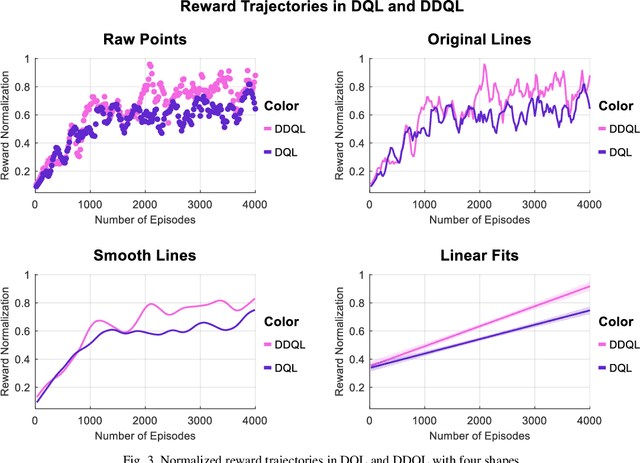

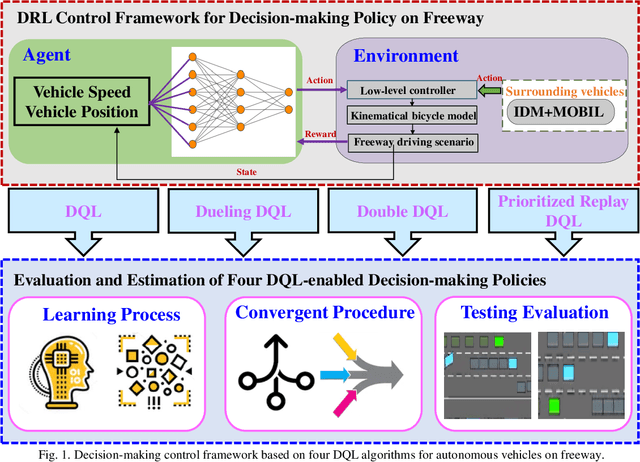

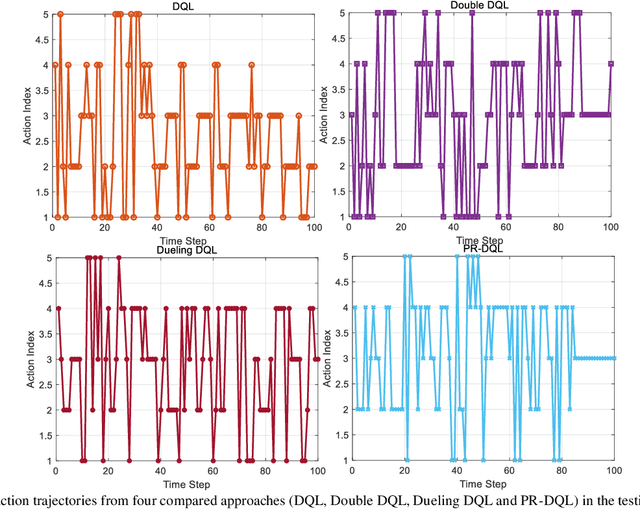

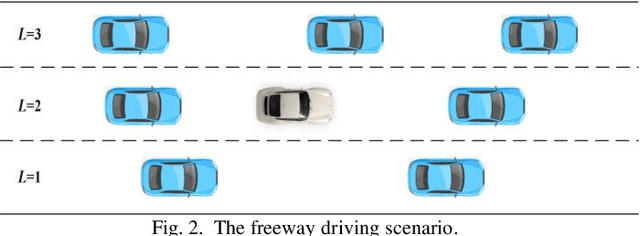

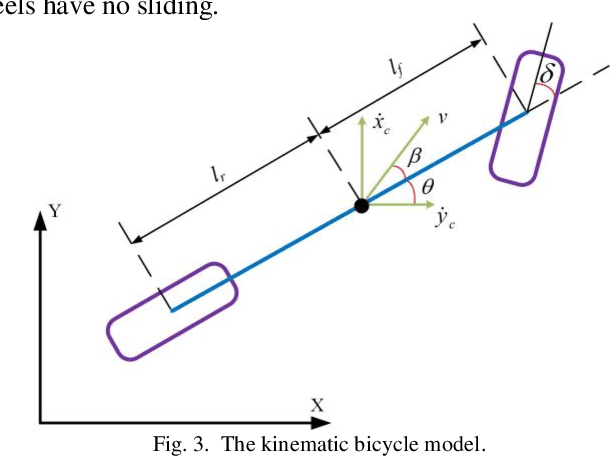

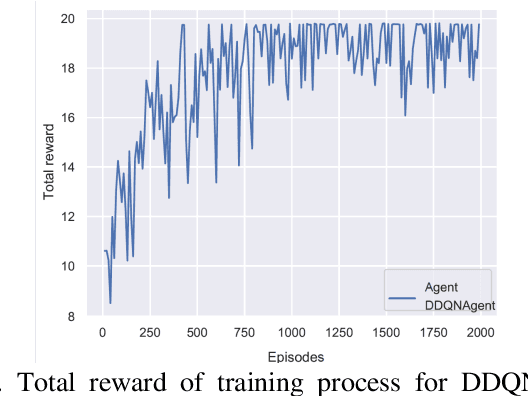

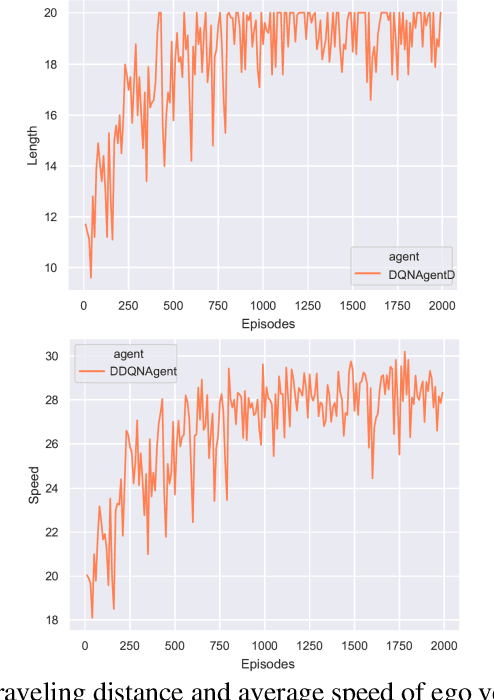

Abstract: Deep reinforcement learning (DRL) is becoming a prevalent and powerful methodology for addressing artificial intelligence problems. Owing to its great potential for self-learning and self-improvement, DRL is widely used in many research fields. This article conducts a comprehensive comparison of multiple DRL approaches on the freeway decision-making problem for autonomous vehicles. These techniques include the common deep Q-learning (DQL), double DQL (DDQL), dueling DQL, and prioritized replay DQL. First, the reinforcement learning (RL) framework is introduced. As an extension, the above-mentioned DRL methods are formulated mathematically. Then, the freeway driving scenario for the automated vehicles is constructed, wherein the decision-making problem is cast as a control optimization problem. Finally, a series of simulation experiments is conducted to evaluate the control performance of these DRL-enabled decision-making strategies. A comparative analysis connects the autonomous driving results with the learning characteristics of these DRL techniques.
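Of the variants listed, prioritized replay changes how transitions are drawn from the replay buffer rather than how the target is computed. A minimal sketch of the commonly used proportional scheme follows; the function name, the default exponent `alpha`, and the small constant `eps` are assumptions for illustration, and the abstract does not state which prioritization scheme the article uses.

```python
def sampling_probabilities(td_errors, alpha=0.6, eps=1e-6):
    """Proportional prioritization: P(i) = p_i**alpha / sum_k p_k**alpha,
    with priority p_i = |TD error_i| + eps."""
    priorities = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(priorities)
    return [p / total for p in priorities]
```

With `alpha=0` every transition is sampled uniformly, which recovers ordinary experience replay; larger `alpha` concentrates sampling on transitions with large temporal-difference error.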

Adaptive Energy Management for Real Driving Conditions via Transfer Reinforcement Learning

Jul 24, 2020

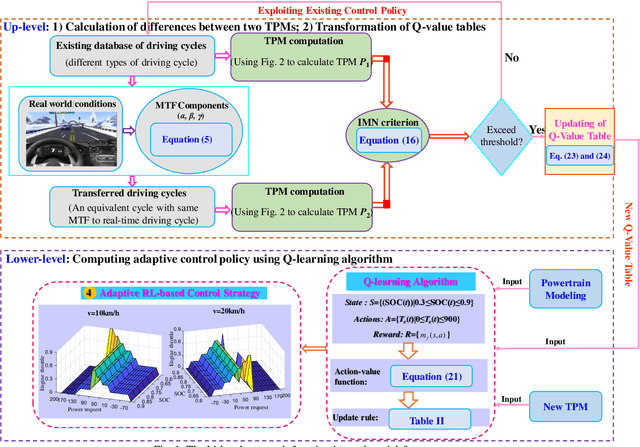

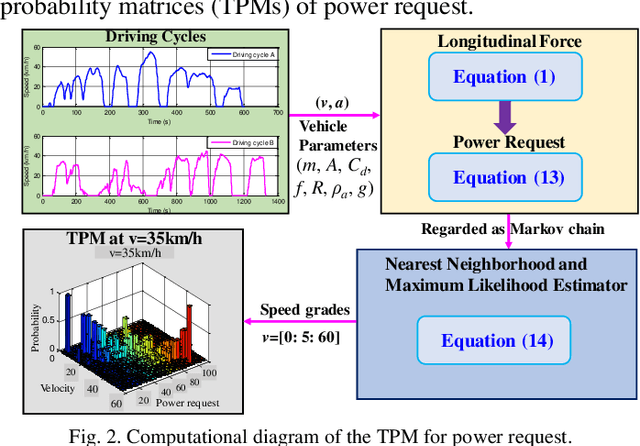

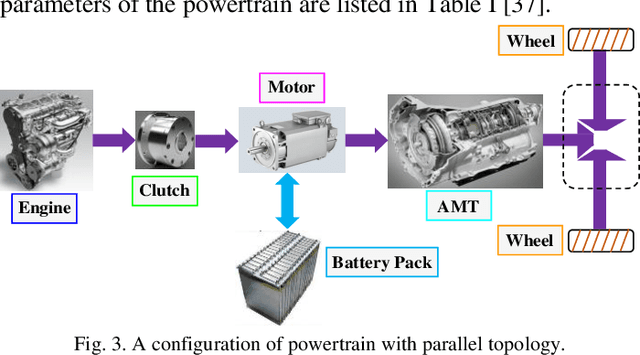

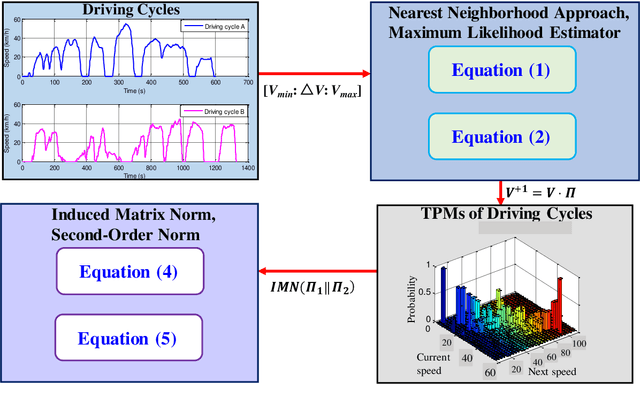

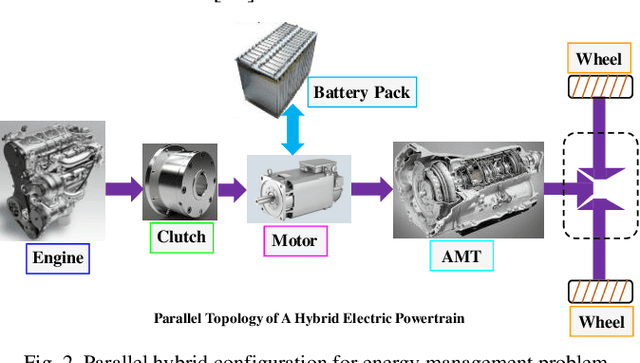

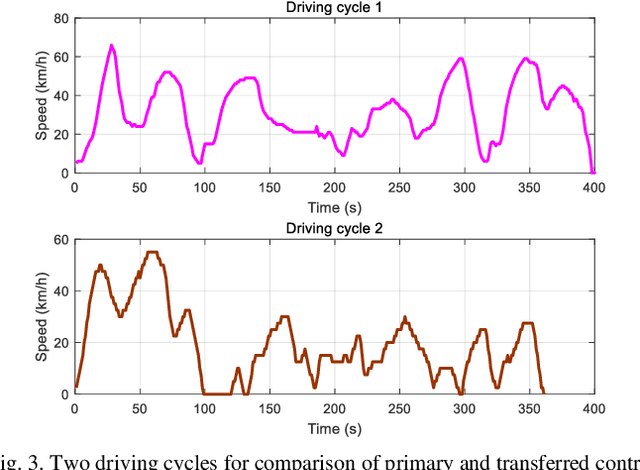

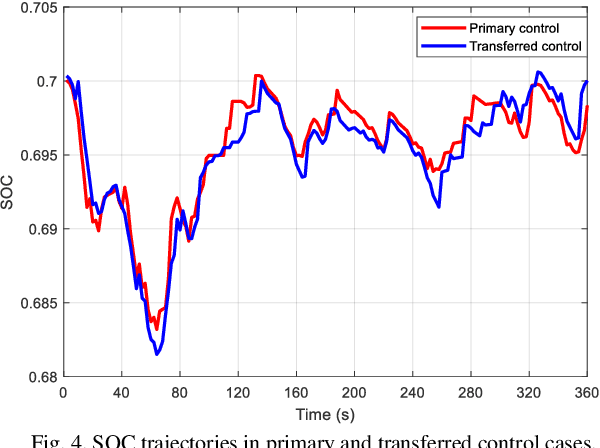

Abstract: This article proposes a transfer reinforcement learning (RL) based adaptive energy management approach for a hybrid electric vehicle (HEV) with parallel topology. The approach is bi-level. The upper level characterizes how to transform the Q-value tables in the RL framework via driving cycle transformation (DCT). Specifically, transition probability matrices (TPMs) of power request are computed for different cycles, and the induced matrix norm (IMN) is employed as a criterion to identify the transformation differences and to determine the alteration of the control strategy. The lower level determines how to set the corresponding control strategies with the transformed Q-value tables and TPMs by using a model-free RL algorithm. Numerical tests illustrate that the transferred performance can be tuned by the IMN value and that the transfer RL controller can achieve higher fuel economy. The comparison demonstrates that the proposed strategy outperforms the conventional RL approach in both calculation speed and control performance.
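The TPM and IMN steps can be illustrated with a short sketch. It assumes the power request has already been discretized into integer state indices, and it uses the induced infinity-norm (maximum absolute row sum) as one possible induced norm; the abstract does not say which induced norm the authors use, and all names here are hypothetical.

```python
def transition_matrix(states, n_states):
    """Estimate a TPM by counting transitions between consecutive discretized states."""
    counts = [[0] * n_states for _ in range(n_states)]
    for s, s_next in zip(states, states[1:]):
        counts[s][s_next] += 1
    tpm = []
    for row in counts:
        total = sum(row)
        # Rows for states that were never visited are left as zeros.
        tpm.append([c / total if total else 0.0 for c in row])
    return tpm


def induced_inf_norm_diff(p, q):
    """Induced infinity-norm of (p - q): the maximum absolute row sum of the difference."""
    return max(
        sum(abs(a - b) for a, b in zip(row_p, row_q))
        for row_p, row_q in zip(p, q)
    )
```

A small norm value suggests that two driving cycles have similar power-request statistics, whereas a large value suggests the control strategy may need to be updated.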

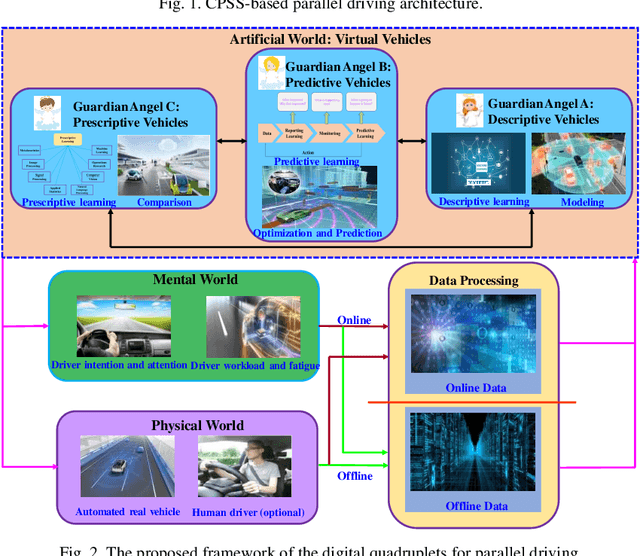

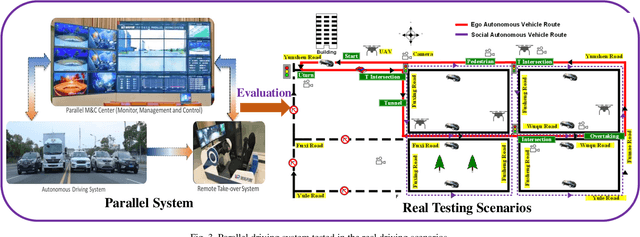

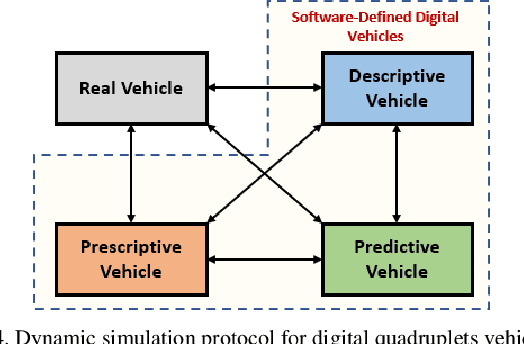

Digital Quadruplets for Cyber-Physical-Social Systems based Parallel Driving: From Concept to Applications

Jul 21, 2020

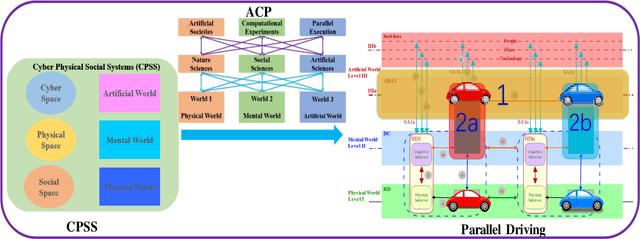

Abstract: Digital quadruplets, which aim to improve road safety, traffic efficiency, and driving cooperation for future connected automated vehicles, are proposed, inspired by ACP-based parallel driving. The ACP method denotes Artificial societies, Computational experiments, and Parallel execution modules for cyber-physical-social systems. Four agents are designed in the framework of digital quadruplets: descriptive vehicles, predictive vehicles, prescriptive vehicles, and real vehicles. The three virtual vehicles (descriptive, predictive, and prescriptive) dynamically interact with the real one in order to enhance the safety and performance of the real vehicle. The details of the three virtual vehicles in the digital quadruplets are described. Then, the interactions between the virtual and real vehicles are presented. The experimental results of the digital quadruplets demonstrate the effectiveness of the proposed framework.

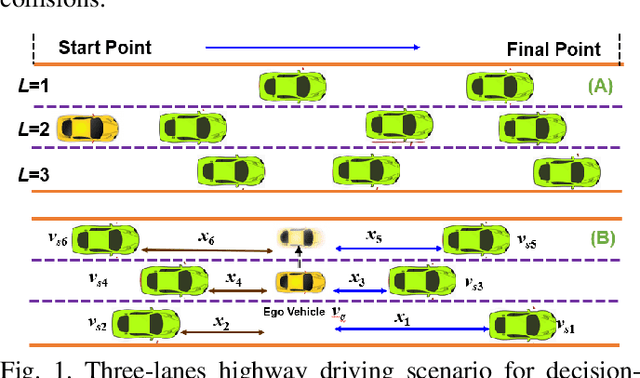

Decision-making Strategy on Highway for Autonomous Vehicles using Deep Reinforcement Learning

Jul 16, 2020

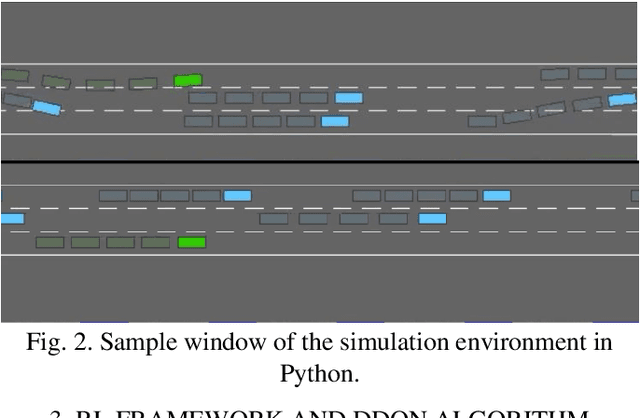

Abstract: Autonomous driving is a promising technology for reducing traffic accidents and improving driving efficiency. In this work, a deep reinforcement learning (DRL)-enabled decision-making policy is constructed for autonomous vehicles to address overtaking behaviors on the highway. First, a highway driving environment is established, wherein the ego vehicle aims to pass the surrounding vehicles with an efficient and safe maneuver. A hierarchical control framework is presented to control these vehicles, in which the upper level manages the driving decisions and the lower level supervises vehicle speed and acceleration. Then, a particular DRL method, the dueling deep Q-network (DDQN) algorithm, is applied to derive the highway decision-making strategy. The detailed computational procedures of the deep Q-network and DDQN algorithms are discussed and compared. Finally, a series of simulation experiments is conducted to evaluate the effectiveness of the proposed highway decision-making policy. The advantages of the proposed framework in convergence rate and control performance are demonstrated. Simulation results reveal that the DDQN-based overtaking policy can accomplish highway driving tasks efficiently and safely.
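A dueling network splits the Q-function into a state value and per-action advantages, then recombines them. The sketch below shows only that recombination step in its common mean-subtracted form; it is an illustration under assumed names and plain-list inputs, not the paper's network, and the abstract does not specify which aggregation the authors use.

```python
def dueling_q_values(state_value, advantages):
    """Combine the two streams as Q(s, a) = V(s) + A(s, a) - mean_a A(s, a)."""
    mean_advantage = sum(advantages) / len(advantages)
    return [state_value + a - mean_advantage for a in advantages]
```

Subtracting the mean advantage makes the decomposition identifiable: adding a constant to every advantage leaves the resulting Q-values unchanged.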

Transfer Deep Reinforcement Learning-enabled Energy Management Strategy for Hybrid Tracked Vehicle

Jul 16, 2020

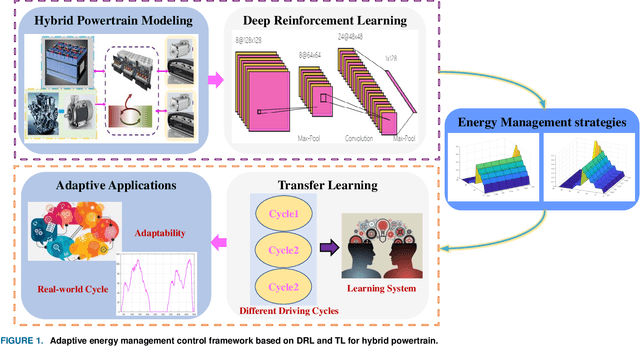

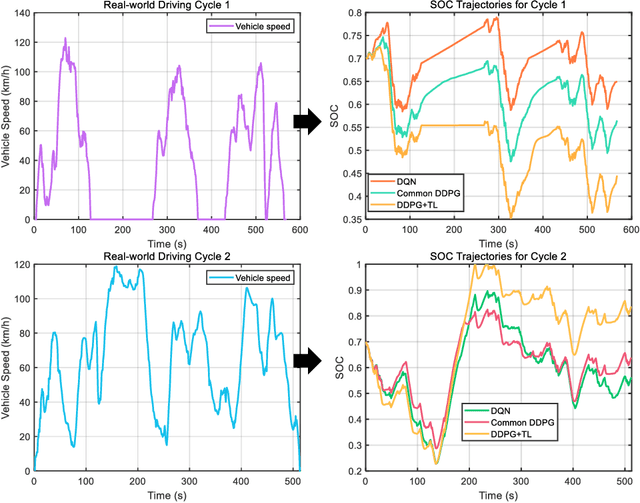

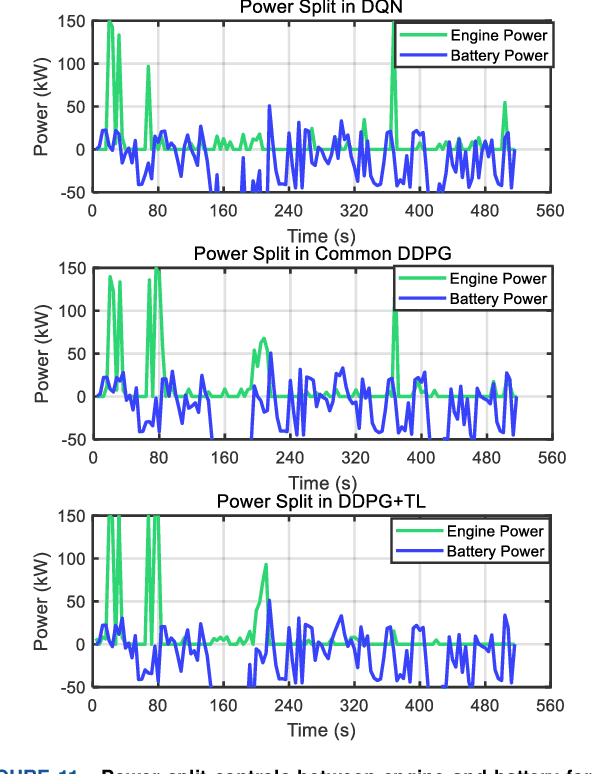

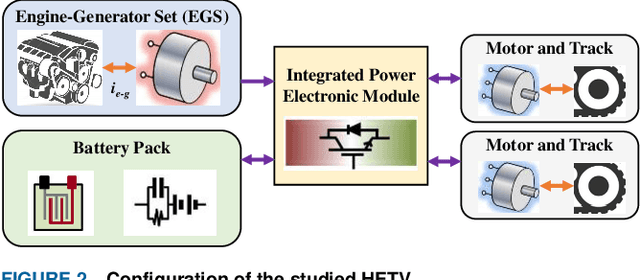

Abstract: This paper proposes an adaptive energy management strategy for hybrid electric vehicles by combining deep reinforcement learning (DRL) and transfer learning (TL). This work aims to address the long training time of DRL. First, an optimal control model of a hybrid tracked vehicle is built, wherein the detailed powertrain components are introduced. Then, a bi-level control framework is constructed to derive the energy management strategies (EMSs). The upper level applies the deep deterministic policy gradient (DDPG) algorithm for EMS training at different speed intervals. The lower level employs the TL method to transfer the pre-trained neural networks to a novel driving cycle. Finally, a series of experiments is executed to demonstrate the effectiveness of the presented control framework. The optimality and adaptability of the formulated EMS are demonstrated. The resulting DRL- and TL-enabled control policy is capable of enhancing energy efficiency and improving system performance.

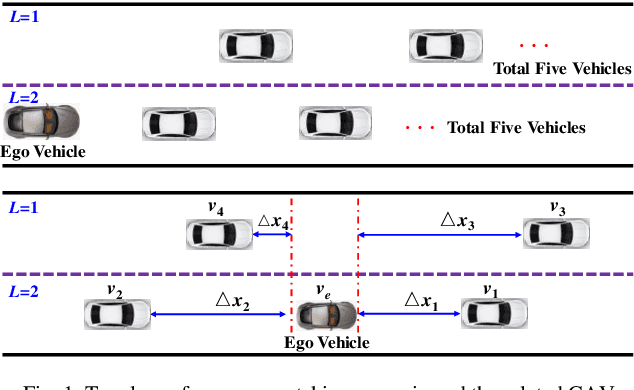

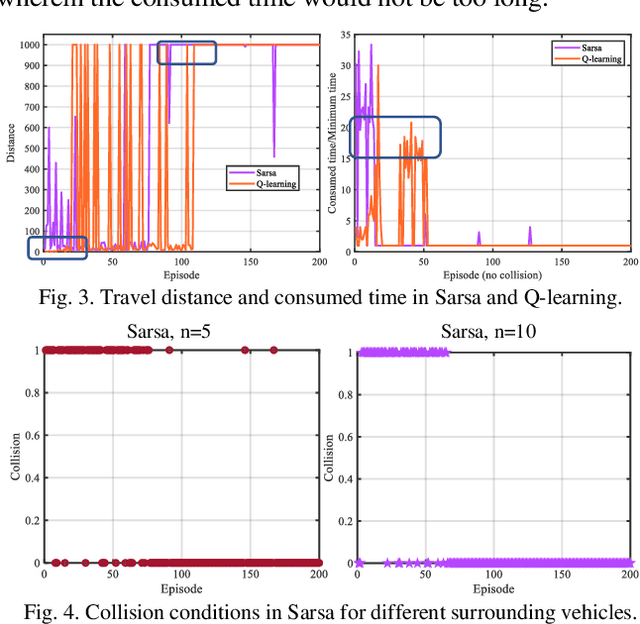

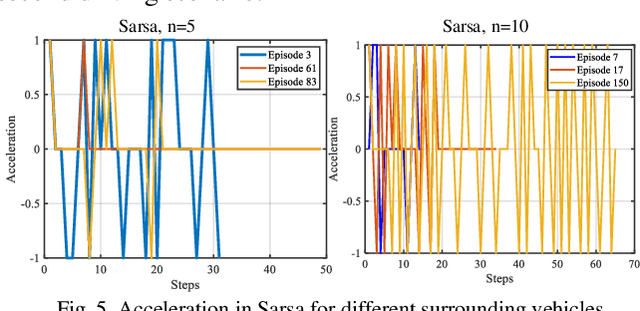

Reinforcement Learning-Enabled Decision-Making Strategies for a Vehicle-Cyber-Physical-System in Connected Environment

Jul 16, 2020

Abstract: As a typical vehicle-cyber-physical-system (V-CPS), connected automated vehicles have attracted increasing attention in recent years. This paper discusses the decision-making (DM) strategy for autonomous vehicles in a connected environment. First, the highway DM problem is formulated, wherein the vehicles can exchange information via wireless networking. Then, two classical reinforcement learning (RL) algorithms, Q-learning and Dyna, are leveraged to derive the DM strategies in a predefined driving scenario. Finally, the control performance of the derived DM policies in terms of safety and efficiency is analyzed. Furthermore, the inherent differences between the RL algorithms, as reflected in the DM strategies, are discussed.
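The difference between Q-learning and Dyna can be sketched in tabular form. Q-learning updates the value table only from real transitions, while Dyna additionally stores each observed transition in a model and replays it for extra planning updates. The code below is a minimal sketch assuming a deterministic learned model and hypothetical state and action labels; it is not the paper's highway environment or implementation.

```python
import random
from collections import defaultdict


def q_update(q, s, a, r, s_next, actions, alpha=0.5, gamma=0.9):
    """One tabular Q-learning update from a single transition."""
    best_next = max(q[(s_next, b)] for b in actions)
    q[(s, a)] += alpha * (r + gamma * best_next - q[(s, a)])


def dyna_q_step(q, model, s, a, r, s_next, actions,
                n_planning=5, rng=None, alpha=0.5, gamma=0.9):
    """Dyna-Q: a direct update, a model update, then n_planning simulated updates."""
    rng = rng or random.Random(0)
    q_update(q, s, a, r, s_next, actions, alpha, gamma)
    model[(s, a)] = (r, s_next)  # deterministic model: remember the last outcome
    for _ in range(n_planning):
        (ps, pa), (pr, ps_next) = rng.choice(list(model.items()))
        q_update(q, ps, pa, pr, ps_next, actions, alpha, gamma)


# Usage with hypothetical labels: one real transition plus one planning replay.
q_table, learned_model = defaultdict(float), {}
dyna_q_step(q_table, learned_model, 0, "keep_lane", 1.0, 1,
            ["keep_lane", "change_left"], n_planning=1)
```

Setting `n_planning=0` reduces `dyna_q_step` to plain Q-learning plus bookkeeping of the model.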

Human-like Energy Management Based on Deep Reinforcement Learning and Historical Driving Experiences

Jul 16, 2020

Abstract: The development of hybrid electric vehicles depends on an advanced and efficient energy management strategy (EMS). With online and real-time requirements in mind, this article presents a human-like energy management framework for hybrid electric vehicles based on deep reinforcement learning methods and collected historical driving data. The hybrid powertrain studied has a series-parallel topology, and its control-oriented model is established first. Then, a deep reinforcement learning (DRL) algorithm, the deep deterministic policy gradient (DDPG), is introduced. To enhance the derived power split controls in the DRL framework, the globally optimal control trajectories obtained from dynamic programming (DP) are regarded as expert knowledge to train the DDPG model. This operation guarantees the optimality of the proposed control architecture. Moreover, the collected historical driving data from experienced drivers are employed to replace the DP-based controls and thus construct the human-like EMSs. Finally, different categories of experiments are executed to evaluate the optimality and adaptability of the proposed human-like EMS. Improvements in fuel economy and convergence rate indicate the effectiveness of the constructed control structure.

Dueling Deep Q Network for Highway Decision Making in Autonomous Vehicles: A Case Study

Jul 16, 2020

Abstract: This work optimizes the highway decision-making strategy of autonomous vehicles by using deep reinforcement learning (DRL). First, the highway driving environment is built, wherein the ego vehicle, surrounding vehicles, and road lanes are included. Then, the overtaking decision-making problem of the automated vehicle is formulated as an optimal control problem, and the relevant control actions, state variables, and optimization objectives are elaborated. Finally, the deep Q-network is applied to derive the intelligent driving policies for the ego vehicle. Simulation results reveal that the ego vehicle can safely and efficiently accomplish the driving task after learning and training.

Transferred Energy Management Strategies for Hybrid Electric Vehicles Based on Driving Conditions Recognition

Jul 16, 2020

Abstract: Energy management strategies (EMSs) are the most significant components in hybrid electric vehicles (HEVs) because they determine the potential for energy conservation and emission reduction. This work presents a transferred EMS for a parallel HEV that combines the reinforcement learning method with driving condition recognition. First, the Markov decision process (MDP) and the transition probability matrix are utilized to differentiate the driving conditions. Then, reinforcement learning algorithms are formulated to achieve power split controls, in which Q-tables are tuned according to current driving situations. Finally, the proposed transferred framework is evaluated and validated in a parallel hybrid topology. Its advantages in computational efficiency and fuel economy are summarized and demonstrated.
