Zejian Deng

Generalizable Trajectory Prediction via Inverse Reinforcement Learning with Mamba-Graph Architecture

Jun 14, 2025

Abstract: Accurate driving behavior modeling is fundamental to safe and efficient trajectory prediction, yet it remains challenging in complex traffic scenarios. This paper presents a novel Inverse Reinforcement Learning (IRL) framework that captures human-like decision-making by inferring diverse reward functions, enabling robust cross-scenario adaptability. The learned reward function is used to maximize the likelihood of the output of an encoder-decoder architecture that combines Mamba blocks, for efficient long-sequence dependency modeling, with graph attention networks that encode spatial interactions among traffic agents. Comprehensive evaluations on urban intersections and roundabouts demonstrate that the proposed method not only outperforms various popular approaches in prediction accuracy but also achieves two times higher generalization performance on unseen scenarios than other IRL-based methods.

ARTEMIS: Autoregressive End-to-End Trajectory Planning with Mixture of Experts for Autonomous Driving

Apr 28, 2025

Abstract: This paper presents ARTEMIS, an end-to-end autonomous driving framework that combines autoregressive trajectory planning with Mixture-of-Experts (MoE). Traditional modular methods suffer from error propagation, while existing end-to-end models typically employ static one-shot inference paradigms that inadequately capture the dynamic changes of the environment. ARTEMIS takes a different approach: it generates trajectory waypoints sequentially, preserving critical temporal dependencies while dynamically routing scene-specific queries to specialized expert networks. This effectively relieves the trajectory quality degradation encountered when guidance information is ambiguous, and overcomes the inherent representational limitations of a single network architecture when processing diverse driving scenarios. Additionally, we use a lightweight batch reallocation strategy that significantly improves the training speed of the Mixture-of-Experts model. In experiments on the NAVSIM dataset, ARTEMIS exhibits highly competitive performance, achieving 87.0 PDMS and 83.1 EPDMS with a ResNet-34 backbone and demonstrating state-of-the-art performance on multiple metrics.
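As a rough illustration of what routing a query to specialized experts can look like, the sketch below uses softmax gating with top-k selection. This is a common generic MoE scheme and an assumption here, since the abstract does not specify the ARTEMIS gating rule. The names `route_top_k`, `moe_output`, the toy experts, and the gate logits are all hypothetical.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def route_top_k(gate_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts.

    The weights of the selected experts are renormalized to sum to 1.
    """
    probs = softmax(gate_logits)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]

def moe_output(x, experts, gate_logits, k=2):
    """Weighted sum of the outputs of the selected experts only."""
    return sum(w * experts[i](x) for i, w in route_top_k(gate_logits, k))

# Toy scalar "experts" (hypothetical stand-ins for expert networks).
experts = [lambda x: x + 1.0, lambda x: 2.0 * x, lambda x: -x]
gate_logits = [2.0, 0.5, 1.0]  # hypothetical gate scores for one query

routing = route_top_k(gate_logits, k=2)
y = moe_output(3.0, experts, gate_logits, k=2)
```

Only the selected experts are evaluated for a given query, which is what keeps the per-query cost below that of running every expert.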

CHARMS: Cognitive Hierarchical Agent with Reasoning and Motion Styles

Apr 03, 2025

Abstract: To address the low intelligence and simplistic vehicle behavior modeling of current autonomous driving simulation scenarios, this paper proposes the Cognitive Hierarchical Agent with Reasoning and Motion Styles (CHARMS). The model can reason about the behavior of other vehicles like a human driver and respond with different decision-making styles, thereby improving the intelligence and diversity of the surrounding vehicles in the driving scenario. Using Level-k behavioral game theory, the paper models the decision-making process of human drivers and employs deep reinforcement learning to train models with diverse decision styles, simulating different reasoning approaches and behavioral characteristics. Building on Poisson cognitive hierarchy theory, this paper also presents a novel driving scenario generation method. The method controls the proportion of vehicles with different driving styles in the scenario using Poisson and binomial distributions, thus generating controllable and diverse driving environments. Experimental results demonstrate that CHARMS not only exhibits superior decision-making capabilities as an ego vehicle, but also generates more complex and diverse driving scenarios when used for surrounding vehicles. We will release code for CHARMS at https://github.com/WUTAD-Wjy/CHARMS.
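One plausible reading of the scenario generation step, sketched under stated assumptions: reasoning levels follow a Poisson distribution truncated at a maximum level, and the number of vehicles at each level is drawn through successive conditional binomial draws. The abstract does not give the exact procedure, so the functions `poisson_level_probs` and `sample_level_counts` and the values of `tau`, `max_level`, and the vehicle count are hypothetical.

```python
import math
import random

def poisson_level_probs(tau, max_level):
    """Poisson(tau) probabilities for levels 0..max_level, renormalized to sum to 1."""
    weights = [math.exp(-tau) * tau**k / math.factorial(k)
               for k in range(max_level + 1)]
    total = sum(weights)
    return [w / total for w in weights]

def sample_level_counts(n_vehicles, probs, rng):
    """Draw how many vehicles reason at each level.

    Each level's count is a binomial draw over the vehicles not yet assigned,
    using the level's probability conditioned on the levels that remain.
    """
    counts = []
    remaining_n = n_vehicles
    remaining_p = 1.0
    for p in probs[:-1]:
        cond = min(1.0, max(0.0, p / remaining_p)) if remaining_p > 0 else 0.0
        # Binomial(remaining_n, cond) as a sum of Bernoulli trials.
        c = sum(1 for _ in range(remaining_n) if rng.random() < cond)
        counts.append(c)
        remaining_n -= c
        remaining_p -= p
    counts.append(remaining_n)  # the last level takes whatever is left
    return counts

probs = poisson_level_probs(tau=1.5, max_level=2)        # hypothetical settings
counts = sample_level_counts(20, probs, random.Random(0))
```

Raising `tau` shifts probability mass toward higher reasoning levels, which is one way the mix of vehicle types in a generated scenario could be controlled.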

Multi-Agent Trajectory Prediction with Difficulty-Guided Feature Enhancement Network

Jul 29, 2024

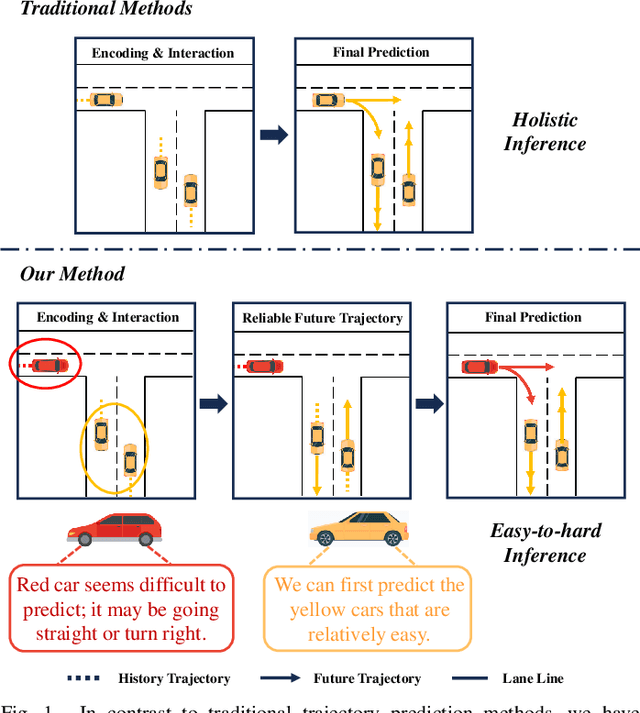

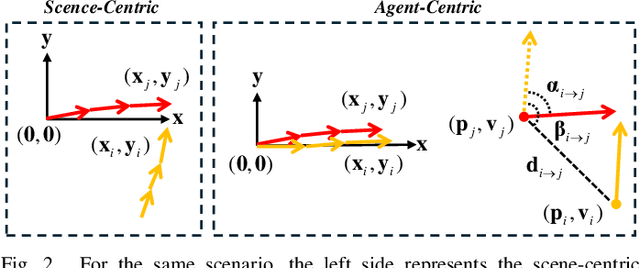

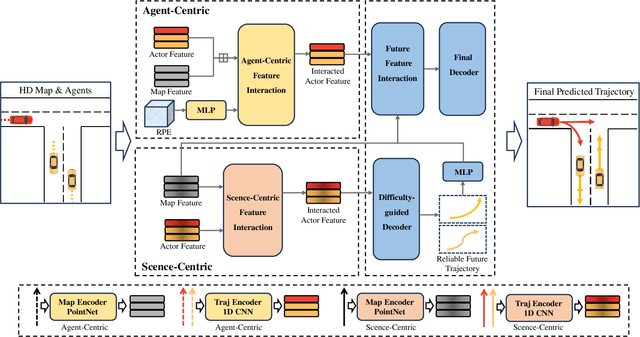

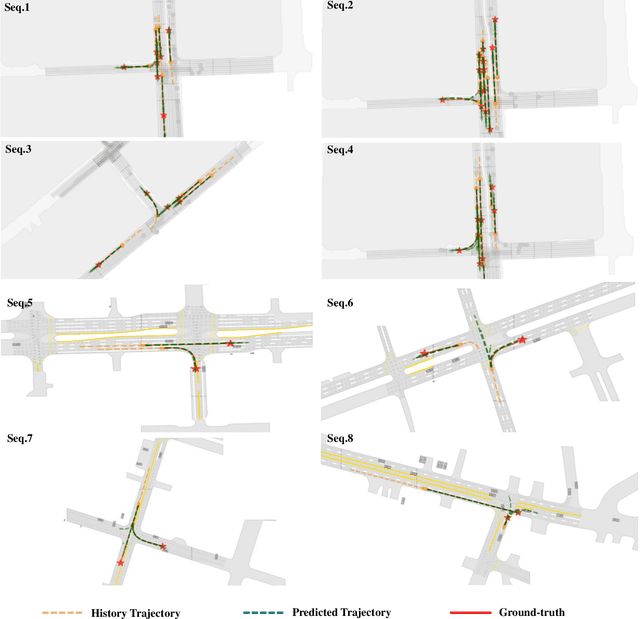

Abstract: Trajectory prediction is crucial for autonomous driving as it aims to forecast the future movements of traffic participants. Traditional methods usually perform holistic inference on the trajectories of agents, neglecting the differences in prediction difficulty among agents. This paper proposes a novel Difficulty-Guided Feature Enhancement Network (DGFNet), which leverages the prediction difficulty differences among agents for multi-agent trajectory prediction. Firstly, we employ spatio-temporal feature encoding and interaction to capture rich spatio-temporal features. Secondly, a difficulty-guided decoder is used to control the flow of future trajectories into subsequent modules, obtaining reliable future trajectories. Then, feature interaction and fusion are performed through the future feature interaction module. Finally, the fused agent features are fed into the final predictor to generate the predicted trajectory distributions for multiple participants. Experimental results demonstrate that our DGFNet achieves state-of-the-art performance on the Argoverse 1 & 2 motion forecasting benchmarks. Ablation studies further validate the effectiveness of each module. Moreover, compared with SOTA methods, our method balances trajectory prediction accuracy and real-time inference speed.

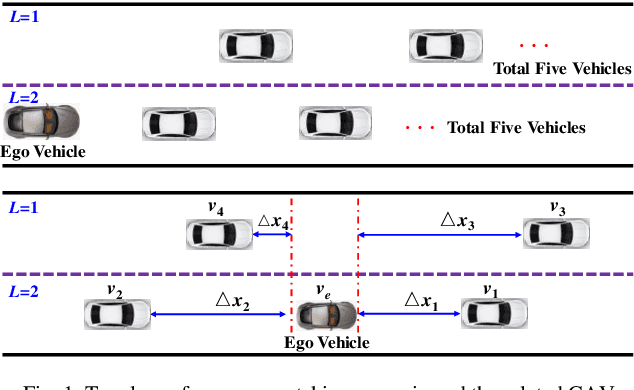

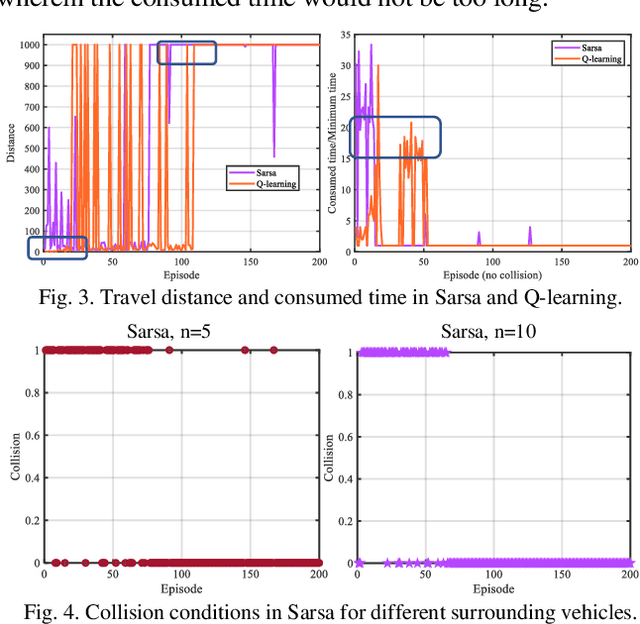

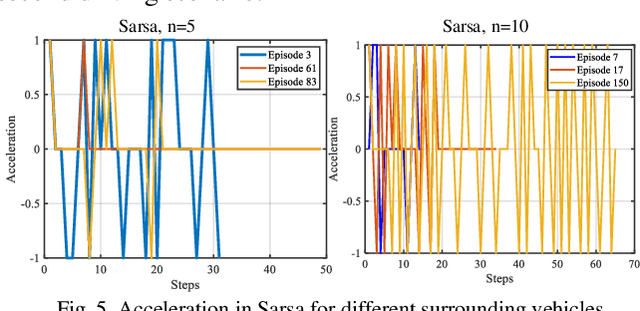

Reinforcement Learning-Enabled Decision-Making Strategies for a Vehicle-Cyber-Physical-System in Connected Environment

Jul 16, 2020

Abstract: As a typical vehicle-cyber-physical-system (V-CPS), connected automated vehicles have attracted increasing attention in recent years. This paper discusses the decision-making (DM) strategy for autonomous vehicles in a connected environment. First, the highway DM problem is formulated, wherein the vehicles can exchange information via wireless networking. Then, two classical reinforcement learning (RL) algorithms, Q-learning and Dyna, are leveraged to derive the DM strategies in a predefined driving scenario. Finally, the control performance of the derived DM policies is analyzed in terms of safety and efficiency. Furthermore, the inherent differences between the two RL algorithms, as reflected in the resulting DM strategies, are discussed.
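For readers unfamiliar with Q-learning, a minimal tabular sketch of its standard update rule follows. The state labels, the action names, the reward, and the values of `alpha` and `gamma` are hypothetical placeholders and are not taken from the paper.

```python
from collections import defaultdict

def q_learning_update(q, state, action, reward, next_state, actions,
                      alpha=0.1, gamma=0.9):
    """Apply one Q-learning step and return the updated Q(state, action).

    Q(s, a) <- Q(s, a) + alpha * (r + gamma * max_a' Q(s', a') - Q(s, a))
    """
    best_next = max(q[(next_state, a)] for a in actions)
    td_target = reward + gamma * best_next
    q[(state, action)] += alpha * (td_target - q[(state, action)])
    return q[(state, action)]

# Hypothetical discrete highway actions.
ACTIONS = ("keep_lane", "change_left", "change_right")

q = defaultdict(float)  # unseen (state, action) pairs start at 0.0
new_value = q_learning_update(q, "s0", "keep_lane", 1.0, "s1", ACTIONS)
```

Dyna keeps the same update but additionally replays transitions simulated from a learned model of the environment, so it performs extra planning updates between real interactions.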
