Adaptive Energy Management for Real Driving Conditions via Transfer Reinforcement Learning


Jul 24, 2020

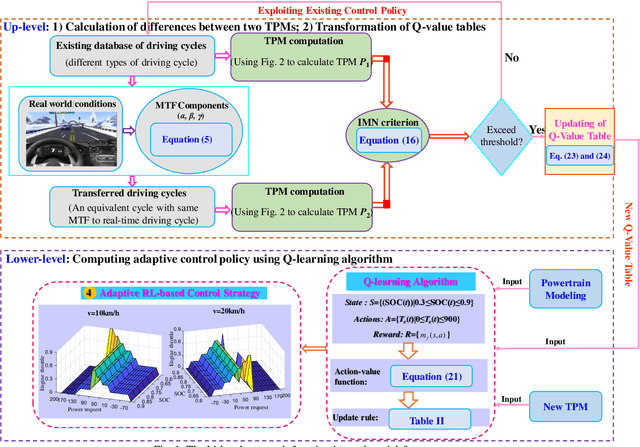

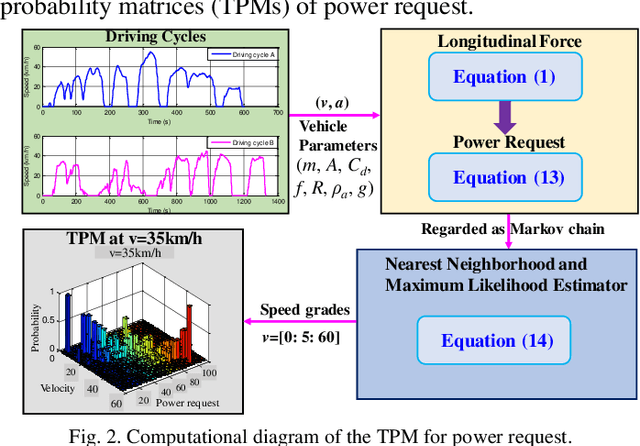

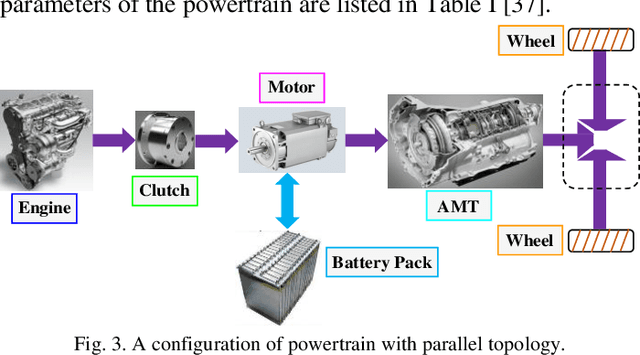

This article proposes an adaptive energy management approach based on transfer reinforcement learning (RL) for a hybrid electric vehicle (HEV) with a parallel topology. The approach is bi-level. The upper level characterizes how to transform the Q-value tables in the RL framework via driving cycle transformation (DCT). Specifically, transition probability matrices (TPMs) of the power request are computed for different driving cycles, and the induced matrix norm (IMN) is employed as the criterion to quantify the differences between them and to determine when the control strategy should be altered. The lower level determines how to set the corresponding control strategies from the transformed Q-value tables and TPMs using a model-free RL algorithm. Numerical tests show that the transfer performance can be tuned through the IMN value and that the transfer RL controller achieves higher fuel economy. The comparison demonstrates that the proposed strategy outperforms the conventional RL approach in both computation speed and control performance.
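The upper-level comparison described above can be illustrated with a minimal sketch. It is not the authors' implementation: the function names `transition_matrix` and `induced_norm`, the uniform binning of the power request, the uniform fallback for unvisited states, and the choice of the 2-norm are all assumptions made for illustration, since the abstract does not specify them.

```python
import numpy as np

def transition_matrix(power, n_bins, p_min, p_max):
    """Estimate a TPM by counting transitions between power-request bins.

    Assumption: power request is discretized into n_bins uniform bins on
    [p_min, p_max]; values outside the range fall into the end bins.
    """
    edges = np.linspace(p_min, p_max, n_bins + 1)
    # Interior edges map each sample to a bin index in 0..n_bins-1.
    idx = np.digitize(power, edges[1:-1])
    counts = np.zeros((n_bins, n_bins))
    for i, j in zip(idx[:-1], idx[1:]):
        counts[i, j] += 1
    row_sums = counts.sum(axis=1, keepdims=True)
    safe = np.where(row_sums == 0, 1, row_sums)
    # Assumption: states never visited get a uniform row.
    return np.where(row_sums > 0, counts / safe, 1.0 / n_bins)

def induced_norm(tpm_a, tpm_b, order=2):
    """Induced matrix norm of the difference between two TPMs.

    order=2 is the largest singular value; 1 and np.inf give the
    maximum absolute column sum and row sum, respectively.
    """
    return np.linalg.norm(tpm_a - tpm_b, ord=order)

# Two toy power-request traces standing in for two driving cycles.
cycle_a = np.array([0.0, 1.0, 0.0, 1.0, 0.0])
cycle_b = np.array([0.0, 0.0, 0.0, 0.0])
tpm_a = transition_matrix(cycle_a, n_bins=2, p_min=0.0, p_max=1.0)
tpm_b = transition_matrix(cycle_b, n_bins=2, p_min=0.0, p_max=1.0)
imn = induced_norm(tpm_a, tpm_b)
```

A larger `imn` indicates that the two cycles differ more in their power-request statistics. In a scheme like the one the abstract outlines, such a value could be compared against a tuning threshold to decide whether the existing Q-value table is reused or updated; the threshold itself would be a design parameter not given here.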
