Bing Huang

Proactive Detection of Physical Inter-rule Vulnerabilities in IoT Services Using a Deep Learning Approach

Jun 06, 2024

Abstract: Emerging Internet of Things (IoT) platforms provide sophisticated capabilities to automate IoT services by enabling occupants to create trigger-action rules. Multiple trigger-action rules can physically interact with each other via shared environment channels, such as temperature, humidity, and illumination. We refer to inter-rule interactions via shared environment channels as a physical inter-rule vulnerability. Attackers can exploit such a vulnerability to launch attacks against IoT systems. We propose a new framework that uses a deep learning approach to proactively discover possible physical inter-rule interactions from user requirement specifications (i.e., descriptions). Specifically, we use the Transformer model to generate trigger-action rules from their associated descriptions. We discover two types of physical inter-rule vulnerabilities and determine the associated environment channels using natural language processing (NLP) tools. Given the extracted trigger-action rules and associated environment channels, we propose an approach to identify hidden physical inter-rule vulnerabilities among them. Our experiment on 27,983 IFTTT-style rules shows that the Transformer can extract trigger-action rules from descriptions with 95.22% accuracy. We also validate the effectiveness of our approach on 60 official SmartThings IoT apps and discover 99 possible physical inter-rule vulnerabilities.
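
The abstract does not spell out how interactions are identified once rules and channels have been extracted. A minimal sketch, assuming each rule carries one trigger channel and a set of channels its action changes, flags ordered pairs in which one rule's action touches the channel another rule's trigger observes. The `Rule` schema, the function name, and the example rules are hypothetical; in the described framework the channel labels would come from the NLP step rather than being written by hand.

```python
from dataclasses import dataclass
from itertools import permutations
from typing import FrozenSet, List, Optional, Tuple


@dataclass(frozen=True)
class Rule:
    """A trigger-action rule annotated with channels (hypothetical schema)."""
    name: str
    trigger_channel: Optional[str]   # channel the trigger observes, if any
    action_channels: FrozenSet[str]  # environment channels the action physically changes


def find_physical_interactions(rules: List[Rule]) -> List[Tuple[str, str, str]]:
    """Return (source, target, channel) triples in which the source rule's action
    changes a channel that the target rule's trigger observes."""
    found = []
    for src, dst in permutations(rules, 2):  # ordered pairs, self-pairs excluded
        if dst.trigger_channel is not None and dst.trigger_channel in src.action_channels:
            found.append((src.name, dst.name, dst.trigger_channel))
    return found


rules = [
    Rule("heater_on_when_user_arrives", "presence", frozenset({"temperature"})),
    Rule("open_window_when_hot", "temperature", frozenset({"temperature", "humidity"})),
    Rule("lights_on_when_dark", "illumination", frozenset({"illumination"})),
]
for source, target, channel in find_physical_interactions(rules):
    print(f"{source} -> {target} via {channel}")
```

Matching is exact string equality on channel names, which is why a consistent channel vocabulary from the extraction step matters for a check of this kind.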

Conflict Detection in IoT-based Smart Homes

Jul 28, 2021

Abstract: We propose a novel framework that detects conflicts in IoT-based smart homes. Conflicts may arise during interactions between the resident and IoT services in smart homes. We propose a generic knowledge graph to represent the relations between IoT services and environment entities. We also profile the generic knowledge graph for a specific smart home setting based on context information. We propose a conflict taxonomy to capture different types of conflicts in a single-resident smart home setting. A conflict detection algorithm is proposed to identify potential conflicts using the profiled knowledge graph. We conduct a set of experiments on real and synthesized datasets to validate the effectiveness and efficiency of our proposed approach.
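
As an illustration of the kind of check a profiled knowledge graph enables, the sketch below encodes service-to-entity edges with an effect direction and flags concurrently active services that push the same environment entity in opposite directions. This is one hypothetical conflict type chosen for illustration; the paper's taxonomy and algorithm are not reproduced here, and the `EFFECTS` table and function name are assumptions.

```python
from itertools import combinations
from typing import Dict, Iterable, List, Tuple

# Hypothetical knowledge-graph edges: service -> {environment entity: effect},
# where +1 means the service raises the entity's level and -1 lowers it.
EFFECTS: Dict[str, Dict[str, int]] = {
    "heater": {"temperature": +1},
    "air_conditioner": {"temperature": -1, "humidity": -1},
    "humidifier": {"humidity": +1},
    "lamp": {"illumination": +1},
}


def detect_conflicts(active_services: Iterable[str],
                     effects: Dict[str, Dict[str, int]] = EFFECTS) -> List[Tuple[str, str, str]]:
    """Flag pairs of concurrently active services that push the same
    environment entity in opposite directions."""
    conflicts = []
    for a, b in combinations(sorted(active_services), 2):
        # Entities both services act on; unknown services contribute no edges.
        shared = effects.get(a, {}).keys() & effects.get(b, {}).keys()
        for entity in sorted(shared):
            if effects[a][entity] * effects[b][entity] < 0:
                conflicts.append((a, b, entity))
    return conflicts


for a, b, entity in detect_conflicts({"heater", "air_conditioner", "humidifier", "lamp"}):
    print(f"conflict: {a} vs {b} on {entity}")
```

Profiling for a specific home could be approximated here by restricting `effects` to the services actually installed there.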

Decision-making at Unsignalized Intersection for Autonomous Vehicles: Left-turn Maneuver with Deep Reinforcement Learning

Aug 14, 2020

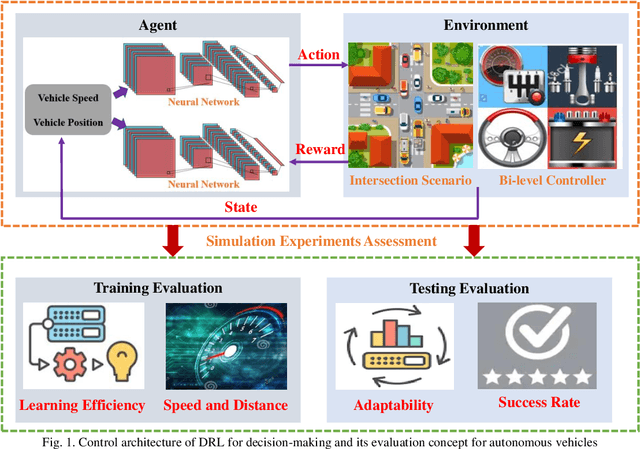

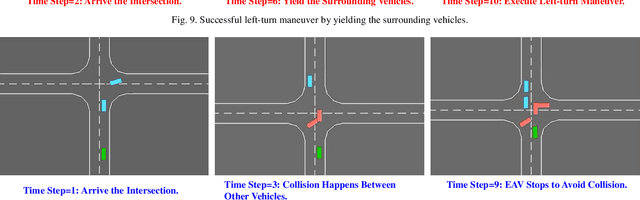

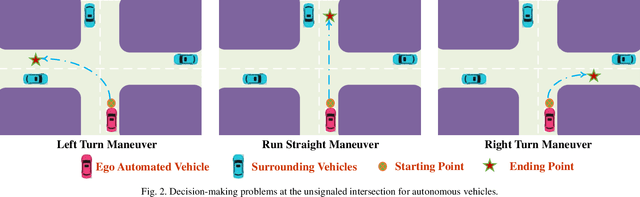

Abstract: A decision-making module enables autonomous vehicles to select appropriate maneuvers in complex urban environments, especially at intersections. This work proposes a deep reinforcement learning (DRL)-based left-turn decision-making framework for autonomous vehicles at unsignalized intersections. The objective of the studied automated vehicle is to make an efficient and safe left-turn maneuver at a four-way unsignalized intersection. The DRL methods used are deep Q-learning (DQL) and double DQL. Simulation results indicate that the presented decision-making strategy can effectively reduce the collision rate and improve transport efficiency. This work also shows that the constructed left-turn control structure has great potential for real-time application.
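
The two methods named above differ mainly in how the bootstrap target is formed. In the standard formulation, deep Q-learning takes the maximum of the target network's next-state Q-values, while double DQL lets the online network choose the action and the target network score it. The sketch below shows only the target computation on plain lists; the action labels and Q-values are hypothetical, not taken from the paper.

```python
from typing import Sequence


def dql_target(reward: float, next_q_target: Sequence[float], gamma: float, done: bool) -> float:
    """Deep Q-learning target: the target network both selects and evaluates the next action."""
    if done:
        return reward
    return reward + gamma * max(next_q_target)


def double_dql_target(reward: float, next_q_online: Sequence[float],
                      next_q_target: Sequence[float], gamma: float, done: bool) -> float:
    """Double DQL target: the online network selects the next action and the
    target network evaluates it, which reduces overestimation bias."""
    if done:
        return reward
    best = max(range(len(next_q_online)), key=lambda a: next_q_online[a])
    return reward + gamma * next_q_target[best]


# Hypothetical next-state Q-values for three actions, e.g. (go, yield, stop).
online = [1.0, 3.0, 2.0]
target = [4.0, 2.5, 1.0]
print(dql_target(1.0, target, gamma=0.5, done=False))                 # 3.0
print(double_dql_target(1.0, online, target, gamma=0.5, done=False))  # 2.25
```

Here plain DQL bootstraps from the target network's optimistic 4.0, while double DQL uses 2.5, the target network's estimate for the action the online network prefers.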

A Comparative Analysis of Deep Reinforcement Learning-enabled Freeway Decision-making for Automated Vehicles

Aug 04, 2020

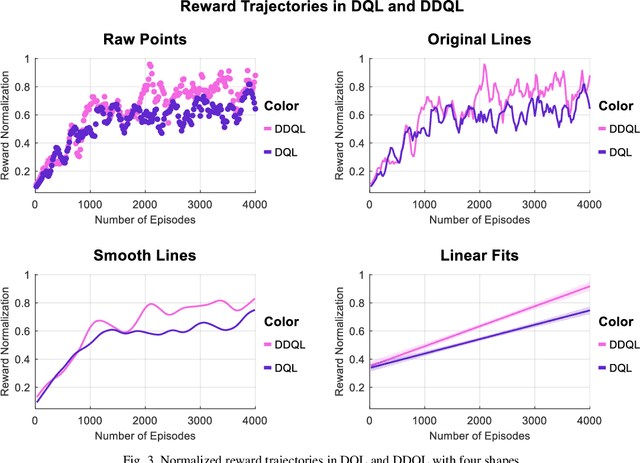

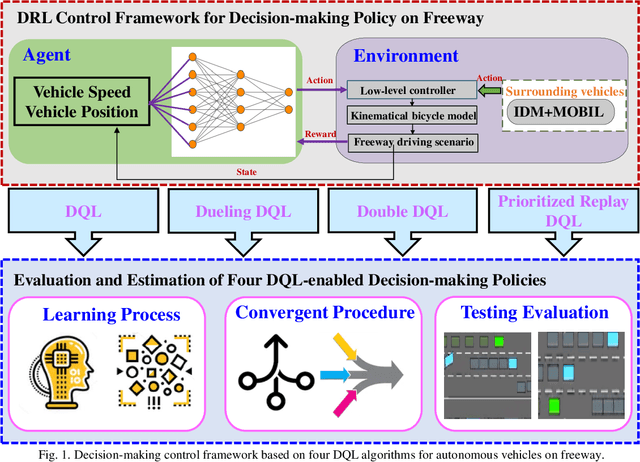

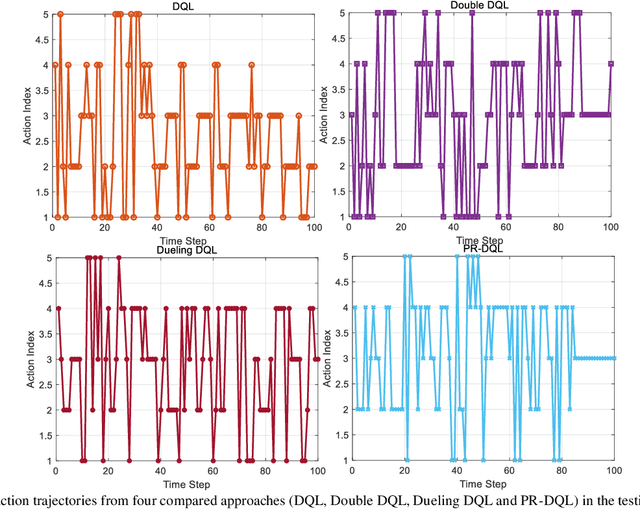

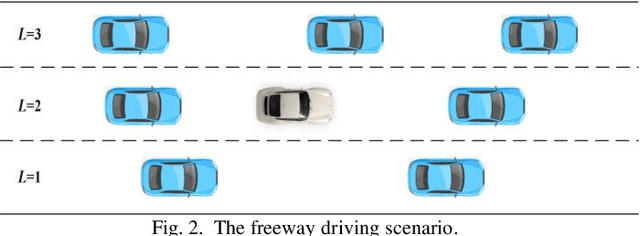

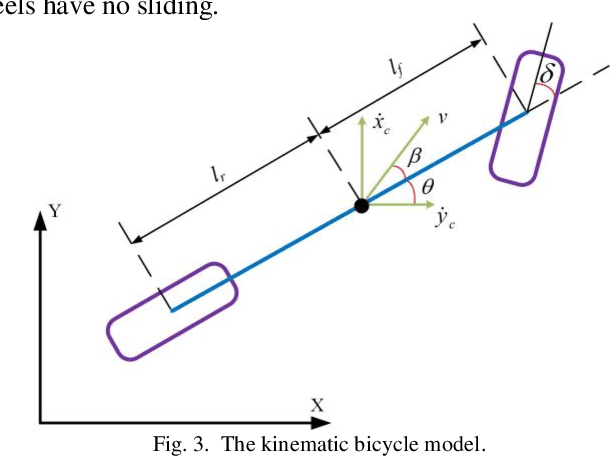

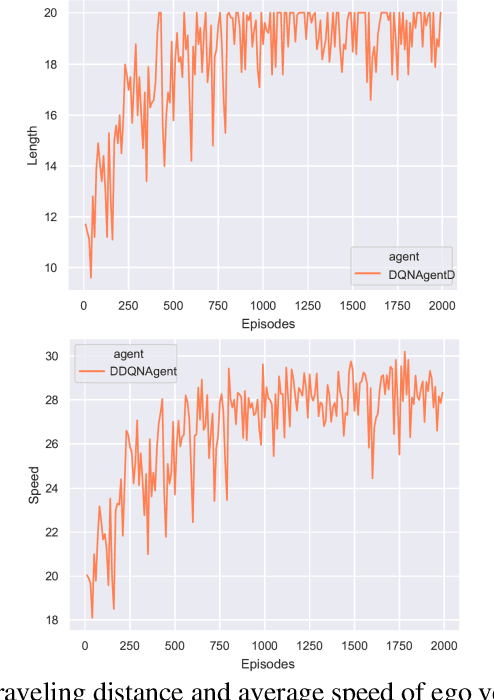

Abstract: Deep reinforcement learning (DRL) is becoming a prevalent and powerful methodology for addressing artificial intelligence problems. Owing to its tremendous potential for self-learning and self-improvement, DRL is widely applied in many research fields. This article conducts a comprehensive comparison of multiple DRL approaches on the freeway decision-making problem for autonomous vehicles. These techniques include the common deep Q-learning (DQL), double DQL (DDQL), dueling DQL, and prioritized replay DQL. First, the reinforcement learning (RL) framework is introduced. Building on it, the implementations of the above-mentioned DRL methods are established mathematically. Then, the freeway driving scenario for the automated vehicles is constructed, wherein the decision-making problem is transformed into a control optimization problem. Finally, a series of simulation experiments is conducted to evaluate the control performance of these DRL-enabled decision-making strategies. A comparative analysis relates the autonomous driving results to the learning characteristics of these DRL techniques.
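
Of the four variants compared, prioritized replay changes how training samples are drawn. A minimal sketch of the proportional variant, assuming a TD error is already stored for each transition: priorities are `p_i = |delta_i| + eps`, sampling probabilities are `P(i) = p_i**alpha / sum_k p_k**alpha`, and importance-sampling weights `(N * P(i))**-beta` are scaled so the largest is 1. The default values of `alpha`, `beta`, and `eps` are illustrative, not the settings used in the article, and the function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple


def priorities_to_probs(td_errors: Sequence[float], alpha: float = 0.6,
                        eps: float = 1e-6) -> List[float]:
    """Proportional prioritization: P(i) = p_i**alpha / sum_k p_k**alpha, p_i = |delta_i| + eps."""
    scaled = [(abs(d) + eps) ** alpha for d in td_errors]
    total = sum(scaled)
    return [s / total for s in scaled]


def importance_weights(probs: Sequence[float], beta: float = 0.4) -> List[float]:
    """Importance-sampling weights w_i = (N * P(i))**-beta, scaled so the largest is 1."""
    n = len(probs)
    raw = [(n * p) ** (-beta) for p in probs]
    largest = max(raw)
    return [w / largest for w in raw]


def sample_batch(td_errors: Sequence[float], batch_size: int, alpha: float = 0.6,
                 beta: float = 0.4,
                 rng: Optional[random.Random] = None) -> Tuple[List[int], List[float]]:
    """Draw indices (with replacement) with probability P(i); return them with their IS weights."""
    if rng is None:
        rng = random.Random()
    probs = priorities_to_probs(td_errors, alpha)
    indices = rng.choices(range(len(probs)), weights=probs, k=batch_size)
    weights = importance_weights(probs, beta)
    return indices, [weights[i] for i in indices]


indices, weights = sample_batch([0.1, 2.0, 0.5, 0.0], batch_size=3, rng=random.Random(0))
print(indices, weights)
```

Transitions with large TD errors are replayed more often, and the weights down-scale their updates to correct the bias that non-uniform sampling introduces.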

Decision-making Strategy on Highway for Autonomous Vehicles using Deep Reinforcement Learning

Jul 16, 2020

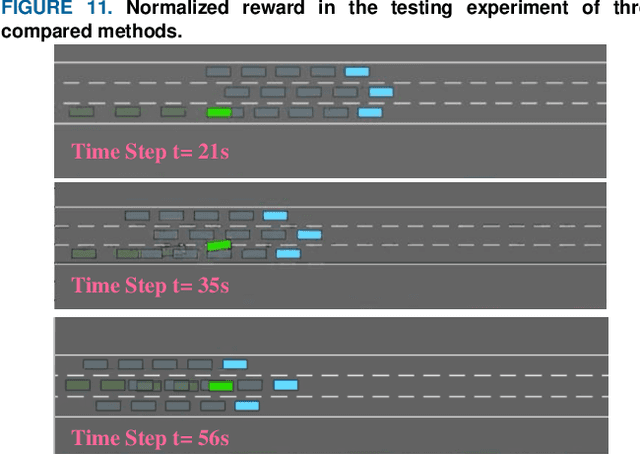

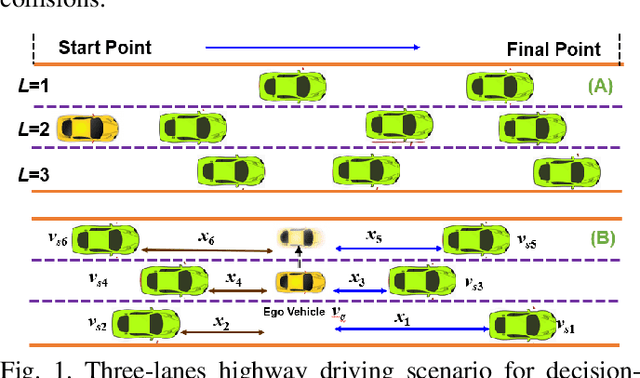

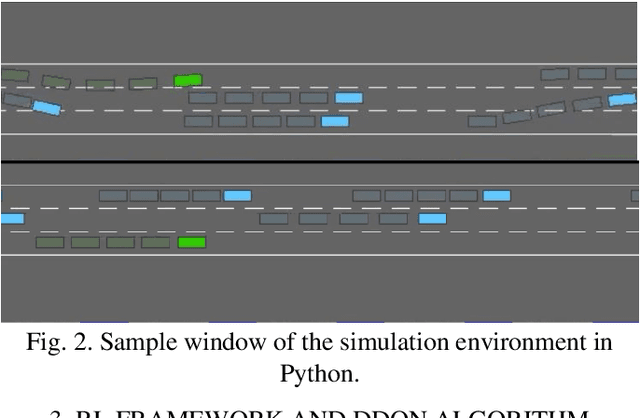

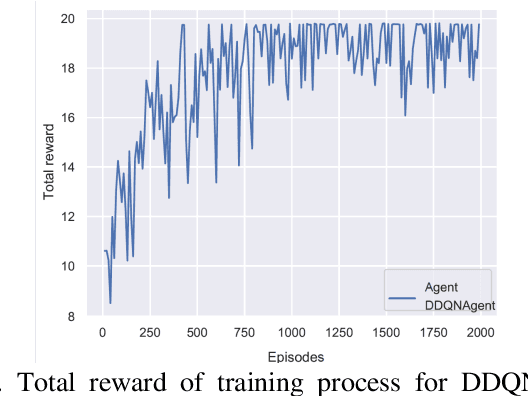

Abstract: Autonomous driving is a promising technology for reducing traffic accidents and improving driving efficiency. In this work, a deep reinforcement learning (DRL)-enabled decision-making policy is constructed for autonomous vehicles to handle overtaking on the highway. First, a highway driving environment is constructed, wherein the ego vehicle aims to pass the surrounding vehicles with an efficient and safe maneuver. A hierarchical control framework is presented to control these vehicles: the upper level manages the driving decisions, and the lower level regulates vehicle speed and acceleration. Then, a specific DRL method, the dueling deep Q-network (DDQN) algorithm, is applied to derive the highway decision-making strategy. The detailed computational procedures of the deep Q-network and DDQN algorithms are discussed and compared. Finally, a series of simulation experiments is conducted to evaluate the effectiveness of the proposed highway decision-making policy. The advantages of the proposed framework in convergence rate and control performance are demonstrated. Simulation results reveal that the DDQN-based overtaking policy can accomplish highway driving tasks efficiently and safely.
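
The dueling architecture splits the network head into a state-value stream V(s) and an advantage stream A(s, a), then recombines them into Q-values. The sketch below shows only that recombination step, using the standard mean-subtracted form; the action names and stream outputs are hypothetical, and the network layers themselves are omitted.

```python
from typing import List, Sequence


def dueling_q_values(value: float, advantages: Sequence[float]) -> List[float]:
    """Combine the dueling streams into Q-values:
    Q(s, a) = V(s) + (A(s, a) - mean over a' of A(s, a'))."""
    mean_adv = sum(advantages) / len(advantages)
    return [value + a - mean_adv for a in advantages]


def greedy_action(q_values: Sequence[float]) -> int:
    """Index of the action with the largest Q-value."""
    return max(range(len(q_values)), key=lambda i: q_values[i])


# Hypothetical stream outputs for actions (keep_lane, overtake_left, overtake_right).
q = dueling_q_values(2.0, [1.0, -1.0, 3.0])
print(q)                 # [2.0, 0.0, 4.0]
print(greedy_action(q))  # 2
```

Subtracting the mean advantage pins the average Q-value to V(s), so the two streams cannot drift by offsetting constants; the greedy action is unchanged by the subtraction.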

Dueling Deep Q Network for Highway Decision Making in Autonomous Vehicles: A Case Study

Jul 16, 2020

Abstract: This work optimizes the highway decision-making strategy of autonomous vehicles using deep reinforcement learning (DRL). First, the highway driving environment is built, including the ego vehicle, surrounding vehicles, and road lanes. Then, the overtaking decision-making problem of the automated vehicle is formulated as an optimal control problem, and the relevant control actions, state variables, and optimization objectives are elaborated. Finally, the deep Q-network is applied to derive intelligent driving policies for the ego vehicle. Simulation results show that, after training, the ego vehicle can accomplish the driving task safely and efficiently.

Service mining for Internet of Things

Jun 09, 2020

Abstract: A service mining framework is proposed that enables the bottom-up discovery of interesting relationships among Internet of Things services. The service relationships are modeled based on spatio-temporal aspects, environment, people, and operation. An ontology-based service model is proposed to describe services. We present a set of metrics to evaluate the interestingness of discovered service relationships. Analytical and simulation results are presented to show the effectiveness of the proposed evaluation measures.
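
The abstract does not list its interestingness metrics. As a stand-in, the classic association-rule measures (support, confidence, lift) can score a candidate relationship between services, assuming invocation logs are grouped into spatio-temporal windows. The log format, service names, and functions below are hypothetical and should not be read as the paper's metrics.

```python
from typing import List, Set


def support(logs: List[Set[str]], items: Set[str]) -> float:
    """Fraction of windows in which every service in `items` was invoked."""
    return sum(1 for window in logs if items <= window) / len(logs)


def confidence(logs: List[Set[str]], antecedent: Set[str], consequent: Set[str]) -> float:
    """Estimated P(consequent | antecedent) over the windows."""
    s_a = support(logs, antecedent)
    return support(logs, antecedent | consequent) / s_a if s_a else 0.0


def lift(logs: List[Set[str]], antecedent: Set[str], consequent: Set[str]) -> float:
    """Confidence divided by the consequent's baseline support; > 1 suggests positive association."""
    s_c = support(logs, consequent)
    return confidence(logs, antecedent, consequent) / s_c if s_c else 0.0


# Hypothetical log: services invoked together within the same room and time window.
logs = [
    {"coffee_machine", "kitchen_light"},
    {"coffee_machine", "kitchen_light", "radio"},
    {"kitchen_light"},
    {"radio"},
]
a, c = {"coffee_machine"}, {"kitchen_light"}
print(support(logs, a | c), confidence(logs, a, c), round(lift(logs, a, c), 3))  # 0.5 1.0 1.333
```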

Cognitive Amplifier for Internet of Things

May 14, 2020

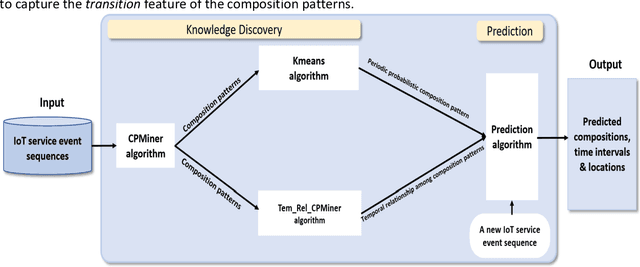

Abstract: We present a Cognitive Amplifier framework that augments things that are part of an IoT with cognitive capabilities in order to improve life convenience. Specifically, the Cognitive Amplifier consists of knowledge discovery and prediction components. The knowledge discovery component focuses on finding natural activity patterns, considering their regularity, variations, and transitions in real-life settings. The prediction component uses the discovered knowledge as the basis for inferring what the next activity will be, and when and where it will happen. Experimental results on real-life data validate the feasibility and applicability of the proposed approach.
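
The abstract does not describe the prediction model. A simple stand-in, assuming activities are logged in order together with a location, is a first-order Markov predictor: count which activity follows which, then report the most frequent successor and where it usually happens. This covers the "what" and "where" only; the activity names and functions are hypothetical.

```python
from collections import Counter, defaultdict
from typing import Dict, List, Optional, Tuple

Event = Tuple[str, str]  # (activity, location), in chronological order


def fit(events: List[Event]):
    """Count activity-to-activity transitions and where each activity occurs."""
    transitions: Dict[str, Counter] = defaultdict(Counter)
    locations: Dict[str, Counter] = defaultdict(Counter)
    for activity, location in events:
        locations[activity][location] += 1
    for (prev, _), (nxt, _) in zip(events, events[1:]):
        transitions[prev][nxt] += 1
    return transitions, locations


def predict_next(current: str, transitions, locations) -> Optional[Tuple[str, str]]:
    """Most frequent successor of `current` and that activity's most common location."""
    successors = transitions.get(current)  # .get avoids inserting unseen keys
    if not successors:
        return None
    activity, _ = successors.most_common(1)[0]
    location, _ = locations[activity].most_common(1)[0]
    return activity, location


events = [
    ("wake_up", "bedroom"), ("shower", "bathroom"), ("breakfast", "kitchen"),
    ("leave_home", "hallway"), ("wake_up", "bedroom"), ("shower", "bathroom"),
    ("breakfast", "kitchen"), ("wake_up", "bedroom"), ("breakfast", "kitchen"),
]
transitions, locations = fit(events)
print(predict_next("wake_up", transitions, locations))  # ('shower', 'bathroom')
```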

Enabling Edge Cloud Intelligence for Activity Learning in Smart Home

May 14, 2020

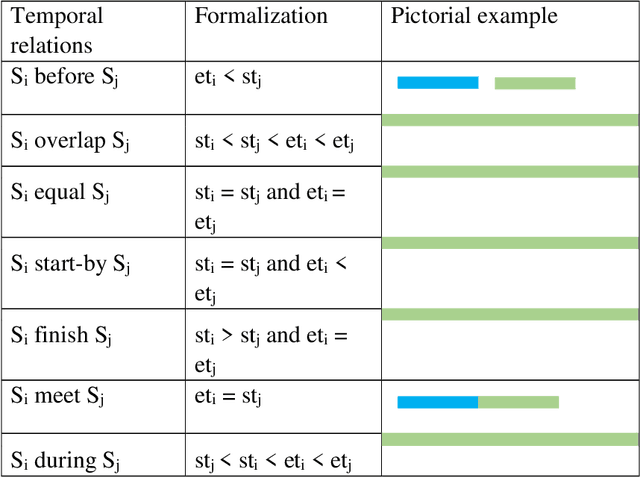

Abstract: We propose a novel activity learning framework based on an Edge Cloud architecture for recognizing and predicting human activities. Although activity recognition has been extensively studied, the temporal features that constitute an activity, which can provide useful insights for activity models, have not been exploited to their full potential by mining algorithms. In this paper, we use temporal features for activity recognition and prediction in a single smart home setting. We discover activity patterns and temporal relations, such as the order of activities, from real data to develop a prompting system. Real data collected from smart homes was used to validate the proposed method.
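
Temporal relations between activities, such as their order, are commonly expressed with Allen's interval algebra. The paper does not state that it uses it, so the following is an illustrative stand-in that classifies two activity intervals (start, end), assumed to satisfy start < end, into one of the 13 Allen relations. The times (minutes since midnight) and activity names are hypothetical.

```python
from typing import Tuple

Interval = Tuple[float, float]  # (start, end) with start < end


def allen_relation(a: Interval, b: Interval) -> str:
    """Return the Allen relation that holds from interval a to interval b."""
    a_start, a_end = a
    b_start, b_end = b
    if a_end < b_start:
        return "before"
    if a_end == b_start:
        return "meets"
    if b_end < a_start:
        return "after"
    if b_end == a_start:
        return "met-by"
    # From here on the intervals share a stretch of time of positive length.
    if a_start == b_start and a_end == b_end:
        return "equals"
    if a_start == b_start:
        return "starts" if a_end < b_end else "started-by"
    if a_end == b_end:
        return "finishes" if a_start > b_start else "finished-by"
    if b_start < a_start and a_end < b_end:
        return "during"
    if a_start < b_start and b_end < a_end:
        return "contains"
    return "overlaps" if a_start < b_start else "overlapped-by"


cooking, eating = (1080, 1110), (1110, 1140)  # 18:00-18:30, 18:30-19:00
tv, phone_call = (1140, 1260), (1200, 1230)
print(allen_relation(cooking, eating))  # meets
print(allen_relation(tv, phone_call))   # contains
print(allen_relation(phone_call, tv))   # during
```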
