Sungrack Yun

Steering Guidance for Personalized Text-to-Image Diffusion Models

Aug 01, 2025

Abstract:Personalizing text-to-image diffusion models is crucial for adapting the pre-trained models to specific target concepts, enabling diverse image generation. However, fine-tuning with few images introduces an inherent trade-off between aligning with the target distribution (e.g., subject fidelity) and preserving the broad knowledge of the original model (e.g., text editability). Existing sampling guidance methods, such as classifier-free guidance (CFG) and autoguidance (AG), fail to effectively guide the output toward a well-balanced space: CFG restricts the adaptation to the target distribution, while AG compromises text alignment. To address these limitations, we propose personalization guidance, a simple yet effective method leveraging an unlearned weak model conditioned on a null text prompt. Moreover, our method dynamically controls the extent of unlearning in the weak model through weight interpolation between the pre-trained and fine-tuned models during inference. Unlike existing guidance methods, which depend solely on guidance scales, our method explicitly steers the outputs toward a balanced latent space without additional computational overhead. Experimental results demonstrate that our proposed guidance can improve text alignment and target distribution fidelity, integrating seamlessly with various fine-tuning strategies.
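The two ingredients named in this abstract, weight interpolation and guidance against a weak model, can be pictured with the toy sketch below. It is not the authors' implementation: the linear `model` function, the parameter names `lam` and `scale`, and the CFG-style extrapolation formula are all assumptions made for illustration, and the null-prompt conditioning of the weak model is left out.

```python
def interpolate_weights(pretrained, finetuned, lam):
    """Blend two weight lists; lam=0 gives the pre-trained weights, lam=1 the fine-tuned ones."""
    return [(1.0 - lam) * p + lam * f for p, f in zip(pretrained, finetuned)]


def model(weights, x):
    """Hypothetical stand-in for a denoiser: a dot product of weights and input."""
    return sum(w * xi for w, xi in zip(weights, x))


def guided_output(strong_w, weak_w, x, scale):
    """CFG-style extrapolation away from the weak prediction (assumed form)."""
    strong = model(strong_w, x)
    weak = model(weak_w, x)
    return weak + scale * (strong - weak)


pretrained = [1.0, 0.0]
finetuned = [3.0, 2.0]
x = [1.0, 1.0]

# Weak model: the fine-tuned weights pulled halfway back toward the pre-trained ones.
weak_w = interpolate_weights(pretrained, finetuned, lam=0.5)
result = guided_output(finetuned, weak_w, x, scale=2.0)
```

In this sketch `lam` plays the role of the "extent of unlearning": lowering it moves the weak model closer to the pre-trained weights, which widens the gap that the guidance term extrapolates along. With `scale=1.0` the output reduces to the fine-tuned model's own prediction.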

MultiHuman-Testbench: Benchmarking Image Generation for Multiple Humans

Jun 25, 2025

Abstract:Generating images that contain multiple humans performing complex actions while preserving their facial identities is a significant challenge. A major contributing factor is the lack of a dedicated benchmark. To address this, we introduce MultiHuman-Testbench, a novel benchmark for rigorously evaluating generative models for multi-human generation. The benchmark comprises 1,800 samples, including carefully curated text prompts describing a range of simple to complex human actions. These prompts are matched with a total of 5,550 unique human face images, sampled uniformly to ensure diversity across age, ethnic background, and gender. Alongside captions, we provide human-selected pose conditioning images which accurately match the prompt. We propose a multi-faceted evaluation suite employing four key metrics to quantify face count, ID similarity, prompt alignment, and action detection. We conduct a thorough evaluation of a diverse set of models, including zero-shot approaches and training-based methods, with and without regional priors. We also propose novel techniques to incorporate image and region isolation using human segmentation and Hungarian matching, significantly improving ID similarity. Our proposed benchmark and key findings provide valuable insights and a standardized tool for advancing research in multi-human image generation.
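The matching step mentioned in this abstract pairs each generated face with one reference identity so that total similarity is maximized. The sketch below illustrates that assignment problem only. It uses an exhaustive search over permutations as a stand-in for the Hungarian algorithm (both return an optimal assignment, but the exhaustive version is practical only for a handful of faces), and the embeddings, function names, and cosine-similarity score are assumptions rather than details from the paper.

```python
from itertools import permutations


def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sum(x * x for x in a) ** 0.5
    nb = sum(y * y for y in b) ** 0.5
    return dot / (na * nb)


def best_assignment(generated, references):
    """Return (assignment, mean similarity).

    assignment[i] is the index of the reference matched to generated face i.
    Requires len(generated) <= len(references).
    """
    sim = [[cosine(g, r) for r in references] for g in generated]
    best_perm, best_total = None, float("-inf")
    for perm in permutations(range(len(references)), len(generated)):
        total = sum(sim[i][j] for i, j in enumerate(perm))
        if total > best_total:
            best_perm, best_total = perm, total
    return list(best_perm), best_total / len(generated)


# Hypothetical 2-D face embeddings; real ones would come from a face encoder.
references = [[1.0, 0.0], [0.0, 1.0]]
generated = [[0.1, 0.9], [0.9, 0.1]]
assignment, mean_sim = best_assignment(generated, references)
```

Here the first generated face is matched to the second reference and vice versa, so the score is not penalized merely because the faces appear in a different order than the references.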

Hollowed Net for On-Device Personalization of Text-to-Image Diffusion Models

Nov 02, 2024

Abstract:Recent advancements in text-to-image diffusion models have enabled the personalization of these models to generate custom images from textual prompts. This paper presents an efficient LoRA-based personalization approach for on-device subject-driven generation, where pre-trained diffusion models are fine-tuned with user-specific data on resource-constrained devices. Our method, termed Hollowed Net, enhances memory efficiency during fine-tuning by modifying the architecture of a diffusion U-Net to temporarily remove a fraction of its deep layers, creating a hollowed structure. This approach directly addresses on-device memory constraints and substantially reduces GPU memory requirements for training, in contrast to previous methods that primarily focus on minimizing training steps and reducing the number of parameters to update. Additionally, the personalized Hollowed Net can be transferred back into the original U-Net, enabling inference without additional memory overhead. Quantitative and qualitative analyses demonstrate that our approach not only reduces training memory to levels as low as those required for inference but also maintains or improves personalization performance compared to existing methods.

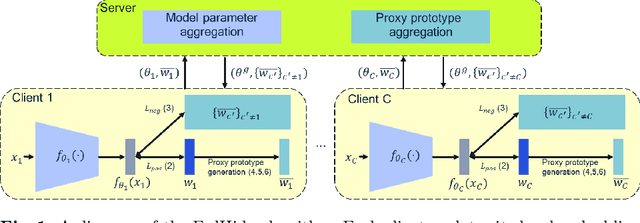

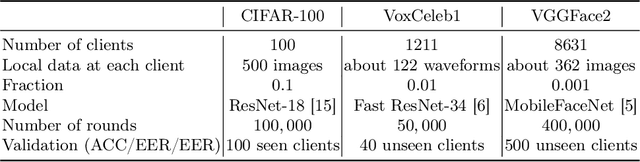

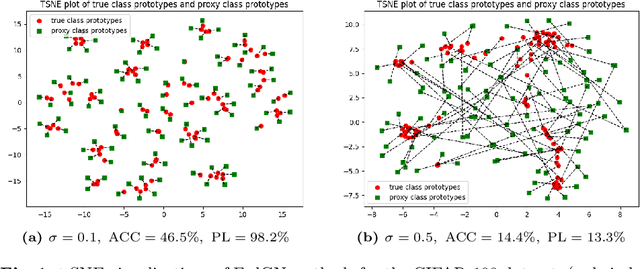

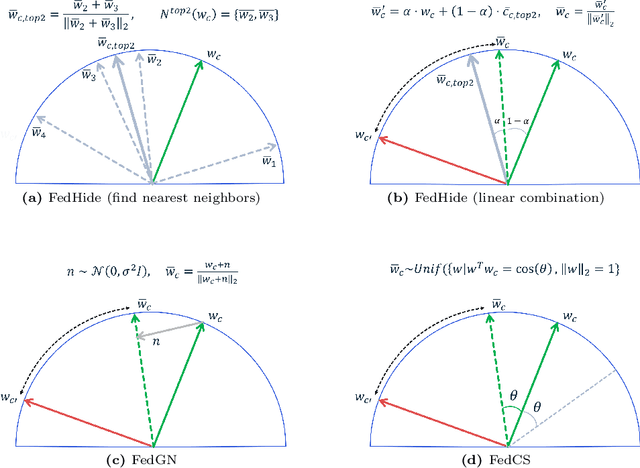

FedHide: Federated Learning by Hiding in the Neighbors

Sep 12, 2024

Abstract:We propose a prototype-based federated learning method designed for embedding networks in classification or verification tasks. Our focus is on scenarios where each client has data from a single class. The main challenge is to develop an embedding network that can distinguish between different classes while adhering to privacy constraints. Sharing true class prototypes with the server or other clients could potentially compromise sensitive information. To tackle this issue, we propose a proxy class prototype that will be shared among clients instead of the true class prototype. Our approach generates proxy class prototypes by linearly combining them with their nearest neighbors. This technique conceals the true class prototype while enabling clients to learn discriminative embedding networks. We compare our method to alternative techniques, such as adding random Gaussian noise and using random selection with cosine similarity constraints. Furthermore, we evaluate the robustness of our approach against gradient inversion attacks and introduce a measure for prototype leakage. This measure quantifies the extent of private information revealed when sharing the proposed proxy class prototype. Moreover, we provide a theoretical analysis of the convergence properties of our approach. Our proposed method for federated learning from scratch demonstrates its effectiveness through empirical results on three benchmark datasets: CIFAR-100, VoxCeleb1, and VGGFace2.
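As a rough illustration of "linearly combining with nearest neighbors", the sketch below mixes a true prototype with the mean of its `k` most similar candidate prototypes and re-normalizes the result. The mixing weight `alpha`, the cosine-similarity ranking, the final normalization, and the source of the candidate set are all assumptions here; the abstract does not specify them.

```python
def cosine(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    na = sum(x * x for x in a) ** 0.5
    nb = sum(y * y for y in b) ** 0.5
    return dot / (na * nb)


def proxy_prototype(true_proto, candidates, k, alpha):
    """Mix the true prototype with the mean of its k most similar candidates.

    alpha=1 returns the (normalized) true prototype; smaller alpha hides it more.
    """
    ranked = sorted(candidates, key=lambda c: cosine(true_proto, c), reverse=True)
    neighbors = ranked[:k]
    dim = len(true_proto)
    mean_nb = [sum(n[d] for n in neighbors) / k for d in range(dim)]
    mixed = [alpha * t + (1.0 - alpha) * m for t, m in zip(true_proto, mean_nb)]
    norm = sum(v * v for v in mixed) ** 0.5
    return [v / norm for v in mixed]


true_proto = [1.0, 0.0]
candidates = [[0.8, 0.6], [0.6, 0.8], [-1.0, 0.0]]  # hypothetical other prototypes
proxy = proxy_prototype(true_proto, candidates, k=2, alpha=0.5)
```

The proxy stays close to the true prototype, so it remains useful for learning discriminative embeddings, yet it is not identical to it, which is the property the method relies on when sharing prototypes.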

Feature Diversification and Adaptation for Federated Domain Generalization

Jul 11, 2024

Abstract:Federated learning, a distributed learning paradigm, utilizes multiple clients to build a robust global model. In real-world applications, local clients often operate within their limited domains, leading to a "domain shift" across clients. Privacy concerns limit each client's learning to its own domain data, which increases the risk of overfitting. Moreover, aggregating models that were each trained on their own limited domain can lead to a significant degradation in the global model performance. To deal with these challenges, we introduce the concept of federated feature diversification. Each client diversifies its own limited domain data by leveraging global feature statistics, i.e., the aggregated average statistics over all participating clients, shared through the global model's parameters. This data diversification helps local models to learn client-invariant representations while preserving privacy. Our resultant global model shows robust performance on unseen test domain data. To enhance performance further, we develop an instance-adaptive inference approach tailored for test domain data. Our proposed instance feature adapter dynamically adjusts feature statistics to align with the test input, thereby reducing the domain gap between the test and training domains. We show that our method achieves state-of-the-art performance on several domain generalization benchmarks within a federated learning setting.
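One common way to "diversify" features with external statistics is to re-normalize them so that their mean and standard deviation move toward the shared values. The sketch below shows that idea on a 1-D feature list. It is an assumption about the general mechanism, not the paper's exact procedure: the `mix` parameter, the linear interpolation of statistics, and the function name are all hypothetical.

```python
from statistics import fmean, pstdev


def diversify(features, global_mean, global_std, mix, eps=1e-6):
    """Shift the mean/std of `features` toward the global statistics.

    mix=0 keeps the local statistics; mix=1 adopts the global ones.
    """
    mu = fmean(features)
    sigma = pstdev(features) + eps  # eps avoids division by zero
    new_mu = (1.0 - mix) * mu + mix * global_mean
    new_sigma = (1.0 - mix) * sigma + mix * global_std
    return [new_sigma * (f - mu) / sigma + new_mu for f in features]


local_features = [1.0, 2.0, 3.0]
out = diversify(local_features, global_mean=10.0, global_std=2.0, mix=1.0)
```

Only aggregate statistics are exchanged in this picture, never raw samples, which is consistent with the privacy constraint the abstract describes.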

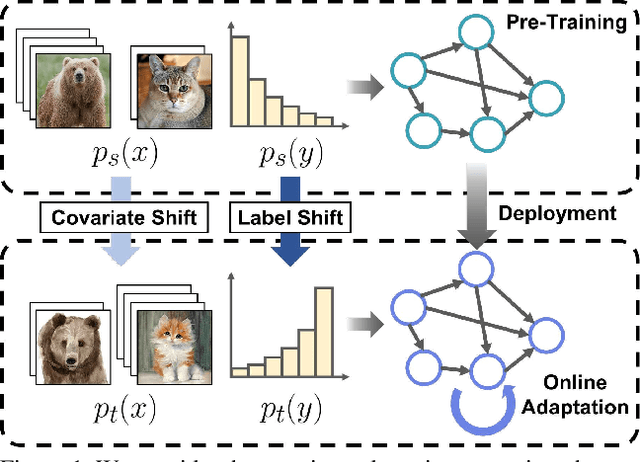

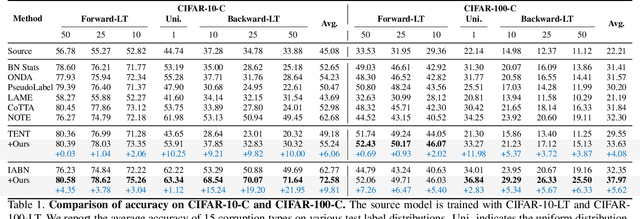

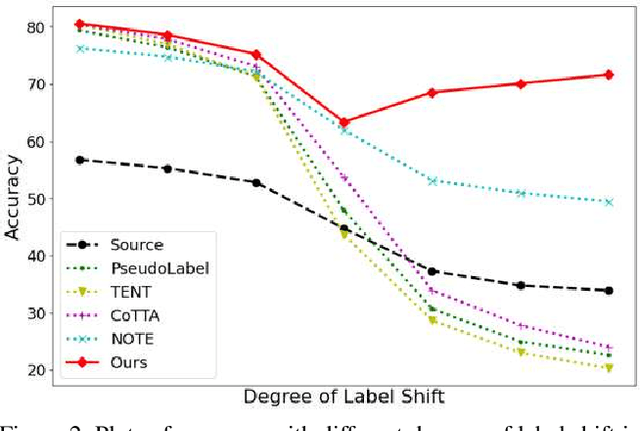

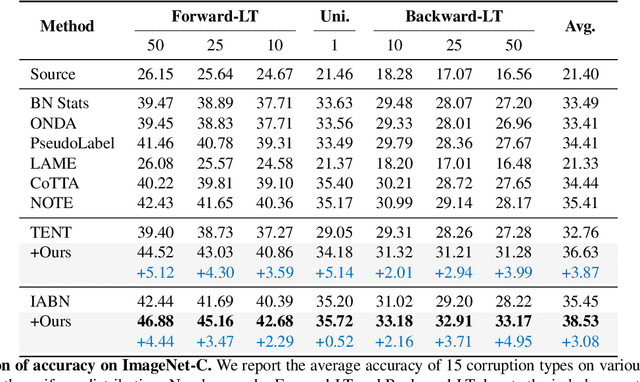

Label Shift Adapter for Test-Time Adaptation under Covariate and Label Shifts

Aug 17, 2023

Abstract:Test-time adaptation (TTA) aims to adapt a pre-trained model to the target domain in a batch-by-batch manner during inference. While label distributions often exhibit imbalances in real-world scenarios, most previous TTA approaches assume that both source and target domain datasets have balanced label distributions. Because certain classes appear more frequently in certain domains (e.g., buildings in cities, trees in forests), it is natural that the label distribution shifts as the domain changes. However, we discover that the majority of existing TTA methods fail to address the coexistence of covariate and label shifts. To tackle this challenge, we propose a novel label shift adapter that can be incorporated into existing TTA approaches to deal with label shifts during the TTA process effectively. Specifically, we estimate the label distribution of the target domain to feed it into the label shift adapter. Subsequently, the label shift adapter produces optimal parameters for the target label distribution. By predicting only the parameters for a part of the pre-trained source model, our approach is computationally efficient and can be easily applied, regardless of the model architecture. Through extensive experiments, we demonstrate that integrating our strategy with TTA approaches leads to substantial performance improvements under the joint presence of label and covariate shifts.
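The abstract does not say how the target label distribution is estimated. One simple possibility, shown below purely as an assumption, is an exponential moving average of the model's batch-mean predicted probabilities; the function name and `momentum` value are hypothetical, and the adapter that maps this estimate to model parameters is not sketched.

```python
def update_label_estimate(estimate, batch_probs, momentum=0.9):
    """Exponential moving average of batch-mean predicted class probabilities."""
    num_classes = len(estimate)
    batch_mean = [
        sum(p[c] for p in batch_probs) / len(batch_probs)
        for c in range(num_classes)
    ]
    return [momentum * e + (1.0 - momentum) * b for e, b in zip(estimate, batch_mean)]


# Start from a uniform prior over two classes.
estimate = [0.5, 0.5]
# Hypothetical stream of 50 test batches in which class 0 dominates.
batch = [[0.9, 0.1], [0.8, 0.2]]
for _ in range(50):
    estimate = update_label_estimate(estimate, batch)
```

After enough batches the estimate drifts from the uniform prior toward the imbalance seen in the predictions, which is the kind of signal an adapter conditioned on label distribution would consume.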

Progressive Random Convolutions for Single Domain Generalization

Apr 02, 2023

Abstract:Single domain generalization aims to train a generalizable model with only one source domain to perform well on arbitrary unseen target domains. Image augmentation based on Random Convolutions (RandConv), consisting of one convolution layer randomly initialized for each mini-batch, enables the model to learn generalizable visual representations by distorting local textures despite its simple and lightweight structure. However, RandConv has structural limitations in that the generated image easily loses semantics as the kernel size increases, and lacks the inherent diversity of a single convolution operation. To solve the problem, we propose a Progressive Random Convolution (Pro-RandConv) method that recursively stacks random convolution layers with a small kernel size instead of increasing the kernel size. This progressive approach can not only mitigate semantic distortions by reducing the influence of pixels away from the center in the theoretical receptive field, but also create more effective virtual domains by gradually increasing the style diversity. In addition, we develop a basic random convolution layer into a random convolution block including deformable offsets and affine transformation to support texture and contrast diversification, both of which are also randomly initialized. Without complex generators or adversarial learning, we demonstrate that our simple yet effective augmentation strategy outperforms state-of-the-art methods on single domain generalization benchmarks.
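The core idea of stacking small random convolutions can be sketched on a single-channel image as follows. This is a simplified illustration, not the Pro-RandConv block itself: deformable offsets and the affine transformation are omitted, and reusing one sampled kernel at every layer is an assumption made here. Stacking n stride-1 3x3 layers yields a (2n+1)x(2n+1) theoretical receptive field, which the impulse example makes visible.

```python
import random


def random_kernel(rng, size=3):
    """Sample a size x size kernel with Gaussian weights."""
    return [[rng.gauss(0.0, 1.0 / size) for _ in range(size)] for _ in range(size)]


def conv2d_same(image, kernel):
    """Single-channel 2-D cross-correlation with zero padding; output keeps the input size."""
    h, w = len(image), len(image[0])
    k = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            acc = 0.0
            for dy in range(-k, k + 1):
                for dx in range(-k, k + 1):
                    yy, xx = y + dy, x + dx
                    if 0 <= yy < h and 0 <= xx < w:
                        acc += kernel[dy + k][dx + k] * image[yy][xx]
            out[y][x] = acc
    return out


def progressive_randconv(image, num_layers, rng):
    """Apply one randomly sampled 3x3 kernel num_layers times in sequence."""
    kernel = random_kernel(rng)  # assumption: the same kernel is reused per layer
    for _ in range(num_layers):
        image = conv2d_same(image, kernel)
    return image


# A 9x9 impulse image: a single bright pixel at the center.
impulse = [[0.0] * 9 for _ in range(9)]
impulse[4][4] = 1.0
augmented = progressive_randconv(impulse, num_layers=2, rng=random.Random(0))
```

With two layers, every pixel more than two steps from the center stays exactly zero, so the effective footprint grows gradually with depth instead of jumping with a single large kernel.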

Improving Test-Time Adaptation via Shift-agnostic Weight Regularization and Nearest Source Prototypes

Jul 24, 2022

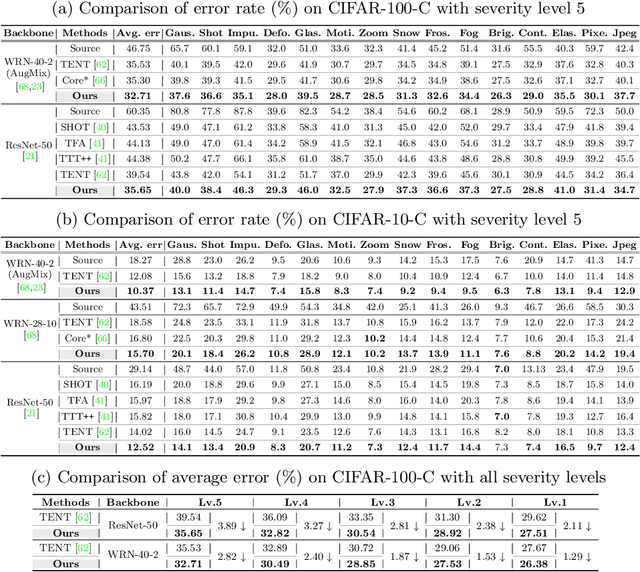

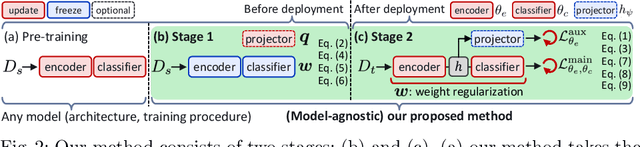

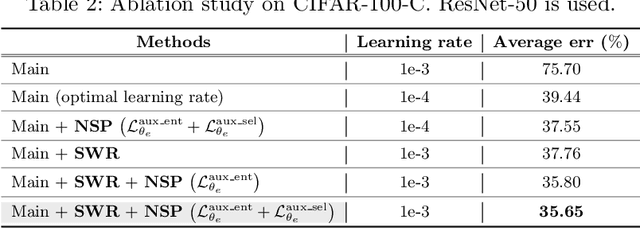

Abstract:This paper proposes a novel test-time adaptation strategy that adjusts the model pre-trained on the source domain using only unlabeled online data from the target domain to alleviate the performance degradation due to the distribution shift between the source and target domains. Adapting the entire model parameters using the unlabeled online data may be detrimental due to the erroneous signals from an unsupervised objective. To mitigate this problem, we propose a shift-agnostic weight regularization that encourages largely updating the model parameters sensitive to distribution shift while slightly updating those insensitive to the shift, during test-time adaptation. This regularization enables the model to quickly adapt to the target domain without performance degradation by utilizing the benefit of a high learning rate. In addition, we present an auxiliary task based on nearest source prototypes to align the source and target features, which helps reduce the distribution shift and leads to further performance improvement. We show that our method exhibits state-of-the-art performance on various standard benchmarks and even outperforms its supervised counterpart.
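The weight regularization described in this abstract can be read as a per-parameter penalty on drifting away from the source weights: a small penalty lets shift-sensitive parameters move freely, and a large one holds shift-insensitive parameters nearly fixed. The quadratic form below is an assumed illustration of that reading. How the penalties are derived is not stated in the abstract, so `penalties` is simply given here.

```python
def regularized_loss(task_loss, params, source_params, penalties):
    """Task loss plus a per-parameter quadratic penalty on deviation from source weights."""
    reg = sum(
        p * (w - w0) ** 2
        for p, w, w0 in zip(penalties, params, source_params)
    )
    return task_loss + reg


source_params = [1.0, 1.0]
params = [1.5, 1.5]      # both parameters moved by 0.5 during adaptation
penalties = [0.0, 10.0]  # hypothetical: first is shift-sensitive, second is not

loss = regularized_loss(0.2, params, source_params, penalties)
```

Both parameters moved by the same amount, but only the second contributes to the penalty, so gradient steps under this loss would pull it back toward its source value while leaving the first free to adapt.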

Domain Agnostic Few-shot Learning for Speaker Verification

Jun 28, 2022

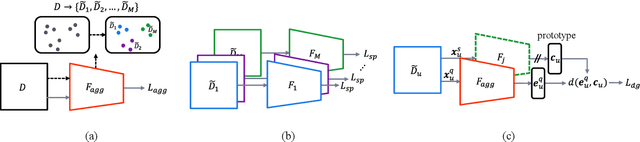

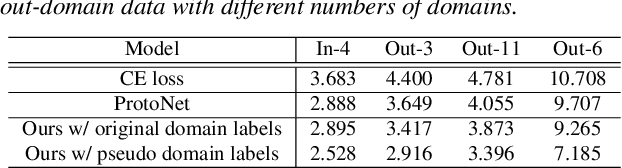

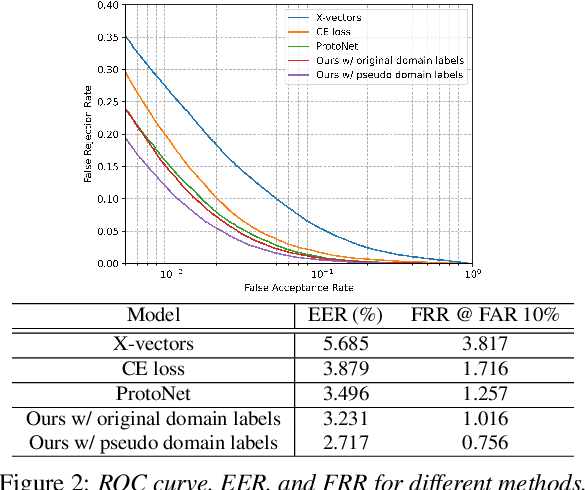

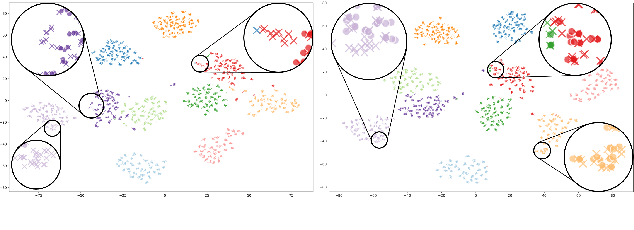

Abstract:Deep learning models for verification systems often fail to generalize to new users and new environments, even though they learn highly discriminative features. To address this problem, we propose a few-shot domain generalization framework that learns to tackle distribution shift for new users and new domains. Our framework consists of domain-specific and domain-aggregation networks, which are the experts on specific and combined domains, respectively. Using these networks, we generate episodes that mimic the presence of both novel users and novel domains in the training phase to eventually produce better generalization. To save memory, we reduce the number of domain-specific networks by clustering similar domains together. Through extensive evaluation on artificially generated noise domains, we explicitly show the generalization ability of our framework. In addition, we apply our proposed methods to an existing competitive architecture on the standard benchmark, which shows further performance improvements.

Distribution Estimation to Automate Transformation Policies for Self-Supervision

Nov 24, 2021

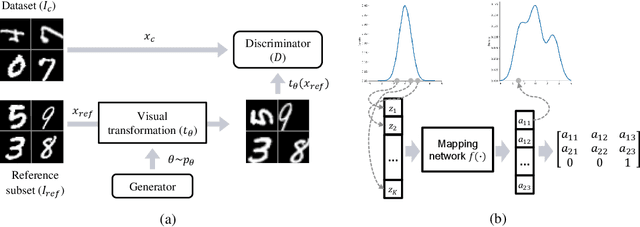

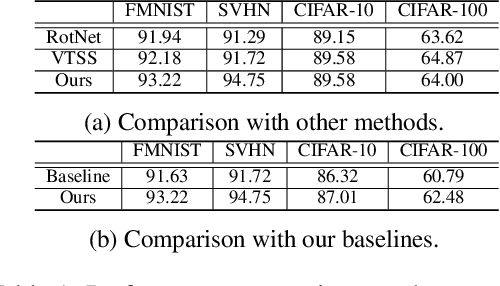

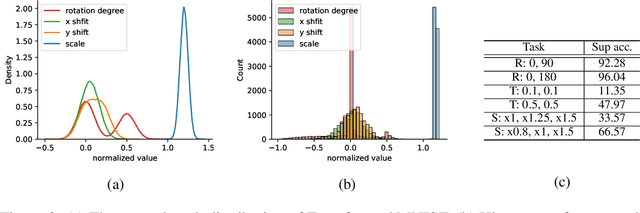

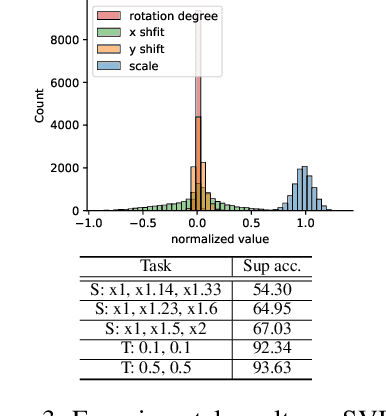

Abstract:In recent visual self-supervision works, an imitated classification objective, called a pretext task, is established by assigning labels to transformed or augmented input images. The goal of the pretext task can be to predict which transformations were applied to the image. However, it has been observed that image transformations already present in the dataset might be less effective in learning such self-supervised representations. Building on this observation, we propose a framework based on a generative adversarial network to automatically find the transformations which are not present in the input dataset and are thus effective for self-supervised learning. This automated policy makes it possible to estimate the transformation distribution of a dataset and also to construct its complementary distribution, from which training pairs are sampled for the pretext task. We evaluated our framework using several visual recognition datasets to show the efficacy of our automated transformation policy.
