Shruti Joshi

From Isolation to Entanglement: When Do Interpretability Methods Identify and Disentangle Known Concepts?

Dec 17, 2025

Abstract: A central goal of interpretability is to recover representations of causally relevant concepts from the activations of neural networks. The quality of these concept representations is typically evaluated in isolation, and under implicit independence assumptions that may not hold in practice. Thus, it is unclear whether common featurization methods - including sparse autoencoders (SAEs) and sparse probes - recover disentangled representations of these concepts. This study proposes a multi-concept evaluation setting where we control the correlations between textual concepts, such as sentiment, domain, and tense, and analyze performance under increasing correlations between them. We first evaluate the extent to which featurizers can learn disentangled representations of each concept under increasing correlational strengths. We observe a one-to-many relationship from concepts to features: features correspond to no more than one concept, but concepts are distributed across many features. Then, we perform steering experiments, measuring whether each concept is independently manipulable. Even when trained on uniform distributions of concepts, SAE features generally affect many concepts when steered, indicating that they are neither selective nor independent; nonetheless, features affect disjoint subspaces. These results suggest that correlational metrics for measuring disentanglement are generally not sufficient for establishing independence when steering, and that affecting disjoint subspaces is not sufficient for concept selectivity. These results underscore the importance of compositional evaluations in interpretability research.
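The steering measurement described in this abstract can be illustrated with a toy linear model. This is only a sketch under stated assumptions, not the paper's procedure: the probe weights, the feature direction, the helper names (`steer`, `steering_effects`), and the use of probe-logit shifts as the effect measure are all hypothetical.

```python
def dot(u, v):
    """Inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def steer(activation, direction, alpha):
    """Add a scaled feature direction to an activation vector."""
    return [h + alpha * d for h, d in zip(activation, direction)]


def steering_effects(activation, direction, probes, alpha=1.0):
    """Shift in each (hypothetical) linear concept probe's logit after steering."""
    steered = steer(activation, direction, alpha)
    return {name: dot(w, steered) - dot(w, activation)
            for name, w in probes.items()}


# Hypothetical probes for two concepts, and one feature direction that
# overlaps with both of them.
probes = {"sentiment": [1.0, 0.0, 0.0], "tense": [0.0, 1.0, 0.0]}
feature_direction = [1.0, 0.5, 0.0]
effects = steering_effects([0.2, -0.1, 0.3], feature_direction, probes, alpha=2.0)
print(effects)
```

In this toy setup a feature counts as selective only if steering along it shifts one probe and leaves the others near zero; here both probes move, which is the kind of non-selectivity the abstract reports for SAE features.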

Contextual fusion enhances robustness to image blurring

Jun 07, 2024

Abstract: Mammalian brains handle complex reasoning by integrating information across brain regions specialized for particular sensory modalities. This enables improved robustness and generalization versus deep neural networks, which typically process one modality and are vulnerable to perturbations. While defense methods exist, they do not generalize well across perturbations. We developed a fusion model combining background and foreground features from CNNs trained on ImageNet and Places365. We tested its robustness to human-perceivable perturbations on MS COCO. The fusion model improved robustness, especially for classes with greater context variability. Our proposed solution for integrating multiple modalities provides a new approach to enhance robustness and may be complementary to existing methods.

Dynamic Inference with Neural Interpreters

Oct 12, 2021

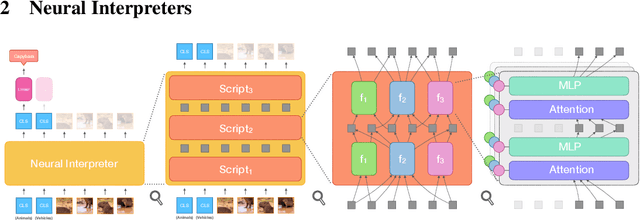

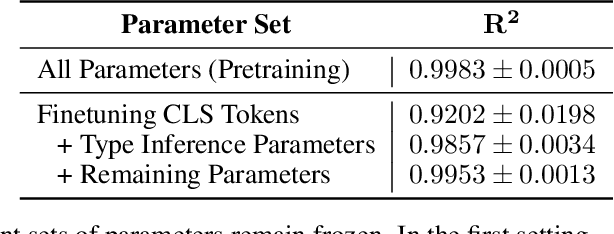

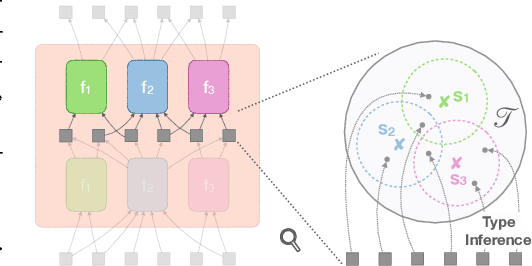

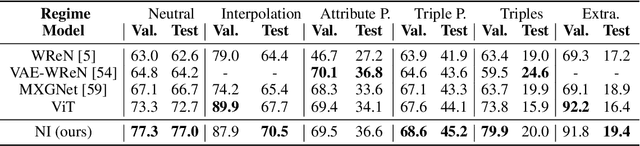

Abstract: Modern neural network architectures can leverage large amounts of data to generalize well within the training distribution. However, they are less capable of systematic generalization to data drawn from unseen but related distributions, a feat that is hypothesized to require compositional reasoning and reuse of knowledge. In this work, we present Neural Interpreters, an architecture that factorizes inference in a self-attention network as a system of modules, which we call *functions*. Inputs to the model are routed through a sequence of functions in a way that is end-to-end learned. The proposed architecture can flexibly compose computation along width and depth, and lends itself well to capacity extension after training. To demonstrate the versatility of Neural Interpreters, we evaluate it in two distinct settings: image classification and visual abstract reasoning on Raven Progressive Matrices. In the former, we show that Neural Interpreters perform on par with the vision transformer using fewer parameters, while being transferable to a new task in a sample-efficient manner. In the latter, we find that Neural Interpreters are competitive with respect to the state-of-the-art in terms of systematic generalization.

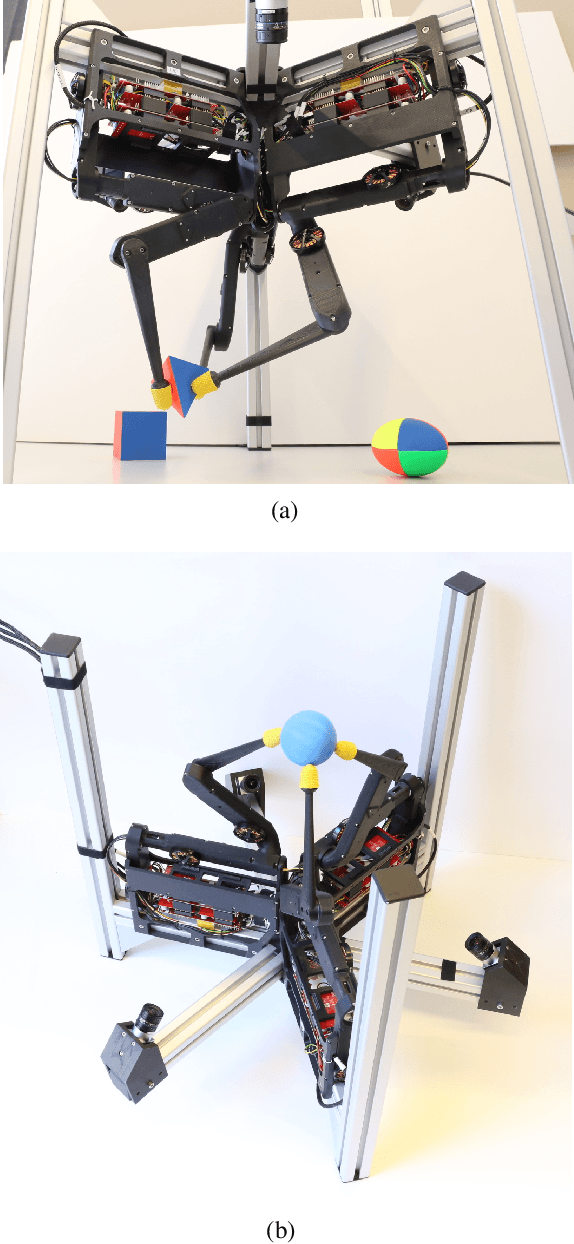

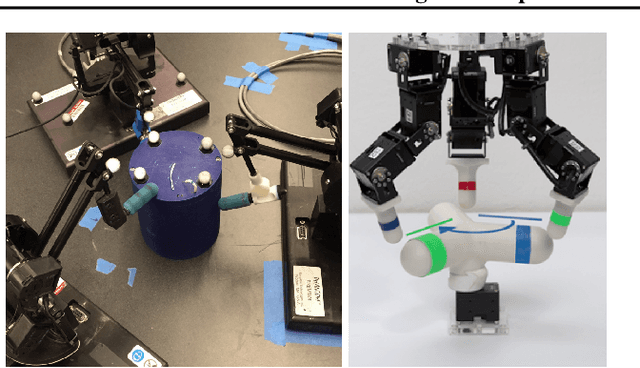

A Robot Cluster for Reproducible Research in Dexterous Manipulation

Sep 22, 2021

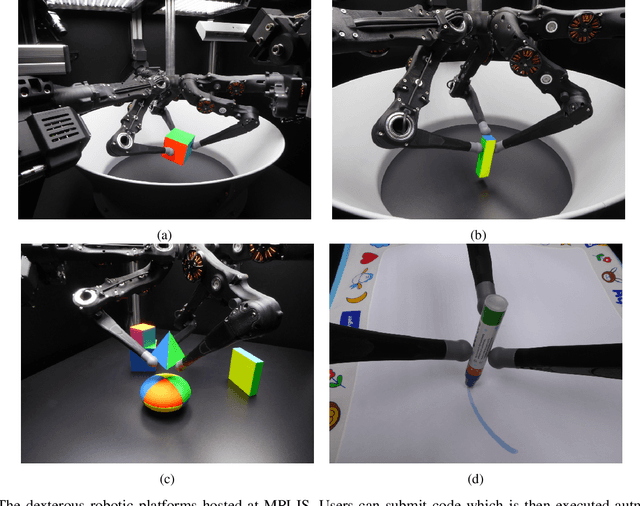

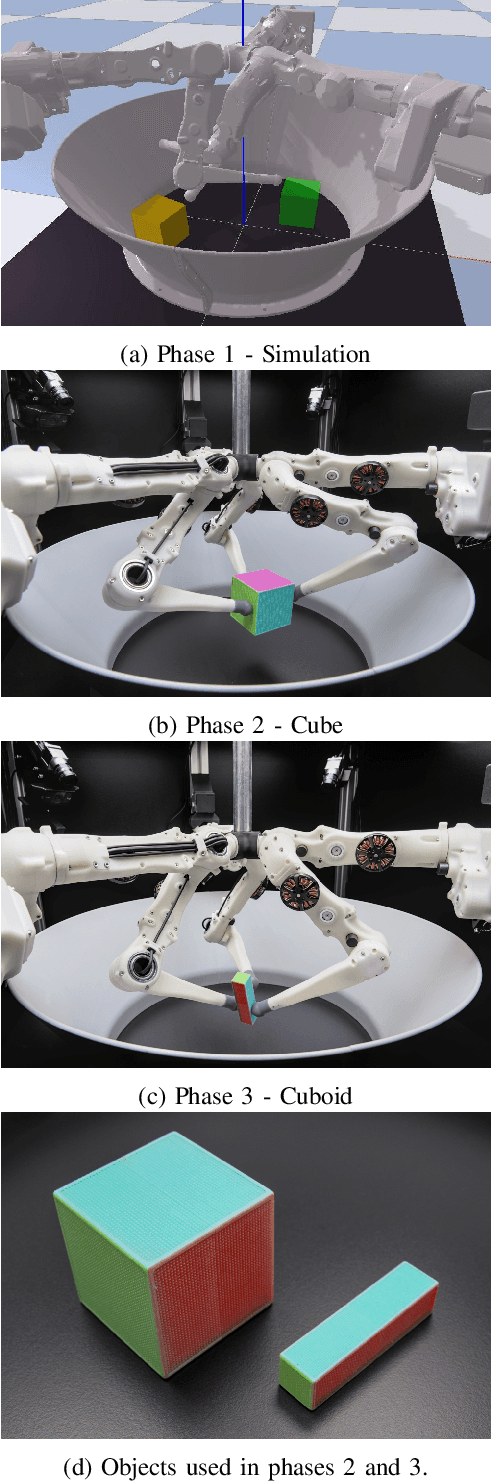

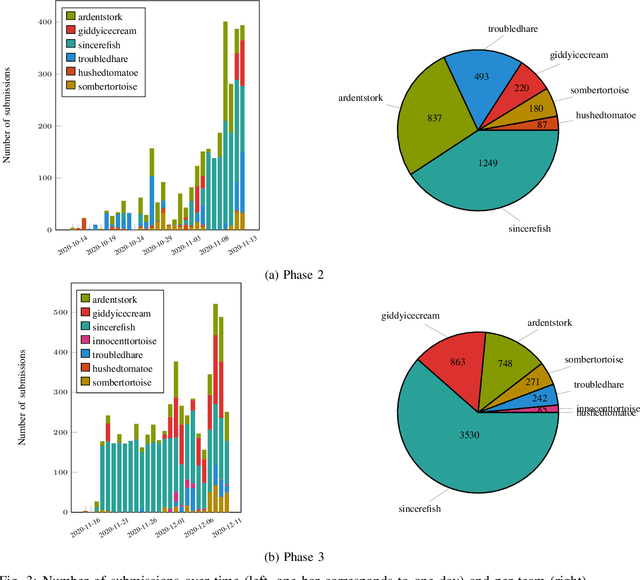

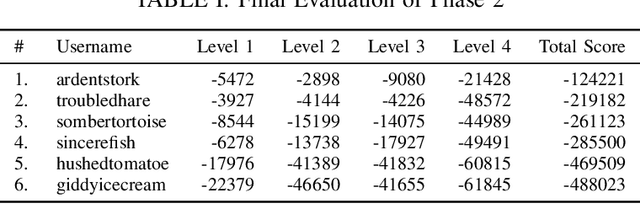

Abstract: Dexterous manipulation remains an open problem in robotics. To coordinate efforts of the research community towards tackling this problem, we propose a shared benchmark. We designed and built robotic platforms that are hosted at the MPI-IS and can be accessed remotely. Each platform consists of three robotic fingers that are capable of dexterous object manipulation. Users are able to control the platforms remotely by submitting code that is executed automatically, akin to a computational cluster. Using this setup, i) we host robotics competitions, where teams from anywhere in the world access our platforms to tackle challenging tasks, ii) we publish the datasets collected during these competitions (consisting of hundreds of robot hours), and iii) we give researchers access to these platforms for their own projects.

Function Contrastive Learning of Transferable Representations

Oct 14, 2020

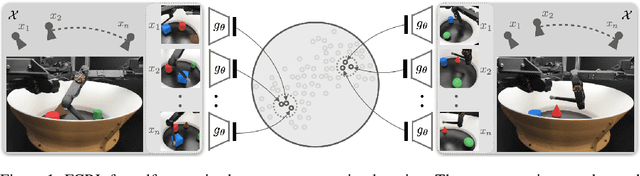

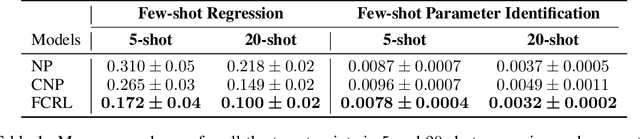

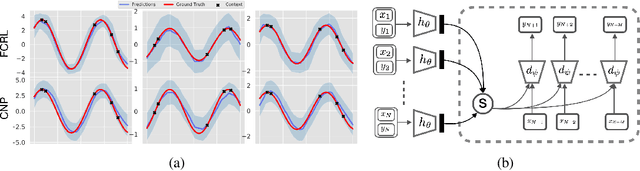

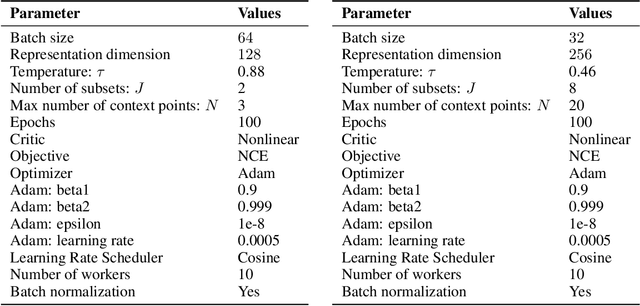

Abstract: Few-shot learning seeks to find models that are capable of fast adaptation to novel tasks. Unlike typical few-shot learning algorithms, we propose a contrastive learning method which is not trained to solve a set of tasks, but rather attempts to find a good representation of the underlying data-generating processes (*functions*). This allows for finding representations which are useful for an entire series of tasks sharing the same function. In particular, our training scheme is driven by the self-supervision signal indicating whether two sets of samples stem from the same underlying function. Our experiments on a number of synthetic and real-world datasets show that the representations we obtain can outperform strong baselines in terms of downstream performance and noise robustness, even when these baselines are trained in an end-to-end manner.
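The self-supervision signal described above (do two sample sets come from the same function?) can be sketched as a contrastive objective over set embeddings. The example below is a minimal illustration under assumptions, not the paper's implementation: the toy linear functions, the hand-written `encode_set` (standing in for a learned encoder), and the choice of an InfoNCE-style loss are all hypothetical.

```python
import math
import random


def sample_set(slope, n, rng, noise=0.1):
    """Draw n noisy (x, y) points from the toy function y = slope * x."""
    xs = [rng.uniform(-1.0, 1.0) for _ in range(n)]
    return [(x, slope * x + rng.gauss(0.0, noise)) for x in xs]


def encode_set(samples):
    """Hand-written stand-in for a learned set encoder: mean-pool the
    per-point features (x*x, x*y), then L2-normalise the pooled vector."""
    n = len(samples)
    pooled = [sum(x * x for x, _ in samples) / n,
              sum(x * y for x, y in samples) / n]
    norm = math.sqrt(sum(v * v for v in pooled)) or 1.0
    return [v / norm for v in pooled]


def info_nce(anchors, positives, temperature=0.1):
    """Average InfoNCE-style loss: positives[i] is the positive for
    anchors[i]; every other entry of positives acts as a negative."""
    total = 0.0
    for i, a in enumerate(anchors):
        logits = [sum(u * v for u, v in zip(a, p)) / temperature
                  for p in positives]
        peak = max(logits)  # subtract the max for numerical stability
        log_denominator = peak + math.log(sum(math.exp(l - peak) for l in logits))
        total += log_denominator - logits[i]
    return total / len(anchors)


rng = random.Random(0)
slopes = [-2.0, 0.0, 2.0]  # three toy "functions"
views_a = [encode_set(sample_set(s, 200, rng)) for s in slopes]
views_b = [encode_set(sample_set(s, 200, rng)) for s in slopes]

matched = info_nce(views_a, views_b)                      # same-function pairs
mismatched = info_nce(views_a, views_b[1:] + views_b[:1])  # shifted pairing
print(matched < mismatched)
```

Because each embedding is pooled over a whole sample set, the loss rewards representations that identify the generating function rather than any individual data point, which is the property the abstract attributes to the method.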

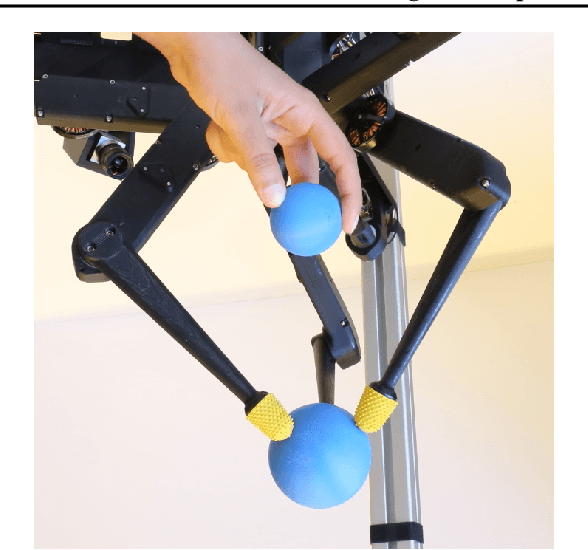

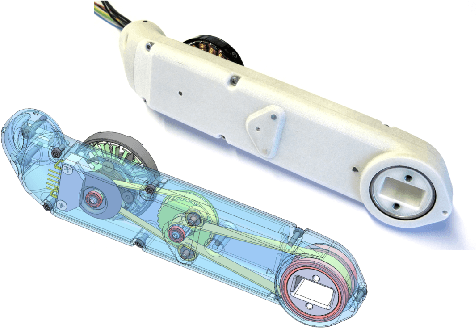

TriFinger: An Open-Source Robot for Learning Dexterity

Aug 08, 2020

Abstract: Dexterous object manipulation remains an open problem in robotics, despite the rapid progress in machine learning during the past decade. We argue that a hindrance is the high cost of experimentation on real systems, in terms of both time and money. We address this problem by proposing an open-source robotic platform which can safely operate without human supervision. The hardware is inexpensive (about $5000) yet highly dynamic, robust, and capable of complex interaction with external objects. The software operates at 1 kHz and performs safety checks to prevent the hardware from breaking. The easy-to-use front-end (in C++ and Python) is suitable for real-time control as well as deep reinforcement learning. In addition, the software framework is largely robot-agnostic and can hence be used independently of the hardware proposed herein. Finally, we illustrate the potential of the proposed platform through a number of experiments, including real-time optimal control, deep reinforcement learning from scratch, throwing, and writing.

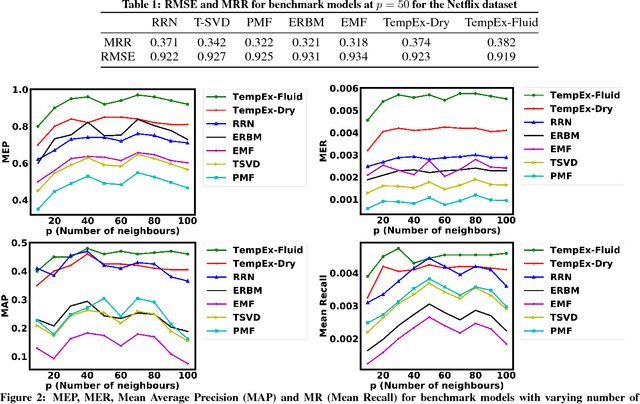

Explanations for Temporal Recommendations

Jul 17, 2018

Abstract: Recommendation systems are an integral part of Artificial Intelligence (AI) and have become increasingly important in the growing age of commercialization in AI. Deep learning (DL) techniques for recommendation systems (RS) provide powerful latent-feature models for effective recommendation but suffer from the major drawback of being non-interpretable. In this paper, we describe a framework for explainable temporal recommendations in a DL model. We consider an LSTM-based Recurrent Neural Network (RNN) architecture for recommendation and a neighbourhood-based scheme for generating explanations in the model. We demonstrate the effectiveness of our approach through experiments on the Netflix dataset by jointly optimizing for both prediction accuracy and explainability.

* Accepted at the XAI Workshop in IJCAI/ECAI 2018
