Maxim Bazhenov

Contextual fusion enhances robustness to image blurring

Jun 07, 2024

Abstract: Mammalian brains handle complex reasoning by integrating information across brain regions specialized for particular sensory modalities. This enables improved robustness and generalization versus deep neural networks, which typically process one modality and are vulnerable to perturbations. While defense methods exist, they do not generalize well across perturbations. We developed a fusion model combining background and foreground features from CNNs trained on ImageNet and Places365. We tested its robustness to human-perceivable perturbations on MS COCO. The fusion model improved robustness, especially for classes with greater context variability. Our proposed solution for integrating multiple modalities provides a new approach to enhance robustness and may be complementary to existing methods.
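The abstract does not say how the foreground and background features are combined, so the following is only a minimal sketch under stated assumptions: each stream is L2-normalised and the two are concatenated before a linear read-out. The function names, feature sizes, and random stand-in features are hypothetical and are not taken from the paper.

```python
import numpy as np

def l2_normalize(x, eps=1e-8):
    # Scale each feature vector to (approximately) unit length.
    return x / (np.linalg.norm(x, axis=-1, keepdims=True) + eps)

def fuse_features(foreground, background):
    # Assumed fusion rule: normalise each stream, then concatenate.
    return np.concatenate(
        [l2_normalize(foreground), l2_normalize(background)], axis=-1
    )

def predict(fused, weights, bias):
    # Linear read-out over the fused feature vector.
    return (fused @ weights + bias).argmax(axis=-1)

# Random stand-ins for features from an object CNN and a scene CNN.
rng = np.random.default_rng(0)
fg = rng.normal(size=(4, 8))   # hypothetical foreground features
bg = rng.normal(size=(4, 6))   # hypothetical background features
fused = fuse_features(fg, bg)
labels = predict(fused, rng.normal(size=(14, 3)), np.zeros(3))
```

Normalising before concatenation keeps either stream from dominating the read-out purely through scale; other fusion rules (weighted sums, gating) would fit the same description.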

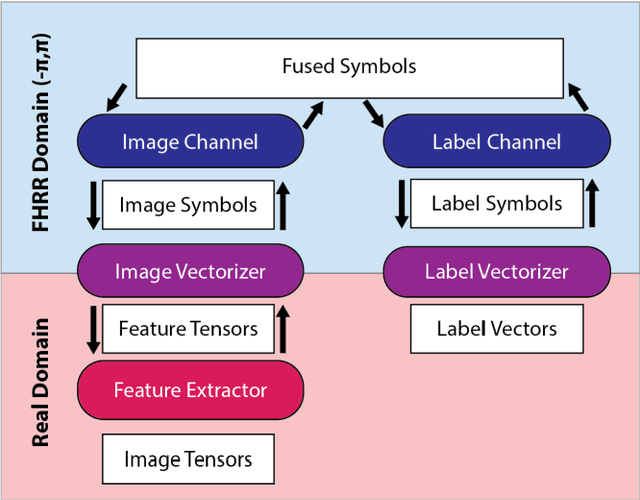

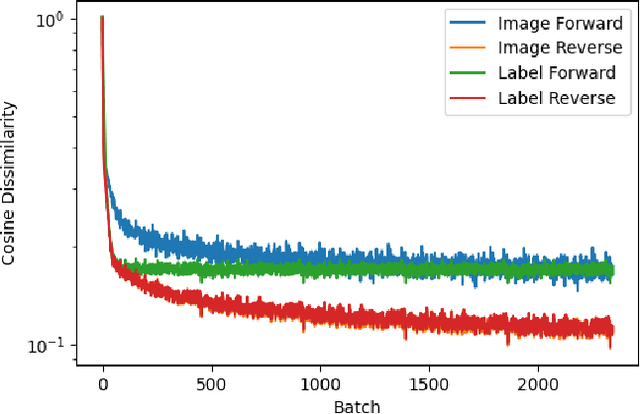

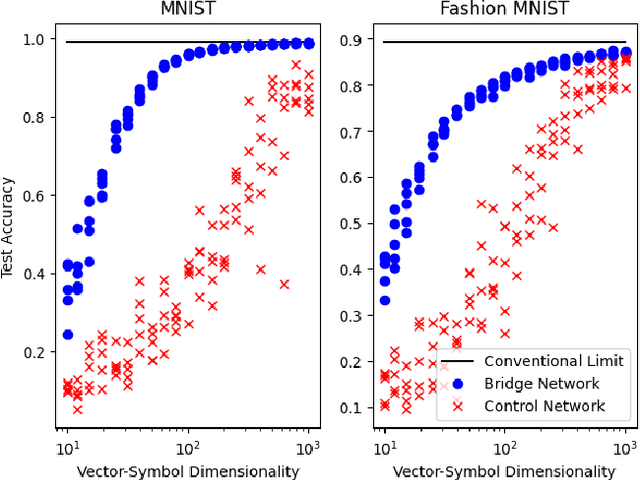

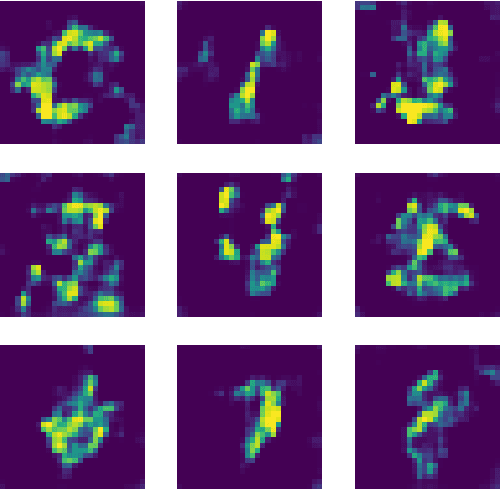

Bridge Networks

Jun 15, 2021

Abstract: Despite rapid progress, current deep learning methods face a number of critical challenges. These include high energy consumption, catastrophic forgetting, dependence on global losses, and an inability to reason symbolically. By combining concepts from information bottleneck theory and vector-symbolic architectures, we propose and implement a novel information processing architecture, the 'Bridge network.' We show this architecture provides unique advantages which can address the problem of global losses and catastrophic forgetting. Furthermore, we argue that it provides a further basis for increasing energy efficiency of execution and the ability to reason symbolically.

Deep Phasor Networks: Connecting Conventional and Spiking Neural Networks

Jun 15, 2021

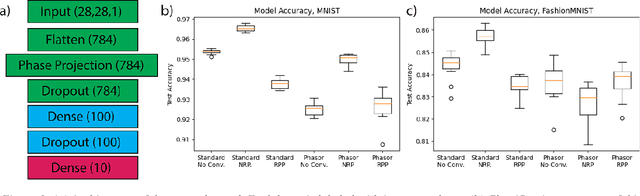

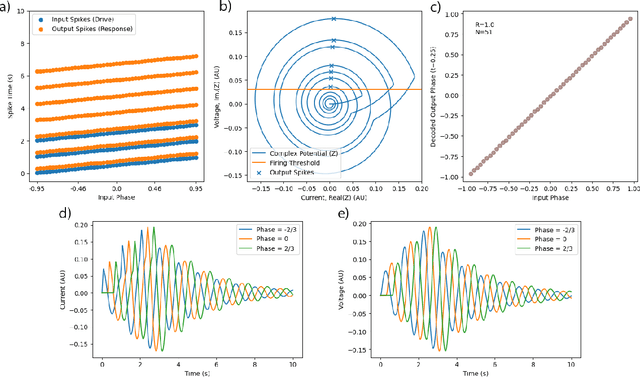

Abstract: In this work, we extend standard neural networks by building upon an assumption that neuronal activations correspond to the angle of a complex number lying on the unit circle, or 'phasor.' Each layer in such a network produces new activations by taking a weighted superposition of the previous layer's phases and calculating the new phase value. This generalized architecture allows models to reach high accuracy and carries the singular advantage that mathematically equivalent versions of the network can be executed with or without regard to a temporal variable. Importantly, the value of a phase angle in the temporal domain can be sparsely represented by a periodically repeating series of delta functions or 'spikes'. We demonstrate the atemporal training of a phasor network on standard deep learning tasks and show that these networks can then be executed in either the traditional atemporal domain or spiking temporal domain with no conversion step needed. This provides a novel basis for constructing deep networks that operate via temporal, spike-based calculations suitable for neuromorphic computing hardware.
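The layer update described in this abstract can be illustrated with a small sketch. It assumes phases are stored in radians and weights are real-valued; the function names and the linear phase-to-spike-time mapping are illustrative choices, not the paper's exact formulation.

```python
import numpy as np

def phasor_layer(phases, weights):
    # Map each input phase to a point on the unit circle.
    phasors = np.exp(1j * phases)
    # Weighted superposition of the previous layer's phasors.
    superposition = weights @ phasors
    # The new activation is the angle of the summed complex value.
    return np.angle(superposition)

def phase_to_spike_time(phases, period=1.0):
    # Assumed mapping: a phase becomes the offset of one spike
    # inside each repeating period.
    return (np.mod(phases, 2 * np.pi) / (2 * np.pi)) * period

x = np.array([0.0, np.pi / 2])          # two input phases
w = np.array([[1.0, 1.0], [1.0, 0.0]])  # two output units
out = phasor_layer(x, w)
spikes = phase_to_spike_time(out)
```

Here the first output unit sums phasors at angles 0 and π/2 with equal weight, so its phase is π/4, which this sketch maps to a spike one eighth of the way through each period.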

Replay in Deep Learning: Current Approaches and Missing Biological Elements

Apr 01, 2021

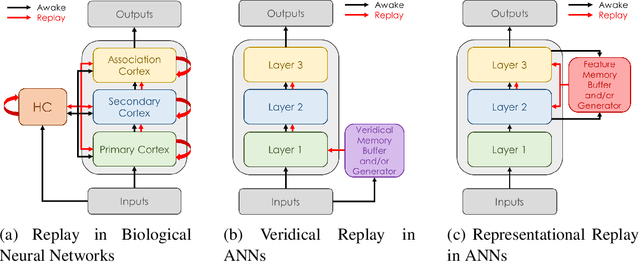

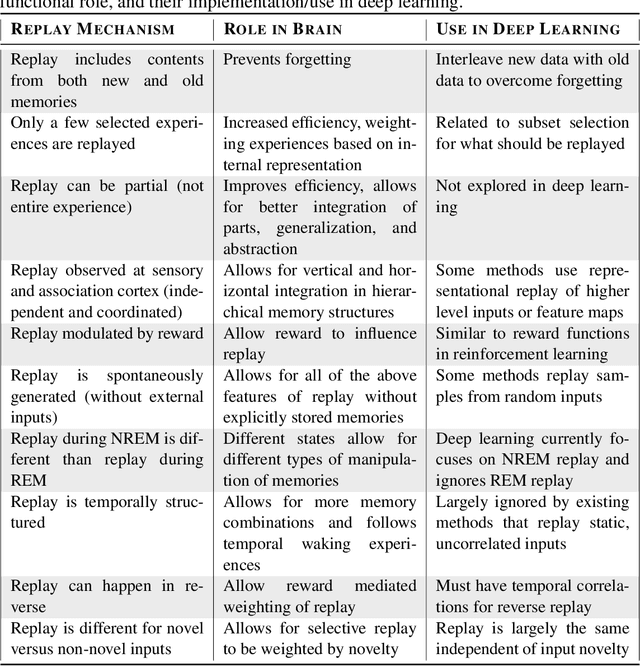

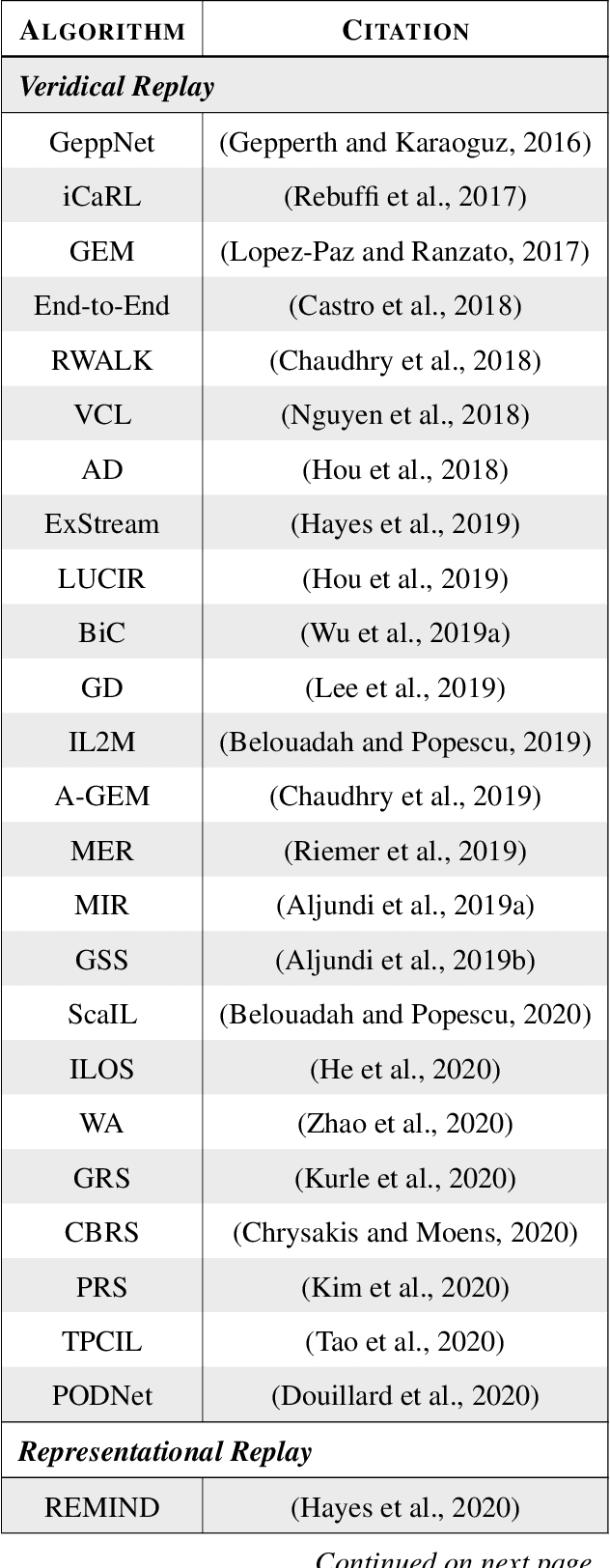

Abstract: Replay is the reactivation of one or more neural patterns, which are similar to the activation patterns experienced during past waking experiences. Replay was first observed in biological neural networks during sleep, and it is now thought to play a critical role in memory formation, retrieval, and consolidation. Replay-like mechanisms have been incorporated into deep artificial neural networks that learn over time to avoid catastrophic forgetting of previous knowledge. Replay algorithms have been successfully used in a wide range of deep learning methods within supervised, unsupervised, and reinforcement learning paradigms. In this paper, we provide the first comprehensive comparison between replay in the mammalian brain and replay in artificial neural networks. We identify multiple aspects of biological replay that are missing in deep learning systems and hypothesize how they could be utilized to improve artificial neural networks.
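As a rough illustration of the kind of replay mechanism used in artificial networks, the sketch below keeps a fixed-size memory of past examples using reservoir sampling and draws old examples to mix into new training batches. The class name and interface are hypothetical, and this is one common scheme rather than a method attributed to the paper.

```python
import random

class ReplayBuffer:
    """Fixed-size memory of past examples, filled by reservoir sampling."""

    def __init__(self, capacity, seed=0):
        self.capacity = capacity
        self.items = []
        self.seen = 0
        self.rng = random.Random(seed)

    def add(self, item):
        # Every example seen so far ends up stored with equal probability.
        self.seen += 1
        if len(self.items) < self.capacity:
            self.items.append(item)
        else:
            j = self.rng.randrange(self.seen)
            if j < self.capacity:
                self.items[j] = item

    def sample(self, k):
        # Draw up to k stored examples to interleave with new data.
        return self.rng.sample(self.items, min(k, len(self.items)))

buffer = ReplayBuffer(capacity=5)
for example in range(100):
    buffer.add(example)
replayed = buffer.sample(3)
```

During training on a new task, `replayed` would be appended to each fresh batch so that earlier data keeps contributing to the loss.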

A Dual-Memory Architecture for Reinforcement Learning on Neuromorphic Platforms

Mar 05, 2021

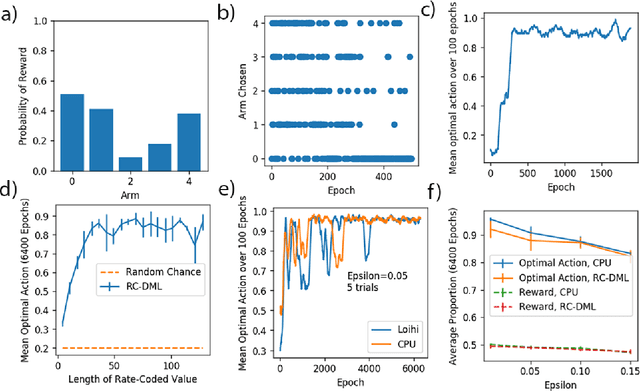

Abstract: Reinforcement learning (RL) is a foundation of learning in biological systems and provides a framework to address numerous challenges with real-world artificial intelligence applications. Efficient implementations of RL techniques could allow for agents deployed in edge-use cases to gain novel abilities, such as improved navigation, understanding complex situations and critical decision making. Towards this goal, we describe a flexible architecture to carry out reinforcement learning on neuromorphic platforms. This architecture was implemented using an Intel neuromorphic processor and demonstrated solving a variety of tasks using spiking dynamics. Our study proposes a usable, energy-efficient solution for real-world RL applications and demonstrates the applicability of neuromorphic platforms to RL problems.

Biologically inspired sleep algorithm for artificial neural networks

Aug 01, 2019

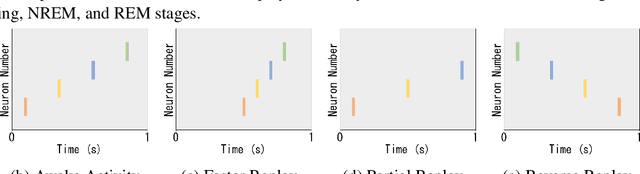

Abstract: Sleep plays an important role in incremental learning and consolidation of memories in biological systems. Motivated by the processes that are known to be involved in sleep generation in biological networks, we developed an algorithm that implements a sleep-like phase in artificial neural networks (ANNs). After an initial training phase, we convert the ANN to a spiking neural network (SNN) and simulate an offline sleep-like phase using spike-timing-dependent plasticity rules to modify synaptic weights. The SNN is then converted back to the ANN and evaluated or trained on new inputs. We demonstrate several performance improvements after applying this processing to ANNs trained on MNIST, CUB200 and a motivating toy dataset. First, in an incremental learning framework, sleep is able to recover older tasks that were otherwise forgotten in the ANN without a sleep phase due to catastrophic forgetting. Second, sleep results in forward transfer learning of unseen tasks. Finally, sleep improves the generalization ability of the ANNs to classify images with various types of noise. We provide a theoretical basis for the beneficial role of the brain-inspired sleep-like phase for the ANNs and present an algorithmic way for future implementations of the various features of sleep in deep learning ANNs. Overall, these results suggest that biological sleep can help mitigate a number of problems ANNs suffer from, such as poor generalization and catastrophic forgetting for incremental learning.
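The sleep-like phase described in this abstract can be sketched for a single weight matrix. This is a heavily simplified illustration under assumptions: the ANN-to-SNN conversion is reduced to reusing the trained weights, input spikes are drawn at random with per-input probabilities, and the plasticity rule is a basic Hebbian-style stand-in for spike-timing-dependent plasticity. All names and parameter values are hypothetical.

```python
import numpy as np

def sleep_phase(weights, input_rates, steps=100, threshold=1.0,
                inc=0.01, dec=0.001, seed=0):
    rng = np.random.default_rng(seed)
    w = weights.copy()              # leave the trained ANN weights untouched
    v = np.zeros(w.shape[0])        # membrane potential per output neuron
    for _ in range(steps):
        # Random binary input spikes with the given per-input probability.
        pre = (rng.random(w.shape[1]) < input_rates).astype(float)
        v += w @ pre                # integrate the weighted input spikes
        post = v >= threshold       # neurons that fire on this step
        v[post] = 0.0               # reset the neurons that fired
        # For firing neurons: strengthen synapses from active inputs,
        # weaken synapses from silent inputs.
        w[post] += inc * pre - dec * (1.0 - pre)
    return w                        # weights to copy back into the ANN

trained = np.ones((2, 3))
after_sleep = sleep_phase(trained, input_rates=np.ones(3), steps=10)
```

With every input spiking on every step, both output neurons fire each step and each synapse grows by `inc` per step; with silent inputs, no neuron fires and the weights are returned unchanged.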
