Giri P. Krishnan

Quantifying the Role of OpenFold Components in Protein Structure Prediction

Nov 06, 2025

Abstract: Models such as AlphaFold2 and OpenFold have transformed protein structure prediction, yet their inner workings remain poorly understood. We present a methodology to systematically evaluate the contribution of individual OpenFold components to structure prediction accuracy. We identify several components that are critical for most proteins, while others vary in importance across proteins. We further show that the contribution of several components is correlated with protein length. These findings provide insight into how OpenFold achieves accurate predictions and highlight directions for interpreting protein prediction networks more broadly.

Unsupervised Replay Strategies for Continual Learning with Limited Data

Oct 21, 2024

Abstract: Artificial neural networks (ANNs) show limited performance with scarce or imbalanced training data and face challenges with continual learning, such as forgetting previously learned data after training on new tasks. In contrast, the human brain can learn continuously and from just a few examples. This research explores the impact of 'sleep', an unsupervised phase incorporating stochastic activation with local Hebbian learning rules, on ANNs trained incrementally with limited and imbalanced datasets, specifically MNIST and Fashion MNIST. We discovered that introducing a sleep phase significantly enhanced accuracy in models trained with limited data. When a few tasks were trained sequentially, sleep replay not only rescued previously learned information that had been catastrophically forgotten following new task training but often enhanced performance in prior tasks, especially those trained with limited data. This study highlights the multifaceted role of sleep replay in augmenting learning efficiency and facilitating continual learning in ANNs.

Replay in Deep Learning: Current Approaches and Missing Biological Elements

Apr 01, 2021

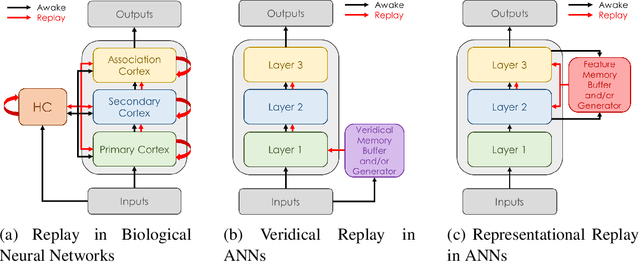

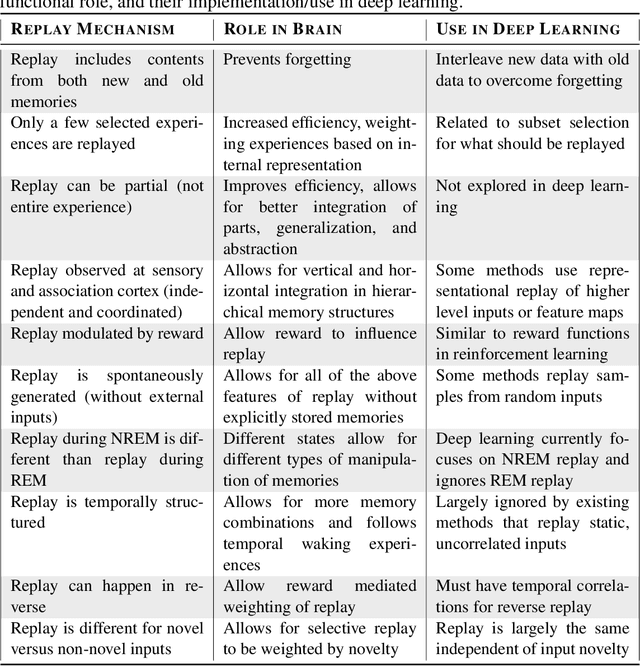

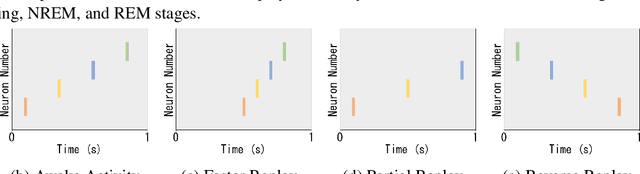

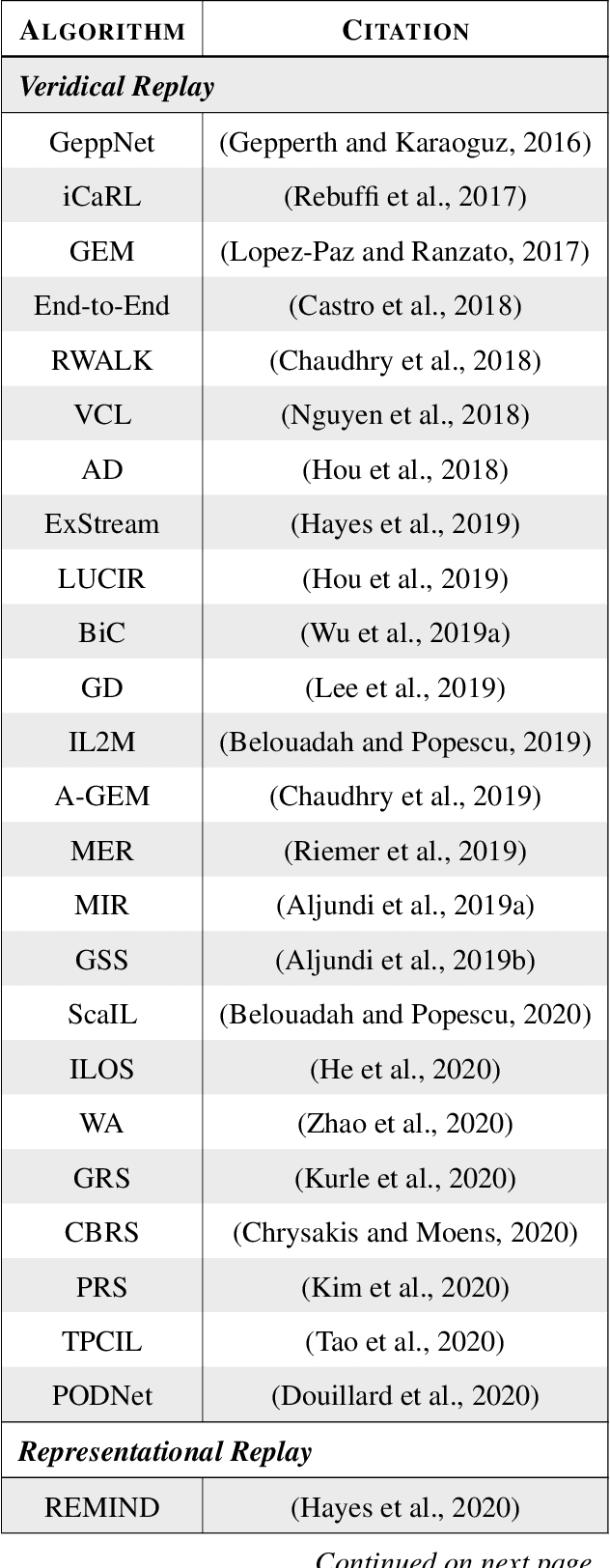

Abstract: Replay is the reactivation of one or more neural patterns, which are similar to the activation patterns experienced during past waking experiences. Replay was first observed in biological neural networks during sleep, and it is now thought to play a critical role in memory formation, retrieval, and consolidation. Replay-like mechanisms have been incorporated into deep artificial neural networks that learn over time to avoid catastrophic forgetting of previous knowledge. Replay algorithms have been successfully used in a wide range of deep learning methods within supervised, unsupervised, and reinforcement learning paradigms. In this paper, we provide the first comprehensive comparison between replay in the mammalian brain and replay in artificial neural networks. We identify multiple aspects of biological replay that are missing in deep learning systems and hypothesize how they could be utilized to improve artificial neural networks.
