Shen Cheng

Blind-Spot Guided Diffusion for Self-supervised Real-World Denoising

Sep 19, 2025

Abstract:In this work, we present Blind-Spot Guided Diffusion, a novel self-supervised framework for real-world image denoising. Our approach addresses two major challenges: the limitations of blind-spot networks (BSNs), which often sacrifice local detail and introduce pixel discontinuities due to spatial independence assumptions, and the difficulty of adapting diffusion models to self-supervised denoising. We propose a dual-branch diffusion framework that combines a BSN-based diffusion branch, generating semi-clean images, with a conventional diffusion branch that captures underlying noise distributions. To enable effective training without paired data, we use the BSN-based branch to guide the sampling process, capturing noise structure while preserving local details. Extensive experiments on the SIDD and DND datasets demonstrate state-of-the-art performance, establishing our method as a highly effective self-supervised solution for real-world denoising. Code and pre-trained models are released at: https://github.com/Sumching/BSGD.

Estimating 2D Camera Motion with Hybrid Motion Basis

Jul 30, 2025

Abstract:Estimating 2D camera motion is a fundamental computer vision task that models the projection of 3D camera movements onto the 2D image plane. Current methods rely on either homography-based approaches, limited to planar scenes, or meshflow techniques that use grid-based local homographies but struggle with complex non-linear transformations. A key insight of our work is that combining flow fields from different homographies creates motion patterns that cannot be represented by any single homography. We introduce CamFlow, a novel framework that represents camera motion using hybrid motion bases: physical bases derived from camera geometry and stochastic bases for complex scenarios. Our approach includes a hybrid probabilistic loss function based on the Laplace distribution that enhances training robustness. For evaluation, we create a new benchmark by masking dynamic objects in existing optical flow datasets to isolate pure camera motion. Experiments show CamFlow outperforms state-of-the-art methods across diverse scenarios, demonstrating superior robustness and generalization in zero-shot settings. Code and datasets are available at our project page: https://lhaippp.github.io/CamFlow/.
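To make the Laplace-based loss more concrete, here is a minimal sketch of a generic Laplace negative log-likelihood for a single residual. The abstract does not spell out the hybrid loss used by CamFlow, so the function name `laplace_nll` and its two parameters are assumptions chosen for illustration, not the authors' formulation.

```python
import math


def laplace_nll(residual: float, scale: float) -> float:
    """Negative log-likelihood of `residual` under a zero-mean Laplace distribution.

    Hypothetical helper for illustration only: `scale` is the Laplace
    diversity parameter b, and the density is exp(-|r| / b) / (2 b).
    """
    if scale <= 0:
        raise ValueError("scale must be positive")
    # -log p(r) = log(2 b) + |r| / b
    return math.log(2.0 * scale) + abs(residual) / scale


# The penalty grows linearly with the error, so a large outlier is punished
# less severely than it would be under a squared (Gaussian) loss.
small_error = laplace_nll(0.1, scale=1.0)
large_error = laplace_nll(10.0, scale=1.0)
```

The linear growth in `abs(residual)` is the usual reason a Laplace likelihood is considered more robust to outliers than a Gaussian one.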

You Only Look Around: Learning Illumination Invariant Feature for Low-light Object Detection

Oct 24, 2024

Abstract:In this paper, we introduce YOLA, a novel framework for object detection in low-light scenarios. Unlike previous works, we propose to tackle this challenging problem from the perspective of feature learning. Specifically, we propose to learn illumination-invariant features through the Lambertian image formation model. We observe that, under the Lambertian assumption, it is feasible to approximate illumination-invariant feature maps by exploiting the interrelationships between neighboring color channels and spatially adjacent pixels. By incorporating additional constraints, these relationships can be characterized in the form of convolutional kernels, which can be trained in a detection-driven manner within a network. Towards this end, we introduce a novel module dedicated to the extraction of illumination-invariant features from low-light images, which can be easily integrated into existing object detection frameworks. Our empirical findings reveal significant improvements in low-light object detection tasks, as well as promising results in both well-lit and over-lit scenarios. Code is available at https://github.com/MingboHong/YOLA.
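The idea that channel and neighbor relationships can cancel illumination can be illustrated with a classical cross-color ratio. The sketch below assumes a simplified Lambertian model in which each channel value is the product of a per-pixel shading term, a per-channel reflectance, and a per-channel light color that is constant across the two adjacent pixels. All names and numeric values are hypothetical, and the learned kernels in YOLA may differ from this hand-written feature.

```python
import math


def pixel_value(shading: float, reflectance: float, light: float) -> float:
    """Simplified Lambertian intensity for one channel at one pixel."""
    return shading * reflectance * light


def cross_color_ratio(c1_p1: float, c1_p2: float, c2_p1: float, c2_p2: float) -> float:
    """Log cross-color ratio of channels c1, c2 at adjacent pixels p1, p2."""
    return math.log(c1_p1) - math.log(c1_p2) + math.log(c2_p2) - math.log(c2_p1)


def feature(shading_p1: float, shading_p2: float, light_r: float, light_g: float) -> float:
    # Hypothetical reflectances of the red and green channels at p1 and p2.
    rho_r = (0.6, 0.3)
    rho_g = (0.2, 0.5)
    r_p1 = pixel_value(shading_p1, rho_r[0], light_r)
    r_p2 = pixel_value(shading_p2, rho_r[1], light_r)
    g_p1 = pixel_value(shading_p1, rho_g[0], light_g)
    g_p2 = pixel_value(shading_p2, rho_g[1], light_g)
    return cross_color_ratio(r_p1, r_p2, g_p1, g_p2)


# Shading and light color cancel, so both calls depend only on reflectance.
bright = feature(1.0, 1.0, 1.0, 1.0)
dim = feature(0.1, 0.05, 0.3, 0.7)
```

Because the feature is a signed sum of log-intensities over two channels and two positions, it has the same form as a small fixed convolution kernel applied in the log domain, which is one way to read the abstract's remark about convolutional kernels.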

Neural Spectral Decomposition for Dataset Distillation

Aug 29, 2024

Abstract:In this paper, we propose Neural Spectrum Decomposition, a generic decomposition framework for dataset distillation. Unlike previous methods, we consider the entire dataset as a high-dimensional observation that is low-rank across all dimensions. We aim to discover the low-rank representation of the entire dataset and perform distillation efficiently. Toward this end, we learn a set of spectrum tensors and transformation matrices, which, through simple matrix multiplication, reconstruct the data distribution. Specifically, a spectrum tensor can be mapped back to the image space by a transformation matrix, and efficient information sharing during the distillation learning process is achieved through pairwise combinations of different spectrum vectors and transformation matrices. Furthermore, we integrate a trajectory matching optimization method guided by a real distribution. Our experimental results demonstrate that our approach achieves state-of-the-art performance on benchmarks, including CIFAR10, CIFAR100, Tiny ImageNet, and ImageNet Subset. Our code is available at https://github.com/slyang2021/NSD.

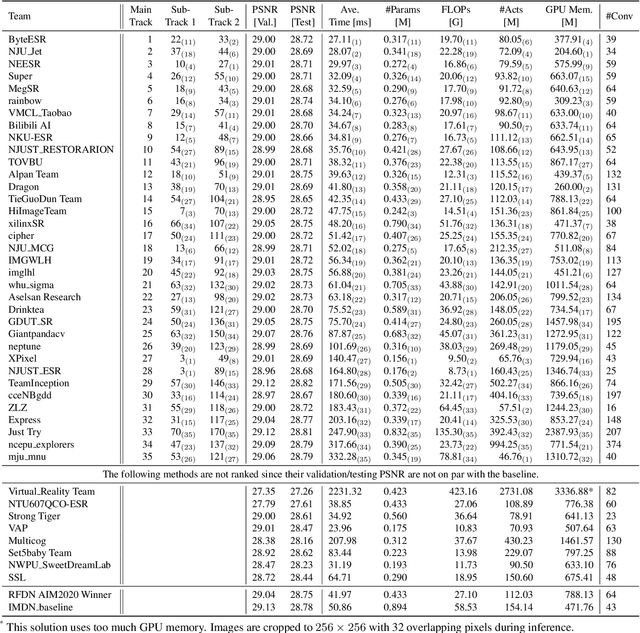

NTIRE 2022 Challenge on Efficient Super-Resolution: Methods and Results

May 11, 2022

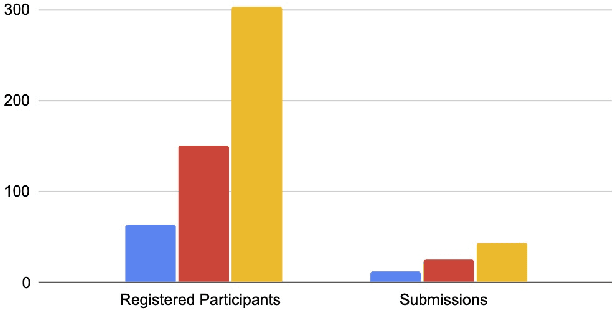

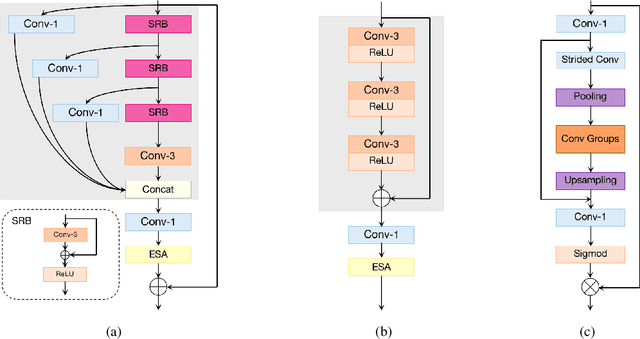

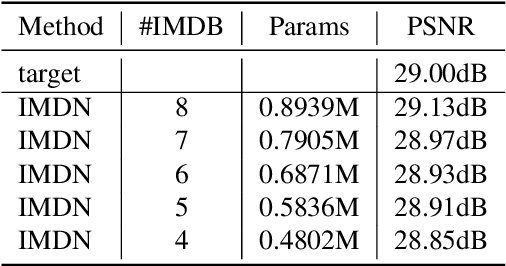

Abstract:This paper reviews the NTIRE 2022 challenge on efficient single image super-resolution with a focus on the proposed solutions and results. The task of the challenge was to super-resolve an input image with a magnification factor of $\times$4 based on pairs of low and corresponding high resolution images. The aim was to design a network for single image super-resolution that improved efficiency, measured according to several metrics including runtime, parameters, FLOPs, activations, and memory consumption, while at least maintaining a PSNR of 29.00dB on the DIV2K validation set. IMDN is set as the baseline for efficiency measurement. The challenge had 3 tracks including the main track (runtime), sub-track one (model complexity), and sub-track two (overall performance). In the main track, the practical runtime performance of the submissions was evaluated. The ranking of the teams was determined directly by the absolute value of the average runtime on the validation set and test set. In sub-track one, the number of parameters and FLOPs were considered, and the individual rankings of the two metrics were summed up to determine a final ranking in this track. In sub-track two, all five metrics mentioned in the description of the challenge, including runtime, parameter count, FLOPs, activations, and memory consumption, were considered. Similar to sub-track one, the rankings of the five metrics were summed up to determine a final ranking. The challenge had 303 registered participants, and 43 teams made valid submissions. The submissions gauge the state-of-the-art in efficient single image super-resolution.
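The rank-sum scheme described for the two sub-tracks can be sketched as follows. This is an illustrative reading of the abstract, not the organizers' scoring script: the function name `rank_sum`, the team names, and the metric values are all made up, lower values are assumed to be better for every metric, and ties are not handled specially.

```python
def rank_sum(scores: dict[str, dict[str, float]], metrics: list[str]) -> list[tuple[str, int]]:
    """Sum each team's per-metric rank (1 = best, lower value wins)."""
    totals = {team: 0 for team in scores}
    for metric in metrics:
        # Order teams by this metric alone, best (smallest) first.
        ordered = sorted(scores, key=lambda team: scores[team][metric])
        for position, team in enumerate(ordered, start=1):
            totals[team] += position
    # Final ranking: smallest summed rank first.
    return sorted(totals.items(), key=lambda item: item[1])


# Hypothetical sub-track-one style example with two metrics.
example_scores = {
    "team_a": {"params": 1.0, "flops": 3.0},
    "team_b": {"params": 2.0, "flops": 1.0},
    "team_c": {"params": 3.0, "flops": 2.0},
}
final_ranking = rank_sum(example_scores, ["params", "flops"])
```

In this made-up example `team_b` finishes first with a rank sum of 3, even though it is not the best on parameter count, which shows how the scheme rewards balanced results across metrics.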

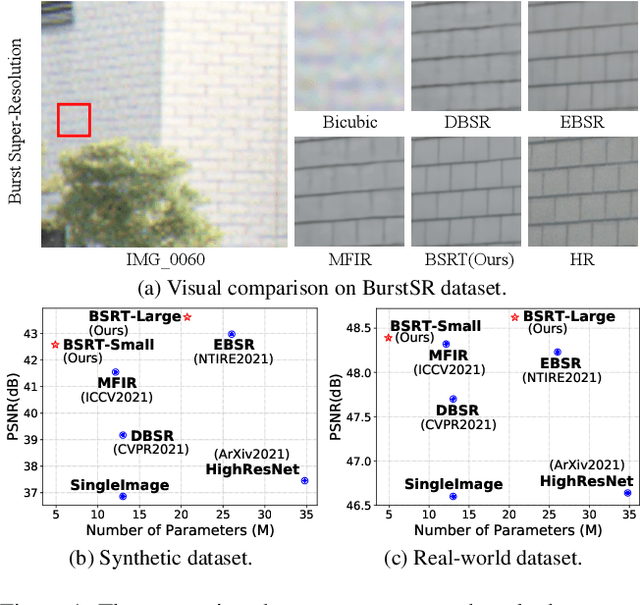

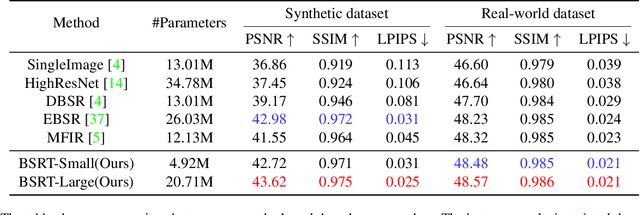

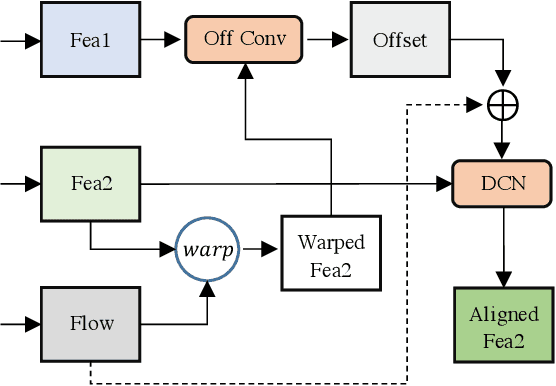

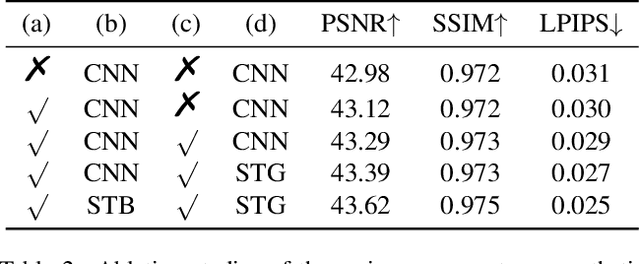

BSRT: Improving Burst Super-Resolution with Swin Transformer and Flow-Guided Deformable Alignment

Apr 22, 2022

Abstract:This work addresses the Burst Super-Resolution (BurstSR) task, which requires restoring a high-quality image from a sequence of noisy, misaligned, and low-resolution RAW bursts, using a new architecture. To overcome the challenges in BurstSR, we propose a Burst Super-Resolution Transformer (BSRT), which can significantly improve the capability of extracting inter-frame information and reconstruction. To achieve this goal, we propose a Pyramid Flow-Guided Deformable Convolution Network (Pyramid FG-DCN) and incorporate Swin Transformer Blocks and Groups as our main backbone. More specifically, we combine optical flows and deformable convolutions, so that our BSRT can handle misalignment and aggregate the potential texture information in multiple frames more efficiently. In addition, our Transformer-based structure can capture long-range dependency to further improve the performance. The evaluation on both synthetic and real-world tracks demonstrates that our approach achieves a new state-of-the-art on the BurstSR task. Further, our BSRT wins the championship in the NTIRE2022 Burst Super-Resolution Challenge.

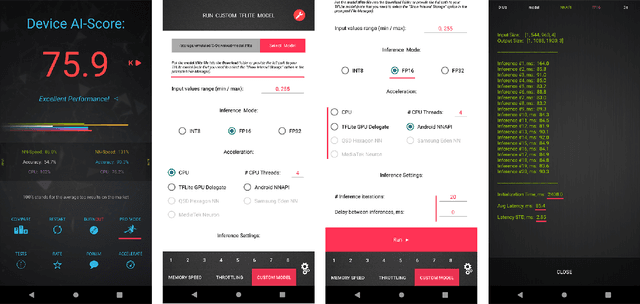

Fast Camera Image Denoising on Mobile GPUs with Deep Learning, Mobile AI 2021 Challenge: Report

May 17, 2021

Abstract:Image denoising is one of the most critical problems in mobile photo processing. While many solutions have been proposed for this task, they usually work with synthetic data and are too computationally expensive to run on mobile devices. To address this problem, we introduce the first Mobile AI challenge, where the target is to develop an end-to-end deep learning-based image denoising solution that can demonstrate high efficiency on smartphone GPUs. For this, the participants were provided with a novel large-scale dataset consisting of noisy-clean image pairs captured in the wild. The runtime of all models was evaluated on the Samsung Exynos 2100 chipset with a powerful Mali GPU capable of accelerating floating-point and quantized neural networks. The proposed solutions are fully compatible with any mobile GPU and are capable of processing 480p resolution images under 40-80 ms while achieving high fidelity results. A detailed description of all models developed in the challenge is provided in this paper.

NBNet: Noise Basis Learning for Image Denoising with Subspace Projection

Dec 30, 2020

Abstract:In this paper, we introduce NBNet, a novel framework for image denoising. Unlike previous works, we propose to tackle this challenging problem from a new perspective: noise reduction by image-adaptive projection. Specifically, we propose to train a network that can separate signal and noise by learning a set of reconstruction bases in the feature space. Subsequently, image denoising can be achieved by selecting the corresponding basis of the signal subspace and projecting the input into such a space. Our key insight is that projection can naturally maintain the local structure of the input signal, especially for areas with low light or weak textures. Towards this end, we propose SSA, a non-local subspace attention module designed explicitly to learn the basis generation as well as the subspace projection. We further incorporate SSA with NBNet, a UNet structured network designed for end-to-end image denoising. We conduct evaluations on benchmarks, including SIDD and DND, and NBNet achieves state-of-the-art performance on PSNR and SSIM with significantly less computational cost.
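For readers unfamiliar with subspace projection, the standard orthogonal projection onto the span of a set of basis vectors can be written as below. The symbols $V$, $P$, $X$ and $Y$ are notation assumed here for illustration; the abstract does not give the exact formulation used inside the SSA module.

```latex
% V \in \mathbb{R}^{N \times K}: K basis vectors (columns) spanning the signal subspace
% X \in \mathbb{R}^{N \times C}: input features, Y: features projected onto span(V)
\begin{aligned}
P &= V \left( V^{\top} V \right)^{-1} V^{\top}, \\
Y &= P X .
\end{aligned}
```

Since $P^2 = P$ and $P^{\top} = P$, components of $X$ already inside the subspace are left unchanged while components orthogonal to it are discarded, which matches the abstract's intuition that projection preserves the local structure of the signal.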

Latent Dirichlet Allocation in Generative Adversarial Networks

Dec 28, 2018

Abstract:Mode collapse is one of the key challenges in the training of Generative Adversarial Networks (GANs). Previous approaches have tried to address this challenge either by changing the loss of GANs, or by modifying optimization strategies. We argue that it is more desirable if we can find the underlying structure of real data and build a structured generative model to further get rid of mode collapse. To this end, we propose Latent Dirichlet Allocation based Generative Adversarial Networks (LDAGAN), which have a high capacity for modeling complex image data. Moreover, we optimize our model by combining the variational expectation-maximization (EM) algorithm with adversarial learning. A stochastic optimization strategy ensures the training process of LDAGAN is not time consuming. Experimental results demonstrate our method outperforms the existing standard CNN based GANs on the task of image generation.
