Shahaf Bassan

SHAP Meets Tensor Networks: Provably Tractable Explanations with Parallelism

Oct 24, 2025Abstract:Although Shapley additive explanations (SHAP) can be computed in polynomial time for simple models like decision trees, they unfortunately become NP-hard to compute for more expressive black-box models like neural networks - where generating explanations is often most critical. In this work, we analyze the problem of computing SHAP explanations for *Tensor Networks (TNs)*, a broader and more expressive class of models than those for which current exact SHAP algorithms are known to hold, and which is widely used for neural network abstraction and compression. First, we introduce a general framework for computing provably exact SHAP explanations for general TNs with arbitrary structures. Interestingly, we show that, when TNs are restricted to a *Tensor Train (TT)* structure, SHAP computation can be performed in *poly-logarithmic* time using *parallel* computation. Thanks to the expressiveness power of TTs, this complexity result can be generalized to many other popular ML models such as decision trees, tree ensembles, linear models, and linear RNNs, therefore tightening previously reported complexity results for these families of models. Finally, by leveraging reductions of binarized neural networks to Tensor Network representations, we demonstrate that SHAP computation can become *efficiently tractable* when the network's *width* is fixed, while it remains computationally hard even with constant *depth*. This highlights an important insight: for this class of models, width - rather than depth - emerges as the primary computational bottleneck in SHAP computation.

Explaining, Fast and Slow: Abstraction and Refinement of Provable Explanations

Jun 10, 2025Abstract:Despite significant advancements in post-hoc explainability techniques for neural networks, many current methods rely on heuristics and do not provide formally provable guarantees over the explanations provided. Recent work has shown that it is possible to obtain explanations with formal guarantees by identifying subsets of input features that are sufficient to determine that predictions remain unchanged using neural network verification techniques. Despite the appeal of these explanations, their computation faces significant scalability challenges. In this work, we address this gap by proposing a novel abstraction-refinement technique for efficiently computing provably sufficient explanations of neural network predictions. Our method abstracts the original large neural network by constructing a substantially reduced network, where a sufficient explanation of the reduced network is also provably sufficient for the original network, hence significantly speeding up the verification process. If the explanation is in sufficient on the reduced network, we iteratively refine the network size by gradually increasing it until convergence. Our experiments demonstrate that our approach enhances the efficiency of obtaining provably sufficient explanations for neural network predictions while additionally providing a fine-grained interpretation of the network's predictions across different abstraction levels.

What makes an Ensemble (Un) Interpretable?

Jun 09, 2025Abstract:Ensemble models are widely recognized in the ML community for their limited interpretability. For instance, while a single decision tree is considered interpretable, ensembles of trees (e.g., boosted trees) are often treated as black-boxes. Despite this folklore recognition, there remains a lack of rigorous mathematical understanding of what particularly makes an ensemble (un)-interpretable, including how fundamental factors like the (1) *number*, (2) *size*, and (3) *type* of base models influence its interpretability. In this work, we seek to bridge this gap by applying concepts from computational complexity theory to study the challenges of generating explanations for various ensemble configurations. Our analysis uncovers nuanced complexity patterns influenced by various factors. For example, we demonstrate that under standard complexity assumptions like P$\neq$NP, interpreting ensembles remains intractable even when base models are of constant size. Surprisingly, the complexity changes drastically with the number of base models: small ensembles of decision trees are efficiently interpretable, whereas interpreting ensembles with even a constant number of linear models remains intractable. We believe that our findings provide a more robust foundation for understanding the interpretability of ensembles, emphasizing the benefits of examining it through a computational complexity lens.

CLATTER: Comprehensive Entailment Reasoning for Hallucination Detection

Jun 05, 2025Abstract:A common approach to hallucination detection casts it as a natural language inference (NLI) task, often using LLMs to classify whether the generated text is entailed by corresponding reference texts. Since entailment classification is a complex reasoning task, one would expect that LLMs could benefit from generating an explicit reasoning process, as in CoT reasoning or the explicit ``thinking'' of recent reasoning models. In this work, we propose that guiding such models to perform a systematic and comprehensive reasoning process -- one that both decomposes the text into smaller facts and also finds evidence in the source for each fact -- allows models to execute much finer-grained and accurate entailment decisions, leading to increased performance. To that end, we define a 3-step reasoning process, consisting of (i) claim decomposition, (ii) sub-claim attribution and entailment classification, and (iii) aggregated classification, showing that such guided reasoning indeed yields improved hallucination detection. Following this reasoning framework, we introduce an analysis scheme, consisting of several metrics that measure the quality of the intermediate reasoning steps, which provided additional empirical evidence for the improved quality of our guided reasoning scheme.

On the Computational Tractability of the (Many) Shapley Values

Feb 17, 2025Abstract:Recent studies have examined the computational complexity of computing Shapley additive explanations (also known as SHAP) across various models and distributions, revealing their tractability or intractability in different settings. However, these studies primarily focused on a specific variant called Conditional SHAP, though many other variants exist and address different limitations. In this work, we analyze the complexity of computing a much broader range of such variants, including Conditional, Interventional, and Baseline SHAP, while exploring both local and global computations. We show that both local and global Interventional and Baseline SHAP can be computed in polynomial time for various ML models under Hidden Markov Model distributions, extending popular algorithms such as TreeSHAP beyond empirical distributions. On the downside, we prove intractability results for these variants over a wide range of neural networks and tree ensembles. We believe that our results emphasize the intricate diversity of computing Shapley values, demonstrating how their complexity is substantially shaped by both the specific SHAP variant, the model type, and the distribution.

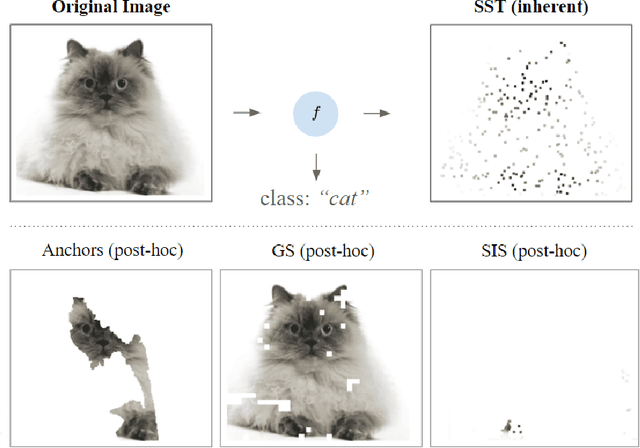

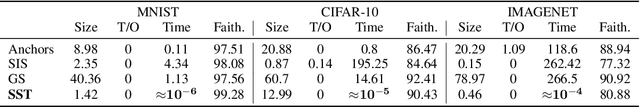

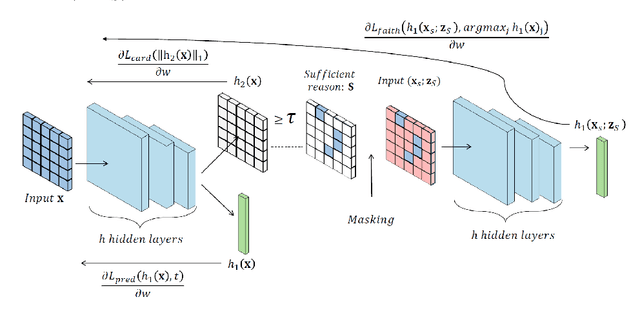

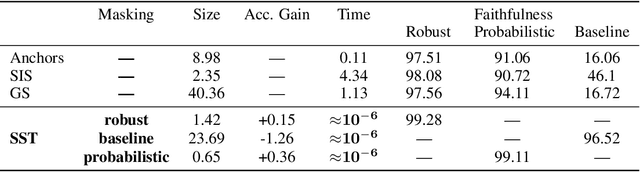

Explain Yourself, Briefly! Self-Explaining Neural Networks with Concise Sufficient Reasons

Feb 05, 2025

Abstract:Minimal sufficient reasons represent a prevalent form of explanation - the smallest subset of input features which, when held constant at their corresponding values, ensure that the prediction remains unchanged. Previous post-hoc methods attempt to obtain such explanations but face two main limitations: (1) Obtaining these subsets poses a computational challenge, leading most scalable methods to converge towards suboptimal, less meaningful subsets; (2) These methods heavily rely on sampling out-of-distribution input assignments, potentially resulting in counterintuitive behaviors. To tackle these limitations, we propose in this work a self-supervised training approach, which we term *sufficient subset training* (SST). Using SST, we train models to generate concise sufficient reasons for their predictions as an integral part of their output. Our results indicate that our framework produces succinct and faithful subsets substantially more efficiently than competing post-hoc methods, while maintaining comparable predictive performance.

Hard to Explain: On the Computational Hardness of In-Distribution Model Interpretation

Aug 07, 2024

Abstract:The ability to interpret Machine Learning (ML) models is becoming increasingly essential. However, despite significant progress in the field, there remains a lack of rigorous characterization regarding the innate interpretability of different models. In an attempt to bridge this gap, recent work has demonstrated that it is possible to formally assess interpretability by studying the computational complexity of explaining the decisions of various models. In this setting, if explanations for a particular model can be obtained efficiently, the model is considered interpretable (since it can be explained ``easily''). However, if generating explanations over an ML model is computationally intractable, it is considered uninterpretable. Prior research identified two key factors that influence the complexity of interpreting an ML model: (i) the type of the model (e.g., neural networks, decision trees, etc.); and (ii) the form of explanation (e.g., contrastive explanations, Shapley values, etc.). In this work, we claim that a third, important factor must also be considered for this analysis -- the underlying distribution over which the explanation is obtained. Considering the underlying distribution is key in avoiding explanations that are socially misaligned, i.e., convey information that is biased and unhelpful to users. We demonstrate the significant influence of the underlying distribution on the resulting overall interpretation complexity, in two settings: (i) prediction models paired with an external out-of-distribution (OOD) detector; and (ii) prediction models designed to inherently generate socially aligned explanations. Our findings prove that the expressiveness of the distribution can significantly influence the overall complexity of interpretation, and identify essential prerequisites that a model must possess to generate socially aligned explanations.

Local vs. Global Interpretability: A Computational Complexity Perspective

Jun 07, 2024

Abstract:The local and global interpretability of various ML models has been studied extensively in recent years. However, despite significant progress in the field, many known results remain informal or lack sufficient mathematical rigor. We propose a framework for bridging this gap, by using computational complexity theory to assess local and global perspectives of interpreting ML models. We begin by proposing proofs for two novel insights that are essential for our analysis: (1) a duality between local and global forms of explanations; and (2) the inherent uniqueness of certain global explanation forms. We then use these insights to evaluate the complexity of computing explanations, across three model types representing the extremes of the interpretability spectrum: (1) linear models; (2) decision trees; and (3) neural networks. Our findings offer insights into both the local and global interpretability of these models. For instance, under standard complexity assumptions such as P != NP, we prove that selecting global sufficient subsets in linear models is computationally harder than selecting local subsets. Interestingly, with neural networks and decision trees, the opposite is true: it is harder to carry out this task locally than globally. We believe that our findings demonstrate how examining explainability through a computational complexity lens can help us develop a more rigorous grasp of the inherent interpretability of ML models.

Marabou 2.0: A Versatile Formal Analyzer of Neural Networks

Jan 25, 2024

Abstract:This paper serves as a comprehensive system description of version 2.0 of the Marabou framework for formal analysis of neural networks. We discuss the tool's architectural design and highlight the major features and components introduced since its initial release.

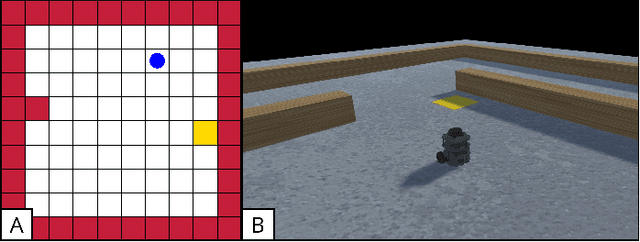

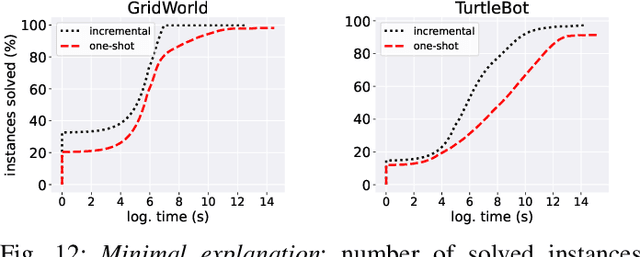

Formally Explaining Neural Networks within Reactive Systems

Aug 06, 2023

Abstract:Deep neural networks (DNNs) are increasingly being used as controllers in reactive systems. However, DNNs are highly opaque, which renders it difficult to explain and justify their actions. To mitigate this issue, there has been a surge of interest in explainable AI (XAI) techniques, capable of pinpointing the input features that caused the DNN to act as it did. Existing XAI techniques typically face two limitations: (i) they are heuristic, and do not provide formal guarantees that the explanations are correct; and (ii) they often apply to ``one-shot'' systems, where the DNN is invoked independently of past invocations, as opposed to reactive systems. Here, we begin bridging this gap, and propose a formal DNN-verification-based XAI technique for reasoning about multi-step, reactive systems. We suggest methods for efficiently calculating succinct explanations, by exploiting the system's transition constraints in order to curtail the search space explored by the underlying verifier. We evaluate our approach on two popular benchmarks from the domain of automated navigation; and observe that our methods allow the efficient computation of minimal and minimum explanations, significantly outperforming the state of the art. We also demonstrate that our methods produce formal explanations that are more reliable than competing, non-verification-based XAI techniques.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge