Matthew Daggitt

Marabou 2.0: A Versatile Formal Analyzer of Neural Networks

Jan 25, 2024

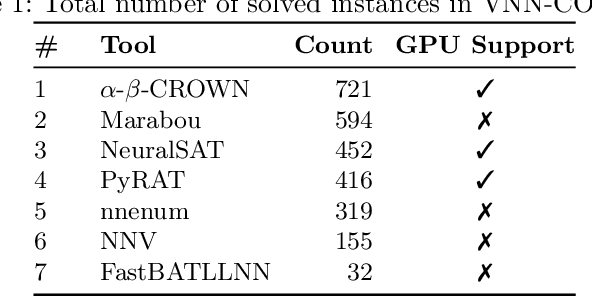

Abstract:This paper serves as a comprehensive system description of version 2.0 of the Marabou framework for formal analysis of neural networks. We discuss the tool's architectural design and highlight the major features and components introduced since its initial release.

CheckINN: Wide Range Neural Network Verification in Imandra (Extended)

Jul 28, 2022

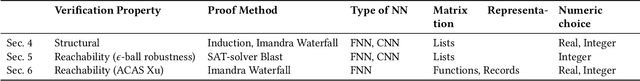

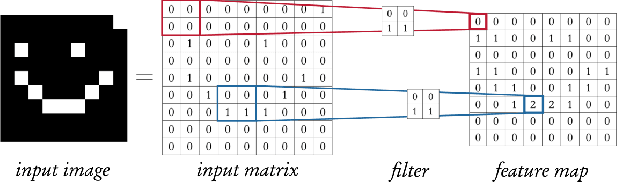

Abstract:Neural networks are increasingly relied upon as components of complex safety-critical systems such as autonomous vehicles. There is high demand for tools and methods that embed neural network verification in a larger verification cycle. However, neural network verification is difficult due to a wide range of verification properties of interest, each typically only amenable to verification in specialised solvers. In this paper, we show how Imandra, a functional programming language and a theorem prover originally designed for verification, validation and simulation of financial infrastructure can offer a holistic infrastructure for neural network verification. We develop a novel library CheckINN that formalises neural networks in Imandra, and covers different important facets of neural network verification.

Property-driven Training: All You Ever Wanted to Know About

Apr 03, 2021

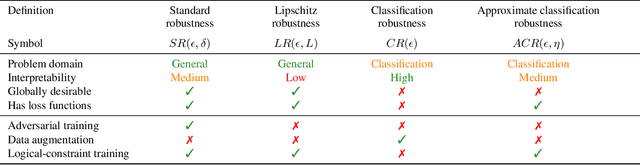

Abstract:Neural networks are known for their ability to detect general patterns in noisy data. This makes them a popular tool for perception components in complex AI systems. Paradoxically, they are also known for being vulnerable to adversarial attacks. In response, various methods such as adversarial training, data-augmentation and Lipschitz robustness training have been proposed as means of improving their robustness. However, as this paper explores, these training methods each optimise for a different definition of robustness. We perform an in-depth comparison of these different definitions, including their relationship, assumptions, interpretability and verifiability after training. We also look at constraint-driven training, a general approach designed to encode arbitrary constraints, and show that not all of these definitions are directly encodable. Finally we perform experiments to compare the applicability and efficacy of the training methods at ensuring the network obeys these different definitions. These results highlight that even the encoding of such a simple piece of knowledge such as robustness in neural network training is fraught with difficult choices and pitfalls.

Add to Chrome

Add to Chrome Add to Firefox

Add to Firefox Add to Edge

Add to Edge